Chubby Lock Service: A Literature Review Abstract

advertisement

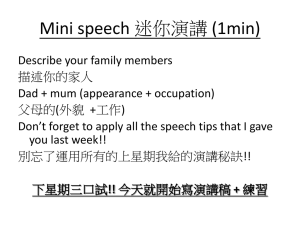

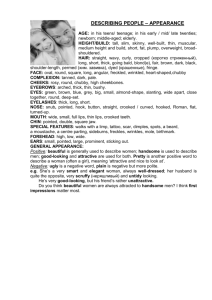

Anesu Chaora B534 Literature Review 03/28/2011

Chubby Lock Service: A Literature Review

Abstract
The following is a literature review of Google's Chubby lock service. The lock service provides coarse-grained locking and reliable storage for loosely-coupled distributed systems. Its emphasis is on availability and reliability rather than performance. The rest of this review covers the following aspects of the Chubby lock service: an overview of the service; the motivations for using a lock service; the structure and design of the service; and comparisons of the service with other, similar services.

Overview of Chubby
The Chubby lock service helps clients synchronize their activities and reach agreement on basic information about their environment. Its main goals are reliability and availability rather than performance. A typical Chubby instance, or cell, may serve ten thousand 4-processor machines connected through 1 Gbit/s Ethernet and located in a single data center or machine room. The client API provides whole-file reads and writes, advisory locks, and notification of various events. Services that use Chubby include the Google File System (GFS) and Bigtable. GFS relies on Chubby to elect its master server (a distributed consensus problem) and to store small amounts of metadata, while Bigtable uses Chubby to elect a master, to let the master discover the servers it controls, and to let clients find the master.

Motivations for Using Chubby
The main motivation for implementing locking as a fully-fledged service like Chubby, rather than as a client library, is that a lock service can provide high availability through replication and distributed consensus. This becomes increasingly important as the number of clients of a service grows. A lock service also requires minimal modification to existing programs and their communication structures, which made it the preferred choice.
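The master-election pattern that GFS and Bigtable rely on can be illustrated with a small sketch. It is a single-process toy, not Chubby's API: the class ToyLockService and its methods try_acquire, set_contents and get_contents are hypothetical stand-ins loosely modeled on the whole-file and lock calls described in [1], and replication, sessions, leases and failures are all omitted. The path /ls/cell/service/primary and the server addresses are made-up examples. Candidates race for an exclusive advisory lock on a well-known file, the winner writes its address into that file, and every other party learns the primary with a whole-file read.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Node:
    """Hypothetical Chubby-like node: small whole-file contents plus a lock holder."""
    contents: bytes = b""
    holder: Optional[str] = None  # client holding the exclusive lock, if any


class ToyLockService:
    """Single-process stand-in for a lock service (no replication, sessions or leases)."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}

    def _node(self, path: str) -> Node:
        return self.nodes.setdefault(path, Node())

    def try_acquire(self, path: str, client: str) -> bool:
        """Non-blocking attempt to take the exclusive lock on `path`."""
        node = self._node(path)
        if node.holder is None:
            node.holder = client
            return True
        return False

    def set_contents(self, path: str, data: bytes) -> None:
        """Whole-file write. Locks are advisory, so the holder is not checked here."""
        self._node(path).contents = data

    def get_contents(self, path: str) -> bytes:
        """Whole-file read."""
        return self._node(path).contents


def elect_primary(service: ToyLockService, path: str, candidates: List[str]) -> Optional[str]:
    """Candidates race for the lock; only the winner advertises itself in the file."""
    for candidate in candidates:
        if service.try_acquire(path, candidate):
            # By convention, only the lock holder writes the advertisement.
            service.set_contents(path, candidate.encode())
    advertised = service.get_contents(path)
    # Any client can now discover the primary by reading the small file.
    return advertised.decode() if advertised else None


if __name__ == "__main__":
    svc = ToyLockService()
    print(elect_primary(svc, "/ls/cell/service/primary", ["server-a:7000", "server-b:7000"]))
    # prints server-a:7000
```

Because the locks are advisory, set_contents does not enforce anything; correctness rests on every candidate following the convention of writing the advertisement only after winning the lock. A real deployment would also need the lease and failover handling described later in this review.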
Chubby is well suited to advertising results in a distributed system because it allows clients to read and write small quantities of data reliably. This makes it useful for implementing the functions of a name service, and it reduces the number of servers in a system by eliminating the need for a separate name server. Chubby's consistent client caching is used in place of time-based caching, which it outperforms because a cached entry remains valid until the server explicitly invalidates it, rather than expiring after a fixed timeout. Programmers' familiarity with locks also makes Chubby a good choice for distributed decision making. Chubby is well suited to resolving the distributed consensus problem because fewer servers are required for a client system to make progress: the lock service acts as a generic electorate, allowing a client system to make decisions correctly even when fewer than a majority of its own members are up. This eliminates the need for a separate set of servers to implement the consensus service.

Structure and Design of the Service
The above motivations influenced the design of Chubby in the following ways:
- A lock service was chosen over a client library for reaching distributed consensus.
- Elected primary servers were to advertise their details through small files served by Chubby.
- Thousands of clients had to be able to observe a file advertising a primary server.
- Clients and replicas had to learn of changes of primary server through the service, which required an event notification mechanism.
- Caching of files was desirable because clients poll periodically.
- Consistent caching was adopted to spare developers the confusion caused by non-intuitive caching semantics.
- Access control was required for security.

Coarse-grained Locking
A design using coarse-grained locks was chosen over a fine-grained one because coarse-grained locks impose far less load on the lock server. Because such locks are acquired infrequently, temporary unavailability of the lock server also delays clients less. An application that requires fine-grained locking can implement its own mechanism and partition its locks into groups.
Chubby's coarse-grained locks can then be used to allocate these groups to application-specific lock servers.

Chubby Structure
The structure of a Chubby cell is shown in Fig. 1.

[Fig. 1: Structure of a Chubby cell]

Chubby has two main components: a server and a library that client applications link against. They communicate via RPC. A Chubby cell normally consists of five servers, known as replicas, which use a distributed consensus protocol to elect a primary server, or master. To become master, a replica must obtain votes from a majority of the replicas, together with promises that those replicas will not elect a different master for an interval known as the master lease. The lease is renewed periodically for as long as the master continues to win a majority of the vote. The replicas maintain copies of a simple database, but only the master initiates reads and writes of it; the other replicas copy its updates.

Clients find the master by sending master-location requests to the replicas listed in DNS. Non-master replicas respond with the master's identity, and the client then directs all of its requests to the master until it stops responding or indicates that it is no longer the master. Read requests are served by the master alone, whereas write requests are propagated to all replicas and acknowledged once a majority of the replicas in the cell have processed them. If the master fails, the other replicas run the election protocol when their master leases expire.

Files and Directories
Chubby's naming structure is similar to that of a UNIX file system. This makes Chubby easier to use from applications, simplifies writing browsing and name-space manipulation tools for it, and reduces the training needed by users, who can rely on structures they already know. Unlike a UNIX file system, Chubby does not maintain modification times for directories, nor does it allow files to be moved from one directory to another. These restrictions ease distribution: they allow files in different directories to be served by different Chubby masters.
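To make the UNIX-like naming concrete, the sketch below splits a name of the form /ls/<cell>/<path>, the format described in [1]: the first component, ls, is common to all Chubby names, the second names the cell (which [1] resolves to Chubby servers through DNS), and the remainder is interpreted within that cell. The ChubbyName type, parse_name and the helper crosses_directories are hypothetical; the helper only illustrates the kind of move Chubby's design rules out, not an actual Chubby call.

```python
from typing import NamedTuple


class ChubbyName(NamedTuple):
    """Hypothetical parsed form of a Chubby-style name."""
    cell: str    # second component: the Chubby cell to contact
    path: str    # remainder, interpreted within that cell's name space
    parent: str  # directory that contains the node


def parse_name(name: str) -> ChubbyName:
    """Split a name of the form /ls/<cell>/<component>/... into its parts."""
    parts = name.split("/")
    # A valid name starts with "/", so parts[0] is the empty string.
    if len(parts) < 4 or parts[0] != "" or parts[1] != "ls" or not parts[2]:
        raise ValueError(f"not a Chubby-style name: {name!r}")
    rest = parts[3:]
    if any(component == "" for component in rest):
        raise ValueError(f"empty path component in {name!r}")
    return ChubbyName(
        cell=parts[2],
        path="/" + "/".join(rest),
        parent="/" + "/".join(rest[:-1]),
    )


def crosses_directories(src: str, dst: str) -> bool:
    """True if moving src to dst would change its cell or directory,
    the kind of move Chubby's name space does not support."""
    a, b = parse_name(src), parse_name(dst)
    return (a.cell, a.parent) != (b.cell, b.parent)


if __name__ == "__main__":
    name = parse_name("/ls/foo/wombat/pouch")
    print(name.cell, name.path, name.parent)  # foo /wombat/pouch /wombat
```

Keeping each node's directory fixed is what lets different directories be handed to different masters without any operation that must coordinate two of them.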
Files and directories in the name space are collectively called nodes. Each node's metadata includes the names of three access control lists (ACLs), which control reading, writing, and changing the ACL names of the node. The metadata also includes four monotonically increasing 64-bit numbers (an instance number, a content generation number, a lock generation number and an ACL generation number) that let clients detect changes to the node easily.

Locking Mechanism
Each Chubby file and directory can act as a reader-writer lock: any number of client handles may hold the lock in shared (read) mode, or a single client handle may hold it in exclusive (write) mode. Locks are advisory rather than mandatory: they conflict only with other attempts to acquire the same lock, and holding a lock is not required in order to access the file.

A handle on a file is created with the Open() call and destroyed with the Close() call. When opening a node, the client specifies how the handle will be used (e.g. read, write, lock, change ACL); the events that should be delivered (e.g. file contents modified; child node added, removed or modified; Chubby master failed over; handle has become invalid); the lock-delay (the period for which the server prevents other clients from acquiring a lock after its holder fails or becomes inaccessible); and whether a new file or directory should be created. Other calls include Poison(), GetContentsAndStat(), GetStat(), SetContents(), GetSequencer() and CheckSequencer().

Chubby clients cache file data and metadata to reduce read traffic. When data or metadata is to be changed, the modification is blocked while the master sends invalidations to every client that may have cached it. The write proceeds only after the master knows that each client has flushed the invalidated data, either because the client acknowledged the invalidation or because its cache lease expired. This exchange is carried on KeepAlive RPCs, the periodic handshakes that maintain the session between a client and its Chubby cell.

Master Failover
Fig. 2 shows a lengthy master failover. Leases C1 to C3 are the client's view of its session leases, while leases M1 to M3 are the corresponding leases as seen by the master.

[Fig. 2: A lengthy master failover]

If the old master fails and a new master is elected quickly, the client can contact the new master before its current lease expires.
If not, the client's lease expires: it empties and disables its cache (its session is then said to be in jeopardy) and continues to seek a master for a further interval called the grace period, 45 s by default. If a new master is elected within that period, the client obtains a new lease from it, as shown in Fig. 2.

Backup
Every few hours, the master writes a snapshot of its database to a GFS file server located in a different building, so that the backup survives disasters affecting the cell. Chubby also allows a collection of files to be mirrored from one Chubby cell to another.

Scaling
The most effective scaling methods reduce communication with the master, so that clients cannot overwhelm it. They include:
- creating an arbitrary number of Chubby cells, so that clients use whichever cell is nearest to them;
- having the master increase session lease times when it is heavily loaded, so that clients send fewer KeepAlive RPCs;
- caching data and metadata at Chubby clients, which reduces the number of calls they make to the master; and
- using protocol conversion to translate the Chubby protocol into less complex protocols such as DNS.

Comparisons
Chubby's session and cache handling is similar to that of Echo [3]. Chubby also presents its API through a file-system model. Other systems with comparable features include AFS and the Boxwood lock server [4].

Summary
Chubby has found wide use in Google products such as MapReduce, GFS and Bigtable. Its main uses have been as a name service, for distributed consensus, and as a repository for small files where high availability and reliability are needed. The Chubby lock service is very versatile and can be considered a resounding success.

References
[1] BURROWS, M. The Chubby lock service for loosely coupled distributed systems. Google Inc.
[2] Wikipedia.org.
[3] MANN, T., BIRRELL, A., HISGEN, A., JERIAN, C., AND SWART, G. A coherent distributed file cache with directory write-behind. TOCS 12, 2 (1994), 123–164.
[4] MACCORMICK, J., MURPHY, N., NAJORK, M., THEKKATH, C. A., AND ZHOU, L. Boxwood: Abstractions as the foundation for storage infrastructure. In 6th OSDI (2004), pp. 105–120.