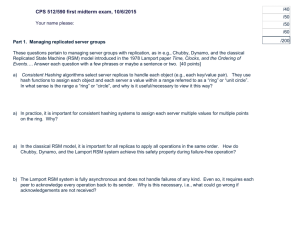

Distributed Systems
CS 15-440

Case Study: Replication in Google Chubby
Recitation 5, Oct 06, 2011
Majd F. Sakr, Vinay Kolar, Mohammad Hammoud

Today…
- Last recitation session: Google Chubby architecture
- Today’s session: consensus and replication in Google Chubby
- Announcement: the Project 2 Interim Design Report is due soon

Overview
- Recap: Google Chubby
- Consensus in Chubby
- Paxos algorithm

Recap: Google Data Center Architecture
[Figure: Google data center architecture. To avoid clutter, the Ethernet connections are shown from only one of the clusters to the external links.]

Chubby Overview
- A Chubby cell is the first level of the naming hierarchy inside Chubby (below the /ls prefix):
  /ls/chubby_cell/directory_name/…/file_name
- A Chubby instance is implemented as a small number of replicated servers (typically 5), one of which is designated as the master.
- Replicas are placed at failure-independent sites: typically within the same cluster, but not within the same rack.
- The consistency of the replicated database is ensured through a consensus protocol that uses operation logs.

Chubby Architecture
[Figure: Chubby architecture diagram]

Consistency and Replication in Chubby
- Challenges and assumptions for replicating data in Google’s infrastructure:
  1. Replica servers may run at arbitrary speeds and may fail.
  2. Replica servers have access to stable, persistent storage that survives crashes.
  3. Messages may be lost, reordered, duplicated, or delayed.
- Google has implemented a consensus protocol based on the Paxos algorithm to ensure consistency.
- The protocol operates over a set of replicas, with the goal of reaching agreement on an update to a common value.

Paxos Algorithm
- Another algorithm proposed by Lamport.
- Paxos ensures correctness (safety), but not liveness: replicas never decide on conflicting values, but the protocol is not guaranteed to terminate.
- Algorithm initiation and termination:
  - Any replica can submit a value, with the goal of achieving consensus on a final value.
  - In Chubby, consensus is achieved once all replicas have this value as the next entry in their update logs.
- Paxos is guaranteed to achieve consensus if a majority of the replicas run for long enough with sufficient network stability.

Paxos Approach
Steps:
1. Election: the group of replica servers elects a coordinator.
2. Selection of candidate value: the coordinator selects the final value and disseminates it to the group.
3. Acceptance of final value: the group accepts or rejects the value; an accepted value is finally stored in all replicas.

1. Election
Approach:
- Each replica maintains the highest sequence number it has seen so far.
- If a replica wants to bid to become coordinator:
  - It picks a unique number that is higher than all sequence numbers it has seen so far.
  - It broadcasts a “propose” message with this unique sequence number.
- If the other replicas have not seen a higher sequence number, they reply with a “promise” message.
  - A promise signifies that the replica will not make a promise to any other candidate with a lower sequence number.
  - The promise message may include a value that the replica wants to commit (in standard Paxos, the value the replica has most recently accepted).
- The candidate replica that receives “promise” messages from a majority wins.
Challenges:
- Multiple coordinators may coexist.
- Solution: replicas reject messages from old coordinators, i.e., messages carrying a lower sequence number than one they have already promised.
[Figure: Message exchanges in election]

2. Selection of Candidate Value
Approach:
- The elected coordinator selects a value from all the promise messages (in standard Paxos, the value associated with the highest sequence number).
- If no promise message contained a value, the coordinator is free to choose any value.
- The coordinator sends an “accept” message (with the value) to the group of replicas.
- Replicas should acknowledge the accept message.
- The coordinator waits until a majority of the replicas answer.
  - This wait may be indefinite (for example, if a majority of the replicas has failed).
[Figure: Message exchanges in consensus]

3. Commit the Value
Approach:
- If a majority of the replicas acknowledge, the coordinator sends a “commit” message to all replicas.
- Otherwise, the coordinator restarts the election process.
[Figure: Message exchanges in commit]

References
- http://cdk5.net
- Tushar Chandra, Robert Griesemer, and Joshua Redstone, “Paxos Made Live – An Engineering Perspective”, 26th ACM Symposium on Principles of Distributed Computing (PODC), 2007.
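Why does a Chubby cell typically have five replicas? Every phase of the protocol relies on a majority, and the arithmetic behind that is easy to sketch. The snippet below is a minimal illustration; the function names `majority`, `tolerated_failures`, and `majorities_overlap` are hypothetical and not part of Chubby. For n replicas a majority is n // 2 + 1, and the cell can keep making progress as long as no more than the remaining replicas fail.

```python
def majority(n: int) -> int:
    """Smallest number of replicas that constitutes a majority of n."""
    return n // 2 + 1


def tolerated_failures(n: int) -> int:
    """How many replicas may fail while a majority can still be reached."""
    return n - majority(n)


def majorities_overlap(n: int) -> bool:
    """Any two majorities share at least one replica, since 2 * majority(n) > n."""
    return 2 * majority(n) > n


if __name__ == "__main__":
    for n in (3, 4, 5, 7):
        print(f"{n} replicas: majority = {majority(n)}, "
              f"tolerated failures = {tolerated_failures(n)}")
```

With the typical 5 replicas, a majority is 3, so the cell survives 2 failures. A 4-replica cell also needs 3 for a majority but survives only 1 failure, which is why odd cell sizes are the usual choice. The overlap property is what lets a newly elected coordinator learn about any value that an earlier majority already accepted.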
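The election step (step 1 of the Paxos approach) can be sketched as a single-process simulation. This is a simplified illustration under stated assumptions: the names `Replica`, `Promise`, `Reject`, and `run_election` are hypothetical, messages are plain method calls rather than network traffic, and the `round * n + id` numbering scheme is one common way (assumed here, not necessarily what Chubby uses) to give each replica unique, increasing sequence numbers.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional, Union


@dataclass
class Promise:
    seq: int                       # sequence number the replica promised
    accepted_seq: int              # seq of a value the replica already holds (-1 if none)
    accepted_value: Optional[str]  # value the replica wants to commit, if any


@dataclass
class Reject:
    highest_seen: int              # lets the candidate pick a higher number next time


class Replica:
    def __init__(self, replica_id: int, num_replicas: int) -> None:
        self.id = replica_id
        self.n = num_replicas
        self.highest_seen = -1     # highest sequence number seen so far
        self.accepted_seq = -1
        self.accepted_value: Optional[str] = None

    def next_sequence(self) -> int:
        # Hypothetical numbering scheme: round * n + id. Different replicas
        # never collide (their ids differ mod n), and the result always
        # exceeds highest_seen because round * n > highest_seen.
        rnd = self.highest_seen // self.n + 1
        return rnd * self.n + self.id

    def on_propose(self, seq: int) -> Union[Promise, Reject]:
        if seq > self.highest_seen:
            self.highest_seen = seq  # never promise a lower number again
            return Promise(seq, self.accepted_seq, self.accepted_value)
        return Reject(self.highest_seen)


def run_election(candidate: Replica, reachable: list[Replica]) -> tuple[bool, int]:
    """Broadcast "propose" to the reachable replicas; win on a majority of promises."""
    seq = candidate.next_sequence()
    replies = [r.on_propose(seq) for r in reachable]
    promises = [r for r in replies if isinstance(r, Promise)]
    for r in replies:
        if isinstance(r, Reject):
            candidate.highest_seen = max(candidate.highest_seen, r.highest_seen)
    won = len(promises) >= candidate.n // 2 + 1  # majority of ALL replicas
    return won, seq


if __name__ == "__main__":
    group = [Replica(i, 5) for i in range(5)]
    print(run_election(group[1], group))      # replica 1 bids first
    print(run_election(group[3], group))      # replica 3 bids with a higher number
    print(group[0].on_propose(1))             # old coordinator's number is refused
    print(run_election(group[0], group[:2]))  # only 2 of 5 reachable: no majority
```

In this sketch, replica 1 wins with sequence number 1, then replica 3 wins with sequence number 8. After that, any message carrying the old number 1 is rejected, which is how replicas cope with multiple coexisting coordinators. A candidate that can reach only two of the five replicas cannot collect a majority of promises and loses.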
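Steps 2 and 3 (selecting a value, collecting acknowledgements for the “accept” message, and committing) can be sketched in the same simulated style, together with a minimal election so the example runs on its own. The names `Acceptor`, `choose_value`, and `run_round`, and the `up` flag used to simulate failed replicas, are hypothetical. When promises carry values, the sketch applies the standard Paxos rule of adopting the value with the highest accepted sequence number; the slides only say that the coordinator selects a value from the promise messages.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Acceptor:
    promised: int = -1                 # highest sequence number promised so far
    accepted_seq: int = -1             # sequence number of the accepted value (-1: none)
    accepted_value: Optional[str] = None
    committed: Optional[str] = None    # value recorded when a "commit" arrives
    up: bool = True                    # False simulates a crashed/unreachable replica

    def on_propose(self, seq: int) -> Optional[tuple[int, Optional[str]]]:
        """Return a promise (accepted_seq, accepted_value), or None for reject/no reply."""
        if not self.up or seq <= self.promised:
            return None
        self.promised = seq
        return (self.accepted_seq, self.accepted_value)

    def on_accept(self, seq: int, value: str) -> bool:
        """Acknowledge unless a higher number was already promised (old coordinator)."""
        if not self.up or seq < self.promised:
            return False
        self.promised = seq
        self.accepted_seq, self.accepted_value = seq, value
        return True

    def on_commit(self, value: str) -> None:
        if self.up:
            self.committed = value     # e.g. recorded as the next log entry


def choose_value(promises: list[tuple[int, Optional[str]]], proposed: str) -> str:
    """Adopt the highest-numbered previously accepted value; otherwise choose freely."""
    prior = [p for p in promises if p[1] is not None]
    if prior:
        return max(prior, key=lambda p: p[0])[1]
    return proposed


def run_round(seq: int, proposed: str, replicas: list[Acceptor]) -> Optional[str]:
    """One coordinator attempt; returns the committed value, or None if it must restart."""
    majority = len(replicas) // 2 + 1
    # Step 1: election (propose / promise).
    promises = [p for p in (r.on_propose(seq) for r in replicas) if p is not None]
    if len(promises) < majority:
        return None                    # lost election: retry with a higher number
    # Step 2: select the value and send "accept"; count acknowledgements.
    value = choose_value(promises, proposed)
    acks = sum(r.on_accept(seq, value) for r in replicas)
    if acks < majority:
        return None                    # no majority: restart the election
    # Step 3: a majority acknowledged, so send "commit" to all replicas.
    for r in replicas:
        r.on_commit(value)
    return value
```

For example, with replicas 3 and 4 down, `run_round(1, "A", replicas)` still commits "A" on the three live replicas. If a later coordinator runs `run_round(2, "B", replicas)` with all five replicas up, the promises from replicas 0 to 2 carry "A", so the new coordinator re-proposes "A" instead of "B" and the agreed value never changes. A coordinator still using sequence number 1 afterwards receives no promises, and its accept messages are rejected.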