

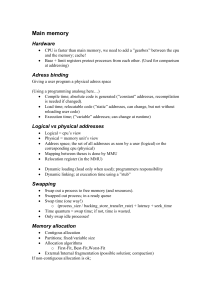

CS 470 Operating Systems - Lecture 20

Friday, February 25

Reminder: Homework 4 due today. Homework 5 posted; due Wednesday after spring break. Questions?

Outline

- Fragmentation
- Paging
- Page tables
- Translation look-aside buffer (TLB)
- Effective memory access time
- Very large address spaces

Variable-Size Partitions

| Process | Memory request | CPU burst time (ms) |
|---|---|---|
| P1 | 600K | 10 |
| P2 | 1000K | 5 |
| P3 | 300K | 20 |
| P4 | 700K | 8 |
| P5 | 500K | 15 |

The processes arrive at time 0 in the order specified.

If the time quantum q = 1, then P2 terminates at t = 14, leaving a 1000K hole. This is enough to load P4, leaving a 300K hole. P1 terminates at t = 28, and the OS allocates P5, leaving a 100K hole.

Resulting memory layout:

| Region | Size |
|---|---|
| OS | 400K |
| P5 | 500K |
| Hole | 100K |
| P4 | 700K |
| Hole | 300K |
| P3 | 300K |
| Hole | 260K |

In this scheme, releasing is easy: just add the hole to the head of a linked list. Allocation is much harder: if more than one hole is big enough, which hole should be used? First fit? Best fit? Worst fit?

Fragmentation

Both fixed-size and variable-size partition schemes suffer from fragmentation, that is, memory space that is unused due to the memory organization.

Variable-size partition organization causes external fragmentation. That is, the unused memory is outside of any allocation. In particular, eventually all the holes will be small, so while there may be enough total free space to run another program, there is not enough contiguous free space to do so.

Fixed-size partition organization causes internal fragmentation. That is, a process has been allocated more memory than it needs to run, so the unused memory is inside an allocation. On average, a partition will be only half filled.
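The three hole-selection policies named above (first fit, best fit, worst fit) can be sketched as follows. This is a minimal illustration, not the lecture's own code: the function name `pick_hole`, the list-of-sizes representation, and the tie-breaking rule (lowest index wins) are all assumptions. The hole sizes are the three holes from the scenario above (100K, 300K, 260K).

```python
def pick_hole(holes, request, policy):
    """Return the index of the hole chosen for `request`, or None if no hole fits.

    holes   -- list of hole sizes in K, in address order (hypothetical layout)
    request -- requested size in K
    policy  -- "first", "best", or "worst"
    """
    # Candidate holes that are large enough, as (size, index) pairs in address order.
    fits = [(size, i) for i, size in enumerate(holes) if size >= request]
    if not fits:
        return None
    if policy == "first":
        return fits[0][1]                          # first hole that is big enough
    if policy == "best":
        return min(fits)[1]                        # smallest hole that is big enough
    if policy == "worst":
        return max(fits, key=lambda f: f[0])[1]    # largest hole
    raise ValueError("unknown policy: " + policy)


holes = [100, 300, 260]                  # holes from the scenario, in K
print(pick_hole(holes, 250, "first"))    # 1 (the 300K hole)
print(pick_hole(holes, 250, "best"))     # 2 (the 260K hole)
print(pick_hole(holes, 250, "worst"))    # 1 (the 300K hole)
print(pick_hole(holes, 500, "best"))     # None, although 660K is free in total
```

The last call shows external fragmentation: the holes add up to 660K, but no single hole can satisfy a 500K request.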
With internal fragmentation, even when all of the memory is allocated, the system could have run more programs had the partitions been smaller.

Since fixed-size partitions are determined by the memory architecture, there is not much that can be done about internal fragmentation. But we can and should do something about external fragmentation when using variable-size partitions.

E.g., in the earlier scenario, when P2 terminates, it releases 1000K, of which P4 took 700K, leaving a 300K hole. If this free space were added to the other 260K hole, the resulting 560K would be large enough to hold P5 as well.

A simple technique to handle fragmentation is to coalesce holes that are next to each other, forming one larger hole of contiguous memory. This can be done just after a process terminates, but it would not help in our scenario, since the holes are not contiguous.

A better technique is compaction. That is, put all the free space together by moving processes. E.g.,

- Slide all processes towards one end
- Move processes from one end to the other
- Move processes in the middle to the ends

Deallocation becomes more difficult.

Compaction can be done by swapping processes out to disk and then back into a different location. This requires a data structure to keep track of what is on disk and where. We usually want to do this anyway, so that programs can be "pre-loaded" (resolving memory addressing, etc.) and loading into memory is faster than loading directly from the file system.

Static, Complete, Contiguous Organization

Generally, fixed-size partitions are favored over variable-size partitions.
They are faster to allocate and deallocate, and are simpler to manage. We just need to make sure the partition size is big enough, but not too big.

Static, Complete, Non-Contiguous Organization

Recall: the issues in storage organization include providing support for:

- single vs. multiple processes
- complete vs. partial allocation
- fixed-size vs. variable-size allocation
- contiguous vs. fragmented allocation
- static vs. dynamic allocation of partitions

So far we have looked at static, complete, contiguous schemes. What happens if we allow non-contiguous allocation?

Paging

In non-contiguous allocation schemes, the logical address space is still contiguous, but it is divided into multiple partitions that are mapped separately into (possibly) non-contiguous physical space.

The simplest such scheme is paging, which uses fixed-size partitions. Logical memory is divided into fixed-size partitions called pages. Physical memory is divided into partitions of the same size called frames. The backing store is also divided this way.

An admitted program is allocated memory by finding enough physical frames to map the logical pages. Since all the partitions are the same size (logical page, backing store partition, physical frame), any frame can accept any page.

The MMU for this is more complex. It needs a page table (basically an array) that is indexed by page number and whose elements are frame numbers.

Address Translation

[Figure: the CPU issues a logical address (p, d); the page table maps page number p to frame number f; the physical address (f, d) is sent to main memory.]

Page size is determined by hardware. Size is usually a power of 2 between 512 bytes (9 bits of displacement) and 8192 bytes (13 bits of displacement).
A power of 2 makes address translation easy: a 2^m byte logical address space, divided by 2^n bytes per page, results in 2^(m-n) logical pages, so there are (m-n) bits of page number and n bits of displacement.

Very Small, Concrete Example

16 bytes of logical address space (2^4 bytes), 4 bytes per page (2^2 bytes) => 4 logical pages (2^2 pages) => 2 bits page number, 2 bits displacement

| page# | contents | displacement |
|---|---|---|
| 00 | abcd | 00 (a), 01 (b), 10 (c), 11 (d) |
| 01 | efgh | 00 (e), 01 (f), 10 (g), 11 (h) |
| 10 | ijkl | 00 (i), 01 (j), 10 (k), 11 (l) |
| 11 | mnop | 00 (m), 01 (n), 10 (o), 11 (p) |

32 bytes of physical memory (2^5 bytes), 4 bytes per frame (2^2 bytes) => 8 physical frames (2^3 frames) => 3 bits frame number, 2 bits displacement

Page table:

| p | f |
|---|---|
| 0 | 5 |
| 1 | 6 |
| 2 | 1 |
| 3 | 2 |

Physical memory:

| f | contents |
|---|---|
| 0 | |
| 1 | ijkl |
| 2 | mnop |
| 3 | |
| 4 | |
| 5 | abcd |
| 6 | efgh |
| 7 | |

Larger Example

- 8192 bytes logical address space
- 1024 bytes per logical page / physical frame
- 32,768 bytes physical memory

How many logical pages? How many bits in a logical address? How many physical frames? How many bits in a physical address?

Paging

Paging is a form of dynamic relocation. Every page has its own base "register".

Advantages are the same as for all fixed-size partition schemes: no external fragmentation; all frames are alike, so any free frame can be used, and allocation/deallocation is efficient.

Likewise the disadvantages: some internal fragmentation, e.g., if a request is 1 byte more than the page size. Expect about half a page of internal fragmentation per process.

Page size affects the performance of systems. Smaller is better for utilization (less internal fragmentation), but slower, e.g., for disk transfers from backing store.
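The very small example above can be checked mechanically. The sketch below is only an illustration: the names `translate`, `logical`, and `physical` are made up here, and the page table is modeled as a plain Python list with the values from the example (page 0 in frame 5, page 1 in frame 6, page 2 in frame 1, page 3 in frame 2).

```python
PAGE_BITS = 2                   # n: bits of displacement (4 bytes per page)
PAGE_SIZE = 1 << PAGE_BITS
page_table = [5, 6, 1, 2]       # index = page number p, value = frame number f


def translate(logical_addr):
    """Map a 4-bit logical address to a 5-bit physical address."""
    p = logical_addr >> PAGE_BITS          # high bits: page number
    d = logical_addr & (PAGE_SIZE - 1)     # low bits: displacement
    f = page_table[p]                      # page table lookup
    return (f << PAGE_BITS) | d            # frame number followed by displacement


# Load each logical page into the frame named by the page table.
logical = "abcdefghijklmnop"
physical = [" "] * 32
for p, f in enumerate(page_table):
    for d in range(PAGE_SIZE):
        physical[f * PAGE_SIZE + d] = logical[p * PAGE_SIZE + d]

addr = 0b1001                   # page 10, displacement 01, i.e. 'j'
print(translate(addr))          # 5, i.e. frame 001, displacement 01
print(physical[translate(addr)])  # j
```

Because the page size is a power of 2, splitting the address needs only a shift and a mask, and building the physical address needs no addition.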
Pages are getting larger as memory and disks get faster and cheaper. Current systems usually use 2KB or 4KB pages.

Page Tables

With complete allocation, a new process enters only when all of its memory requirements can be granted. The PCB holds the page table (PT), which is loaded on a context switch.

Implementation of the PT is an issue. If it is stored in memory, two physical memory accesses are needed per logical access: one for the PT entry and one for the data itself. This doubles the memory access time, slowing the system down by half.

Translation Look-aside Buffer (TLB)

The standard solution for implementing PTs is to use a very fast, small, special hardware cache called a translation look-aside buffer (TLB).

A TLB is a set of associative registers containing (key, value) pairs, meaning that they are wired together to receive a key, compare it to all of the stored keys simultaneously, and output the value corresponding to any matching key in one step.

[Figure: a TLB holding the pairs (key1, value1) through (key4, value4); a key goes in and the matching value comes out.]

TLB hardware is fairly expensive, so TLBs tend to be small. Some have as few as 8 entries, but some have as many as 2K entries.

To use a TLB, it is put between the CPU and the PT. The basic idea is to look for an entry for page p in the TLB and only look in the PT if there is no match in the TLB.

Address Translation with TLB

[Figure: the CPU issues a logical address (p, d); p is looked up in the TLB's (p#, f#) pairs. On a TLB hit, f comes directly from the TLB; on a TLB miss, f comes from the page table. The physical address (f, d) is sent to main memory.]

When there is a TLB hit, the frame number is obtained nearly instantaneously. When there is a TLB miss, the page table must be consulted, resulting in an extra memory access. The frame number is then loaded into the TLB, possibly replacing an existing entry.
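The hit/miss behavior described above can be modeled in software. The following is a rough sketch under stated assumptions: a real TLB compares all keys in parallel in hardware, whereas this model uses a dictionary; the class name `TLB`, the FIFO replacement policy, and the reference string are all hypothetical choices, since the slides do not say which entry gets replaced.

```python
from collections import OrderedDict


class TLB:
    """Toy TLB model: a small page -> frame cache in front of the page table."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()    # page -> frame, oldest entry first
        self.hits = 0
        self.misses = 0

    def lookup(self, page, page_table):
        if page in self.entries:        # TLB hit: no page table access needed
            self.hits += 1
            return self.entries[page]
        self.misses += 1                # TLB miss: consult the page table
        frame = page_table[page]
        if len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)   # assumed policy: evict the oldest entry
        self.entries[page] = frame
        return frame


page_table = [5, 6, 1, 2]               # page table from the small example
tlb = TLB(capacity=2)
frames = [tlb.lookup(p, page_table) for p in [0, 0, 1, 1, 0, 2, 2]]
print(frames)                           # [5, 5, 6, 6, 5, 1, 1]
print(tlb.hits, tlb.misses)             # 4 3
```

Every lookup returns the same frame number the page table would give; the TLB only changes how often the page table has to be read.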
The TLB must be flushed on a context switch.

Effective Memory Access Time

The effect of a TLB is calculated based on the hit ratio of memory accesses, i.e., the percentage of times the desired page number is in the TLB.

For example, assume 100ns to access memory, 20ns to search the TLB, and an 80% hit ratio.

- Mapped (TLB hit) memory access takes 120ns (TLB search + memory access).
- Unmapped (TLB miss) memory access takes 220ns (TLB search + PT access + memory access).

Effective memory access time (emat) is computed by weighting each case by its probability:

emat = TLB hit % * TLB hit time + TLB miss % * TLB miss time

emat = .80 * 120ns + .20 * 220ns = 140ns

This is a 40% slowdown over a direct 100ns memory access, but better than the 100% slowdown without a TLB.

What is the emat if the hit ratio is increased to 98%?

Hit ratio depends on the size of the TLB. Studies have shown that 16-512 entries can get 80-98%. The Motorola 68030 has 22 entries. The Intel 486 has 32 entries and claimed a 98% hit ratio.

Paging Issues

Sharing is easy. We can set up the PTs of multiple processes to have the same entries for shared parts of the logical address space. This is especially good for code segments, as long as they are reentrant (i.e., the program does not modify itself).

Most modern OSs support very large address spaces that require very large PTs. 32-bit addresses are common; 64-bit addresses are becoming so.

Very Large Address Spaces

For example, a 32-bit address space with 4KB pages results in 20 bits of page number and 12 bits of displacement. If each PT entry is 32 bits (4 bytes), we need 2^20 * 4 bytes = 4MB for the PT alone!

We must divide the PT into smaller pieces using paging. We then have a PT to find the actual PT.
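The 4MB figure follows directly from the address split. Here is a small sketch of that arithmetic; the function name `flat_page_table` and its return shape are assumptions made for illustration, and it only handles page sizes that are powers of 2.

```python
def flat_page_table(address_bits, page_size, entry_bytes):
    """Return (page-number bits, displacement bits, PT size in bytes)
    for a single-level page table. Assumes page_size is a power of 2."""
    offset_bits = page_size.bit_length() - 1    # log2(page_size)
    page_bits = address_bits - offset_bits
    entries = 1 << page_bits                    # one PT entry per logical page
    return page_bits, offset_bits, entries * entry_bytes


page_bits, offset_bits, pt_bytes = flat_page_table(32, 4096, 4)
print(page_bits, offset_bits)       # 20 12
print(pt_bytes // 2**20)            # 4 (MB)
print(pt_bytes // 4096)             # 1024 pages needed just to hold the PT
```

The last line shows why the PT itself gets paged: at 4KB per page, a flat table for one process would occupy 1024 pages.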
For 32-bit addressing, we need two levels of paging.

The page number part is divided into the page table page number (pp) and the page table displacement (pd).

pp is used to index the PT's PT to find the location of the part of the PT containing the needed page number. pd is used to index the obtained part of the PT to get the frame number.

For a 64-bit address space, we need four levels of PTs. Under the same assumptions as before:

Unmapped (TLB miss) memory access is 520ns (20ns for TLB search + 400ns for 4 PT accesses + 100ns for memory access).

For a 98% hit ratio:

emat = .98 * 120ns + .02 * 520ns = 128ns

Only slightly slower than the 1-level case! This shows the importance of TLB hardware.
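The emat calculations in this lecture all follow one pattern, which can be written as a short function. This is a sketch using the lecture's assumed timings (100ns memory access, 20ns TLB search) as defaults; the function name `emat` and the `pt_levels` parameter are introduced here for illustration.

```python
def emat(hit_ratio, mem_ns=100, tlb_ns=20, pt_levels=1):
    """Effective memory access time in ns.

    A TLB hit costs one TLB search plus one memory access.
    A TLB miss additionally costs one memory access per page table level.
    """
    hit_time = tlb_ns + mem_ns
    miss_time = tlb_ns + pt_levels * mem_ns + mem_ns
    return hit_ratio * hit_time + (1 - hit_ratio) * miss_time


print(f"{emat(0.80):.1f}")                 # 140.0  (1 level, 80% hit ratio)
print(f"{emat(0.98):.1f}")                 # 122.0  (1 level, 98% hit ratio)
print(f"{emat(0.98, pt_levels=4):.1f}")    # 128.0  (4 levels, 98% hit ratio)
```

With a 98% hit ratio, going from one to four page table levels adds only about 6ns per access on average, because the extra PT accesses are paid on just 2% of references.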