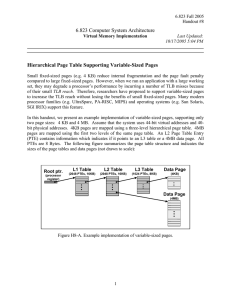

page table


Paging
Andrew Whitaker
CSE451

Review: Process (Virtual) Address Space
[Figure: an address space divided into user space and kernel space]
- Each process has its own address space
- The OS and the hardware translate virtual addresses to physical frames

Multiple Processes
[Figure: proc1 and proc2, each with its own user space above a shared kernel space]
- Each process has its own address space
- And, its own set of page tables
- Kernel mappings are the same for all

Linux Physical Memory Layout

Paging Issues
- Memory scarcity
  - Virtual memory, stay tuned…
- Making Paging Fast
- Reducing the Overhead of Page Tables

Review: Mechanics of address translation
[Figure: a virtual address (virtual page #, offset) indexes the page table to obtain a page frame #; the physical address (page frame #, offset) selects a location in physical memory (page frame 0 … page frame Y)]
- Problem: page tables live in memory

Making Paging Fast
- We must avoid a page table lookup for every memory reference
  - This would double memory access time
- Solution: Translation Lookaside Buffer
  - Fancy name for a cache
- TLB stores a subset of PTEs (page table entries)
- TLBs are small and fast (16-48 entries)
  - Can be accessed “for free”

TLB Details
- In practice, most (> 99%) of memory translations are handled by the TLB
- Each processor has its own TLB
- TLB is fully associative
  - Any TLB slot can hold any PTE
- Who fills the TLB? Two options:
  - Hardware (x86) walks the page table on a TLB miss
  - Software (MIPS, Alpha) routine fills the TLB on a miss
- TLB itself needs a replacement policy
  - Usually implemented in hardware (LRU)

What Happens on a Context Switch?
- Each process has its own address space
- So, each process has its own page table
- So, page-table entries are only relevant for a particular process
- Thus, the TLB must be flushed on a context switch
  - This is why context switches are so expensive

Alternative to flushing: Address Space IDs
- We can avoid flushing the TLB if entries are associated with an address space
[Figure: entry layout, field widths in bits: ASID (4), V (1), R (1), M (1), prot (2), page frame number (20)]
- When would this work well?
- When would this not work well?
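To make the TLB and ASID slides concrete, here is a minimal Python sketch of address translation through a small, fully associative TLB with LRU replacement, where entries are tagged with an address space ID so nothing has to be flushed on a context switch. The names `TLB`, `translate`, `page_tables`, and the 4 KB `PAGE_SIZE` are assumptions made for illustration; they are not from the slides, and real hardware does this lookup in parallel rather than with a dictionary.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed page size (12 offset bits); not from the slides


class TLB:
    """Toy fully associative TLB with LRU replacement, tagged by ASID."""

    def __init__(self, capacity=16):
        self.capacity = capacity
        self.entries = OrderedDict()  # (asid, vpn) -> page frame number
        self.hits = 0
        self.misses = 0

    def lookup(self, asid, vpn):
        key = (asid, vpn)
        if key in self.entries:
            self.entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self.entries[key]
        self.misses += 1
        return None

    def insert(self, asid, vpn, pfn):
        key = (asid, vpn)
        if key not in self.entries and len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)  # evict least recently used
        self.entries[key] = pfn
        self.entries.move_to_end(key)


def translate(tlb, page_tables, asid, vaddr):
    """Translate vaddr for the address space `asid`.

    page_tables maps asid -> {virtual page number: page frame number}.
    A missing mapping raises KeyError, standing in for a page fault.
    """
    vpn, offset = divmod(vaddr, PAGE_SIZE)
    pfn = tlb.lookup(asid, vpn)
    if pfn is None:
        pfn = page_tables[asid][vpn]  # the slow path: walk the page table
        tlb.insert(asid, vpn, pfn)
    return pfn * PAGE_SIZE + offset
```

Because the key is `(asid, vpn)`, two processes can both have virtual page 0 cached at once without seeing each other's translations. With a small `capacity` and many active address spaces, entries from different processes evict each other, which is one way to think about the "when would this not work well?" question.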
TLBs with Multiprocessors
[Figure: one page table (page frame #) with translations cached in both TLB 1 and TLB 2]
- Each TLB stores a subset of page table state
- Must keep state consistent on a multiprocessor

Today’s Topics
- Page Replacement Strategies
- Making Paging Fast
- Reducing the Overhead of Page Tables

Page Table Overhead
- For large address spaces, page table sizes can become enormous
- Example: IA64 architecture
  - 64-bit address space, 8KB pages
  - Num PTEs = 2^64 / 2^13 = 2^51
  - Assuming 8 bytes per PTE: Num Bytes = 2^54 = 16 Petabytes
- And, this is per-process!

Optimizing for Sparse Address Spaces
- Observation: very little of the address space is in use at a given time
[Figure: virtual address space]
- Basic idea: only allocate page tables where we need to
  - And, fill in new page tables lazily (on demand)

Implementing Sparse Address Spaces
- We need a data structure to keep track of the page tables we have allocated
- And, this structure must be small
  - Otherwise, we’ve defeated our original goal
- Solution: multi-level page tables
  - Page tables of page tables
  - “Any problem in CS can be solved with a layer of indirection”

Two level page tables
[Figure: a virtual address (master page #, secondary page #, offset) indexes the master page table, which points to a secondary page table, which yields the page frame number; the physical address (page frame #, offset) selects a location in physical memory (page frame 0 … page frame Y); some master page table entries are empty]
- Key point: not all secondary page tables must be allocated

Generalizing
- Early architectures used 1-level page tables
- VAX, x86 used 2-level page tables
- SPARC uses 3-level page tables
- Alpha, 68030 use 4-level page tables
- Key thing is that the outer level must be wired down (pinned in physical memory) in order to break the recursion

Cool Paging Tricks
- Basic Idea: exploit the layer of indirection between virtual and physical memory

Trick #1: Shared Libraries
- Q: How can we avoid 1000 copies of printf?
- A: Shared libraries
  - Linux: /usr/lib/*.so
[Figure: Firefox and Open Office each map libc; a single copy of libc resides in physical memory]

Shared Memory Segments
[Figure: virtual address space 1 and virtual address space 2 map the same region of physical memory]

Trick #2: Copy-on-write
- Copy-on-write allows for a fast “copy” by using shared pages
  - Especially useful for “fork” operations
- Implementation: pages are shared “read-only”
- OS intercepts write operations, makes a real copy
[Figure: PTE fields: V, R, M, prot, page frame number]

Trick #3: Memory-mapped Files
- Normally, files are accessed with system calls
  - Open, read, write, close
- Memory mapping allows a program to access a file with load/store operations
[Figure: a region of the virtual address space mapped to Foo.txt]
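As a rough illustration of Trick #3, the sketch below uses Python's standard `mmap` module to modify a file through indexed reads and writes on a mapping instead of `read`/`write` calls. The function name `uppercase_in_place` is hypothetical, chosen only for this example; the file must be non-empty, since mapping a zero-length file raises an error.

```python
import mmap


def uppercase_in_place(path):
    """Uppercase ASCII letters in a file via a memory mapping."""
    with open(path, "r+b") as f:
        # Length 0 maps the whole file; the default access is read/write.
        with mmap.mmap(f.fileno(), 0) as m:
            for i in range(len(m)):
                b = m[i]            # behaves like a load from memory
                if 97 <= b <= 122:  # ASCII 'a'..'z'
                    m[i] = b - 32   # behaves like a store to memory
            m.flush()               # push modified pages back to the file
```

After the mapping is set up, the loop contains no explicit file I/O calls: the operating system pages file contents in on first touch and writes dirty pages back, which is the same virtual-to-physical indirection the earlier tricks exploit.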