The Workshops of the Thirtieth AAAI Conference on Artificial Intelligence

Artificial Intelligence Applied to Assistive Technologies and Smart Environments:

Technical Report WS-16-01

A Prototype Intelligent Assistant to Help Dysphagia Patients Eat Safely At Home

Michael Freed1, Brian Burns1, Aaron Heller1, Daniel Sanchez1, Sharon Beaumont-Bowman2

1 SRI International, 333 Ravenswood Ave., Menlo Park, CA 94025 / {firstname.lastname}@sri.com

2 Brooklyn College, 2900 Bedford Ave., Brooklyn, NY, 11210 / SharonB@brooklyn.cuny.edu

al., 2013). These strategies have been shown to significantly improve patient health and well-being (Low et al.,

2001). Patient compliance, however, is poor, as few patients can continuously self-monitor eating behavior (Colodny, 2005; Krisciunas et al., 2012; Shinn et al., 2013).

Monitoring by caregivers can be beneficial, but caregivers

are often inadequately trained or unavailable (Chadwick et

al., 2006; Nund et al., 2014; Warm, Parasuraman, and

Matthews, 2008). Such monitoring produces significant

relationship strain and caregiver burden (Patterson et al.,

2013; Nund et al., 2014). “It is poignant to note that we can

assist people with dysphagia by first assisting their caregivers” (Cichero and Altman, 2012).

Our preliminary results indicate the feasibility of shifting the burden of monitoring and encouraging compliance

to a device that actively monitors patient eating behavior

and provides appropriate real-time feedback. Dysphagia

patients are often advised to eat in front of a mirror to enhance self-awareness. We have constructed a proof-of-concept prototype for a laptop- or tablet-based personal

digital assistant application that, when positioned in front

of the patient, functions as both a mirror and a source of

feedback to optimize safe compensatory strategies. The

prototype successfully monitors compliance for a single

eating strategy—specifically, whether the patient is compliant with flexing the head downward into a “chin tuck”

after spooning food into the mouth.

We hypothesize that currently available machine-vision

and machine-learning algorithms can be adapted to monitor adherence to the most commonly prescribed safe

eating strategies, and that this monitoring can be done in

real time by using widely available consumer electronics

hardware. Our ultimate goal is to develop a fully functional, patient-friendly Dysphagia Coach application that provides direct feedback on eating behavior, thereby increasing patients’ eating safety and well-being while decreasing

dysphagia-related mortality, morbidity, and healthcare

costs (Archer et al., 2013; Krisciunas et al., 2012). Here

we describe the initial steps towards this goal including a

Abstract

For millions of people with swallowing disorders, preventing

potentially deadly aspiration pneumonia requires following prescribed safe eating strategies. But adherence is poor, and caregivers’ ability to encourage adherence is limited by the onerous

and socially aversive need to monitor another’s eating. We

have developed an early prototype for an intelligent assistant that

monitors adherence and provides feedback to the patient, and

tested monitoring precision with healthy subjects for one strategy

called a “chin tuck.” Results indicate that adaptations of current

generation machine vision and personal assistant technologies

could effectively monitor chin tuck adherence, and suggest the

feasibility of a more general assistant that encourages adherence

to a wide range of safe eating strategies.

Dysphagia Patients Need Help Adhering to Safe

Eating Strategies

Dysphagia, or difficulty swallowing, is a widespread and

often devastating disorder that affects 10–30% of the elderly population (Barczi and Sullivan, 2000), including 11–

16% of individuals living in the community (Bloem et al.,

1990; Kawashima et al., 2004) and 55–68% of nursing-home residents (Kayser-Jones and Pengilly, 1999; Steele et al., 1997). It is particularly prevalent among patients with

stroke (50–75%), Parkinson’s Disease (up to 95%), and

other neurological conditions (Martino et al., 2005; Hunter

et al., 1997). Dysphagia creates numerous risks; chief

among them is aspiration pneumonia—an infection caused

by accidental ingestion of bacteria-laden food into the

lungs (Marik and Kaplan, 2003). Mortality ranges from

10–70% (DeLegge, 2002). For those with neurogenic dysphagia, aspiration pneumonia is the most likely cause of

death (Marik, 2001).

Clinicians frequently prescribe risk-reducing compensatory strategies such as tucking the chin to the chest before

swallowing to protect the airway, and making an “effortful

swallow” to clear residual food in the pharynx (Archer et

Copyright © 2016, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved.


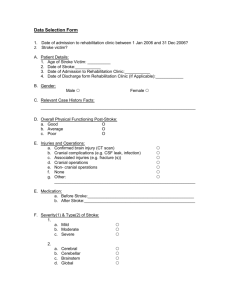

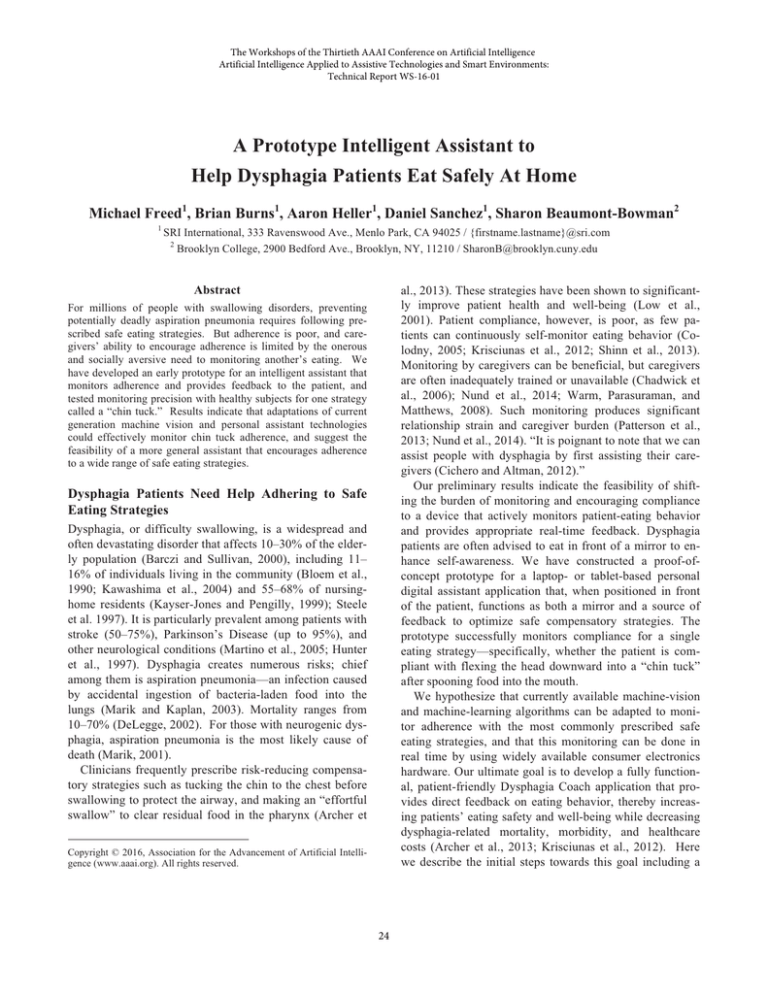

| Action Sequence | Common Errors | System Requirement (sysreq) |
|---|---|---|
| | | 1. Detect nearby face in view of camera (determine when to initiate monitoring) |
| Sit in upright position | Head/trunk misaligned | 2. Estimate head/trunk alignment |
| Sip liquid/spoon in food | Swallow before tuck | 3. Track cup/utensil movement toward and into mouth |
| Tuck chin to chest | Forget to perform tuck; incomplete tuck; swallow mid-tuck | 4. Estimate head angle to torso in sagittal plane |
| Swallow in that position | Raise head during swallow | 5. Detect swallow (timing and omission errors) |
| Raise the head | Swallow post tuck | |

Table 1. Chin Tuck Actions and Errors

speech-language pathologists (SLPs) after assessing a

patient’s swallowing deficits. The maneuver involves lowering the chin to the chest before swallowing. This moves

the epiglottis into a protective position over the airway,

compensating for a weak or delayed reflex. Examples of

other strategies include a head turn, where a patient rotates

the head prior to the swallow to compensate for weakness

or paralysis on one side of the pharyngeal wall, and making an effortful swallow to clear residual food.

Table 1 summarizes the sequence of actions for a correctly performed chin tuck, errors that might occur at each

step, and corresponding system requirements for automatically detecting correct and incorrect actions. We envision

the monitoring process starting with a patient sitting at a

table in front of an already upright tablet computer and

selecting the Dysphagia Coach app. The app would immediately detect the patient’s presence and begin monitoring.

If that proves to be more effort than patients are willing or

able to make, a real possibility given common comorbidities such as movement disorders and cognitive deficits, an alternative approach could use dedicated always-on hardware.

breakdown of requirements for monitoring adherence to

the chin tucking eating strategy, a description of the technical approach taken in our initial prototype, and initial

results in assessing the monitoring accuracy.

Monitoring Requirements for an Automated

Dysphagia Coach

Current practice for helping patients adhere to safe eating

practices relies on human caregivers, with no technology-based support to reduce caregiver burden. However, technology-based solutions exist for the related problem of

helping patients perform swallowing rehabilitation exercises. SwallowStrong, for example, uses a custom molded

mouthpiece and other specialized hardware to measure key

performance indicators for patients’ therapeutic goals as

they perform swallowing exercises (Constantinescu et al.,

2014). iSwallow, an Apple iOS app marketed as a “Personal Rehabilitation Assistant” (UCDavis-CV&S website),

alerts patients when it is time to perform scheduled exercises and provides real-time feedback to the patient using

data from microphones taped to the patient’s throat. Such

devices are similar to what we would expect of a fully realized Dysphagia Coach in the types of activities monitored

and the need to provide real-time performance feedback.

However, differences in time frame of use (weeks or

months vs. years) and objective (recovering lost function

vs. maintaining safety) imply a very different patient attitude, with the Dysphagia Coach likely having to meet a

higher standard for ease of use for patients to remain motivated to continue using the device. For this reason, we

treat the goals of low effort setup and passive monitoring

(i.e., without body-attached sensors) as requirements.

To assess whether these requirements could be met using current generation machine vision technology, we developed a prototype Dysphagia Coach to monitor adherence in performing a commonly used safe eating strategy

called a chin tuck maneuver. A chin tuck is one of nine

typical strategies (Groher and Crary, 2009) prescribed by

Prototype Approach and Initial Results

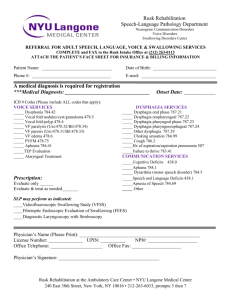

As illustrated in Figure 1, our prototype provides a simple

chin tuck monitoring and user feedback capability. A

monitoring session begins when the system detects a face

within an estimated threshold distance from, and orientation toward, the camera (Viola and Jones, 2004), indicating that the patient may be ready to eat (sysreq-1, left image). It recognizes spoons with and without food, and

tracks movement to and from the mouth (sysreq-3, middle

image). When the system detects that the spoon has been

inserted into the mouth, it monitors for a large, downward

rotation, or “tuck,” of the head (sysreq-4, right image),

followed by an upward rotation into a rest position. If a

tuck is detected after spoon insertion and before any subsequent insertion, the system gives the patient positive feedback. Otherwise, it indicates that the tuck was omitted. The initial prototype does not incorporate means for estimating head-trunk alignment (sysreq-2) or detecting swallow actions (sysreq-5), although we are exploring approaches to these separately.

Figure 1. Dysphagia Coach prototype showing detection of user and feedback on a chin tuck maneuver.
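The feedback rule described above (a tuck must follow each spoon insertion and precede the next one) can be sketched as a small state machine. This is an illustrative sketch only, not the prototype's code: the `Event` names, the `monitor` function, and the choice to report an omission when a session ends with an unanswered insertion are all assumptions made for the example.

```python
from enum import Enum


class Event(Enum):
    """Hypothetical detector outputs; the names are illustrative."""
    SPOON_IN = "spoon_in"  # spoon detected entering the mouth
    TUCK = "tuck"          # large downward head rotation detected


def monitor(events):
    """Return one feedback string per spoon insertion.

    An insertion is 'ok' if a tuck is seen after it and before the next
    insertion; otherwise it is reported as 'tuck omitted'.
    """
    feedback = []
    awaiting_tuck = False
    for event in events:
        if event is Event.SPOON_IN:
            if awaiting_tuck:
                # A new insertion arrived before any tuck for the last one.
                feedback.append("tuck omitted")
            awaiting_tuck = True
        elif event is Event.TUCK and awaiting_tuck:
            feedback.append("ok")
            awaiting_tuck = False
    if awaiting_tuck:
        # Assumption: an unanswered insertion at session end is an omission.
        feedback.append("tuck omitted")
    return feedback


session = [Event.SPOON_IN, Event.TUCK, Event.SPOON_IN, Event.SPOON_IN, Event.TUCK]
print(monitor(session))  # ['ok', 'tuck omitted', 'ok']
```

Tucks that occur with no pending insertion are ignored in this sketch, since the described rule only ties feedback to spoon insertions.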

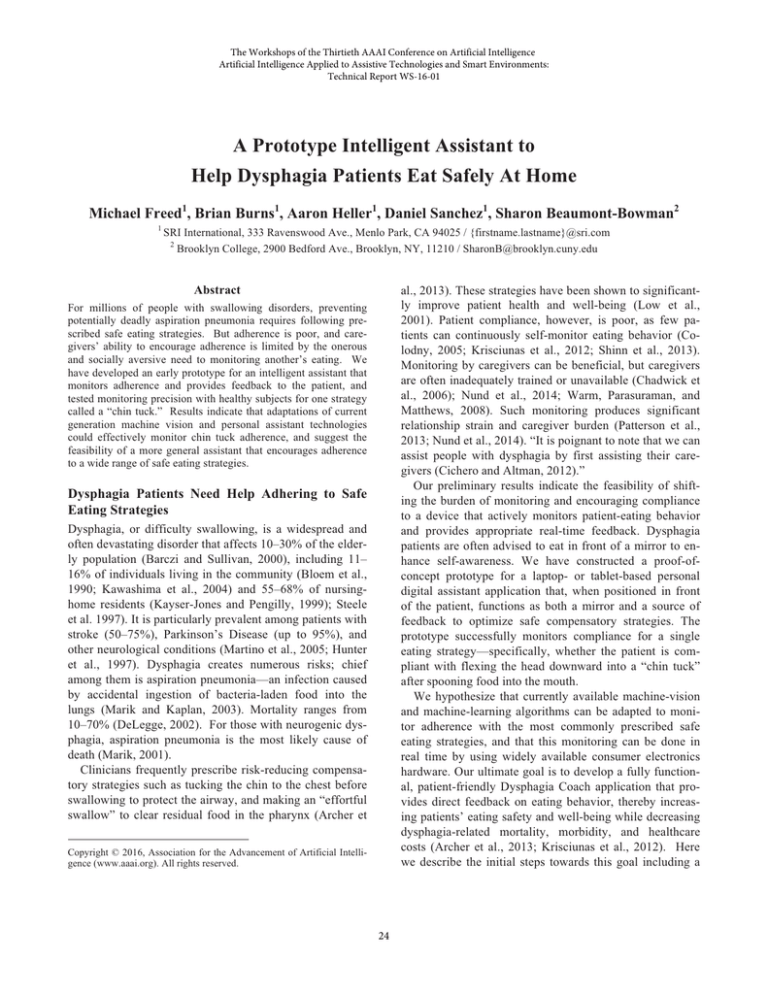

Of the requirements explored using our prototype, the

need to precisely estimate head angle (sysreq-4) using sensors and processors on commodity hardware raised the

most significant question of technical feasibility. We selected an approach incorporating landmark-based machine

vision algorithms (Sagonas et al., 2013; Asthana et al.,

2014; Cao, Wei, and Sun, 2014; Zhu and Ramanan, 2012)

running on a standard, camera-equipped laptop. Landmark

algorithms find standard head and neck reference points,

such as the tip of the nose, in a video image, and then map

them to corresponding points in a reference digital

head/neck model. Head pose is then estimated by computing the best 3D rotation fit between points and model

(Dementhon and Davis, 1995). Importantly for detecting

chin tucks, tilt angle can be treated as an approximation of

head-torso angle in the sagittal plane.
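Once a pose fit has produced a 3D head rotation, the tilt angle can be read off the rotation matrix. The sketch below illustrates only that last step, not the landmark detection or the pose fit itself. It assumes a coordinate convention that the paper does not specify: the x axis runs left to right through the head, so rotation about x is nodding in the sagittal plane, and the rotation is decomposed as Rz·Ry·Rx. The function names and the sign of "downward" are likewise assumptions.

```python
import math


def rotation_about_x(angle_deg):
    """Rotation matrix for a nod (tilt) of angle_deg about the x axis."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0],
            [0.0, c, -s],
            [0.0, s, c]]


def rotation_about_y(angle_deg):
    """Rotation matrix for a head turn of angle_deg about the y axis."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]


def matmul(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def tilt_angle_deg(rotation):
    """Tilt (rotation about x) of a 3x3 rotation matrix, in degrees.

    Valid for the assumed R = Rz @ Ry @ Rx decomposition when the
    turn about y stays within (-90, 90) degrees.
    """
    return math.degrees(math.atan2(rotation[2][1], rotation[2][2]))


# A 30-degree tilt combined with a 20-degree head turn still yields
# a tilt of approximately 30 degrees under this convention.
pose = matmul(rotation_about_y(20.0), rotation_about_x(30.0))
print(round(tilt_angle_deg(pose), 6))
```

Separating tilt from turn this way is what lets the tilt component stand in for head-torso angle even when the head is not facing the camera squarely, under the stated assumptions.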

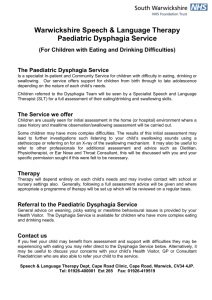

Discriminating a partial tuck from a complete tuck requires not only detecting downward head rotation, but also

determining whether the head-torso angle (the amount of

tuck) is large enough given a patient-specific minimum

determined by the clinician. Figure 2 illustrates our approach to determining whether landmark-based vision

methods can accurately assess head-torso angle. The top

row shows three frames from a tuck video sequence, with

the green dots indicating automatically identified head and

neck landmarks. The second row shows the estimated head

angle “pose” of those video frames on a digital head model. Pose is automatically computed by mapping landmarks

in the image to corresponding reference points on the model (yellow dots). The third row compares the head inclination angle as estimated by machine-vision software (blue

graph line, red data points corresponding to the shown

sample frames) to the actual angle measured by hand

(green circles). Pilot data (n=5) showed an RMS estimation error of 3.6 degrees. This result was less than the within-subject variability of 5.2 degrees for correctly performed chin tucks, suggesting that estimation error will not be found to be clinically significant upon more comprehensive testing.

Figure 2. Chin-tuck angle is determined by finding landmark head/neck locations in a video image, mapping those to a digital head model, and then computing the head pose. Results show accuracy to within 4 degrees.
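The accuracy comparison above rests on a root-mean-square (RMS) error between software-estimated and hand-measured angles. A minimal sketch of that computation follows. The function names are hypothetical and the sample angles are invented for illustration; only the 3.6 and 5.2 degree figures come from the pilot data reported here.

```python
import math


def rms_error(estimated, measured):
    """Root-mean-square difference between paired angle measurements."""
    if len(estimated) != len(measured) or not estimated:
        raise ValueError("need two non-empty sequences of equal length")
    squared = [(e - m) ** 2 for e, m in zip(estimated, measured)]
    return math.sqrt(sum(squared) / len(squared))


def error_below_variability(rms_deg, within_subject_deg):
    """True when estimation error is smaller than natural tuck variability."""
    return rms_deg < within_subject_deg


# Invented sample angles in degrees, not data from the study.
estimated = [12.0, 25.0, 41.0]
measured = [10.0, 27.0, 40.0]
print(round(rms_error(estimated, measured), 3))

# Figures reported in the text: 3.6 degrees RMS error, 5.2 degrees variability.
print(error_below_variability(3.6, 5.2))  # True
```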

Discussion and Next Steps

Advances in computing and sensing hardware, machine

vision, machine learning, and personal assistant AI are

increasing the potential diversity and efficacy of support

that might be offered to people with disabilities living at

home, improving their safety and quality of life, while reducing dependence on caregivers. As one example potentially affecting millions of people, a Dysphagia Coach would decrease cognitive burden associated with adherence to safe eating strategies (Brehm and Self, 1989), decreasing the effort needed to sustain motivation (Muraven and Baumeister, 2000; Reason, 1995). As an alternative to socially aversive and onerous monitoring by a human caregiver (Bandura, 1997), it would appeal to patients’ needs

for self-efficacy (Ryan and Deci, 2000) and thus help reinforce behaviors that reduce morbidity, caregiver burden,

and healthcare costs (Archer et al., 2013; Krisciunas et al.,

2012).

We designed the described Dysphagia Coach prototype

to get feedback from clinicians on the concept, and to

gauge the technical feasibility of the approach. Feedback

confirmed the potential benefit to patients and provided

guidance in prioritizing which safe eating strategies are

most important to monitor. Clinicians also indicated strong

interest in using the technology to collect patient performance data, both to improve care for individual patients

and to advance scientific understanding of dysphagia.

Next steps include creating a corpus of video data with a

diverse patient population in diverse settings, using it to

test and refine the current non-individualized visual modeling approach, extending it if needed to use person-specific appearance and behavior data, and then generalizing to additional eating strategies such as head turns and effortful

swallows. In parallel, we will seek to understand user experience considerations affecting adoption and ongoing

use. We anticipate that common dysphagia comorbidities

such as hearing loss, visual attention deficits, and cognitive

deficits will prevent any single user interaction design from

being suitable for all patients, and that multiple user interaction designs will be needed. These efforts will prepare

the way for clinical trials to assess patient benefit.

Cao X, Wei Y, Sun J. Face alignment by explicit shape regression. Int J Comput Vis. 2014;107(2):177-190. doi:

10.1007/s11263-013-0667-3.

Brehm, J.W. and E.A. Self, The intensity of motivation. Annu Rev

Psychol, 1989. 40: p. 109-31.

Chadwick DD, Jolliffe J, Goldbart J, Burton MH. Barriers to

caregiver compliance with eating and drinking recommendations

for adults with intellectual disabilities and dysphagia. J Appl Res

Intellect Disabil. 2006;19(2):153–162. doi:10.1111/j.1468-3148.2005.00250.x.

Cichero JA, Altman KW. Definition, prevalence and burden of

oropharyngeal dysphagia: A serious problem among older adults

worldwide and the impact on prognosis and hospital resources.

Nestle Nutr Inst Workshop Ser. 2012;72:1–11. doi:10.1159/000339974. PMID: 23051995.

Colodny, N. Dysphagic independent feeders' justifications for

noncompliance with recommendations by a speech-language

pathologist. Am J Speech Lang Pathol. 2005;14(1):61-70. PMID:

15962847.

Constantinescu G, Stroulia E, Rieger J. Mobili-T: A mobile swallowing-therapy device: An interdisciplinary solution for patients

with chronic dysphagia. 2014 IEEE 27th Int Symp Comput Med

Syst. 2014:431–434. doi:10.1109/CBMS.2014.47.

Swallow Solutions. SwallowStrong Device. http://swallowsolutions.com/mostinfo.

DeLegge MH. Aspiration pneumonia: Incidence, mortality, and

at-risk populations. J Parenter Enteral Nutr. 2002;26(6

Suppl):S19-24. PMID: 12405619.

Dementhon, D. and Davis, L. Model-based object pose in 25 lines of code. International Journal of Computer Vision. 1995;15(1):123-141.

Groher ME, Crary MA. Dysphagia: Clinical Management in

Adults and Children. Maryland Heights, Missouri: Elsevier

Health Sciences; 2009.

References

Hunter PC, Crameri J, Austin S, Woodward MC, Hughes AJ.

Response of Parkinsonian swallowing dysfunction to dopaminergic stimulation. J Neurol Neurosurg Psychiatry. 1997;63(5):579–

83. PMID: 9408096.

Archer SK, Wellwood I, Smith CH, Newham DJ. Dysphagia

therapy in stroke: A survey of speech and language therapists. Int

J Lang Commun Disord. 2013;48(3):283–96. doi:10.1111/1460-6984.12006. PMID: 23650885.

Kawashima K, Motohashi Y, Fujishima I. Prevalence of dysphagia among community-dwelling elderly individuals as estimated

using a questionnaire for dysphagia screening. Dysphagia.

2004;19(4):266–271. doi:10.1007/s00455-004-0013-6. PMID:

15667063.

Asthana A, Zafeiriou S, Cheng S, Pantic M. Incremental face

alignment in the wild. Proc IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2014). 2014.

Bandura, Albert. Self-efficacy: The exercise of control. Macmillan, 1997.

Kayser-Jones J, Pengilly K. Dysphagia among nursing home

residents. Geriatric Nursing. 1999;20(2):77–84. PMID: 10382421.

Barczi SR, Sullivan PA, Robbins, J. How should dysphagia care

of older adults differ? Establishing optimal practice patterns.

Semin Speech Lang. 2000;21:347–361. PMID: 11085258.

Krisciunas GP, Sokoloff W, Stepas K, Langmore SE. Survey of

usual practice: Dysphagia therapy in head and neck cancer patients. Dysphagia. 2012;27(4):538–49. doi:10.1007/s00455-012-9404-2. PMID: 22456699.

Bloem BR, Lagaay AM, van Beek W, Haan J, Roos RAC,

Wintzen AR. Prevalence of subjective dysphagia in community

residents aged over 87. BMJ Br Med J. 1990;300(6726):721–722.

PMID: 2322725.

Low J, Wyles C, Wilkinson T, Sainsbury R. The effect of compliance on clinical outcomes for patients with dysphagia on videofluoroscopy. Dysphagia. 2001;16(2):123–127. doi:10.1007/s004550011002. PMID: 11305222.

Zhu X, Ramanan D. Face detection, pose estimation, and landmark localization in the wild. Proc IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2012;2879-2886.

Martino R, Foley N, Bhogal S, Diamant N, Speechley M, Teasell

R. Dysphagia after stroke: Incidence, diagnosis, and pulmonary complications. Stroke. 2005;36(12):2756–63. doi:10.1161/01.STR.0000190056.76543.eb.

Marik PE. Aspiration pneumonitis and aspiration pneumonia. N

Engl J Med. 2001;344(9):665-71. PMID: 11228282.

Marik PE, Kaplan D. Aspiration pneumonia and dysphagia in the

elderly. Chest. 2003;124(1):328–36. doi:10.1378/chest.124.1.328.

PMID: 12853541.

Muraven, M. and R.F. Baumeister, Self-regulation and depletion

of limited resources: does self-control resemble a muscle? Psychol Bull, 2000. 126(2): p. 247-59.

Nund RL, Ward EC, Scarinci NA, Cartmill B, Kuipers P, Porceddu SV. Carers’ experiences of dysphagia in people treated for head and neck cancer: A qualitative study. Dysphagia. 2014;29(4):450–8. doi:10.1007/s00455-014-9527-8. PMID: 24844768.

Patterson JM, Rapley T, Carding PN, Wilson JA, McColl E. Head

and neck cancer and dysphagia: Caring for carers. Psychooncology. 2013;22(8):1815–20. doi:10.1002/pon.3226. PMID: 23208850.

Reason J. Understanding adverse events: human factors. Qual

Health Care. 1995;4(2):80-9. PMID: 10151618.

Ryan, R.M. and E.L. Deci, Intrinsic and Extrinsic Motivations:

Classic Definitions and New Directions. Contemp Educ Psychol,

2000. 25(1): p. 54-67.

Sagonas C, Tzimiropoulos G, Zafeiriou S, Pantic M. 300 Faces

in-the-wild challenge: The first facial landmark localization challenge. Proc International Conference on Computer Vision

(ICCVW). 2013;397(403):2-8. doi:10.1109/ICCVW.2013.59.

Shariatzadeh MR, Huang JQ, Marrie TJ. Differences in the features of aspiration pneumonia according to site of acquisition:

Community or continuing care facility. J Am Geriatr Soc. 2006;54(2):296–302. doi:10.1111/j.1532-5415.2005.00608.x. PMID: 16460382.

Shinn EH, Basen-engquist K, Baum G, et al. Adherence to preventive exercises and self-reported swallowing outcomes in postradiation head and neck cancer patients. Head Neck.

2013;35(12):1707–1712. doi:10.1002/HED. PMID: 24142523.

Steele CM, Greenwood C, Ens I, Robertson C, Seidman-Carlson

R. Mealtime difficulties in a home for the aged: Not just dysphagia. Dysphagia. 1997;12(1):45–50. PMID: 8997832.

UC Davis Center for Voice & Swallowing. iSwallow App.

http://www.ucdvoice.org/iswallow-iphone-app.

Viola P, Jones M. Robust real-time object detection. Int J Comput

Vis. 2004;57(2):137-154.

Warm JS, Parasuraman R, Matthews G. Vigilance requires hard

mental work and is stressful. Hum Factors. 2008;50(3):433–441.

PMID: 18689050.
