Appendix G: Current View Reliability and Validity

advertisement

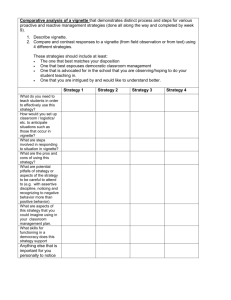

Appendix G: Current View Reliability and Validity Data Analysis Team: Andy Whale, Amy Macdougall, Peter Martin 1. Current View – Interrater Reliability using Vignettes Vignette Development: Analysis of expert rater reliability (four clinicians familiar with the Current View form rate ten case vignettes) 1.1 Introduction This is an analysis of ratings of 10 CAMHS case vignettes. The vignettes were written by four different authors in the style of those used in the Current View training material (reference: http://pbrcamhs.org/training/ ). The authors subsequently rated one another’s vignettes. These ratings were compared, and vignettes were changed to resolve ambiguities that led to differences in ratings. A set of ‘standard’ ratings for each case vignette was agreed between authors. The revised vignettes were sent to four CAMHS clinicians who were familiar with the Current View Form. Each of the ten vignettes was then rated independently by two of the four raters, so that each rater rated five case vignettes. Raters also gave indicative CGAS scores. The purpose of this analysis is twofold: - to provide indications of potential problems with vignettes, such as ambiguity in formulations, that would lead to poor interrater reliability; to test common understanding of the coding rules of the Current View Tool. Two sets of indicators of reliability were computed: 1. ‘by vignette’, to identify vignettes which may need revision; 2. ‘by problem’ / ‘by factor’ (i.e. by presenting problem, context factor, or complexity factor) to identify characteristics that may be difficult to rate. The analysis was performed separately for presenting problems, complexity factors, context factors, and CGAS ratings. Statistics computed are: ICC: Intraclass correlation coefficient. This shows the proportion of the variance in ratings that is shared between all three raters: the author and the two independent raters. In this analysis, ICC was used for ordinal ratings (presenting problems and complexity factors). The ICC is a number that varies between 0 and 1. The bigger the ICC, the better the interrater reliability. For the purpose of ICC computation, ‘not known’ ratings were treated as missing (for the by-vignette analysis) or as equivalent to ‘none’ (for by-characteristic analysis). There are no scientific cut-offs for saying how big an ICC needs to be ‘acceptable’. As a very rough guidance: ICCs larger than .9 indicate rather good agreement. ICCs smaller than .7 indicate poor agreement. ICCs between .7 and .9 are in a grey area. Total Agreement. Total agreement is simply the proportion of ratings on which a pair of raters agree. A total agreement of 1 indicates that two raters agree in all their ratings of a 1 given vignette, a rating of 0 indicates that they agree on no rating. ‘Not known’ was treated as a separate category. Cohen’s kappa: Cohen’s kappa is based on total agreement, but adjusts this for the probability of ‘chance agreement’ due to some ratings being more frequent than others across both raters. A Cohen’s kappa of 1 indicates perfect agreement between two raters. Values close to zero indicate poor agreement. (Cohen’s kappa can be negative, indicating extremely poor agreement.) ‘Not known’ was treated as a separate category. Cohen’s kappa cannot be computed when one or both raters give the same rating to all problems/factors. Cohen’s kappa does not take account of the ordered nature of problem and context ratings. 2 1.2 Analyses by Vignette Table G.1: Presenting Problems (by-vignette analysis) Vignette ICC Cohen’s kappa Au-R1 Au-R2 R1-R2 1 .889 .438 .131* .077* 2 .756 .089 .413 .398 3 .803 .704 .403 .444 4 .946 .641 .250* .176* 5 .664 .155* .384 .220* 6 .694 .306 .551 .054* 7 .641 .193* .126* .596 8 .817 .044* .529 .189* 9 .778 .300 .398 .545 10 .813 .400 .538 .451 Notes: ICC (Mixed Model Consistency Single Rating) computed on valid ratings only (excluding ‘not known’). Cohen’s kappa computed on all ratings. Au: Authors, R1: Rater 1, R2: Rater 2. Low kappas are starred* if the disagreement appears to be mainly due to frequent use of ‘not known’ by one or more raters. (This concerns vignettes 1 ,4 , 5, 8 involving rater ‘1’ vignette 6 involving rater ‘2’; vignette 7 involving ‘3’ and ‘4’.) Quick summary: Overall agreement appears to be acceptable for most vignettes, but only if we ignore substantial inter-rater differences in the use of the ‘not known’ category. In particulary, one rater (‘1’) was considerably more likely to use ‘not known’ than others. Differences in the use of ‘not known’ were responsible for most of the very low kappa values. The ICCs, which treat ‘not known’ as missing, indicate mostly good or acceptable agreement. No vignette stands out as particularly problematic. Overall, ‘not known’ was used 116 times in 900 ratings (13 %). 3 Table G.2: Complexity Factors (by-vignette analysis) Vignette 1 Total Agreement (proportion) Au- Au-R2 R1-R2 R1 .79 .93 .79 Cohen’s kappa 2 .86 .86 .93 .462 .462 .600 3 .79 .79 .93 .432 .506 .844 4 .71 .71 .64 .533 .521 .421 5 .86 .93 .79 --- --- 6 1 1 1 ---- --- .105* --- 7 .79 .71 .79 .354* .282* .548 8 .71 .93 .64 .533 .854 .426 9 .79 .86 .79 .580 .600 .580 10 .71 .86 .71 .417 .725 .417 Au-R1 .192 AuR2 .641 R1R2 .311 Notes: ‘Not known’ was treated as distinct category. “---“ for Cohen’s kappa indicates that statistic cannot be computed because one rater’s ratings are constant. Low kappas are starred* if the disagreement appears to be mainly due to frequent use of ‘not known’ by one or more raters. Quick summary: Complexity factors appear to be the easiest to rate overall. A limitation of the current analysis, however, is that all raters tended to rate most factors as ‘not present’ in all vignettes. This means that most agreement was in terms of identifying the absence of a factor, which is less meaningful than agreement on the presence of a factor. The category ‘not known’ was used 49 times in 420 ratings (12 %). Differences in the use of ‘not known’ did lead to some low kappa values, but not to the same extent as was the case for Presenting Problems. 4 Table G.3: Context Problems (by-vignette analysis) Vignette ICC (items) Cohen’s kappa Au-R1 Au-R2 R1-R2 1 --- (1) .077 .000* .118* 2 .319 (6) .200 .200 .714 3 .553 (6) .250 .333 .000 4 --- (2) .077* .000* .333 5 .185 (6) --- .226 --- 6 .488 (5) 1 -.059 -.059 7 --- (2) -.071* -.029 .063* 8 --- (1) .143* .000* .368 9 --- (2) .111* .143 .280* 10 .367 (4) --- --- .000 Notes: ICC (Mixed Model Consistency Single Rating) computed on valid ratings only (excluding ‘not known’). Number of items included in ICC calculation is shown in brackets. Cohen’s kappa computed on all ratings. “---“indicates that statistic cannot be computed because of too many missing values (for ICC) or one rater’s ratings are constant (for kappa). Quick summary: Context problems appear to be the most difficult to rate. This is reflected in the low reliability indices for all vignettes, as well as in the frequency of the use of ‘not know’. The category ‘not known’ was used 30 times in 180 ratings (17 %). CGAS ICC = .578. This suggests moderate agreement on level of functioning at best. The largest discrepancies were in vignette 1 (70 vs. 50), vignette 3 (70 vs. 54), and vignette 8 (60 vs. 45). 5 1.3 Analysis by Problem / Factor The following analyses look at the same data as the analyses above, but from a different perspective. Above, we investigated agreement between raters on the ratings of a given vignette. Here, we focus on how reliable each presenting problem, context factor or complexity factor can be rated. Since we have ten vignettes and three raters per vignette, each problem or context/complexity factor was rated 30 times. We can look at the interrater reliability of these ratings by considering how similar ratings on the same vignette are compared to ratings on different vignettes. This is measured by the ICC1 coefficient. A limitation of the analyses that follow is that there is little variability in ratings for some problems and factors. For example, no vignette was designed to present a child with Gender Discomfort Issues (presenting problem number 26), and therefore all author ratings of this problem are zero. Where such lack of variability exists, a meaningful analysis of interrater reliability is impossible. Furthermore, even if there are non-zero ratings, if almost all ratings are zero, the strength of reliability as measured by ICC can be influenced very strongly by a single agreement or disagreement. Presenting Problems There are thirty presenting problems overall. Six ICCs were not computable due to lack of variation (all ratings were ‘none’ or ‘not known’). Many others, although sometimes quite high or quite low, were not meaningful due to low variation (only 1 to 3 ratings different from zero). We do not present all 24 ICCs, but instead present their distribution. Table G.4. Distribution of ICCs (by-problem analysis) Min 1st quartile median 3rd quartile Max 0 .34 .51 .85 1 Note: Reliability coefficient: ICC1 (one-way). “Not known” and “none” were treated as being the same (value 0). Quick summary. Only 40 % of ICCs (10 out of 24 computable) are above 0.7. So reliability of most ratings would seem to be poor. Furthermore, most of the high ICCs stem from variables with very little variation (e.g. only one vignette was rated to be different from zero by any rater). It is unclear whether we have sufficient variation in the data to assess reliability. Some problems that had substantial variation in ratings and poor reliability were: - Depression (ICC = .40) Carer Management (ICC = .35) Family Relationship Difficulties (ICC = .28) Peer relationship difficulties (ICC = .16) Overall, the reliability of problem ratings seems poor. This is in contrast to the results from the by-vignette analysis. 6 Table G.5. Complexity Factors: by-factor analysis ICC LAC Young carer 1 .78 LD 1 Serious physical health issues 0 Pervasive Developmental Disorders --- Neurological Issues --- Current Protection Plan .74 Child in Need .74 Refugee or asylum seeker 1 Experience of war, torture, trafficking .50 Abuse or neglect .55 Parental health issues 0 Contact with Youth Justice system 0 Financial difficulties .56 Note: Reliability coefficient: ICC1 (one-way). “Not known” and “none” were treated as being the same (value 0). “---“ indicates that the ICC1 was not computable due to lack of variation (i.e. because all ratings were the same). Quick summary. Some factors appear to be rated with perfect reliability (ICC=1), for others there appears to be no relationship between different raters’ ratings (ICC=0). However, due to the rarity of all complexity factors in these vignettes ICCs can be strongly influenced by a single instance of agreement or disagreement between two raters with respect to one vignette. Overall, there is some evidence here that the reliability is less than would be desirable. This is in contrast to the results from the by-vignette analysis of complexity factors. 7 Table G.6. Contextual Problems and EET (by-factor analysis) ICC (not known = none) Home -.11 School, Work, Training -.26 Community .28 Service Engagement .65 Attendance Difficulties .70 Attainment Difficulties .38 Note: Reliability coefficient: ICC1 (one-way). “Not known” and “none” were treated as being the same (value 0). Quick summary. Only Service Engagement and Attendance Difficulties were rated more or less reliably. Otherwise agreement was poor. Correlations between ratings for Home and School/Work/Training were negative. This suggests that raters had different and nonoverlapping criteria for assessing these types of contextual problems. 8 1.4 Examples To illustrate the meaning of reliability coefficients, Tables G.7 & G.8 below display the ratings given for each of the ten vignettes on Depression and Family Relationship Difficulties, respectively. Table G.7. Ratings for Depression Vignette Authors Rater A Rater B 1 moderate moderate mild 2 mild none mild 3 mild mild none 4 5 Not known mild Not known moderate 6 mild Not known Not known mild 7 moderate moderate 8 none none Not known none 9 none none none 10 none none none none Note: ICC = 0.4. Note that “Rater A” and “Rater B” are not necessarily the same person across vignettes. Table G.8. Ratings for Family Relationship Difficulties Vignette Authors Rater A Rater B 1 mild mild 2 moderate mild Not known mild 3 moderate mild mild 4 severe 5 severe Not known mild Not known mild 6 severe mild severe 7 severe moderate severe 8 severe moderate moderate 9 none moderate none 10 none Not mild known Note: ICC = 0.28. Note that “Rater A” and “Rater B” are not necessarily the same person across vignettes. 9 Consider Table G.7, which shows the ratings for Depression. There were four instances where all three raters agreed. These agreements occurred where either all raters thought that there was no depression present (‘none’), or where all raters thought that the category was not rateable (‘not known’). Significantly, there was never agreement between all raters on the presence AND severity of depressive symptoms. These disagreements are what is reflected in the relatively low intraclass correlation coefficient (ICC = 0.4). Consider Table G.8, which shows the ratings for Family Relationship Difficulties. There was no instance where all raters agreed. Although in most cases of disagreement, the differences of ratings was only one point on the ordinal scale (e.g. ‘severe’ vs. ‘moderate’), there were also several instances of two-point disagreements. Overall, agreement between raters was poor, and this is reflected in the low intraclass correlation coefficient (ICC=0.28). 1.5 Conclusion Overall, reliability of ratings is moderate at best. In a by-vignette analysis, reliability coefficients, for the most part, indicate acceptable reliability, at least if ‘don’t know’ ratings are ignored. However, in a ‘by problem’ analysis, interrater reliability is poor. It may be that the relatively high coefficients in the by-vignette analysis are a result of the fact that raters can often agree on the absence of many types of problems in a given vignette (i.e. most agreement is on ‘none’/’not present’). However, there is less agreement when it comes to either the identification of a problem, or in rating an identified problem’s severity. The ‘not known’ category is not used consistently between raters. It appears that some raters have a systematic tendency to use ‘not known’ more often than others. Context Factors and Education/Employment/Training variables appear to be most difficult to rate overall. In summary, reliability of vignette ratings is poorer than would be desirable, although given the limitations of the data it is unclear exactly how poor it is. At the present stage of the investigation we cannot say to what degree poor reliability is an artefact of the vignette method, i.e. whether ‘real cases’ would be easier or more difficult to rate. See the third section of this appendix, ‘Naturalistic Interrater Reliability Study’. 10 2. Current View – Interrater Reliability using Vignettes Independent Vignette Rating: Analysis of expert rater reliability (five clinicians familiar with the Current View form rate ten case vignettes following their development). 2.1 Introduction In the previous section four CAMHS clinicians each rated five of the set of ten case vignettes. These were analysed alongside a set of ‘standard’ ratings which had been developed by the authors of the vignettes. In this section, we use a further set of ratings based on the same vignettes as in section G.1. Five CAMHS clinicians each rated all ten vignettes, independently of each other. These were different clinicians from those in section G.1, however we note that two were involved in the development of the Current View tool. The tool consists of four components: 1. 30 ‘problem descriptors’, rated on a scale from ‘none’, ‘mild’, ‘moderate’ and ‘severe’. 2. 14 ‘complexity factors’, rated as either ‘yes’ or ‘no’. 3. 4 ‘contextual problems’, rated on a scale from ‘none’, ‘mild’, ‘moderate’ and ‘severe’. 4. 2 ‘education, employment or training’ difficulties, rated on a scale from ‘none’, ‘mild’, ‘moderate’ and ‘severe’. In addition, every question on all 4 components has a ‘not known’ option, which is intended to be used when a rater feels that they do not have enough information to answer a specific question. It should not be used when a rater is unsure which level of severity to select. The intention behind a reliability analysis is to determine how much agreement there is between different raters on the Current View. Since all the raters are looking at the same information and (should) have had the same guidance as to how to complete the tool, their responses to the tool should be relatively similar. A major difficulty we have encountered when attempting to answer this question is the high level of ‘not knowns’ which were reported. It would not be sensible to attempt to conduct an analysis where there are a high number of unknowns, so instead, we have examined the reliability with which raters have determined that a vignette does not contain enough information to answer specific questions. 11 2.2 Results The first thing we noticed when looking at the distribution of ‘not known’ responses is the level of difference between raters in how often they responded ‘not known’, in response to all 500 question (10 vignettes, 50 questions per vignette). Table G.9: Total ‘Not Known’s per rater Rater 1 Rater 2 Total ‘not known’ 64 67 Rater 3 Rater 4 Rater 5 110 89 118 It is also worth noting which vignettes elicited more ‘not known’ responses, and so perhaps are lacking in clarity. Table G.10: Total ‘Not Known’s per vignette Vignette 1 Vignette 2 Vignette 3 Total ‘not known’ Total ‘not known’ Vignette 4 Vignette 5 33 14 17 102 25 Vignette 6 Vignette 7 Vignette 8 Vignette 9 Vignette 10 33 75 85 36 29 12 We can further break this down and examine how many ‘not known’ responses each rater gave to each vignette. Table G.11: Total ‘Not Known’s by rater and vignette Rater 1 Rater 2 Rater 3 Rater 4 Rater 5 Vignette 1 2 4 7 0 20 Vignette 2 1 2 5 1 5 Vignette 3 2 3 8 0 4 Vignette 4 21 21 17 17 26 Vignette 5 0 0 7 4 14 Vignette 6 2 7 13 2 9 Vignette 7 10 6 18 27 14 Vignette 8 14 18 16 20 17 Vignette 9 1 4 12 16 3 Vignette 10 11 2 8 2 6 Considering Table G.11, it seems there is a reasonable level of agreement between the five raters in terms of how many ‘not known’ ratings have been given to each vignette. When examining the data at the level of individual questions however, it becomes apparent that there is significant disagreement between raters. For 35 out of the 50 questions, there was a high level of disagreement1 about whether the vignette contained enough information to provide an answer, so while raters are tending to give a similar number of ‘not known’ answers for each vignette overall, it seems that they are giving them in response to different questions. 1 ‘High level of disagreement’ meaning that the level agreement between the raters was not significantly different from zero (which would results if two raters’ ratings were independent and any agreement came about by chance alone. Kappa values for the items with statistically significant Kappa ranged from 0.206 to 1, Kappa values for items where Kappa was not statistically different from zero ranged from -0.064 to 0.219: see overleaf for the scale. 13 In terms of addressing the issue of agreement between raters on the actual raw data, we conducted an analysis ignoring the ‘not known’ responses and focusing only on cases where raters had enough information to rate the severity of a condition. There was a large amount of variation between questions, with near perfect agreement on some questions (such as question 4; ‘Compelled to do or think things (OCD)’ while other questions showed almost no agreement at all (e.g. question 2; ‘Anxious in social situations (Social anxiety/phobia)’ The ranges and average level of agreement for each sub-section (as well as the Current View overall) is in Table G.12. Only questions where there was sufficient2 information (i.e. lack of ‘not known’ responses), are included; • 5 out of 30 questions from the problem descriptor sub-scale. • 4 out of 14 questions from the complexity factors sub-scale. • 3 out of 4 questions from the contextual factors sub-scale. • 2 out of 2 questions from the education/employment/training sub-scale. 2 Fewer than 5 ‘not known’ responses across all five raters. 14 Table G.12: Agreement between raters across Current View form. Problem Complexity Contextual Education/ Descriptors Factors Problems Employment/ Overall Training Range3 0.26 - 1 0.4 - 1 0.17 – 0.55 0.39 – 0.92 0.17 – 1 Mean 0.54 0.85 0.32 0.66 0.6 Agreement Moderate Near Perfect Fair Substantial Moderate 4 Agreement is measured on a scale from 0 to 1, where 0 is no agreement whatsoever, and 1 is perfect agreement. Within that scale, values below 0.2 are said to show ‘slight’ agreement, 0.21 to 0.4 represents ‘fair’ agreement, 0.41 to 0.6 represents ‘moderate’ agreement, 0.61 to 0.8 represents ‘substantial’ agreement, and values above 0.8 represent ‘near perfect’ agreement. It is also important to note that these estimates were obtained omitting all ‘not known’ scores, which are a major source of disagreement between raters, and, as such, are likely to be an optimistic rating of agreement. 3 Based on average pairwise agreement between raters for each question Agreement was measured using a combination of the ‘weighted Kappa’ and ‘Light’s Kappa’ methods; weighted Kappa scores were calculated for each pair of raters on each question, with scores weighted using the squared method. The mean average of weighted Kappa values for all pairwise comparisons was taken to represent the overall level of agreement between all 5 raters for each question. 4 15 2.3 Conclusions It is concerning that there appears to be a low level of agreement between these 5 raters, even on the relatively straightforward question as to whether each vignette contains enough information to provide an answer to most questions. This may be a consequence of studying the level of agreement using vignettes rather than real case data, where we would perhaps expect the rater to be more familiar with the case and to have more information than is present in some of these vignettes (see section G.3 of this appendix for analysis using data from face to face interviews). Where there were not a large number of ‘not known’ responses given, there was a moderate level of agreement between raters. It is also important to note the differing level of agreement on different sub-scales, with the complexity factors having a much better level of agreement, which is likely to be down to the yes/no nature of these questions compared to the more open response scale of the other questions. One final point of interest is that two of the five raters (raters 2 and 5) were involved in developing the Current View; although there was not a significant difference in the number of ‘not known’ responses given by these two raters, on the fourteen questions for which there was enough data, these two raters had a much higher average level of agreement than the other three raters. Average Kappa for raters 2 and 5 was equal to 0.8, compared to an average Kappa between raters 1, 3 and 4 of 0.51. Average agreement between the two ‘expert’ raters and the remaining three was similar. This raises the possibility that there is a difference among the raters in their level of experience with the Current View, which could be a potential source of variation overall. The most important factor to consider in future work examining the reliability of the Current View is addressing the level of ‘not known’ responses; it could be useful to ensure that all raters are using the ‘not known’ responses appropriately (that is, when there is not enough information to make a judgement in response to a specific question, and not because they are unsure as to which category a response belongs in). It could also be a consequence of using vignettes5. See the following section (G.3) for an analysis using face to face interviews, which are completed using all of the information gained in a clinical setting 5 The use of vignettes also introduces a second problem, which is a lack of variability. As a result of only having 10 cases to rate, a number of the questions do not relate at all to some cases, resulting in all (or nearly all) raters answering zero, creating instability in the Kappa statistic, so any interpretation should be treated with caution. 16 3. Current View – Naturalistic Interrater Reliability Study Naturalistic Ratings: Investigating reliability of Current View form using a set of data in which each patient has had two Current View forms filled out by separate clinicians. 3.1 Introduction There is at present little information on how consistently the Current View form is being used by practitioners. This analysis does not give a definitive answer to the question of consistency, but rather an indication, and discussion of the issues which present themselves when trying to answer such a question. The dataset was collected not from a designed experiment, but from practitioners who were generating two Current View forms per patient as part of their work. The issues this has introduced are discussed in section one, the methods used to analyse the data are given in section two. The sections following that give an overview of the differences between forms completed on the same patient. 3.2 Description of the data At the start of the data collection period three sites treating children or young people were contacted. In the case that two practitioners had seen the same patient, they were asked to each submit a Current View form for the purposes of comparison. In total 116 forms were submitted (for 58 patients), plus some information regarding the practitioner and his or her co-rater (the practitioner who had filled out a form for the same patient): • • • profession of practitioner, the number of times the practitioner had previously met with the patient, whether the practitioner had met the patient alongside his or her co-rater (at their last meeting or ever). The patients The youngest patient was 7, the oldest 17. Most were between 10 and 17 (75%), with 8% aged 7-9 and the remaining 17% with no age given. The prevalence of each problem description is given in the plot below. Compared to the Main sample as described in the main report, there were more patients with severe or moderate problems. 17 Figure G.1: Proportions of patients with each problem from the ‘Provisional Problem Description’ section of the Current View form. 18 Figure G.2: Types of practitioner The practitioners There were 34 practitioners, who were a mixture of Psychologists, Psychiatrists and Child Psychiatric Nurses (including students of all types). The ‘other’ category in the plot to the left includes ambiguous categories such as ‘Clinician’. It is clear from Figure G.2 that the most common type of practitioner for a patient to be seen by was Psychologist. About one third of practitioners saw only one patient (35%), others up to 14 (65%). Issues with the sample Patients seen at different times: It was not the case that all of the Current View forms were filled out on the basis of the same meeting with the patient. Information on whether practitioners had met with the patient jointly was missing for approximately one third of all forms. Of the remaining patients, roughly half had been seen jointly by two practitioners, the other half were seen some months apart (one to five, with one gap of eight months). In this time a patient’s presentation could have changed, leading to inconsistencies between the two forms for that patient. Practitioners had inconsistent prior knowledge of patients: As well as possibly seeing patients at different times, in some cases practitioners had differing prior knowledge of patients. This in fact occurred at site C only, where 60% of patients had been seen a different amount of times by practitioners (34% of overall total). In these cases one practitioner would have seen the patient between 2 and 8 times (in one case 21 times), the other practitioner just once. On sites A and B practitioners each saw the patient once (together or separately), although the information was missing for around one fifth (18%). Although the Current View form is intended to be filled out at assessment, this was not always the case in this sample. Missing values were present, in some parts of the form more than others. The ‘Provisional Problem Description’ (PPD) section was well reported, just 8% of forms had one item missing from the 30 in that section. In the ‘Details’ section, this rises to 44% having between 19 one and seven items missing (this includes information not on the form, such as practitioner’s profession). Small numbers of forms had the entire Selected Complexity Factors, Contextual Problems, or Education/Employment/Training section missing (13 for EET, 6 for CP and 5 for SCF). Overall there were few missing values. 3.3 Methods The main focus of the study was to find out how different two forms for the same patient were, on average. All of the items on the Current View form are categorical variables, with either the following options: Or a simple ‘Yes’, ‘No’ or ‘Not Known’. The first four options above can be easily coded as a simple numeric scale starting with 0 for ‘None’ going up to 4 for ‘Severe’, but the ‘Not Known’ option does not fit on this scale. Consequently, disagreements on the severity of a problem, and whether information about it is known on not, have been dealt with in separate sections. Firstly, in order to examine agreement on the use of the ‘Not Known’ option, entries on the forms were coded as follows: • • 0 if the ‘Not Known’ option was selected; 1 if any other option was selected. The distances between each form were then calculated (details will be given shortly) and plotted. Secondly, in order to be able to use a numeric scale as mentioned above, the entries were coded as: • • 0 if ‘None’ or the ‘Not Known’ option was selected, 1 for ‘Mild’, 2 for ‘Moderate’ and 3 for ‘Severe’. The differences between the forms were then measured with Gower distance (as defined in Gower (1971)), which lies on a scale between 0 and 1. The higher the Gower distance the greater the level of disagreement. Close to zero indicates the two forms are very similar, close to one indicates that they are in almost total disagreement. The Gower distance for patient defined as: = 1 | max − | − min( ) 20 Where: − | is the absolute difference between the two (coded) entries for patient ( • | • item on the form, for first practitioner, for the second), for the − min( ) is the difference between the maximum and minimum values of max • item (and is included to ensure that all variables are on the same scale), the the total number of items depended on whether the whole form was being considered ( = 50)or just the PPD section ( = 30). This is essentially the sum of the differences between entries on each part of the form, where all variables are scaled to lie on a scale between 0 and 1. The divisor is either: • • The total number of variables, in this case either the number of items on the whole form or within a section of the form, or The number of relevant problems, defined as the number of items which at least one practitioner recorded a non-zero entry. The latter option is used when it is not desirable to count agreement on the absence of a problem (see section 4 ‘Rating the Severity of a problem’ for more). In the last section agreement across problems rather than patients will be examined, using the Intra-class Correlation Coefficient (ICC, see McGraw and Wong (1996)). The aim here will be to see whether some problems are more difficult to agree on than others, or not. As the practitioners were not constant across all patients it was not possible to use a measure more suited to categorical data, such as Cohen’s weighted Kappa. 3.4 Use of the ‘Not Known’ option The ‘Not Known’ option of the form is intended to be used when a practitioner does not have enough information to make a judgement about whether a problem is present or not. The scatter plot in Figure G.3 is intended to give an indication of how consistently this option is being used across the entire form, and represents the Gower distance between the two forms that were submitted for each patient. Note that the Gower distance was calculated using the total number of items on the form as the denominator (the variables were considered symmetric, agreeing that information on a problem was not present was considered as meaninful as agreeing that it was present). What can be read from the plot (Figure G.3): • Height gives the number of times the two practitioners have used the ‘Not Known’ option in different places (there are 50 items on the form in total, and each increase of 0.02 represents a disagreement of one out of those 50 items)6 • Size gives the number of ‘Not Known’ options selected in total per patient. • Colour gives the site. 6 sometimes this is approximate due to missing values (though these are not so widespread as to cause serious misinterpretation). 21 Figure G.3: Distances between forms in terms of use of the ‘Not Known’ option The higher the point, the more times the two practitioners have used the ‘Not Known’ option differently. It seems clear that the more times the ‘Not Known’ option is used, the more disagreements there are, as the larger points are higher in the plot. Also, most practitioners do not use this option very often, as most of the points are small. 22 Out of the 58 patients, close to half have three or fewer disgreements on their forms, and a high number of disagreements between forms is fairly uncommmon. Most practitioners use the ‘Not Known’ option quite sparingly, and therefore most of the disagreements are quite small. However, of the 39 patients whose forms featured at least one ‘Not Known’ option, only two had no disagreements. So differing use of the ‘Not Known’ option is quite widespread. One explanation could be that practitioners differ in their understanding of when to use the option. Another could be that practitioners generally understand it in the same way, but the patient has presented in different ways at different times. Table G.13: Distribution of number of disagreements Number of disagreements Percentage (number) 0 1,2 or 3 36% (21) 35% (20) More than 3, less than 10 12% (7) 10 or more 17% (10) Of the ten patients who have ten or more disagreements, seven come from site C, three from B. Of the seven from C, all patients had one practitioner identified as ‘P12’, so all high scores from this site (and therefore most of the high scores for the whole set) can be traced to practitioner ‘P12’. Practitioner ‘P12’ clearly used the ‘Not Known’ option far more often than other practitioners. The number and pattern of disagreements does not change significantly when only the PPD part of the form is examined. The number of patients with three or fewer disagreements falls from 41 to 38, a very small change, and the same patients have high scores. The boxplots in Figure G.4 show the distances for each part of the form, with ‘CE’ including the last two sections (‘Contextual Problems’ and ‘Education/employment/training’). 23 Figure G.4: Distances between forms for each section of the Current View form. The last two boxplots in Figure G.4 indicate that there are fewer disagreements in the last two sections of the form than in the first, as might be expected given the more objective nature of the factors and problems listed in the former. Note that the ‘not known’ option is still used very little in these parts of the form. 24 Main points: • • • • • Most practitioners use the ‘Not Known’ option quite sparingly, and therefore most of the disagreements are quite small. However, of the 39 patients whose forms featured at least one ‘Not Known’ option, only two had no disagreements. So differing use of the ‘Not Known’ option is quite widespread. Different sites have different patterns of use. This could be down to their case mix, or different understanding of when to use the option. One practitioner selected ‘Not Known’ many more times than any of the others, and was perhaps using the ‘Not Known’ option where others recorded ‘None’. The last three sections of the form (selected contextual factors, contextual problems, education/employment/training) have fewer disagreements than the first, it may be that the factors and problems in these sections are less ambiguous than in the PPD section. 3.5 Rating the Severity of a Problem In the PPD section of the form, practitioners must rate the severity of a problem. A scale from ‘none’ to ‘severe’ is used. How much do practitioners agree on severity across all problems in this section? The scatterplot in Figure G.5 shows the distances between the two forms for each patient, for the PPD section only. However in this case, the height does not give the percentage of the form which practitioners disagree on, but the proportion of ‘relevant problems’ on which the practitioners disagree (weighted by the degree of disagreement). Relevant problems here refer to those which are rated as present by at least one practitioner. This is because the Gower distance: = 1 | max − | − min( ) has been calculated with as the number of relevant problems, instead of the total number of items on the form. A simple example may help to explain why this method has been chosen here (some may wish to skip this section and proceed to Figure G.5). Take a simplified example where the only possible ratings are 0 (‘none’) or 1 (‘condition present’, two categories are used here so there is no need to scale the variables). At least one practitioner has given a rating for the first four problems, the rest have been recorded as ‘none’. Table G.14 illustrates. 25 Table G.14: Simplified example of two Current View forms Problem Practitioner 1 Practitioner 2 Disagreement 1 1 0 OCD 1 1 0 Panics 1 0 Poses risk to others 1 0 1 1 Does not speak 0 0 0 All other problems Number of relevant problems = 4, Total number of problems in PPD section=30 Therefore the number of relevant problems is four, and the total amount of disagreement is 1-0=1 (the difference between the ratings for ‘Does not speak’ – on all other problems the practitioners agree). The Gower distance can either be calculated as: ) = = 1 = 0.25 4 or: ) = = 1 = 0.03 30 In method (A), more weight is given to the items which are rated as present and agreed upon. By including the agreement on problems which are not present in method (B), the distance becomes very low (0.03), and it seems that the practitioners are in almost total agreement. In this section method (A) will be used, and only disagreement on problems which at least one practitioner has rated as present are will be counted. We proceed to the scatter plot of these distances between forms in Figure G.5, recalling that the higher the distance, the more the two forms disagree. What can be read from the plot (Figure G.5): • Height gives the Gower distance between forms. • Size gives the number of relevant problems. • Colour gives the site. 26 Figure G.5: Distances between forms in terms of severity. Most patients across all sites have distances between 0.2 and 0.4, with distances at site C again displaying some more variability. Some examples will give an idea of what these distances mean. First a low and a high Gower distance, followed by the two median distances. A Low and a High Gower Distance Patient ‘c27’ at site B has a relatively low Gower distance of 0.22. Table G.15 shows the entries of the two practitioners’ forms who saw this patient (the parts of the form where both practitioners recorded ‘None’ are left out for brevity). Here ‘0’ indicates ‘none’ or ‘not known’. 27 Table G.15: Two Current View forms with a low level of disagreement Patient's ID Patient's Age Comments CGAS Practitioner's Name Practitioner's Profession Site Name Number of Meetings Co-rater present at your last meeting with the patients Even seen the patient jointly with your co-rater Even seen the patient jointly with your co-rater Anxious away from caregivers Anxious in social situations Anxious generally Compelled to do or think things Panics Avoids going out Repetitive problematic behaviours Depression/ low mood Self-Harm Behavioural difficulties Disturbed by traumatic events Eating issues Family relationship difficulties Peer relationship difficulties Persistent difficulties managing relationships with others Self-care issues Key to shading Agree Disagree by one point on the scale (e.g. ‘Mild’ versus ‘Moderate’) Disagree by two points (e.g. ‘Mild’ versus ‘Severe’) Disagree by three points (e.g. ‘None’ versus ‘Severe’) c27 16 c27 16 NA P33 ST B 1 1 1 1 Mild Severe Mild Severe Mild Moderate Mild Moderate Mild Mild 0 Moderate Moderate Mild Mild NA P24 Trainee Clinical Psychologist B 1 1 1 1 0 Severe Mild Severe 0 Severe 0 Mild Mild 0 Not Known Mild Mild Mild 0 Mild 0 28 One practitioner, P13, has tended to select more problems than the other, recording six more, all ‘Mild’. Where the practitioners disagree on severity, it is only by one rating point. These do not look like drastically different forms overall, even though the practitioners only agree on 7 out of 18 problems (38.9%). In contrast, patient ‘c12’ from site A has a higher Gower distance of 0.41, and summary of the practitioners’ forms in Table G.16 are coloured as above. One date is missing so it is not possible to know if the patient was seen at quite different times or not. Table G.16: Two Current View forms with a high level of disagreement Patient ID c12 c12 Patient's Age NA NA Comments Changed situation CGAS Practitioner's Name P29 P25 Practitioner's Profession Psychiatrist NA Site Name A A Number of Meetings NA 1 Co-rater present at your last meeting with the patients NA NA Even seen the patient jointly with your co-rater NA NA Anxious away from caregivers Mild Moderate Anxious in social situations Moderate Mild Anxious generally Moderate Moderate Panics 0 Mild Avoids specific things 0 Mild Repetitive problematic behaviours 0 Moderate Difficulties sitting still or concentrating Moderate Severe Behavioural difficulties Severe Mild Poses risk to others Mild 0 Carer management of CYP behaviour Severe 0 Doesn’t get to toilet in time 0 Moderate Disturbed by traumatic events Moderate Severe Family relationship difficulties Severe Mild Problems in attachment to parent/carer Severe Severe Peer relationship difficulties Severe Mild These two practitioners disagree far more often than they agree (13 times out of 15), and sometimes by more than one point on the scale. For example, ‘behavioural difficulties’ or ‘carer management’. There is a mixture of disagreements over whether a problem exists, and the severity of problems they both agree exist. These forms seem like somewhat different records of the patient’s problems. As the second practitioner’s profession is missing, it is difficult to say whether this may be due to differences in levels of experience (s/he may be a student for example). It also could be because the practitioners saw the patient at different times and had different discussions 29 with the patient, amongst other possibilities. In short it is difficult to isolate the sources of variation, in particular which might be attributable to the form itself rather than other factors. The median Gower distance The median Gower distance for the PPD part of the form lies between patients ‘c53’ and ‘c52’. Patient ‘c53’ happens to have practitioner ‘P12’, who uses the ‘Not Known’ option far more often than most other practitioners. To make the summary in Table G.17 shorter and therefore easier to interpret, the problems where one practitioner has selected ‘Not Known’ and the other ‘None’ have been left out. Patient ‘c53’ has a Gower distance of 0.2717. Table G.17: Average level of disagreement (I) Patient's ID Patient's Age Comments CGAS Practitioner's Name Practitioner's Profession Site Name Number of Meetings Co-rater present at your last meeting with the patients Even seen the patient jointly with your co-rater Anxious in social situations Anxious generally Panics Repetitive problematic behaviours Depression/ low mood Self-Harm Difficulties sitting still or concentrating Behavioural difficulties Poses risk to others Peer relationship difficulties c53 16 c53 16 55 P9 Assistant psychologist (Not IAPT trained) C 5 0 65 P12 Associate Practitioner (Not IAPT trained) C 1 0 0 Mild Mild Mild Mild Mild Mild 0 Mild Mild Mild 0 0 Mild 0 Not Known Mild Not Known Mild Severe Moderate Moderate In this case, one practitioner (‘P9’) has seen the patient five times in total, the other (‘P12’) only once. Dates were not recorded on either of the forms. Half of the disagreements are over whether a condition exists or not, with ‘P9’ recording that a condition exists more often than ‘P12’. Practitioner ‘P12’ has recorded six conditions as present, and judged three of these as more severe than ‘P9’ has. ‘P9’ records more conditions, nine, and all of them mild. The most significant difference is between the ratings for Behavioural Difficulties, which is the only difference which may affect how the patient is grouped (see later for more details on 30 grouping). Otherwise the practitioners tend to disagree often, but only by a little (one point on the scale). The other median patient (‘c52’) has a Gower distance of 0.2712. Some information for patient ‘c52’ is missing from the start of the form, so it is not possible to tell whether practitioner ‘P12’ has seen the patient before. The dates on the forms are the same, however this does not guarantee that the patients were seen at the same time. The practitioners agree on 9 of the 28 (32.1%) problems where at least one practitioner has not recorded ‘None’. 31 Table G.18: Average level of disagreement (II) Patient's ID Patient's Age Comments CGAS Practitioner's Name Practitioner's Profession Site Name Number of Meetings Co-rater present at your last meeting with the patients Even seen the patient jointly with your co-rater Anxious away from caregivers Anxious in social situations Anxious generally Compelled to do or think things Panics Avoids going out Avoids specific things Repetitive problematic behaviours Depression/ low mood Self-Harm Extremes of mood Delusional beliefs and hallucinations Difficulties sitting still or concentrating Behavioural difficulties Poses risk to others Carer management of CYP behaviour Disturbed by traumatic events Eating issues Family relationship difficulties Problems in attachment to parent/carer Peer relationship difficulties Persistent difficulties managing relationships with others Does not speak Unexplained physical symptoms Unexplained developmental difficulties Self-care issues Adjustment to health issues c52 16 c52 16 NA P19 Clinical Psychologist B NA NA NA Not Known Moderate Mild Severe Moderate Moderate Not Known Moderate Mild Severe Mild Moderate 0 Severe Severe Severe Severe Not Known Moderate Moderate Moderate Severe 0 Not Known Moderate Moderate Not Known NA P23 NA B 1 NA NA Mild Mild 0 Severe Not Known Severe Not Known Moderate Mild Moderate Not Known Mild Moderate Severe Severe Moderate Moderate Mild Moderate Moderate Severe Severe Mild Mild 0 Mild Moderate 32 This is quite a complex case compared to the previous ones. Although the practitioners disagree in some way on most of the problems, they mostly disagree by only one point on the scale (for example, ‘severe’ versus ‘moderate’ for Agoraphobia). They are in agreement that the patient has multiple severe problems, and agree on 4 out of 9 problems rated as severe by at least one practitioner. On the 5 where there they disagree, it is only by one point (the other practitioner rated the patient as ‘moderate’, nothing lower). As in the previous example, the practitioners tend to disagree often, but only by one point on the scale. The number of severe or moderate problems is similar, which suggests that patients might be grouped in the same way, despite many small differences between the forms. Summary of PPD Gower distances and Grouping Table G.19 gives a summary of the patients that have been shown in this section, plus a patient with a Gower distance three patients below the median (‘c36’) and one patient three above (‘c17’) for reference. 33 Table G.19 Grouping information Patient Gower Distance Proportion of relevant problems on which practitioners agree Patient assigned to the same super grouping? c27 0.22 7/18 = 38% No – one Getting More Help, one Multiple Emotional problems. c36 0.26 1/9 = 11% Yes – one ‘Depression’ and one ‘Self Harm’ c52 0.2712 9/28 = 32% Yes – one ‘Psychosis’ and one ‘Getting more help’ c53 0.2717 2/10 = 20% No – one ‘Getting Advice’ one ‘AUT’ c17 0.2778 2/7 = 29% No – one ‘Getting Advice’, one ‘GAP’ c12 0.41 2/15 = 13% Yes – both ‘Getting More Help’ In this small set of examples, there is not a clear relationship between the level of disagreement between the forms (the Gower distance), and whether patients would be assigned to the same grouping or not by both practitioners. Patient ‘c27’, with a low level of disagreement has been assigned to different grouping. Patient ‘c12’, on the other hand, has been assigned to the same grouping. This may be because the grouping process is sensitive to small changes on the Current View form, particularly in terms of the ‘index problems’ (problems which are related to a specific NICE category). As well as the above examples, each patient in the whole sample was assigned to a grouping using the algorithm detailed in the main report. Two patients could not be grouped due to missing values (in both cases age), of the remaining 56 patients: • 32% were assigned to the same grouping by both practitioners, 34 • 70% were assigned to the same super grouping. Of the 30% of patient who were assigned to different super groupings, none were more than one level apart (that is, no patients were simultaneously assigned to super groupings ‘Getting Advice’ and ‘Getting More Help’). Given the many sources of variation present in this dataset, this seems like a relatively high number of patients being assigned to the same super grouping. Main points: • • • • • The PPD sections filled out by each practitioner range from reasonably similar (Gower distance of around 0.2) to quite distinct but with some agreement (Gower of 0.4). Even patients with a relatively low Gower distance (indicating a high level of agreement) have a number of disagreements between practitioners about the exact nature of the problems presenting. Many of the reasons for this are potentially unrelated to the form, for example if one practitioner has met a patient on more occasions than the other has. Given the sources of variation present, a substantial proportion of practitioners would have assigned patients to the same super grouping (based on the Current View information only, not taking clinical judgement into account). The level of disagreement is again not apparently related to the number of problems identified, and sites vary somewhat (although this is a small, non-random sample). Gower distances for Selected Complexity Factors (SCF), Contextual Problems and Education/Employment/Training. In the above section only the Gower distances for the PPD section of the form were considered. The following boxplots will give some indication of the distances for the other parts of the form. 35 Figure G.6: Distribution of distances between forms for each section of the Current View form. The SCF section has a different scale (‘Yes’ or ‘No’, with ‘Not Known’ counted as ‘No’ in this section) from the other sections, and the number of relevant problems in the SCF section was sometimes very low, which may account for the wide spread in distances. The PPD and CE (which includes Contextual Problems and Education/Employment/Training) sections have a very similar spread of distances, generally lower than the SCF section, so perhaps these sections relatively easier to agree on than the SCF section. 3.6 Measuring disagreement within problems - which problems are more difficult to agree on than others? Until now the overall agreement across all problems has been analysed using Gower distances to judge how closely the two forms for each patient agreed. However, this does not give any indication of how consistently each particular problem is rated. It may be the case, for example, than in general practitioners agree more often on whether a patient is a ‘Looked after child’, than whether they are ‘Anxious generally’ (rather than fitting into one of the other two Anxiety categories). The measure which will be used to give an indication of the level of agreement between practitioners across problems, instead of patients, is called the Intra-class Correlation Coefficient (ICC). If the ICC for a problem description is close to one, this means that practitioners tend to agree, if it is close to zero, they do not. For the ICC to be meaningful there have to be a reasonable number of ratings, so for the more unusual problems it was simply not possible to find the ICC. At the extreme end, 36 ‘Gender discomfort issues’ had no ratings at all, so it is impossible to find out anything about this problem from this sample. The ICC for the items which had more than 20 patients (that is, at least one practitioner used a response that was at least ‘Moderate’ for at least 20 patients) are shown in Figure G.7. 37 Figure G.7: ICCs plus confidence intervals for variables with more than 20 pairs of ratings. 38 One of the sites is a specialist site for patients with OCD, so the apparently high value for OCD cannot be taken at face value here (many of the patients will have been referred to this service because they have OCD, so it will have been less difficult to agree on whether OCD is present or not). Out of the 19 remaining problems or factors, 18 have confidence intervals which overlap 0.5. This is a relatively low ICC score, and is possibly an indication again of the difficulty on agreeing the precise problems that a child or young person may be presenting with. Although the problems in the plot are ordered from highest ICC (and therefore highest reliability) to lowest, most of the confidence intervals overlap each other so it not possible to tell them apart. However, the nature of the sample meant that it was not the same set of raters (practitioners) for each patient. This meant that some extra sources of variation, such as bias associated with a particular rater, could not be investigated. The low reliability then may be due to other factors which cannot be isolated within this sample. Also, as previously mentioned, it is important to remember that many of the patients (around half) were seen at least one month apart, by practitioners who may have had differing prior knowledge of that patient. 3.7 Summary The problems introduced by the nature of the sample have been discussed, including: forms filled out on the basis of different meetings with a patient; practitioners who had seen the patient a different number of times; a set of practitioners that was neither totally random (some practitioners saw more than two patients), nor consistent across all patients (for example, the same practitioners seeing all patients). Also: practitioners rating the same patient all worked at the same site, which may mean that they have developed a collective understanding of how to asses and/or rate a patient. That is, we are unable to investigate variation between ratings due to differing ‘cultures’ between sites. Although these lead to caution when interpreting the results, they were not so insurmountable as to prevent any insights being drawn from the data. It also may be of some value that the forms collected were from practitioners using them with real patients in their usual places of work. The study is less a formal test of the reliability of the Current View form, and more an impression of how consistently forms are filled out by practitioners under the constraints of everyday practice. Looking firstly at the use of ‘not known’, the lack of disagreements was mostly down to practitioners choosing not to use this option. The default category for most practitioners (with one exception) appeared to be ‘none’. Although practitioners did not use ‘not known’ much, they usually disagreed at least once on forms where it was used. Therefore there is some evidence that this option is not being used consistently. Disagreement on the severity and existence of problems was quite varied. Two forms with a low level of disagreement (Gower distance of 0.22) were found to be fairly similar in their overall impression of the patient, forms with a relatively high level (0.41) were quite dissimilar, and seemed to have arisen from quite different meetings. 39 In about one third of cases patients would have been assigned to exactly the same grouping on the basis of the two Current View forms, in over two thirds they would be assigned the same super grouping. Note that this does not take into account practitioner judgement or the shared decision making process which may influence the final grouping in practice. Given the limitations of the data, it may be reasonable to suppose that these figures would be higher in reality. It is also interesting to note that the level of disagreement between forms does not appear to be related to whether patients would be classified in the same way or not (see Table G.19). This is possibly because the grouping process is sensitive to small changes in the Current View form, particularly in relation to the ‘index problems’. A recommendation for future work is to investigate this relationship more methodically. There is some evidence that the Selected Complexity Factors, Contextual Problems and Education/Employment/Training sections of the form are also being filled out inconsistently. It is always to be borne in mind however that distances between the forms could have been caused by factors unrelated to the form itself, such as those mentioned above to do with the nature of the sample (and hence to everyday practice). It is strongly recommended that a more methodical study of the reliability of the Current View form, and of the grouping process in general, be carried out. In particular, a study with a consistent set of raters (practitioners), seeing patients for the first time, and filling out the form on the basis of the same meeting (or recording of a meeting). 40 4. Current View – Validity Validity: Current View Items and Norm-Referenced Outcome Measures (SDQ & RCADS Sub-scales) 4.1 Introduction A vital part of the analysis presented in this report is the Current View tool, as it organises the recording of the type(s) of presenting problem(s) that a clinician judges an individual patient to have at the start of their work with CAMHS, and therefore informs the allocation of each individual into a grouping. While the Current View tool is increasingly used across CAMHS services as part of the CYP-IAPT data collection process, the extent to which it is a valid and reliable tool is not yet fully understood. It therefore seems sensible to assess the extent to which clinician ratings as per the Current View agree with ratings given in response to measures whose psychometric properties are better understood. Suitable comparator measures are not available for all 30 Current View problem descriptors. In this report, we will consider the Strengths and Difficulties Questionnaire (SDQ; Goodman, 1997), and the Revised Child Anxiety and Depression Scale (RCADS; Chorpita et al., 2000a), two of the most widely used measures in CAMHS services in the UK, as well as in the data set used in the present analysis. They have generally shown to be valid and reliable measures (e.g. He et al., 2013; Mathyssek et al., 2013), and consist of several sub-scales. In the analysis that follows, we will make use of the fact that some subscales within the SDQ, as well as all subscales within the RCADS, aim to measure the same psychological problem that is the intended measurement target of one of a set of items within the Current View section “Provisional Problem Description”. The correlation between these specific Current View items and the scores on the corresponding SDQ and RCADS scales gives us an indication of the concurrent validity of the Current View items. 4.2 Method The data used for this analysis consist of data from all available cases within the whole Payment System dataset (not just limited to the closed cases used for the currencies work), that had a recorded Current View and either a recorded SDQ or RCADS measure (or both). We only considered pairs of measures where both measures were completed on the same day, in order to avoid conflating measurement effects with change in the patient’s condition. Sub-scale scores were computed for the SDQ peer relationship difficulties, hyperactivity and conduct problems sub-scales, and scores on the RCADS depression, panic disorder, separation anxiety, social phobia, GAD and OCD sub-scales were transformed to a T-score, using the population norms reported in Chorpita et al., (2000). SDQ scores from measures completed by parents and children are computed and analysed separately. Population norms for the RCADS sub-scales are only available for measures completed by children; therefore only measures recorded as being completed by the child or young person are included in the analysis. Sub-scale scores were computed using a ‘prorating’ procedure, whereby measures with one or two missing items for a given sub-scale are still calculated by taking the average response given to the answered items (this is the procedure recommended by the authors of the measure). 41 Current View items being associated with the sub-scales noted above are those that map neatly onto a single sub-scale. As such, the SDQ emotional difficulties sub-scale was not included for analysis, as it there is no single Current View item designed to measure “emotional problems” in general. Neither was there a Current View equivalent of the SDQ “Prosocial Behaviour” scale. Specific kinds of emotional problems are, however, measured by the subscales of the RCADS, and we will investigate their correlations with the corresponding items on the Current View Form. The Current View Items investigated are displayed in Table G.20 alongside the corresponding SDQ or RCADS measures. 42 Table G.20. Pairing of Current View Item and RCADS/SDQ Sub-scale Current View Question Behavioural difficulties Difficulties sitting still or concentrating Peer relationship difficulties Low-mood (depression) Panics Avoids going out Anxious away from caregivers Anxious generally Feels compelled to do or think things Sub-Scale SDQ Conduct Problems Sub-scale SDQ Hyperactivity Sub-scale SDQ Peer Relationship Sub-scale RCADS Depression Sub-scale RCADS Panic Sub-scale RCADS Social Phobia Sub-scale RCADS Separation Anxiety Sub-scale RCADS GAD Sub-scale RCADS OCD Sub-scale Note: The item in the left hand column is the specific Current View item that is being compared to the specific sub-scale located in the right hand column. Individuals were only included for analysis where the Current View item has been positively completed; where the question was answered with a ‘not known’ response, or was missing a response entirely, this was not taken to mean a response of ‘no problem’ (for the purposes of this analysis). We investigated the correlation between CV items and corresponding scales in two ways: (1) correlational analysis using Spearman’s rank correlation coefficient and Pearson’s product moment correlation coefficient, and (2) linear regression to investigate both linear and curvilinear relationships among the variables. For each pairwise relationship, we fit two linear regression models: Linear Model: = + + , where yi is the SDQ or RCADS scale score of the ith individual, i=1,…,n; xi is the corresponding CV rating of the ith individual, εi is an error term, a and b are regression coefficients to be estimated. Curvilinear Model: = ′+ ′ + ′ + ′ , Where yi and xiare defined as before,xi2is the squared CV rating of the ith individual, ε’Iis again an error term, and a’, b’, and c’are regression coefficients to be estimated. For each pairwise comparison, we conducted an F-test of change in model fit to determine whether there was evidence in favour of the curvilinear model over the linear model, or not. 43 4.3 Results Results are presented graphically below in Figures G.9-14, and the results of statistical analyses are presented in Table G.20. It is important to note that scatterplots are not plotted using the raw data, as this presents difficulties due to the number of points which occupy the same space, making it very difficult to accurately appreciate the relative density of individuals in some areas of the chart. An example of the raw data being plotted directly is presented in Figure G.8a. Instead, an automated algorithm has been applied which ‘jitters’ points which are in precisely the same place (or very nearly) in order to avoid over-plotting of multiple points. An example of the same data as in Figure G.8a, but with this adjustment made, is presented in Figure G.8b. The results demonstrate a variety in the strength of the relationship between Current View items and RCADS/SDQ sub-scales, ranging 0.19 to 0.56 (Spearman’s Rho) for the linear relationship. Seven of the twelve relationships also demonstrate evidence for a curvilinear relationship between the Current View item and the sub-scale, although the improvements in r2 are relatively modest. 44 Figure G.8a (left) and G.8b (right). Example of plotting raw data (left), and with a correction to avoid over-plotting (right). Examples demonstrate the relationship between the depression sub-scale of the RCADS and the low mood question of the Current View. Note that due to the limited number of possible Current View responses, a large number of points on the chart on the left are plotted on-top of each other, corrected in the chart on the right. 45 Figures G.9a (left) and G.9b (right). Relationship between SDQ peer relationship difficulties sub-scale, and clinician reported peer relationship difficulties Current View item (SDQ parent completed left, SDQ child completed right). 46 Figures G.10a (left) and G.10b (right). Relationship between SDQ conduct sub-scale, and clinician reported behavioural difficulties Current View item (SDQ parent completed left, SDQ child completed right). 47 Figures G.11a (left) and G.11b (right). Relationship between SDQ hyperactivity sub-scale, and clinician reported difficulties sitting still or concentrating Current View item (SDQ parent completed left, SDQ child completed right). 48 Figures G.12a (left) and G.12b (right). Relationship between RCADS depression sub-scale (left) T-score, and clinician reported low mood Current View item, and the relationship between RCADS panic sub-scale (right) T-score, and clinician rated panics Current View item. 49 Figures G.13a (left) and G.13b (right). Relationship between RCADS separation anxiety sub-scale (left) T-score and clinician reported anxious away from caregivers Current View item, and the relationship between RCADS social phobia sub-scale (right) T-score and clinician reported avoids going out Current View item. 50 Figures G.14a (left) and G.14b (right). Relationship between RCADS GAD sub-scale (left) T-score and clinician reported anxious generally Current View item, and the relationship between RCADS OCD sub-scale (right) T-score and clinician reported compelled to do or think things Current View item. 51 Table G.20: Correlation coefficients and coefficient of determination for each model presented above7 Model (SDQ / RCADS Sub-scale & Current View Item (& sample size) Linear Model R2 Curvilinear Model R2 Spearman’s Rho Pearson’s R (95% CI) SDQ Peer (Parent) / Peer Relationship Difficulties (n = 3483) 0.40*** 0.41*** (0.38 – 0.43) 0.16 0.17 SDQ Peer (Child) / Peer Relationship Difficulties (n = 3019) 0.37*** 0.38*** (0.35 – 0.42) 0.15 0.15 SDQ Conduct (Parent) / Behavioural Problems (n = 3736) 0.52*** 0.53*** (0.51 – 0.55) 0.28 0.28*** SDQ Conduct (Child) / Behavioural Problems (n = 3131) 0.38*** 0.39*** (0.36 – 0.42) 0.15 0.15** SDQ Hyperactivity (Parent) / Difficulties Sitting Still or Concentrating (n = 3656) 0.52*** 0.50*** (0.47 – 0.52) 0.25 0.26*** SDQ Hyperactivity (Child) / Difficulties Sitting Still or Concentrating (n = 3066) 0.31*** 0.31*** (0.27 – 0.34) 0.09 0.10** RCADS Depression / Low Mood (n = 1461) 0.56*** 0.55*** (0.511 – 0.58) 0.30 0.31*** RCADS Panic / Panics (n = 1496) 0.30*** 0.31*** (0.26 – 0.35) 0.09 0.09 RCADS Separation Anxiety / Anxious Away from Caregivers (n = 1357) 0.24*** 0.25*** (0.20 – 0.30) 0.06 0.06 RCADS Social Phobia / Avoids Going Out (n = 1562) 0.19*** 0.17*** (0.12 – 0.22) 0.03 0.04** RCADS GAD / Anxious Generally (n = 1553) 0.28*** 0.27*** (0.23 – 0.32) 0.07 0.08* RCADS OCD / Compelled to do or Think Things (n = 1490) 0.25*** 0.27*** (0.22 – 0.31) 0.07 0.07 7 (* = p < 0.05, ** = p < 0.01, *** = p < 0.001), and coefficient of determination of the curvilinear model (where significance codes represent the significance of the improvement in model fit resulting from the inclusion of the curvilinear term). 52 4.4 Conclusions The results presented here suggest that we can be reasonably confident that the ratings (for these specific questions) given by clinicians using the Current View tool are correlated to the ratings given by children and parents on commonly used norm-referenced measures, although it is very important to note that the strength of this relationship is not uniform and varies according to each sub-scale. The correlation between clinician rated low mood and the depression sub-scale of the RCADS for shows a moderate fit, while the relationship between the RCADS OCD sub-scale and the Current View ‘feels compelled to do or think’ item is much weaker (albeit statistically significant). Another interesting trend to note in the correlations for the SDQ sub-scales, which demonstrate that parent reported scores are consistently better correlated with clinician rated Current View scores than child reported SDQ scores. This may help to partially explain why (with the exception of the depression / low mood correlation), the correlations between RCADS sub-scales (which are all child reported), are much more modest, compared to the SDQ scores). One final important feature to note is the evidence that the relationship between parent/child reported SDQ/RCADS scores and clinician rated Current View items is not strictly linear, but actually traces a curvilinear path. This is more apparent in some places than in others (the relationship between SDQ hyperactivity and the ‘difficulties sitting still or concentrating’ Current View item shows it particularly clearly), but where a curve is apparent it consistently in the direction of a ‘levelling off’ as the severity of the condition increases. What this means in practical terms is that the increase in the severity of a patients’ condition (according to responses to an SDQ or RCADS) shows a more pronounced increase as the clinician ratings increase from 0 (mild) to 1 (mild), than the increase in severity accorded by the increase in clinician ratings from 1 to 2 (moderate), and the increase in severity as clinician ratings increase from 2 to 3 (severe), is very small (and in some cases the severity according to SDQ/RCADS actually decreases as clinician ratings increase from 2 to 3, although this is likely to be at least partially the result of the relatively small number of patients with a clinician rating of severe). This implies that the ratings system in the Current View does not always represent a linear increase in the severity of a patients’ condition, and the difference between ratings at the top of the scale represent a finer change in the actual severity of a patients’ condition than the differences between ratings at the bottom of the scale. While the results presented here demonstrate that these items of the Current View show a reasonably good association with ratings provided by children and their parents, these results only refer to the 9 specific questions addressed, and should not be taken as a judgement on the validity of the Current View as a whole. We recommend further research into the validity of Current View Ratings, using either established psychometric measures or clinical diagnoses, or both. 53 References Chorpita, B. F., Yim, L., Moffitt, C., Umemoto, L. A., & Francis, S. E. (2000). Assessment of symptoms of DSM-IV anxiety and depression in children: A Revised Child Anxiety and Depression Scale. Behaviour Research and Therapy, 38(8), 835-855. Goodman, R. (1997). The Strengths and Difficulties Questionnaire: A research note. Journal of Child Psychology and Psychiatry, 38, 581-586. Methyssek, C. M., Olino, T. M., Hartman, C. A., Ormel, J., Verhlust, F. C., & Van Oort, F. V. A. (2013). Does the Revised Child Anxiety and Depression Scales (RCADS) measures anxiety symptoms consistently across adolescence? The TRAILS study. International Journal of Research Methods in Psychiatric Research, 22(1), 27-35. He, J-P., Burstein, M., Schmitz, A., & Merikangas, K. R. (2013). The Strengths and Difficulties Questionnaire (SDQ): The factor structure and scale validation in U.S. adolescents. Journal of Abnormal Child Psychology, 41, 583-595. 54