From: AAAI Technical Report SS-93-02. Compilation copyright © 1993, AAAI (www.aaai.org). All rights reserved.

Three-Level Knowledge Representation of Predicate-Argument Mapping for Multilingual Lexicons

Chinatsu Aone and Doug McKee

Systems Research and Applications
2000 15th Street North
Arlington, VA 22201

aonec@sra.com, mckeed@sra.com

lexical

1

Introduction

levels,

automatic acquisition

arise from predicate-argument mapping mismatches.

Namely, verbs in different languages denoting the

Constructing a semantic concept hierarchy covers same semantic concept do not always represent their

only part of the problem in building any knowledge- thematic roles with the same syntactic structures (cf.

based natural language processing (NLP) system:

McCord[17], Oorr [10]). For example, "I like Mary"

Language understanding requires mapping from syn- is translated into "Maria me gusta a m{", where the

tactic structures into conceptual (i.e. interlingua)

subject and the object in the English sentence are

representation (henceforth verb-argument mapping), the object and the subject in the Spanish sentence.

while language generation requires the inverse map- Furthermore, a verb with two different predicateping. Jacobs [13] proposed a solution in which argument mappings may correspond to two different

he augmented his concept hierarchy in the knowl- verbs in another language. For example, the Enedge base with verb-argument mapping information.

glish word "break," when AGENTis expressed as

While his solution works for monolingual understand- in "John broke the window," is mapped to Japanese

ing/generation, associating each semantic concept of word "kowasu", while without AGENT,as in "The

a verb with English specific mapping information in window broke," "break" should map to "kowareru".

the knowledge base is not ideal for an interlinguabased NLP system, since verb-argument mapping

varies greatly amonglanguages.

2 Three-Level Representation

In this paper, we discuss how the lexicon of

our interlingua-based

NLPsystem 1 abstracts the Each lexical sense of a verb in our lexicon encodes

language-dependent portion of predicate-argument

its semantic meaning (i.e. semantic concept), its demapping information from the core meaning of verb fault predicate-argument mappingtype (i.e. situation

senses (i.e. semantic concepts as defined in the knowl- type), and any word-specific mappingexceptions (i.e.

edge base). We also show how we represent this

idiosyncrasies) in addition to its morphological and

mapping information in terms of cross-linguistically

syntactic information. In the following, these three

generalized mappingtypes called situation types and levels are discussed in detail.

word sense-specific idiosyncrasies. This three-level

representation

enables us to keep the languageSituation

Types

2.1

independent knowledgebase intact and make efficient

use of lexical data by inheritance. Wetake advantage Eachof a verb’s lexical senses is classified into one of

of large multilingual corpora to automatically acquire the four default predicate-argument mapping types

language- and domain-specific lexical information.

called situation types. As shown in Table 1, situaIn addition, this representation framework eas- tion types of verbs are defined by two kinds of inily deals with some of the MTdivergences which formation: 1) the number of subcategorized NPor

1 Ourinterlingua-basedNLPsystemconsists of Solomon,a arguments and 2) the types of thematic roles which

broad-coverage

text understandingsystemfor English, Spanish these arguments should or should not map to. Since

and Japanese, and an experimental generation system which this kind of information is applicable to verbs of any

constructssentencesfrominterlingua representations.

language, situation types are language-independent

predicate-argument mapping types. Thus, in any lan- whole sentence rather than a verb itself (cf. Krifka

guage, a verb of type CAUSED-PROCESS

has two [15], Dorr [11], Moensand Steedman [20]). For inarguments which map to AGENTand THEMEin the stance, as shown below, the same verb can be classidefault case (e.g. "kill"). A verb of type PROCESS-fied into two different aspectual classes (i.e. activity

OR-STATEhas one argument whose thematic role

and accomplishment) depending on the types of obis THEME,and it does not allow AGENTas one ject NP’s or existence of certain PP’s.

of its thematic roles (e.g. "die"). An AGENTIVEACTIONverb also has one argument but the argu- (1) a. Sue drank wine for/*in an hour.

b. Sue drank a bottle of wine *for/in an hour.

ment maps to AGENT(e.g. "look"). Finally,

INVERSE-STATEverb has two arguments which (2) a. Harry climbed for/*in an hour.

b. Harry climbed to the top *for/in an hour.

map to THEMEand GOAL; it does not allow

AGENT

for its thematic role (e.g. "see").

Situation types are intended to address the issue of

Althoughverbs in different languages are classified

into the same four situation types using the same cross-linguistic predicate-argument mappinggeneraldefinition,

mapping rules which map grammatical ization, rather than the semantics of aspect.

functions (i.e. subject, object, etc.) in the syntactic

structures 2 to thematic roles in the semantic strucSemantic

Concepts

2.2

tures maybe different from one language to another.

This is because languages do not necessarily express Each lexical meaning of a verb is represented by

the same thematic roles with the same grammati- a semantic concept (or frame) in our languagecal functions. This mappinginformation is language- independent knowledge base, which is similar to the

one described in Onyshkevych and Nirenburg [22].

specific (cf. Nirenburg and Levin [21]).

Each verb frame has thematic role slots, which have

The default mapping rules for the four situation

TYPE and MAPPING. A TYPE facet

types are shown in Table 2. They are nearly iden- two facets,

tical for the three languages (English, Spanish, and value of a given slot provides a constraint on the type

of objects which can be the value of the slot. This

Japanese) we have analyzed so far. The only difference is that in Japanese the THEME

of an INVERSE- information is used during the semantic disambiguaSTATEverb is expressed by marking the object NP tion process and during Debris Semantics, a semantically based syntax-to-semantics mapping strategy

with a particle "-ga", which is usually a subject

3

employed

whena parse fails (cf. [1]).

marker (cf. Kuno[16]). 4 So we add such information

In

the

MAPPINGfacets,

we have encoded some

to the INVERSE-STATE

mapping rule for Japanese.

cross-linguistically

general

predicate-argument mapGeneralization expressed in situation types has saved

ping

information.

For

example,

we have defined

us from defining semantic mappingrules for each verb

that

all

the

subclasses

of

#COMMUNICATIONsense in each language, and also made it possible to

EVENT¢/: (e.g.

#REPORT#, #CONFIRM#, etc.)

acquire them from large corpora automatically.

map

their

sentential

complements (SENT-COMB)

This classification system has been partially deTHEME,

as

shown

below.

rived from Vendler and Dowty’s aspectual classifications [24, 12] and Talmy’s lexicalization patterns (#COMMUNICATION-EVENT#

[23]. For example, all AGENTIVE-ACTION

verbs

(AKO #DYNAMIC-SITUATION#)

(AGENT (TYPE

#PERSON#

#ORGANIZATION#))

are so-called activity verbs, and so-called stalive verbs

(THEME (TYPE #SITUATION#

#ENTITY#)

fall under either INVERSE-STATE

(if transitive)

(MAPPING (SENT-COMB W)))

(GOAL

(TYPE

#PERSON#

#ORGANIZATION#)

5 However,

PROCESS-OR-STATE

(if intransitive).

(MAPPING (P-AP~G GOAL))))

the situation types are not for specifying the semantics of aspect, which is actually a property of the

Also, by grouping prepositions (for languages like

English

and Spanish) and postpositions (for those

2 We use structures

sinfilar

to LFG’s f-structures.

However,

like Japanese) into semantically related concepts, we

unlike those defined in Bresnan [4] or used in KBMT-89 [5],

we normalize passive constructions.

express other default mapping rules in the knowl3There is a debate

over whether

the NP with "ga" is a

edge base language-independently. For example, the

subject

or object.

However, our approach

can accolmnodate

MAPPINGfacet of the INSTRUMENT

slot in Figeither analysis.

4The GOAL of some INVERSE-STATE verbs

in Japanese

ure 1 requires that the thematic role INSTRUMENT

call be expressed

by a "hi" postpositional

phrase.

However,

of the event @BREAK@

be mapped from a P-ARG

as Kuno [16] points out, since this is an idiosyncratic

phe(i.e. a pre/postpositional argument in the syntactic

nomenon, such infomnation

does not go to the default

mapping

structure) whose pre/postposition

denotes INSTRUrule but to the lexicon.

5h~ both cases, the reverse does not hold.

MENT.In the following three sentences in English,

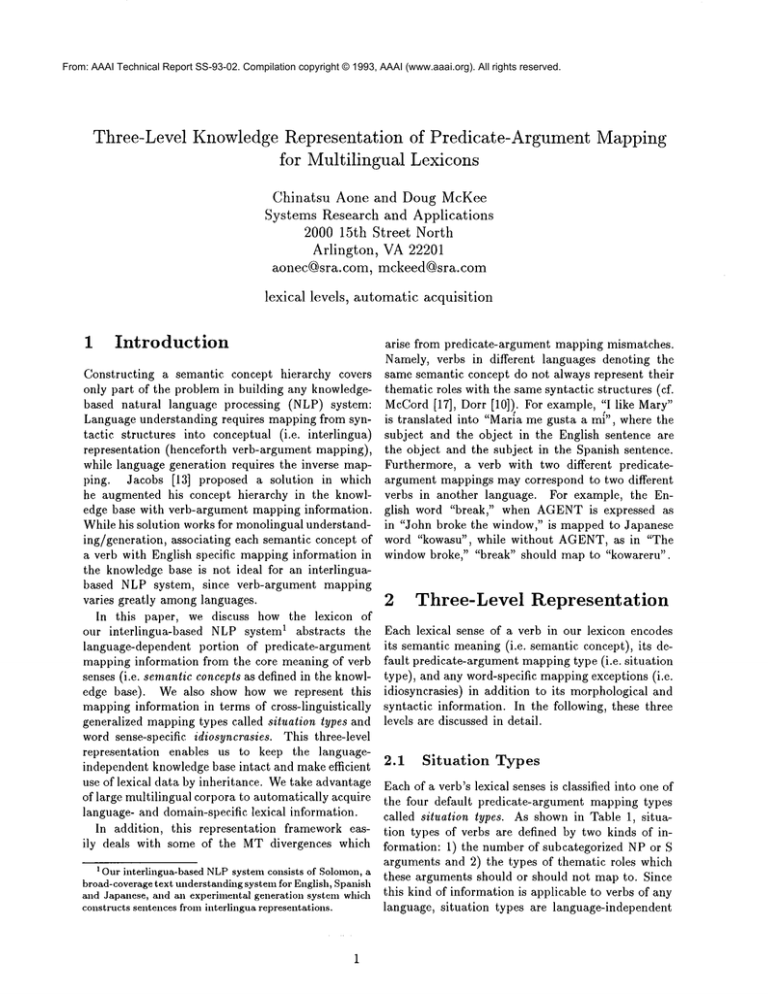

CAUSED-PROCESS

PROCESS-OR-STATE

AGENTIVE-ACTION

INVERSE-STATE

# of required NP or S arguments

2

1

1

2

default thematic roles

Agent Theme

Theme

Agent

Goal Theme

prohibited thematic roles

Agent

Agent

Table 1: Definitions of Situation Types

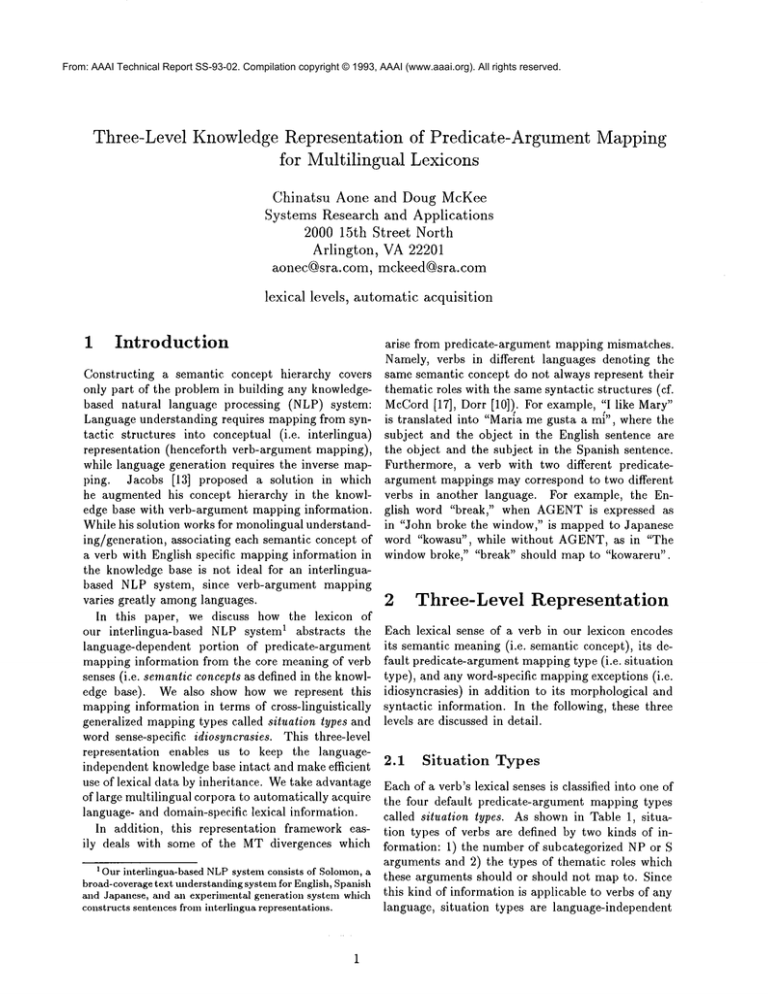

CAUSED-PROCESS

AGENT

THEME

PROCESS-OR-STATE THEME

AGENTIVE-ACTION AGENT

INVERSE-STATE

GOAL

THEME

English/Spanish Mapping

Japanese Mapping

(SURFACE SUBJECT)

(SURFACE SUBJECT)

(SURFACE OBJECT)

(SURFACE OBJECT)

SURFACESUBJECT)

(SURFACE SUBJECT)

SURFACESUBJECT)

(SURFACE SUBJECT)

SURFACESUBJECT)

(SURFACE SUBJECT)

(SURFACE OBJECT)

(SURFACEOBJECT) (PARTICLE "GA")

Table 2: Default MappingRules for Three Languages

Note that our representation

can also handle cases

where two internal arguments "flip-flop".

For example, in (5), "infected"

maps its object NP to GOAL

and the PP to THEMEidiosyncratically,

while the

Japanese counterpart

"utsushita"

maps its object

NP ("infuruenza

uirusu-o")

to THEMEand the

("saru-ni")

to GOAL.6 Such idiosyncratic

mapping

for "infect" is stated in the lexicon as in Figure 2.

(#BREAK#

(AKO #ANTI-CREATION-EVENT#)

(AGENT (TYPE #CREATURE# #ORGANIZATION#))

(THEME (TYPE #ENTITY#))

(INSTRUMENT(TYPE #PHYSICAL-OBJECT#)

(MAPPING(P-ARG INSTRUMENT))))

Figure 1: KB entry #BREAK#

Spanish and Japanese, the words which indicate the

instrument used for the #BREAK#event are different (i.e. "with", "con", "-de"). However, by assigning

the same semantic concept INSTRUMENTto each of

these words in the lexicons, the same default mapping

rule is shared across languages.

(3) John broke the vase with a hammer.

Juan rompi5 el vaso con un martillo.

John-wa kanazuchi-de kabin-o kowashita.

2.3

Idiosyncrasies

Idiosyncrasiesslots in the lexicon specify wordsensespecific idiosyncratic phenomenawhich cannot be

(5) a. The researchers

infected

the monkeys with flu

virus.

b. kenkyusha-tachi-wasaru-ni infuruenza uirusu-o

utsushita.

Word-specific (hence language-specific) TYPEidiosyncrasies can be also stated in IDIOSYNCRASIES

slots in the lexicon to deal with someof the semantic mismatches (cf. Barnett et al. [2], Kameyama

el al. [14]). For example,Japanese verbs like "shibousuru (die)" and "shiyousuru (use)" take only

ple as their subjects while "shinu (die)" and "tsukau

(use)" take any animate subjects. Suchword-specific

semantic constraint

lows and overrides

knowledge base.

is defined in the lexicon as folthe constraint

inherited from the

captured by semantic concepts or situation types. In (SHIBOUSURU

(CATEGORY.V)

particular,

subcategorized pre/postpositions

of verbs

(SENSE-NAME.SHIBOUSURU-1)

are specified here. For example, the fact that "look"

(INFLECTION-CLASS.IRS)

(SEMANTIC-CONCEPT#DIE#)

denotes its THEMEargument by the preposition

(IDIOSYNCRASIES (THEME(TYPE #PERSON#)))

"at" is captured by specifying idiosyncrasies as shown

(SITUATION-TYPEPROCESS))

in Figure 2. As discussed in the next section, we derive this kind of word-specific information automati2.4

Sharing

Information

through

Incally from corpora.

heritance

In addition, word-specific mapping rules are stated

in idiosyncrasies slots to deal with thematic divergenTheinformation stored in the three levels discussed

cies (e.g. (4)). In Figure 2, a Spanish entry "gustar"

aboveis shared throughinheritance (cf. Jaeobs [13]).

is shown.

6The Japanese mappingis done by using the situation type

of the verb (i.e. CAUSED-PROCESS)

and the semantic con(4) a. li ke Ma

ry.

cept assigned to the postposition "ni" (i.e. GOAL)

(cf. Section

b. Maria me gusta a m~.

2.2).


    (LOOK (CATEGORY . V)
          (SENSE-NAME . LOOK-1)
          (SEMANTIC-CONCEPT #LOOK#)
          (IDIOSYNCRASIES
            (THEME (MAPPING (P-ARG "AT"))))
          (SITUATION-TYPE AGENTIVE-ACTION))

    (GUSTAR (CATEGORY . V)
            (SENSE-NAME . GUSTAR-1)
            (SEMANTIC-CONCEPT #LIKE#)
            (IDIOSYNCRASIES
              (THEME (MAPPING (SURFACE SUBJECT)))
              (GOAL (MAPPING (SURFACE OBJECT))))
            (SITUATION-TYPE INVERSE-STATE))

    (INFECT (CATEGORY . V)
            (SENSE-NAME . INFECT-1)
            (SEMANTIC-CONCEPT #INFECT#)
            (IDIOSYNCRASIES
              (THEME (MAPPING (P-ARG "WITH")))
              (GOAL (MAPPING (SURFACE OBJECT))))
            (SITUATION-TYPE CAUSED-PROCESS))

Figure 2: Lexical entries for "look", "gustar", and "infect"
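The way the entries in Figure 2 interact with the default rules of Table 2 can be illustrated with a small Python sketch. It is only a hedged illustration, not the system's implementation: the dictionary layout, the function names `role_mapping` and `interpret`, the `P-ARG:AT` label, and the English `LIKE` entry (added for contrast with "gustar") are all assumptions introduced here.

```python
# Default mapping rules (English/Spanish column of Table 2):
# thematic role -> grammatical function, per situation type.
DEFAULT_MAPPING = {
    "CAUSED-PROCESS": {"AGENT": "SUBJECT", "THEME": "OBJECT"},
    "PROCESS-OR-STATE": {"THEME": "SUBJECT"},
    "AGENTIVE-ACTION": {"AGENT": "SUBJECT"},
    "INVERSE-STATE": {"GOAL": "SUBJECT", "THEME": "OBJECT"},
}

# Entries modeled on Figure 2. LIKE is a hypothetical English entry with no
# idiosyncrasies; "P-ARG:AT" is an ad hoc label for a prepositional argument.
LEXICON = {
    "LIKE": {"concept": "#LIKE#", "situation_type": "INVERSE-STATE",
             "idiosyncrasies": {}},
    "GUSTAR": {"concept": "#LIKE#", "situation_type": "INVERSE-STATE",
               "idiosyncrasies": {"THEME": "SUBJECT", "GOAL": "OBJECT"}},
    "LOOK": {"concept": "#LOOK#", "situation_type": "AGENTIVE-ACTION",
             "idiosyncrasies": {"THEME": "P-ARG:AT"}},
}


def role_mapping(entry):
    """Start from the situation-type defaults, then let idiosyncrasies override."""
    mapping = dict(DEFAULT_MAPPING[entry["situation_type"]])
    mapping.update(entry["idiosyncrasies"])
    return mapping


def interpret(verb, functions):
    """Map grammatical functions (function -> filler) to a role frame."""
    entry = LEXICON[verb]
    frame = {"ISA": entry["concept"]}
    for role, function in role_mapping(entry).items():
        if function in functions:
            frame[role] = functions[function]
    return frame


english = interpret("LIKE", {"SUBJECT": "I", "OBJECT": "MARY"})
spanish = interpret("GUSTAR", {"SUBJECT": "MARIA", "OBJECT": "ME"})
print(english)
print(spanish)
```

Under these assumptions, the English and Spanish sentences in (4) receive the same role assignment (the liker as GOAL, the liked entity as THEME) even though subject and object are swapped on the surface.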

The idiosyncrasies take precedence over the situation types and the semantic concepts, and the situation types over the semantic concepts. For example, two mapping rules derived by inheritance for "break" are shown in Figure 3. Notice that the semantic TYPE restriction and INSTRUMENT role stored in the knowledge base are also inherited at this time.

By separating language-specific predicate-argument mapping information (i.e. situation types and idiosyncrasies) from semantic concepts, we can capture predicate-argument mapping divergences without proliferating semantic concepts. For instance, we generate Japanese sentences from English within this framework in the following way. Given a semantic representation for the sentence "The window broke" (cf. Figure 4, detail omitted), a verb is chosen from the Japanese lexicon whose semantic concept is #BREAK# and which expresses its THEME but not its AGENT, namely "kowareru", which has situation type PROCESS-OR-STATE. On the other hand, given a semantic representation for the sentence "John broke the window" (cf. Figure 5), a verb whose semantic concept maps to #BREAK# and which expresses both its AGENT and THEME, namely "kowasu", which has situation type CAUSED-PROCESS, is chosen.

    (BREAK.1
      (ISA #BREAK#)
      (THEME WINDOW.2)
      (TENSE PAST))

Figure 4: "The window broke."

    (BREAK.5
      (ISA #BREAK#)
      (AGENT JOHN.6)
      (THEME WINDOW.7)
      (TENSE PAST))

Figure 5: "John broke the window."

3 Automatic Acquisition from Corpora

In order to expand our lexicon to the size needed for broad coverage and to be able to tune the system to specific domains quickly, we have implemented algorithms to automatically build multilingual lexicons from corpora. The three-level representation described here lends itself to automatic acquisition. In this section, we discuss how the situation types and lexical idiosyncrasies are determined for verbs.

Our overall approach is to use simple robust parsing techniques that depend on a few language-dependent syntactic heuristics (e.g. in English and Spanish, a verb's object usually directly follows the verb), and a dictionary for part of speech information. We have used these techniques to acquire information from English, Spanish, and Japanese corpora varying in length from about 25000 words to 2.7 million words.

3.1 Acquiring Situation Type Information

We use two surface features to restrict the possible situation types of a verb: the verb's transitivity rating and its subject animacy.

The transitivity rating of a verb is defined to be

[Figure 3 diagram: the KB entry #BREAK#, the situation types CAUSED-PROCESS and PROCESS-OR-STATE, and the lexicon entry for the verb "break" are combined into two sets of role mappings.]

Figure 3: Information from the KB, the situation type, and the lexicon all combine to form two predicate-argument mappings for the verb "break."
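The precedence order described for Figure 3 (idiosyncrasies over situation types, situation types over semantic concepts) can be sketched as a three-step merge. This is a minimal Python sketch under stated assumptions: the data layout and the function `resolve` are hypothetical, and dropping a prohibited role from the inherited frame is one possible reading of the "prohibited thematic roles" row of Table 1, not a documented behavior of the system.

```python
import copy

# Level 1: knowledge base frame, following Figure 1.
KB = {
    "#BREAK#": {
        "AGENT": {"TYPE": ["#CREATURE#", "#ORGANIZATION#"]},
        "THEME": {"TYPE": ["#ENTITY#"]},
        "INSTRUMENT": {"TYPE": ["#PHYSICAL-OBJECT#"],
                       "MAPPING": ("P-ARG", "INSTRUMENT")},
    }
}

# Level 2: situation-type defaults (subset of Tables 1 and 2).
SITUATION_TYPES = {
    "CAUSED-PROCESS": {
        "mapping": {"AGENT": ("SURFACE", "SUBJECT"),
                    "THEME": ("SURFACE", "OBJECT")},
        "prohibited": set(),
    },
    "PROCESS-OR-STATE": {
        "mapping": {"THEME": ("SURFACE", "SUBJECT")},
        "prohibited": {"AGENT"},
    },
}


def resolve(concept, situation_type, idiosyncrasies=None):
    """Merge the three levels; later levels override earlier ones."""
    slots = copy.deepcopy(KB[concept])            # inherit TYPE and MAPPING facets
    rules = SITUATION_TYPES[situation_type]
    for role in rules["prohibited"]:              # assumption: prohibited roles are dropped
        slots.pop(role, None)
    for role, mapping in rules["mapping"].items():
        slots.setdefault(role, {})["MAPPING"] = mapping
    for role, facets in (idiosyncrasies or {}).items():   # level 3: word-specific
        slots.setdefault(role, {}).update(facets)
    return slots


caused = resolve("#BREAK#", "CAUSED-PROCESS")
process = resolve("#BREAK#", "PROCESS-OR-STATE")
print(caused["THEME"]["MAPPING"])    # ('SURFACE', 'OBJECT')
print(process["THEME"]["MAPPING"])   # ('SURFACE', 'SUBJECT')
```

In both results the INSTRUMENT slot keeps the TYPE restriction and P-ARG mapping inherited from the knowledge base, which mirrors the observation that those facets are inherited along with the situation-type defaults.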

[Figure 6 plot: transitivity ratings for CP/IS, ambiguous, and AA/PS verbs, and subject animacy ratings for CP/AA, ambiguous, and IS/PS verbs, each plotted on an axis from 0.0 to 1.0.]

Figure 6: This graph shows the accuracy of the Transitivity and Subject Animacy metrics.

| verb | occs | TR | SA |
|---|---|---|---|
| SUFFICE | 8 | 0.6250 | 0.0000 |
| TIME | 15 | 0.8333 | 1.0000 |
| TRAIN | 20 | 1.0000 | 1.0000 |
| WRAP | 22 | 0.7222 | 0.6667 |
| SORT | 25 | 0.4211 | 1.0000 |
| UNITE | 27 | 0.5833 | 1.0000 |
| TRANSPORT | 28 | 0.8571 | 0.6667 |
| SUSTAIN | 32 | 0.9062 | 0.6842 |
| SUBSTITUTE | 33 | 0.7500 | 0.5000 |
| TARGET | 36 | 0.7778 | 0.8000 |
| STORE | 36 | 0.9091 | 1.0000 |
| STEAL | 36 | 0.9167 | 0.6667 |
| SHUT | 36 | 0.2400 | 0.5000 |
| STRETCH | 53 | 0.5278 | 0.5000 |
| STRIP | 57 | 0.7609 | 0.8571 |
| THREATEN | 58 | 0.8793 | 0.4419 |
| WEAR | 61 | 0.8033 | 0.6667 |
| TREAT | 77 | 0.8052 | 0.8000 |
| TERMINATE | 79 | 0.9726 | 1.0000 |
| WEIGH | 81 | 0.2069 | 0.5294 |
| TEACH | 82 | 0.7794 | 0.6875 |
| SURROUND | 85 | 0.8000 | 0.6667 |
| TOTAL | 97 | 0.0515 | 0.2759 |
| VARY | 112 | 0.1354 | 0.0294 |
| WAIT | 130 | 0.1923 | 1.0000 |
| SPEAK | 139 | 0.1667 | 0.7500 |
| SURVIVE | 146 | 0.4754 | 0.3846 |
| UNDERSTAND | 180 | 0.6946 | 0.8684 |
| SURGE | 188 | 0.0182 | 0.3125 |
| SUPPLY | 188 | 0.7176 | 0.8571 |
| SIT | 199 | 0.0625 | 0.7027 |
| TEND | 200 | 0.8594 | 0.4340 |
| BREAK | 219 | 0.4771 | 0.5000 |
| WRITE | 243 | 0.4637 | 0.9123 |
| WATCH | 268 | 0.7069 | 0.8462 |
| SUCCEED | 277 | 0.5379 | 0.8899 |
| STAY | 300 | 0.2156 | 0.6604 |
| STAND | 310 | 0.2841 | 0.7237 |
| TELL | 368 | 0.8054 | 0.8101 |
| SPEND | 445 | 0.3823 | 0.8125 |
| SUPPORT | 454 | 0.8486 | 0.5370 |
| SUGGEST | 570 | 0.7782 | 0.5918 |
| TURN | 852 | 0.3418 | 0.5891 |
| START | 890 | 0.3474 | 0.6221 |
| LOOK | 1084 | 0.1718 | 0.6520 |
| THINK | 1227 | 0.7602 | 0.9237 |
| TRY | 1272 | 0.7904 | 0.8743 |
| WANT | 1659 | 0.8559 | 0.8787 |
| USE | 2211 | 0.8416 | 0.7725 |
| TAKE | 2525 | 0.7447 | 0.5933 |

Pred.

(IS)

ST

Correct ST

IS)

CP)

CP PS)

(CP)

(CP AA)

(CP AA)

(CP)

(CP)

(CP PS)

(CP)

(CP)

(CP)

(cP is)

CP IS AA PS)

CP IS AA PS)

(CP

/i%~ IIsS/

(CP IS)

(CP IS)

(CP IS)

(is ps)

(IS PS)

(CP IS)

(CP)

(CP IS)

(IS)

CP)

CP PS)

(CP PS)

(is)

(CP IS)

(IS PS)

CP

/ IS

CP IS

PS)

(IS PS)

IS AA

(cp)

(CP)

(CP PS)

(CP PS)

AA)

AA cp)

IS PS)

IS)

PS)

cPisAA;

t

ICP

IS PS)

CP IS)

(PS)

(CP IS)

(AA PS)

(IS)

(IS PS)

(CP IS AA PS)

CP IS)

CP IS AA PS)

(CP IS AA PS)

(CP IS AA PS)

CP IS)

CP IS AA PS)

(IS)

(IS)

IS PS)

CP IS AA PS)

(CP IS AA PS)

(CP IS

cp)

AA PS)

CP IS)

(CP PS)

(CP AA)

OP)

CP PS)

(PS)

(PS CP AA)

(CP)

(CP)

(CP IS)

(CP IS)

PS

l

I

I

(AA PS)

is)

Prepositional

Idio

at

up over in with

out

for

on

from

up for

over into out from

from into of

over

over

as

on with into

at

at

from over

for up

out at up

with

on with at out in up

up into out

off

out over

out up on with at

up at as out on

on over

out into up over

with off out

at into for up

(Is)

(cP)

(CP IS)

(IS)

(CP IS)

over off out into up

Table 3: Automatically Derived Situation Type and Idiosyncrasy Data
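The threshold procedure of Section 3.1 that turns a transitivity rating (TR) and a subject animacy (SA) into a set of candidate situation types can be sketched as below. This is a hedged Python illustration: the function name `predict_situation_types` is an assumption, the abbreviations CP, IS, AA, and PS follow the table and Figure 6, and the sample inputs are the TR and SA values read from the SUFFICE, BREAK, and SURGE rows.

```python
ALL_TYPES = {"CP", "IS", "AA", "PS"}  # CAUSED-PROCESS, INVERSE-STATE,
                                      # AGENTIVE-ACTION, PROCESS-OR-STATE


def predict_situation_types(transitivity, subject_animacy):
    """Start with every situation type and discard those ruled out by thresholds."""
    candidates = set(ALL_TYPES)
    if transitivity > 0.6:        # mostly transitive: not AA or PS
        candidates -= {"AA", "PS"}
    if transitivity < 0.1:        # almost never transitive: not CP or IS
        candidates -= {"CP", "IS"}
    if subject_animacy < 0.6:     # subject rarely animate: no AGENT subject
        candidates -= {"CP", "AA"}
    return candidates


print(sorted(predict_situation_types(0.6250, 0.0000)))  # SUFFICE
print(sorted(predict_situation_types(0.4771, 0.5000)))  # BREAK
print(sorted(predict_situation_types(0.0182, 0.3125)))  # SURGE
```

Because each test only removes candidates, a verb with mid-range transitivity and high animacy keeps all four types, which corresponds to the ambiguous predictions in the table.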


the number of transitive occurrences in the corpus divided by the total occurrences of the verb. In English, a verb appears in the transitive when either:

• the verb is directly followed by a noun, determiner, personal pronoun, adjective, or wh-pronoun (e.g. "John owns a cow.")

• the verb is directly followed by a "THAT" as a subordinating conjunction (e.g. "John said that he liked cheese.")

• the verb is directly followed by an infinitive (e.g. "John promised to walk the dog.")

• the verb past participle is preceded by "BE," as would occur in a passive construction (e.g. "The apple was eaten by the pig.")

For Spanish, we use a very similar algorithm, and for Japanese, we look for noun phrases with an object marker near and to the left of the verb. A high transitivity is correlated with CAUSED-PROCESS and INVERSE-STATE while a low transitivity correlates with AGENTIVE-ACTION and PROCESS-OR-STATE. Table 3 shows 50 verbs and their calculated transitivity rating. Figure 6 shows that for all but one of the verbs that are unambiguously transitive the transitivity rating is above 0.6. The verb "spend" has a transitivity rating of 0.38 because most of its direct objects are numeric dollar amounts. Phrases which begin with a number are not recognized as direct objects, since most numeric amounts following verbs are adjuncts as in "John ran 3 miles."

We define a verb's subject animacy to be the number of times the verb appears with an animate subject over the total occurrences of the verb where we identified the subject. Any noun or pronoun directly preceding a verb is considered to be its subject. This heuristic fails in cases where the subject NP is modified by a PP or relative clause as in "The man under the car wore a red shirt." We have only implemented this metric for English. The verb's subject is considered to be animate if it is any one of the following:

• A personal pronoun ("it" and "they" were excluded, since they may refer back to inanimate objects.)

• A proper name

• A word under "agent" or "people" in WordNet (cf. [19])

• A word that appears in a MUC-4 template slot that can be filled only with humans (cf. [9])

Verbs that have a low subject animacy cannot be either CAUSED-PROCESS or AGENTIVE-ACTION, since the syntactic subject must map to the AGENT thematic role. A high subject animacy does not correlate with any particular situation type, since several stative verbs take only animate subjects (e.g. perception verbs).

The predicted situation types shown in Figure 6 were calculated with the following algorithm:

1. Assume that the verb can occur with every situation type.

2. If the transitivity rating is greater than 0.6, then discard the AGENTIVE-ACTION and PROCESS-OR-STATE possibilities.

3. If the transitivity rating is below 0.1, then discard the CAUSED-PROCESS and INVERSE-STATE possibilities.

4. If the subject animacy is below 0.6, then discard the CAUSED-PROCESS and AGENTIVE-ACTION possibilities.

We are planning several improvements to our situation type determination algorithms. First, because some stative verbs can take animate subjects (e.g. perception verbs like "see", "know", etc.), we sometimes cannot distinguish between INVERSE-STATE or PROCESS-OR-STATE and CAUSED-PROCESS or AGENTIVE-ACTION verbs. This problem, however, can be solved by using algorithms by Brent [3] or Dorr [11] for identifying stative verbs.

Second, verbs ambiguous between CAUSED-PROCESS and PROCESS-OR-STATE (e.g. "break", "vary") often get inconclusive results because they appear transitively about 50% of the time. When these verbs are transitive, the subjects are almost always animate and when they are intransitive, the subjects are nearly always inanimate. We plan to recognize these situations by calculating animacy separately for transitive and intransitive cases.

3.2 Acquiring Idiosyncratic Information

We automatically identify likely pre/postpositional argument structures for a given verb by looking for pre/postpositions in places where they are likely to attach to the verb (i.e. within a few words to the right for Spanish and English, and to the left for Japanese). When a particular pre/postposition appears here much more often than chance (based on either Mutual Information or a chi-squared test [7, 6]), we assume that it is a likely argument. A very similar strategy works well at identifying verbs that take sentential complements by looking for complementizers (e.g. "that", "to") in positions of likely attachment. Some examples are shown in Tables 3 and 4. The details of the exact algorithm used for English are contained in McKee and Maloney [18]. Areas for improvement include distinguishing between cases where a verb takes a prepositional argument, a prepositional particle, or a common adjunct.

| word | possible clausal complements |
|---|---|
| know | THATCOMP |
| vow | THATCOMP, TOCOMP |
| eat | |
| want | TOCOMP |
| resume | INGCOMP |

Table 4: English Verbs which Take Complementizers
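The "more often than chance" test of Section 3.2 can be illustrated with pointwise mutual information in the sense of Church and Hanks [7]. The Python sketch below is an assumption-laden illustration rather than the algorithm of McKee and Maloney [18]: the observation format (one verb and preposition pair per attachment site), the function name `pmi_scores`, and the toy counts are all invented for this example.

```python
import math
from collections import Counter


def pmi_scores(pairs):
    """Pointwise mutual information for each observed (verb, preposition) pair.

    PMI(v, p) = log2( P(v, p) / (P(v) * P(p)) ), with probabilities estimated
    from relative frequencies in `pairs`.
    """
    pair_counts = Counter(pairs)
    verb_counts = Counter(verb for verb, _ in pairs)
    prep_counts = Counter(prep for _, prep in pairs)
    total = len(pairs)
    return {
        (verb, prep): math.log2(count * total / (verb_counts[verb] * prep_counts[prep]))
        for (verb, prep), count in pair_counts.items()
    }


# Invented toy observations: prepositions seen just to the right of each verb.
observations = (
    [("look", "at")] * 3 + [("look", "in")]
    + [("sit", "in")] * 3 + [("sit", "at")]
)
scores = pmi_scores(observations)
for pair in sorted(scores):
    print(pair, round(scores[pair], 3))
```

A positive score means the pair co-occurs more often than independence would predict, so under this sketch "at" would be proposed as a likely argument of "look" while "in" would not. A real setting would also need a minimum-count cutoff, since the score is unreliable for rare pairs.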

4 Conclusion

We have used this three-level knowledge representation for our English, Spanish and Japanese lexicons, and have built lexicons with data derived from corpora for each language. The lexicons have been used for several multilingual data extraction applications (cf. Aone et al. [1]) and a prototype MT system (Japanese - English). The fact that this representation is language-independent, bidirectional and amenable to automatic acquisition has minimized our data acquisition effort considerably.

Currently we are investigating ways in which thematic role slots of verb frames and semantic type restrictions on these slots are derived automatically from corpora (cf. Dagan and Itai [8], Zernik and Jacobs [25]) so that knowledge acquisition at all three levels can be automated.

References

[1] Chinatsu Aone, Doug McKee, Sandy Shinn, and Hatte Blejer. SRA: Description of the SOLOMON System as Used for MUC-4. In Proceedings of Fourth Message Understanding Conference (MUC-4), 1992.

[2] Jim Barnett, Inderjeet Mani, Elaine Rich, Chinatsu Aone, Kevin Knight, and Juan C. Martinez. Capturing Language-Specific Semantic Distinctions in Interlingua-based MT. In Proceedings of MT Summit III, 1991.

[3] Michael Brent. Automatic Semantic Classification of Verbs from Their Syntactic Contexts: An Implemented Classifier for Stativity. In Proceedings of the 5th European ACL Conference, 1991.

[4] Joan Bresnan, editor. The Mental Representation of Grammatical Relations. MIT Press, 1982.

[5] Center for Machine Translation, Carnegie Mellon University. KBMT-89: Project Report, 1989.

[6] Kenneth Church and William Gale. Concordances for Parallel Text. In Proceedings of the Seventh Annual Conference of the University of Waterloo Centre for the New OED and Text Research: Using Corpora, 1991.

[7] Kenneth Church and Patrick Hanks. Word Association Norms, Mutual Information, and Lexicography. Computational Linguistics, 16(1), 1990.

[8] Ido Dagan and Alon Itai. Automatic Acquisition of Constraints for the Resolution of Anaphora References and Syntactic Ambiguities. In Proceedings of the 13th International Conference on Computational Linguistics, 1990.

[9] Defense Advanced Research Projects Agency. Proceedings of Fourth Message Understanding Conference (MUC-4). Morgan Kaufmann Publishers, 1992.

[10] Bonnie Dorr. Solving Thematic Divergences in Machine Translation. In Proceedings of 28th Annual Meeting of the ACL, 1990.

[11] Bonnie Dorr. A Parameterized Approach to Integrating Aspect with Lexical-Semantics for Machine Translation. In Proceedings of 30th Annual Meeting of the ACL, 1992.

[12] David Dowty. Word Meaning and Montague Grammar. D. Reidel, 1979.

[13] Paul Jacobs. Integrating Language and Meaning in Structured Inheritance Networks. In John Sowa, editor, Principles of Semantic Networks. Morgan Kaufmann, 1991.

[14] Megumi Kameyama, Ryo Ochitani, and Stanley Peters. Resolving Translation Mismatches with Information Flow. In Proceedings of 29th Annual Meeting of the ACL, 1991.

[15] Manfred Krifka. Nominal Reference, Temporal Construction, and Quantification in Event Semantics. In R. Bartsch et al., editors, Semantics and Contextual Expressions. Foris, Dordrecht, 1989.

[16] Susumu Kuno. The Structure of the Japanese Language. MIT Press, 1973.

[17] Michael McCord. Design of LMT: A prolog-based Machine Translation System. Computational Linguistics, 15(1), 1989.

[18] Doug McKee and John Maloney. Using Statistics Gained from Corpora in a Knowledge-Based NLP System. In Proceedings of The AAAI Workshop on Statistically-Based NLP Techniques, 1992.

[19] George Miller, Richard Beckwith, Christiane Fellbaum, Derek Gross, and Katherine Miller. Five papers on WordNet. Technical Report CSL Report 43, Cognitive Science Laboratory, Princeton University, 1990.

[20] Marc Moens and Mark Steedman. Temporal ontology and temporal reference. Computational Linguistics, 14(2), 1988.

[21] Sergei Nirenburg and Lori Levin. Syntax-Driven and Ontology-Driven Lexical Semantics. In Proceedings of ACL Lexical Semantics and Knowledge Representation Workshop, 1991.

[22] Boyan Onyshkevych and Sergei Nirenburg. Lexicon, Ontology and Text Meaning. In Proceedings of ACL Lexical Semantics and Knowledge Representation Workshop, 1991.

[23] Leonard Talmy. Lexicalization Patterns: Semantic Structure in Lexical Forms. In Timothy Shopen, editor, Language Typology and Syntactic Descriptions. Cambridge University Press, 1985.

[24] Zeno Vendler. Linguistics in Philosophy. Cornell University Press, 1967.

[25] Uri Zernik and Paul Jacobs. Tagging for Learning: Collecting Thematic Relations from Corpus. In Proceedings of the 13th International Conference on Computational Linguistics, 1990.