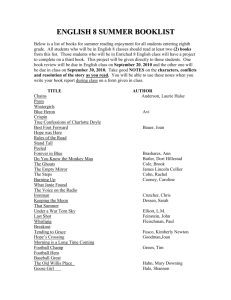

Artificial Neural Network Prediction of Stability Flow Rule

1

Artificial Neural Network Prediction of Stability

Numbers for Two-layered Slopes with Associated

Flow Rule

Pijush Samui and Bimlesh Kumar

Research Scholar, Dept. of Civil Engg., IISc

Abstract

The design of earthen embankments is quite often carried out with the use of stability number charts as originally introduced by Taylor. However, such charts are not easily available for the layered soil slopes with the inclusion of pore water pressure and seismic forces. In this paper, a neural network modeling using back propagation technique was done to predict the stability numbers for two layered soil slopes. The effect of the pore water pressure and horizontal earthquake body forces was also incorporated in this modeling. Comparisons were made by through literature results. A thorough sensitive analysis has been made to ascertain which parameters are having maximum influence on stability numbers.

Key-words: Stability number, friction angle, pore water pressure, ANN, Levenberg-

Marquadt, slopes, upper bound limit analysis.

2

Body of the text

INTRODUCTION

The stability of homogeneous slopes can be expressed in terms of a dimensionless group known as the stability number, N s

, which is defined as, γ H c

/c where ‘c’ is the cohesion, γ is the bulk unit weight of the soil and H c

is critical height of the slope. The design of earthen embankments is quite often carried out with the use of stability numbers as originally introduced by Taylor (1948). Taylor provided the charts indicating the variation of Ns for homogeneous slope with changes in slope angle ( β ) for various soil friction angles ( φ ). Later on Bishop (1955) used the method of slices in obtaining the stability of slopes. In order to solve the problem, Bishop assumed that the resultant of inter slices forces acts in the horizontal direction. Morgenstern and Price (1965) attempted to satisfy all the equations of statistical equilibrium in obtaining the solutions the stability problem using the method of slices. It is found that the method of slice do not satisfy all the conditions of statical equilibrium.

Chen (1975) used the upper bound theorem of the limit analysis to obtain the critical heights for the homogeneous soil slopes. A rotational discontinuity mechanism was assumed in this analysis; it was indicated that in order that the rupture surface remains kinematically admissible, its shape should become an arc of the logarithmic spiral. However, Chen (1975) did not incorporate either the effect of pseudo-static earthquake body forces or the pore water pressure.

Michalowski (2002) also used the upper bound theorem of limit analysis in order to obtain the stability numbers for homogeneous slopes in the presence of pore water pressures as well as pseudo-static earthquake forces.

The charts, providing the variation of stability numbers, are available in literature for homogeneous soil slopes (Taylor, 1948; Chen, 1975; Michalowksi, 2002). However, such

3 charts are not available for layered soil slopes with the inclusion of pore water pressure and seismic forces. Present work aims in producing the stability charts for the non homogeneous soil slopes. The methodology used here is the upper bound theorem of the limit analysis. The general function obtained from the analysis is solved by applying the neural network modeling. A thorough sensitive analysis has also been made to ascertain which variables are having maximum influence on stability number.

DEFINITION OF THE PROBLEM

In order to determine the stability number of a two layered soil slope subjected to pore water pressure and seismic forces; the definition of the stability number was taken as the same as introduced by the Taylor (1948). The analysis has been carried out by assuming the both the layers are having the same bulk unit weight ( γ ) and cohesion (c). The differentiation between the two layers has been made by difference in their friction angle ( φ ). The soil mass is subjected to pseudo-static horizontal acceleration of magnitude k h g; where g is the acceleration due to the gravity. The pore water pressure along the rupture surface is defined my means of the pore water coefficient r u

; where, pore pressure u at any point on the rupture surface is given by the expression, u = r u

γ z.; r u

is the pore pressure coefficient, γ is the bulk unit weight of the soil and z is the vertical distance of the point on the log spiral from the slope surface.

Assumptions

1.

The soil is a perfectly plastic material and it obeys an associative flow rule. d

ε

ij

= d

λ

∂ f

∂

σ

′ ij

[ 1 ]

Where, d ε ij is a tensor of incremental strain, σ ′ ij is a tensor of effective stress, f( σ ′ ij

)=0 is the yield condition and d λ is a non-negative plastic multiplier.

4

2.

The soil mass obeys the Mohr-Coulomb’s yield condition, i.e. τ = c+ σ′ tan φ where

τ and σ ′ are the magnitudes of shear stress and the effective normal stress along the shear plane.

3.

The failure surface is an arc of the logarithmic spiral and it always passes through the toe of the slope.

4.

The problem is assumed to be a two-dimensional plane strain problem.

Upper bound theorem of the limit analysis of dissipation of total internal energy in any kinematic admissible collapse mechanism should be equal to the rate of total work done by the various external and the body forces. For a two dimensional plane strain problem, mathematically this equality can be expressed as

A

∫

σ

ij

δε

ij dA

+ ∫

L t i

[ ] i dL

= ∫

S t i

V i dS

+ ∫

A

γ

i

V i dA [ 2 ]

Where the LHS terms represent the rate of internal work done by the stresses σ ij

over the incremental strain δ ε ij

within the region A and by the tractions t i

over the velocity jump

[V] i

along the velocity discontinuity line L. The RHS terms define the rate of the external work done by the tractions t i

over the velocity V i

along the boundary line S and of the body forces γ i

over the velocities V i

in region A.

If the material bounded by rupture and boundary surfaces is subdivided into different rigid regions undergoing either translation or rotation, the incremental strain δε ij within region A will become equal to zero, and equation (2) will, therefore, become

L

∫

t

i

[ V ]

i

dL =

S

∫

t

i

V

i

dS +

A

∫

γ

i

V

i

dA

[3]

For a material obeying the Mohr-Coulomb yield condition and the associated flow rule, the

5 velocity jump vector [V] i

must incline at an angle φ with the velocity discontinuity line; the magnitude of the product t i

[V] i

then becomes equal to c[V] i cos φ .

By solving the Eq.2, the following expression for the stability number of the slope is obtained.

N

= f

( r u

,

φ

1

,

φ

2 k h

, H

1

H

,

β

)

[ 4 ]

Where the definitions of H1/H, φ

1

, φ

2

and β are given in Fig.1. When H1/H=0 and 1, then the equation (3) is the stability number of homogeneous soil slope.

[Insert Figure 1]

NEURAL NETWORK

Neural networks, as they are known today, originate from the work of McCulloch and

Pitts (1943), who demonstrated the ability of interconnected neurons to calculate some logical functions. Hebb (1949) pointed out the importance of the synaptic connections in the learning process. Later, Rosenblatt (1958) presented the first operational model of a neural network: the ‘Perceptron’. The perceptron, built as an analogy to the visual system, was able to learn some logical functions by modifying the synaptic connections.

ANNs are massively parallel, distributed and adaptive systems, modeled on the general features of biological networks with the potential for ever improving performance through a dynamical learning process (Bavarian, 1988). Neural networks are made up of a great number of individual processing elements, the neurons, which perform simple tasks. A neuron, schematically represented in Fig. 2, is the basic building block of neural network technology which performs a nonlinear transformation of the weighted sum of the incoming

6 inputs to produce the output of the neuron. The input to a neuron can come from other neurons or from outside the network. The nonlinear transfer function can be a threshold, a sigmoid, a sine or a hyperbolic tangent function.

[Insert Figure 2]

Neural networks are comprised of a great number of interconnected neurons. There exists a wide range of network architectures. The choice of the architecture depends upon the task to be performed. For the modeling of physical systems, a feed forward layered is usually used. It consists of a layer of input neurons, a layer of output neurons and one or more hidden layers. In the present work, a three-layer feed forward network was used.

In a neural network, the knowledge lies in the interconnection weights between neuron and topology of the networks (Jones and Hoskins, 1987). Therefore, one important aspect of a neural network is the learning process whereby representative examples of the knowledge to be acquired are represented to the network so that it can integrate this knowledge within its structure. Learning implies that the processing element somehow changes its input/output behavior in response to the environment. The learning process thereby consists in determining the weight matrices that produce the best fit of the predicted outputs over the entire training data set. The basic procedure is to first set the weights between adjacent layers to random values. An input vector is then impressed on the input layer and is propagated through the network to the output layer. The difference between the computed output vector of the network and the target output vector is then adapt the weight matrices using an iterative optimization technique in order to progressively minimize the sum of squares of the errors (Hornik et al.,1989). The most versatile learning algorithm for the feed forward layered network is back-propagation (Irie and Miyanki, 1988). The back-

7 propagation learning law is a supervised error-correction rule in which the output error, that is, the difference between the desired and the actual output is propagated back to the hidden layers. Now, if the error at the output of each layer can be determined, it is possible to apply any method which minimizes the performance index to each layer sequentially.

Back-propagation algorithm with Levenberg-Marquardt algorithm

Multi-Layer Perceptrons (MLP) are perhaps the best-known type of feed forward networks.

MLP has generally three layers: an input layer, an output layer and an intermediate or hidden layer. Neurons in the input layer only act as buffers for distributing the input signal x i to neurons in the hidden layer. Each neuron j in the hidden layer sums up its input signals x i after weighting them with the strengths of the respective connections w ji

from the input layer and computes its outputs y j as a function f of the sum, viz. y j

=

f

(

Σ

w ji

x i

)

[4] where, f can be a simple threshold function or a sigmoid, hyperbolic tangent or radial basis function.

The output of neurons in the output layer is computed similarly. The back-propagation algorithm, a gradient descent algorithm, is the most commonly adopted MLP training algorithm. It gives the change

Δ

w ji in the weight of a connection between neurons j and i as follows:

Δ

w ji

=

η δ

j x i

[5]

8

Where

η

is a parameter called the learning rate and

δ

j is a factor depending on whether neuron j is an output neuron or a hidden neuron. For output neurons,

δ

j

=

⎜

⎜

⎝

⎛

∂ f

∂ net j

⎟

⎟

⎠

⎞

⎝

⎜

⎛ y t j

− y j ⎠

⎟

⎞

[6] and for hidden neurons,

δ

j

=

⎛

⎜

⎜

⎝

∂

∂ f net j

⎟

⎟

⎠

⎞

∑ q

(

W qj

δ

q

)

[7]

In equation (3), net j is the total weighted sum of input signals to neuron j and y j

(t) is the target output of neuron j . As there are no target outputs for hidden neurons, in equation (4), the difference between the target and actual output of a hidden neuron j is replaced by the weighted sum of the

δ

q

terms already obtained for neurons q connected to the output of j .

Thus, iteratively, beginning with the output layer, the

δ

term is computed for neurons in all layers and weight updates determined for all connections.

Back-propagation searches on the error surface by means of the gradient descent technique in order to minimize the error. It is very likely to get stuck in local minima.

Various other modifications to back-propagation to overcome this aspect of back-propagation have been proposed and the Levenberg-Marquardt modification (Hagan and Menhaj, 1994) has been found to be a very efficient algorithm in comparison with the others like Conjugate gradient algorithm or variable learning rate algorithm.

Levenberg-Marquardt works by making the assumption that the underlying function being modeled by the neural network is linear. Based on this calculation, the minimum can be determined exactly in a single step. The calculated minimum is tested, and if the error there is lower, the algorithm moves the weights to the new point. This process is repeated iteratively

9 on each generation. Since the linear assumption is ill-founded, it can easily lead Levenberg-

Marquardt to test a point that is inferior (perhaps even wildly inferior) to the current one. The clever aspect of Levenberg-Marquardt is that the determination of the new point is actually a compromise between a step in the direction of steepest descent and the above-mentioned leap. Successful steps are accepted and lead to a strengthening of the linearity assumption

(which is approximately true near to a minimum). Unsuccessful steps are rejected and lead to a more cautious downhill step. Thus, Levenberg-Marquardt continuously switches its approach and can make very rapid progress.

The equations for changing the weights during training in Levenberg-Marquardt method are given as follows:

Modifying

⇒ Δ r

W

=

J

(

T

J

+

μ

I

)

− 1

J

T [ 8 ] where J is the Jacobian matrix of the derivative of each error to each weight, µ is a scalar and e is an error vector. The Levenberg–Marquardt algorithm performs very well and its efficiency is found to be of several orders above the conventional back propagation with learning rate and momentum factor.

Neural modeling

In order to analyze the non homogeneous slope, input vectors have been given in the form of different β (45 o to 90 o ), different values of φ

1

and φ

2

, r u

from 0 to 0.25 and H1/H from 0 to 1.

In the present work, to predict the value of N s lying between H1/H =0 and H1/H=1

,

neural network has been optimized by generating the input and target vectors from the charts of

Michalowski (2002), which is stability numbers for homogeneous soil slope where H1/H = 0 and 1. Total numbers of training patterns given in the neural network are 2000 and for testing

10

500. Predictions have been made by getting the optimum neural network obtained during the analysis of input and target vectors taken from the Michaloswki (2002). Prediction is also made for the case of H1/H=0 and 1, and compared through the charts of Michaloswki (2002).

RESULTS OF NEURAL MODELING

The critical step in building a robust ANN is to create an architecture, which should be as simple as possible and has a fast capacity for learning the data set. The robustness of the

ANN will be the result of the complex interactions between its topology and the learning scheme.

The choice of the input variables is the key to insure complete description of the systems, whereas the qualities as well as the number of the training observations have a tremendous impact on both the reliability and performance of the ANN. Determining the size of the layers is also an important issue. One of the most used approaches is the constructive method, which is used to determine the topology of the network during the training phase as an integral part of the learning algorithm. The common strategy of the constructive methods is to start with a small network, train the network until the performance criterion has been reached, add a new node and continue until a ‘global’ performance in terms of error criterion has reached an acceptable level. The final architecture of neural net used in the analysis is shown in Fig.3.

[Insert Figure 3]

The transfer function used in the hidden layer is tanh and at the output layer is purelin. The maximum epochs has been set to 10000. Entire modeling has been done by using MATLAB

® software. Results of neural modeling are shown in Figs. 4 and 5.

11

[Insert Figure 4]

[Insert Figure 5]

[Insert Figure 6]

It can be clearly seen from Fig. 5 that the linear coefficient of correlation is very high between observed experimental data and values predicted through neural nets and it is 0.997 in training and 0.996 during testing phase. This shows the learning and generalization performance of the network is good.

VARIATION OF N

S

WITH

β

Case 1

For k h

=0 and r u

=0

[Insert Figure 7a] [Insert Figure 7b] [Insert Figure 7c]

Case 2 For k h

=0.1 and r u

=0

[Insert Figure 8a] [Insert Figure 8b] [Insert Figure 8c]

Case 3 For k h

=0 and r u

=0.25

[Insert Figure 9a] [Insert Figure 9b] [Insert Figure 9c]

Case 4 For k h

=0.1 and r u

=0.25

12

[Insert Figure 10a] [Insert Figure 10b] [Insert Figure 10c]

Following observations can be made from figs. 7 to 10.

1.

Neural network output for homogeneous slope well matches with the literature’s data.

2.

Stability numbers for non homogeneous slope can be obtained from the neural network modeling. Therefore to get the stability numbers for non homogenous soil sloe, a neural net with weights can be supplied for the modeling.

3.

The stability numbers have been found to decrease continuously with (i) increase in kh; (ii) increase in ru; (iii) increase in slope angle β .

SENSITIVITY ANALYSIS

Sensitivity analysis is a method for extracting the cause and effect relationship between the inputs and outputs of the network. The basic idea is that each input channel to the network is offset slightly and the corresponding change in the output is reported. To ascertain the influence of the input variables on output variables, sensitivity analysis is also carried out. This testing process provides a measure of the relative importance among the inputs of the neural model and illustrates how the model output varies in response to variation of an input. The first input is varied between its mean ± standard deviations while all other inputs are fixed at their respective means. Similar exercises have been made for all others input parameters.

As shown in Fig.11, pore water pressure coefficient is having more influence on stability number followed by the slope angle ( β ). The stability number as a function of r u

by keeping the other parameters as constant, as predicted by the ANN model is shown in Fig.12.

The stability numbers have been found to decrease with an increase in r u

. Figure 13 gives the stability numbers characteristics as a function of k h

. It has been found that the stability

13 numbers is decreasing with an increase in k h

. Effect of non homogeneity of slopes is described in Fig. 14 in terms of H1/H. Fig. 14 shows that increase in H1/H will decrease the stability number. Effect of slope angle is illustrated in Fig.15. It shows that the stability numbers have been found to decrease continuously with an increase in slope angle β . Effect of friction angle φ

1

and φ

2

is depicted in Figs. 16 and 17. It is seen clearly from Figs.16 and

17 that an increase in φ

1

and φ

2

will increase the stability number

[Insert Figure 11]

[Insert Figure 12]

[Insert Figure 13]

[Insert Figure 14]

[Insert Figure 15]

[Insert Figure 16]

[Insert Figure 17]

14

CONCLUSION

• The results presented in this paper have clearly shown that the neural network methodology can be used efficiently to predict the stability numbers for non homogeneous soil slope. The main advantage of neural networks is to remove the burden of finding an appropriate model structure or to find a useful regression equation. The network showed excellent learning performance and achieved good generalization.

• Sensitivity analyses with the trained neural net or during training could provide valuable additional information on the relative influence of various parameters on the stability number. The r u

is having more influence on stability number than any other parameter.

• The stability numbers have been found to decrease continuously with (i) increase in r u

, (ii) increase in k h

and (iii) increase in slope angle ( β ).

• The stability number will decrease with an increase in H1/H.

• The stability number will increase with an increase in φ

1

and φ

2

.

15

References:

Bavarian, B. (1998).

“Introduction to neural networks for intelligence control”, IEEE controls Syst. Mag.3-7.

Bishop, A.W. (1955)

. “The use of slip circle in the stability of slopes”, Geotechnique, 5(1):

7-17.

Chen, W.F. (1975) . “Limit analysis and soil plasticity”. Elsevier, Amsterdam.

Hagan, M.T., and Menhaj , M.B. (1994.

“Training Feedforward Networks with the

Marquardt Algorithm”, IEEE Trans on Neural Networks vol. 5.

Hebb , D.O. (1949.

“The organization of behaviour”, Wiley, New York.

Hornik, K., Stinchcombe, M., and White , H. (1989).

“Multilayer feedforward networks are universal approximaters”, Neural Networks 2, 359-366.

Irie, B. and Miyanki, S. (1988). “ Capabilities of three layer perceptrons, In IEEE second Int.

Conf. on Neural networks”, San Diego, Vol.1, pp. 641-648.

Jones, W.P., and Hoskins

,

J. (1987).

“Back–propagation a generalized delta learning rule”,

BYTE oct., 155-162.

McCulloch, W.S. and Pitts, W. (1943)

. “A logical calculus in the ideas immanent in nervous activity”, Bull. Math.Biophys. 5, 115-133.

Michalowski, R.L. (2002).

“Stability charts for uniform slopes”, Journal of Geotechnical and

Geoenvirometal Engineering, ASCE, 128(4): 351-355.

Morgenstern, N.R., and Price, V.E. (1965)

. “The analysis of the stability of general slip surfaces” Geotechnique, 15(1): 79-93.

Rosenblatt

,

F. (1958.

“The perceptron: a probabilistic model for information storage and organization in the brain”, Psychol. Rev. 68, 386-408.

Taylor, D.W. (1948) . “Fundamental of soil mechanics”, John Wiley, New York..

16

Notation:

ANN=artificial neural network;

MSE = mean squared error;

NMSE = normalized mean squared error;

MAE = mean absolute error;

R = linear correlation coefficient;

LM = Levenberg-Marquardt method; c = soil cohesion; f = transfer functions;

H c

= critical height of the slope;

H

1

= height of the upper layer of the slope in Figure1;

H = height of the slope defined in Figure 1;

J = jacobian matrix; k h

= horizontal earthquake acceleration coefficient;

N s

= stability number; r u

= pore water pressure coefficient;

[V] = velocity jump vector;

V i

= velocity; x = input vectors;

W=weights;

β = horizontal inclination of slope;

φ (Phi) = soil friction angle;

γ = bulk unit weight of soil;

φ

1

= soil friction angle for upper layer in Figure1;

φ

2

= soil friction angle for lower layer in figure 1;

17

σ

/ ij

= tensor of effective stress;

σ / = effective normal stress along the shear plane;

τ = magnitudes of shear stresses along the shear plane;

δ = error;

η = learning rate.

18

Figures caption:

Fig. 1: Slope geometry

Fig. 2: A simple processing neuron

Fig. 3: Neural net architecture used in the analysis

Fig.4: MSE versus epochs

Fig. 5: Results of training phase

Fig. 6: Results of testing phase

Fig. 7a:

φ

1

=10,

φ

2

=20

Fig. 7b:

φ

1

=10,

φ

2

=40

Fig. 7c:

φ

1

=20,

φ

2

=40

Fig. 8a:

φ

1

=10,

φ

2

=20

Fig. 8b:

φ

1

=10,

φ

2

=40

Fig. 8c:

φ

1

=20,

φ

2

=40

Fig. 9a:

φ

1

=10,

φ

2

=20

Fig. 9b:

φ

1

=10,

φ

2

=40

Fig. 9c:

φ

1

=20,

φ

2

=40

Fig. 10a:

φ

1

=10,

φ

2

=20

Fig. 10b:

φ

1

=10,

φ

2

=40

Fig. 10c:

φ

1

=20,

φ

2

=40

Fig. 11: sensitivity analysis of Stability numbers

Fig. 12: Effect of r u

on stability number

Fig. 13: Effect of k h

on stability number

Fig. 14: Effect of H1/H on stability number

Fig. 15: Effect of

β

on stability number

Fig. 16: Effect of

φ

1

on stability number

Fig. 17: Effect of

φ

2

on stability number

19

20

Figures:

H w

M

O P

F

V

0

G

φ

2

D

A

E

φ

1

H1 c, γ , c, γ , φ

2

φ

1

C

φ

2

∠ POC= θ c

, ∠ MGC= β , ∠ POK= θ , ∠ POD= θ s

and ∠ AOP= θ a

Fig. 1: Slope geometry

21

X i=1 to n

∑ f

Y = f

[ X

T .

w]

Fig. 2: A simple processing neuron

22 k h

H1/H

β

φ

1

φ

2 r u

Input Layer Hidden

Layer

15 th neuron

Fig. 3: Neural net architecture used in the analysis

Output

Layer

N s

23

Fig.4: MSE versus epochs

24

Fig. 5: Results of training phase

25

Fig. 6: Results of testing phase

26

N s

10

8

6

4

2

0

40

18

16

14

12

(H1/H=1) (Michalowski, 2002)

(H1/H=0.8) (ANN)

(H1/H=0.4) (ANN)

(H1/H=0) (Michalowski,2002)

(H1/H=1) (ANN)

(H1/H=0.6) (ANN)

(H1/H=0.2) (ANN)

(H1/H=0) (ANN) r u

=0, φ

1

=10, φ

2

=20 and k h

=0

80 50 60 70

β

Fig. 7a: φ

1

=10, φ

2

=20

90

27

200

180

160

140

120

N s

100

80

60

40

20

0

40

(H1/H=1) (Michalowski, 2002)

(H1/H=0.8) (ANN)

(H1/H=0.4) (ANN)

(H1/H=0) (Michalowski, 2002)

50 60

(H1/H=1) (ANN)

(H1/H=0.6) (ANN)

(H/H=0.2) (ANN)

(H1/H=0) (ANN) r u

=0, φ

1

=10, φ

2

=40 and k h

=0

70 80

β

90

Fig. 7b: φ

1

=10, φ

2

=40

28

200

180

160

140

120

N s

100

80

60

40

20

0

40

(H1/H=1) (Michalowski, 2002)

(H1/H=0.8) (ANN)

(H1/H=0.4) (ANN)

(H1/H=0) (Michalowski,2002)

(H1/H=1) (ANN)

(H1/H=0.6) (ANN)

(H1/H=0.2) (ANN)

(H1/H=0) (ANN) r u

=0, φ

1

=20, φ

2

=40 and k h

=0

80 50 60

β

70

Fig. 7c: φ

1

=20, φ

2

=40

90

8

N s

6

4

2

0

40

14

12

10

29

(H1/H=1) (Michalowski, 2002)

(H1/H=0.8) (ANN)

(H1/H=0.4) (ANN)

(H1/H=0) (Michalowski, 2002)

(H1/H=1) (ANN)

(H1/H=0.6) (ANN)

(H1/H=0.2) (ANN)

(H1/H=0) (ANN) r u

=0, φ

1

=10, φ

2

=20 and k h

=0.1

80 50 60 70

β

Fig. 8a: φ

1

=10, φ

2

=20

90

30

25

N s

20

15

10

5

0

40

45

40

35

30

(H1/H=1) (Michalowski, 2002)

(H1/H=0.8) (ANN)

(H1/H=0.4) (ANN)

(H1/H=0) (Michalowski, 2002)

50 60

(H1/H=1) (ANN)

(H1/H=0.6) (ANN)

(H1/H=0.2) (ANN)

(H1/H=0) (ANN) r u

=0, φ

1

=10, φ

2

=40 and k h

=0.1

70 80

β

90

Fig. 8b: φ

1

=10, φ

2

=40

31

N s

25

20

15

10

5

0

40

50

45

40

35

30

(H1/H=1) (Michalowski,2002)

(H1/H=0.8) (ANN)

(H1/H=0.4) (ANN)

(H1/H=0) (Michalowski,2002)

(H1/H=1) (ANN)

(H1/H=0.6) (ANN)

(H1/H=0.2) (ANN)

(H1/H=0) (ANN) r u

=0, φ

1

=20, φ

2

=40 and k h

=0.1

50 60 70 80

β

90

Fig. 8c: φ

1

=20, φ

2

=40

12

N s

6

4

10

8

2

0

40

(H1/H=1) (Michalowski, 2002)

(H1/H=0.8) (ANN)

(H1/H=0.4) (ANN)

(H1/H=0) (Michalowski, 2002)

32

(H1/H=1) (ANN)

(H1/H=0.6) (ANN)

(H1/H=0.2) (ANN)

(H1/H=0) (ANN) r u

=0.25, φ

1

=10, φ

2

=20 and k h

=0

80 50 60 70

β

Fig. 9a: φ

1

=10, φ

2

=20

90

18

N s

10

8

6

4

2

16

14

12

0

40

(H1/H=1) (Michalowski, 2002)

(H1/H=0.8) (ANN)

(H1/H=0.4) (ANN)

(H1/H=0) (Michalowski, 2002)

33

(H1/H=1) (ANN)

(H1/H=0.6) (ANN)

(H1/H=0.2) (ANN)

(H1/H=0) (ANN) r u

=0.25, φ

1

=10, φ

2

=40 and k h

=0

80 50 60

β

70

Fig. 9b: φ

1

=10, φ

2

=40

90

N s

15

10

5

0

40

30

25

20

34

(H1/H=1) (Michalowski, 2002)

(H1/H=0.8) (ANN)

(H1/H=0.4) (ANN)

(H1/H=0) (Michalowski, 2002)

(H1/H=1) (ANN)

(H1/H=0.6) (ANN)

(H1/H=0.2) (ANN)

(H1/H=0) (ANN) r u

=0.25, φ

1

=20, φ

2

=40 and k h

=0

80 50 60

β

70

Fig. 9c: φ

1

=20, φ

2

=40

90

35

5

N s

4

3

2

1

0

40

9

8

7

6

(H1/H=1) (Michalowski, 2002)

(H1/H=0.8) (ANN)

(H1/H=0.4) (ANN)

(H1/H=0) (Michalowski, 2002)

(H1/H=1) (ANN)

(H1/H=0.6) (ANN)

(H1/H=0.2) (ANN)

(H1/H=0) (ANN)

50 60 r u

=0.25, φ

1

=10, φ

2

=20 and k h

=0.1

β

70 80 90

Fig. 10a: φ

1

=10, φ

2

=20

20

18

16

14

12

N s

10

8

6

4

2

0

40

36

(H1/H=1) (Michalowski,2002)

(H1/H=0.8) (ANN)

(H1/H=0.4) (ANN)

(H1/H=0) (Michalowski,2002)

(H1/H=1) (ANN)

(H1/H=0.6) (ANN)

(H1/H=0.2) (ANN)

(H1/H=0) (ANN) r u

=0.25, φ

1

=10, φ

2

=40 and k h

=0.1

80 50 60

β

70

Fig.10b: φ

1

=10, φ

2

=40

90

20

18

16

14

12

N s

10

8

6

4

2

0

40

37

(H1/H=1) (Michalowski, 2002)

(H1/H=0.8) (ANN)

(H1/H=0.4) (ANN)

(H1/H=0) (Michalowski,2002)

(H1/H=1) (ANN)

(H1/H=0.6) (ANN)

(H1/H=0.2) (ANN)

(H1/H=0) (ANN) r u

=0.25, φ

1

=20, φ

2

=40 and k h

=0.1

50 60

β

70

Fig. 10c: φ

1

=20, φ

2

=40

80 90

38

2.5

2

1.5

1

0.5

0

Ru kh Beta H1/H

Input Variables

Phi1 Phi2

Fig. 11: sensitivity analysis of Stability numbers

N

39

All other parameters are constant at their mean.

N

13

12

11

10

9

8

7

6

17

16

15

14

5

4

3

2

1

0

0.00

0.05

0.10

R u

0.15

0.20

Fig.12: Effect of r u

on stability number

0.25

40

All other parameters are constant at their mean.

8.5

8.0

7.5

7.0

N

6.5

6.0

5.5

5.0

4.5

0.00

0.02

0.04

K h

0.06

0.08

Fig.13: Effect of k h

on stability number

0.10

41

6.7

6.6

6.5

6.4

N

6.3

6.2

6.1

6.0

5.9

0.1

All other parameters are constant at their mean.

0.2

0.3

0.4

0.5

H1/H

0.6

0.7

Fig.14: Effect of Η1/Η on stability number

0.8

0.9

42

All other parameters are constant at their mean.

N

7

8

6

5

4

11

10

9

50 55 60 65 70 75

β

Fig.15: Effect of β on stability number

80 85

43

8.0

All other parameters are constant at their mean.

7.5

7.0

6.5

N

6.0

5.5

5.0

4.5

8 10 12 14 16

φ

1

18 20

Fig.16: Effect of φ

1

on stability number

22 24 26

44

N 6.4

6.2

6.0

5.8

5.6

5.4

24

7.4

7.2

7.0

6.8

6.6

All other parameters are constant at their mean.

26 28 30 32

φ

2

34 36

Fig.17: Effect of φ

2

on stability number

38 40 42