From: ISMB-93 Proceedings. Copyright © 1993, AAAI (www.aaai.org). All rights reserved.

Protein Structure Prediction System based on Artificial Neural Networks¹

J. Vanhala and K. Kaski

Tampere University of Technology/Microelectronics Laboratory

P.O.Box 692

33101 Tampere, Finland

email jv@ee.tut.fi, tel. +358-31-161407, fax +358-31-162620

Abstract

Methods based on neural network techniques are among the most accurate in the secondary structure prediction of globular proteins. Here the same principles have been used for the tertiary structure prediction problem. The map of dihedral φ and ψ angles is divided into 10 by 10 squares, each spanning 36 by 36 degrees. By predicting the classification of each residue in the protein chain in this map, a rough tertiary structure can be deduced. A complete prediction system running on a cluster of workstations and a graphical user interface were developed.

Keywords: artificial neural networks, protein structure prediction, distributed computing.

Introduction

Neural network techniques have been successfully used in the prediction of the secondary structure of globular proteins. Although secondary structures are normally defined by the presence or absence of certain hydrogen bonds between amino acid residues, they can also be described by the dihedral angles of the backbone. When the protein chain adopts a certain secondary structure, the angles are forced to the corresponding canonical values. Thus predicting the secondary structure is equivalent to predicting the classification of the backbone dihedral angles into classes corresponding to helix, sheet, and coil structures. Each class includes residues whose dihedral angles may differ by over 90 degrees. Thus the secondary structure classification is not sufficiently accurate for deducing the tertiary structure. However, by refining the classification the network can be taught to predict more accurate dihedral angles, which in turn can be used as a starting point for a search of the three dimensional structure.

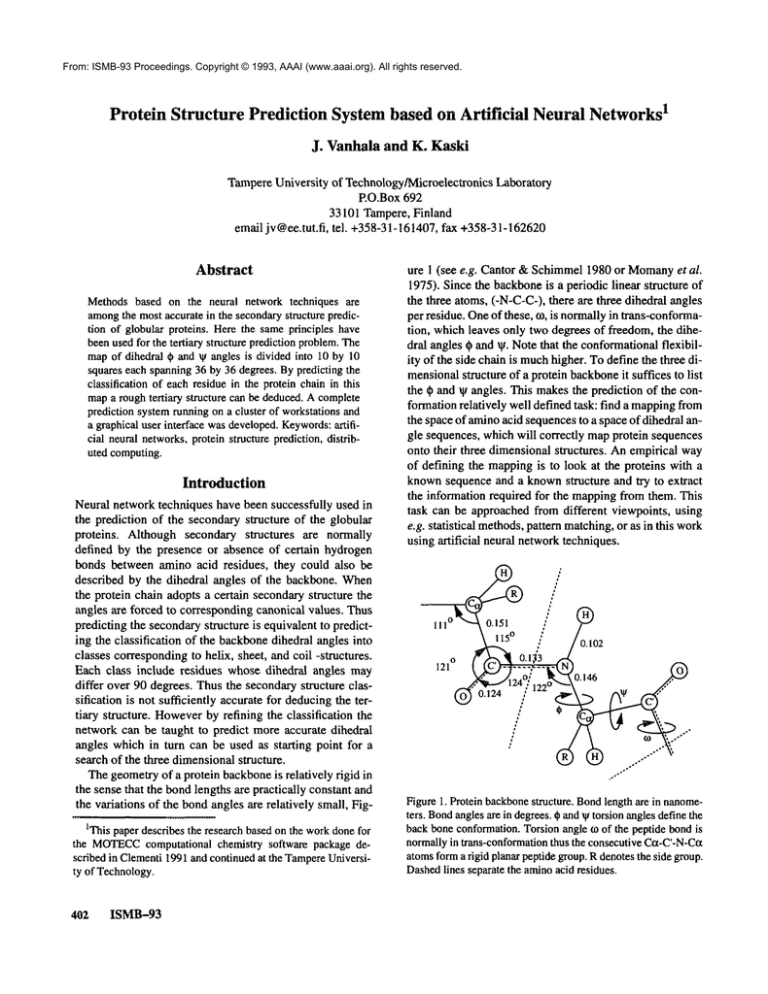

Thegeometryof a protein backboneis relatively rigid in

the sense that the bondlengths are practically constant and

the variations of the bondangles are relatively small, FigIThis paperdescribesthe researchbasedon the workdonefor

the MOTECC

computational chemistry software package described in Clementi1991and continuedat the Tampere

University of Technology.

4O2 ISMB-93

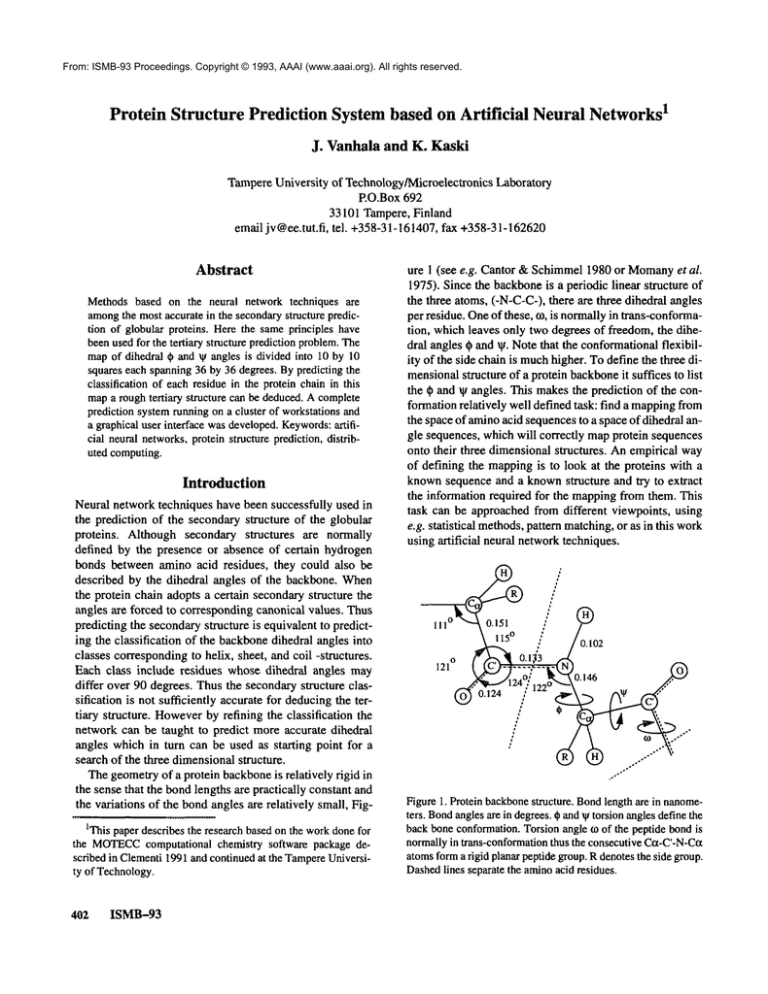

ure 1 (see e.g. Cantor & Schimmel1980 or Momany

et al.

1975). Since the backboneis a periodic linear structure of

the three atoms, (-N-C-C-),there are three dihedral angles

per residue. Oneof these, co, is normallyin trans-conformation, which leaves only two degrees of freedom, the dihedral angles ~ and tit. Notethat the conformationalflexibility of the side chain is muchhigher. To define the three dimensionalstructure of a protein backboneit suffices to list

the ~ and ~ angles. This makesthe prediction of the conformationrelatively well defined task: find a mappingfrom

the space of aminoacid sequencesto a space of dihedral angle sequences, which will correctly mapprotein sequences

onto their three dimensionalstructures. Anempirical way

of defining the mappingis to look at the proteins with a

knownsequence and a knownstructure and try to extract

the information required for the mappingfrom them. This

task can be approachedfrom different viewpoints, using

e.g. statistical methods,pattern matching,or as in this work

using artificial neural networktechniques.

Figure 1. Protein backbone structure. Bond lengths are in nanometers. Bond angles are in degrees. φ and ψ torsion angles define the backbone conformation. Torsion angle ω of the peptide bond is normally in trans-conformation, thus the consecutive Cα-C'-N-Cα atoms form a rigid planar peptide group. R denotes the side group. Dashed lines separate the amino acid residues.

An artificial neural network is used to predict the main chain conformation of globular proteins from their amino acid sequence. The dihedral φ and ψ angles of the protein main chain are calculated from the X-ray coordinates of a set of proteins from the Brookhaven Protein Data Bank (Bernstein et al. 1977) and are given to the network during the learning phase. In the prediction phase an amino acid sequence not included in the training set is input to the network, and the network gives its prediction for the (or a set of) dihedral φ and ψ angles for each residue. The results can be used as a starting point for computationally intensive energy minimization calculations. Therefore neural network approaches may offer a significant time saving by suggesting conformations relatively close to an energy minimum.
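The paper does not show how the dihedral angles are computed from the X-ray coordinates. As a minimal sketch, assuming the usual four-atom definition (φ from C'(i-1), N, Cα, C' and ψ from N, Cα, C', N(i+1)), a dihedral angle can be obtained as follows. The function name `dihedral_degrees` and the helper names are illustrative and not taken from the original system.

```python
import math

def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dihedral_degrees(p0, p1, p2, p3):
    """Signed dihedral angle (degrees) defined by four consecutive atoms."""
    b1, b2, b3 = _sub(p1, p0), _sub(p2, p1), _sub(p3, p2)
    n1 = _cross(b1, b2)            # normal of the plane through p0, p1, p2
    n2 = _cross(b2, b3)            # normal of the plane through p1, p2, p3
    y = math.sqrt(_dot(b2, b2)) * _dot(b1, n2)
    x = _dot(n1, n2)
    return math.degrees(math.atan2(y, x))

# Hypothetical coordinates: a planar trans arrangement and a 90 degree twist.
trans = dihedral_degrees((0, 1, 0), (0, 0, 0), (1, 0, 0), (1, -1, 0))
twist = dihedral_degrees((0, 1, 0), (0, 0, 0), (1, 0, 0), (1, 0, 1))
```

Applying this to every residue of a coordinate file would give the φ and ψ sequence used as training targets; reading the coordinate file itself is left out of the sketch.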

Neural networks in globular protein secondary structure prediction

Several research groups have used neural network techniques to predict the secondary structure of globular proteins from their amino acid sequence, e.g. Qian and Sejnowski (1988), Holley and Karplus (1989), Bohr et al. (1988), and Mejia and Fogelman-Soulie (1990). Although the groups worked independently, they used basically the same network architecture. The network was a feedforward layered network with full connection topology between layers, a sigmoid type nonlinear activation function, and the error back-propagation learning rule, i.e. a standard back-prop network. The input to the network is a fragment of the amino acid sequence of a protein. The output gives the secondary structure classification for the residue in the middle of the fragment, see Figure 2. Secondary structure for the whole protein can be produced by sliding a window over the whole length of the amino acid sequence. The input to the network is represented using singular coding, where each residue is coded with 20 input nodes. Each of these nodes corresponds to one of the 20 naturally occurring amino acids. Thus the active unit in an input group flags the presence of a given residue type in that sequence position. For a window of n residues the input layer has a total of 20*n units.
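The singular coding described above can be sketched in a few lines. This is an illustration only: the function name `encode_window`, the alphabet ordering, and the choice to leave positions outside the chain as all-zero groups are assumptions, not details taken from the cited works.

```python
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard one-letter codes (assumed order)

def encode_window(sequence, center, width):
    """Return a flat 0/1 list of 20 * width input units for one window.

    The window covers positions center - width // 2 onwards, matching the
    -N/2 ... N/2-1 labelling of the input layer in Figure 2.
    """
    start = center - width // 2
    units = []
    for pos in range(start, start + width):
        group = [0] * len(AMINO_ACIDS)
        if 0 <= pos < len(sequence):          # outside the chain: no active unit
            group[AMINO_ACIDS.index(sequence[pos])] = 1
        units.extend(group)
    return units

# Sliding the window over a short hypothetical fragment, one input vector per residue.
fragment = "PFANGHF"
inputs = [encode_window(fragment, i, 7) for i in range(len(fragment))]
```

Each vector has exactly one active unit per occupied window position, so a window of 7 residues yields 140 input units.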

In the output layer there is a separate unit for each of the secondary structure classes used, e.g. α helix, β sheet, random coil or turn. The level of activity of a given output unit can be interpreted as a probability of the corresponding class. Classification is then done by choosing the class with the highest probability, i.e. activity. In addition to the input and output layers there is also a hidden layer. This is needed because a network with only input and output layers is capable of extracting only the first order features from the training set. These are the features that are caused by each input unit individually. Since the secondary structure of the proteins clearly depends on the local context of the amino acid sequence, a hidden layer is needed. This gives the network the ability to represent also second order features, i.e. features that depend on more than one input unit at a time.

[Figure 2: input layer (window positions -N/2 to N/2-1 over the amino acid sequence ...PFANGHFSFYNKCTEOPICPNSMEMVDAVHCL...), hidden layer, and output layer with the secondary classes Helix, Coil, and Sheet.]

Figure 2. Network architecture for the secondary structure prediction. The input layer has one active node (shown in black) coding the residue type at each sequence position. The window width is N. Every node in the input layer is connected to every node in the hidden layer through the lower set of weights. Also every node in the hidden layer is connected to every node in the output layer through the upper set of weights. Amino acid types are given in one-letter code. The classification for the proline in the middle of the window is sheet, which has the highest activity level in the output layer.

Both Qian and Sejnowski and Holley and Karplus also used alternative ways of representing the input to the network. They used physicochemical properties of the amino acid residues such as hydrophobicity, charge, side chain bulk, and backbone flexibility. Qian and Sejnowski also provided the network with global information such as the average hydrophobicity of the protein and the position of the residue in the sequence. All these attempts apparently failed to improve the prediction reliability over the basic scheme.

Bohr et al. used a separate network for each secondary structure class. They also used two nodes to represent the output class. One node gives the probability p for belonging to the class and the other gives the probability (1-p), i.e. the probability for not belonging to the class. In this way it is possible to recognize those cases where the network is not able to give a valid prediction.
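The text does not say how the two output nodes are used to detect an invalid prediction. One plausible reading, shown here purely as a hedged sketch, is that the two activities should add up to roughly one, and that a large deviation signals an unreliable answer. The function name and the tolerance value are hypothetical.

```python
def interpret_two_node_output(p_member, p_non_member, tolerance=0.2):
    """Return True/False for class membership, or None when the two
    output activities are inconsistent with p and (1 - p).

    The tolerance is an assumed value, not one reported by Bohr et al.
    """
    if abs(p_member + p_non_member - 1.0) > tolerance:
        return None                      # the network gives no valid prediction
    return p_member > p_non_member

confident = interpret_two_node_output(0.9, 0.1)   # consistent pair
undecided = interpret_two_node_output(0.9, 0.9)   # both nodes active
```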

Neural networks in globular protein tertiary structure prediction

Bohr et al. (1990) have developed a method where they predict the distance matrix (or rather a contact matrix) of a globular protein from its amino acid sequence using a feed forward neural network. The network tells if the distance between two α-carbons is less than a preassigned threshold value, e.g. 8 ångströms. All pairs of α-carbons up to 30 residues apart are considered, thus the network gives distance constraints for a diagonal band which is 30 residues wide. To generate the full distance matrix they use a steepest descent optimization to satisfy as many of the distance constraints as possible. Since the distance matrix can be generated from the backbone internal coordinates and vice versa, this method bears resemblance to the work described below, but uses a different way to represent the three dimensional structure of the protein backbone.
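To make the contact matrix representation concrete, the following sketch builds the banded set of contacts from known α-carbon coordinates, i.e. the kind of target the network would be asked to reproduce. It is not the code of Bohr et al.; the function name `contact_band` and the dictionary layout are assumptions, while the 8 ångström threshold and the 30 residue band come from the description above.

```python
import math

def contact_band(ca_coords, threshold=8.0, max_separation=30):
    """Map each residue pair (i, j), i < j <= i + max_separation, to True
    when the two alpha-carbons are closer than the threshold (in angstroms)."""
    n = len(ca_coords)
    contacts = {}
    for i in range(n):
        for j in range(i + 1, min(n, i + max_separation + 1)):
            contacts[(i, j)] = math.dist(ca_coords[i], ca_coords[j]) < threshold
    return contacts

# Hypothetical fully extended chain with 3.8 angstroms between neighbouring alpha-carbons.
chain = [(3.8 * i, 0.0, 0.0) for i in range(5)]
band = contact_band(chain)
```

In this toy chain residues one and two positions apart are in contact (3.8 and 7.6 ångströms), while residues three positions apart (11.4 ångströms) are not.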

Their network is large compared to the size of their training set. Indeed the network is capable of reproducing the training set 100 percent correctly. This will reduce the ability to generalize to unseen examples. However, they use the network to predict the structure of a protein that has highly homologous counterparts in the training set, obtaining good results. They do not report results for proteins that do not have homologous proteins in the training set. Wilcox and Poliac (1989) report an even bigger network and use fewer proteins in the training set. Their network is capable of correctly recalling the distance matrices of the proteins in the training set when given the hydrophobicities of the amino acid sequence; however, they do not test the network with unknown proteins. Friedrichs and Wolynes (1989) use a method closely related to Hopfield type neural networks to predict the tertiary structure of globular proteins. They call their method the associative memory Hamiltonian.

Methods

Our approach draws ideas from two lines of development in protein structure prediction. The first is the work done with artificial neural networks aimed at predicting the secondary structure. This has given us the tool. The second source of inspiration has been the methods developed by Lambert and Scheraga (1989a, b, c) to predict tertiary structures. This has given us some practical guidelines and a reference for comparing our initial results.

Our training set is the same as the one used by Lambert and Scheraga in their pattern recognition-based importance-sampling minimization (PRISM) method. This choice offers the advantage of a fair comparison of results.

[Figure 3: input layer (window positions -N/2 to N/2-1 over the amino acid sequence ...PFANGHFSFYNKCTEOPICPNSMEMVDAVHCL...), hidden layer, and an output layer drawn as a map of torsion angles with both axes running from -180 to 180 degrees.]

Figure 3. The network architecture for the tertiary structure prediction. The output layer in Figure 2 has been replaced with a map of 10 by 10 output nodes. Each node corresponds to a 36 by 36 degree square of the φ-ψ diagram. Every node in the hidden layer is connected to every node in the output map through the upper set of weights. In this case the output for the proline in the middle of the window can be interpreted approximately as φ=-100°, ψ=100°.

We could also skip the time consuming phase of studying the protein structure data banks and making fair and unbiased selections from them. We could as well have taken either of the training sets collected by Kabsch and Sander (1983) or by Qian and Sejnowski (1988). The training set is collected from the Brookhaven Protein Data Bank by Bernstein et al. (1977); it contains 46 strands from proteins and has 6964 residues.

Because of the differences in the methods we had to make some slight modifications to the training set. Lambert and Scheraga calculated conformational probabilities for tripeptides, i.e. the window width is three. Because of this choice they had to drop the first and the last residue from each amino acid sequence. Instead we have used a much larger window of residues. The width of the window varies from case to case from 7 to 51 residues. Since neglecting one half of the window size of the terminating residues at both ends would decrease the training set too much, we define a new null residue that can be appended to both ends of the amino acid chain (as is also done by e.g. Qian and Sejnowski). In addition, we could not simply remove from the training set those residues that are in cis-conformation or those that are of nonstandard type. Instead we define another new null residue which is skipped during the training phase. Note that in the prediction phase all amide bonds are also considered to be in the planar trans-conformation. All protein sequences have been concatenated into one long sequence with the null spacer residues between the proteins.

The file that contains the training set has the residue identifiers together with the corresponding φ and ψ angles. These angles were calculated from the protein X-ray crystallographic data in the protein structure data bank. Note that although the training set file contains the actual angles, they are classified into discrete output classes before being given to the network in the training phase.
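The step from actual angles to discrete output classes can be sketched as below, using the 10 by 10 grid of 36 degree squares described in the abstract. The numbering of the classes (row index from ψ, column index from φ, counted from -180 degrees) and the function names are assumptions made for illustration; the original file format and class numbering are not given in the paper.

```python
def grid_class(phi, psi, cells=10):
    """Return the output class (0 .. cells*cells - 1) of a (phi, psi) pair in degrees."""
    size = 360.0 / cells                       # 36 degrees for a 10 by 10 grid
    col = int((phi + 180.0) // size) % cells   # +180 wraps around to the -180 column
    row = int((psi + 180.0) // size) % cells
    return row * cells + col

def cell_center(index, cells=10):
    """Return the (phi, psi) angles at the centre of an output class."""
    size = 360.0 / cells
    row, col = divmod(index, cells)
    return (-180.0 + (col + 0.5) * size, -180.0 + (row + 0.5) * size)

target = grid_class(-100.0, 100.0)   # class used as the training target
center = cell_center(target)         # centre of that 36 by 36 degree square
```

The modulo operation treats +180 and -180 degrees as the same column or row, which reflects the periodicity of the dihedral angles.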

We have used a feed forward layered neural network and the error back propagation algorithm. Lambert and Scheraga (1989a, b, c) did not report on prediction for the φ and ψ angles. Four classes is the upper limit for Lambert and Scheraga's approach, since they collect the statistics for each of the 64 (= 4^3) possible tripeptide conformational combinations. Even for four classes the frequency of the rarer combinations is not sufficient to obtain good prior probabilities. In our case, in order to make more refined predictions for the angles, we divide the φ-ψ map into a 10 by 10 grid. Each square spans an area of 36 degrees by 36 degrees, see Figure 3. As the output layer in the network may have several active units, we need a postprocessing phase that identifies the most probable answers and also gives the actual angles instead of a classification into the hundred output classes. First the output is low pass filtered to smooth out excessive roughness. Then all peaks are identified and their relative strength is calculated to give them a rank. At this point the output map is still in discrete form. The actual φ and ψ angles are produced by computing the "center of mass" for a neighborhood of each peak.

[Figure 4: the φ-ψ map, both axes running from -180 to 180 degrees, divided into four conformational regions.]

Figure 4. Conformational quadratures. Region α contains α helices, π helices, and isolated turns. Region ε contains β bridges, sheets, and proline rich strands. Region α* contains left handed α helices. Region ε* contains glycine strands.
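The three postprocessing steps (low pass filtering, peak ranking, and the "center of mass" computation) might look roughly as follows. The paper gives no filter coefficients or neighbourhood size, so the 3 by 3 kernel, the 3 by 3 neighbourhood, the periodic wrap-around, and all function names here are assumptions chosen only to make the idea concrete.

```python
KERNEL = ((1, 2, 1), (2, 4, 2), (1, 2, 1))  # assumed smoothing weights, sum 16

def low_pass(grid):
    """Smooth the output map; indices wrap because both angles are periodic."""
    n = len(grid)
    return [[sum(KERNEL[dr + 1][dc + 1] * grid[(r + dr) % n][(c + dc) % n]
                 for dr in (-1, 0, 1) for dc in (-1, 0, 1)) / 16.0
             for c in range(n)] for r in range(n)]

def ranked_peaks(grid):
    """Return (strength, row, col) for strict local maxima, strongest first."""
    n = len(grid)
    peaks = []
    for r in range(n):
        for c in range(n):
            neighbours = [grid[(r + dr) % n][(c + dc) % n]
                          for dr in (-1, 0, 1) for dc in (-1, 0, 1)
                          if (dr, dc) != (0, 0)]
            if grid[r][c] > max(neighbours):
                peaks.append((grid[r][c], r, c))
    return sorted(peaks, reverse=True)

def peak_angles(grid, row, col):
    """Centre of mass of the 3 by 3 neighbourhood of a peak, as (phi, psi) in degrees.

    Rows are taken to index psi and columns phi, both starting at -180 degrees.
    """
    n = len(grid)
    cell = 360.0 / n
    total = d_row = d_col = 0.0
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            w = grid[(row + dr) % n][(col + dc) % n]
            total += w
            d_row += w * dr
            d_col += w * dc
    phi = -180.0 + (col + 0.5 + d_col / total) * cell
    psi = -180.0 + (row + 0.5 + d_row / total) * cell
    return ((phi + 180.0) % 360.0 - 180.0, (psi + 180.0) % 360.0 - 180.0)

# Hypothetical output map with a single active unit.
raw = [[0.0] * 10 for _ in range(10)]
raw[7][2] = 1.0
smooth = low_pass(raw)
best = ranked_peaks(smooth)[0]
angles = peak_angles(smooth, best[1], best[2])
```

When two neighbouring units are active, the centre of mass lands between the two cell centres, which is how continuous angles can be recovered from the discrete map.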

Results

Predicting conformational quadratures of PPT

To be able to make a comparison with work done previously, we chose to try to predict the conformational classes of the avian pancreatic polypeptide, as has been done by Lambert and Scheraga (1989a). They divide the two dimensional φ-ψ map into four regions as shown in Figure 4. Their prediction method PRISM gives the classification into one of the conformational classes for each residue in the sequence. This approximate description can be used as an input to a subsequent energy minimization step. In a similar manner we created a neural network with four output nodes, each corresponding to one of the conformational quadratures. Using the same protein structure data base we taught the network to map the amino acid sequence to output classes. The results for both methods are shown in Figure 5. The four conformational classes α, ε, α* and ε* are shown. The first line gives the amino acid sequence in one-letter code. The second line gives the correct classification derived from the X-ray coordinates. Below those there are the prediction results from the neural network algorithm and from the PRISM method. The neural network does not simply give one class as an answer but rather a probability of the correct classification being in some quadrature. In this way one can have multiple choices in situations where the network is not able to make up its mind. Errors are classified in two classes: a box with thin edges is a soft error where the alternate answer is correct, and a box with thick edges is a hard error where the prediction is wrong for all alternatives. Both the neural network and the reference have 6 hard errors, but the network also gives two soft errors. The performance of the network is a bit worse than that reported for PRISM. One explanation for this is that they used elaborate statistical means in dealing with rare cases in the amino acid sequence data, something that the neural network in its extreme simplicity is not able to do.

[Figure 5: rows giving the sequence, the correct classification, the neural network prediction, and the PRISM prediction, with one conformational class per residue of PPT.]

Figure 5. Secondary structure prediction for avian pancreatic polypeptide 1PPT. The top line gives the amino acid sequence in one-letter code and below that is the correct classification into the four conformational quadratures. The predictions with the neural network and with the PRISM method are on the bottom two lines. Hard errors are shown in solid boxes and soft errors in dashed boxes. The dots at both ends of the classification denote the unused residues.

Since the classification is not fully correct, it is not possible to obtain a tertiary structure which is relatively close to the native one. In PRISM, the problem is solved by generating a multitude of dihedral angle classification sequences and choosing the most probable ones for further processing. This tends to be a very time consuming procedure with an exponential run time complexity with respect to the chain length. Indeed it becomes impractical to process proteins with more than 100 residues. The neural network approach does not have this limitation, since it gives multiple answers simultaneously and it is easy to sort these according to their probabilities.
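The distinction between correct predictions, soft errors, and hard errors can be illustrated by sorting the output activities of one residue. The sketch assumes that two alternatives are considered per residue; the paper does not state how many alternatives were kept, and the function name and class labels are illustrative.

```python
def classify_prediction(probabilities, correct, alternatives=2):
    """Label one residue as 'correct', 'soft' or 'hard'.

    probabilities: mapping from class name to network output activity.
    A soft error means the top answer is wrong but an alternate answer is
    correct; a hard error means every considered alternative is wrong.
    """
    ranked = sorted(probabilities, key=probabilities.get, reverse=True)
    ranked = ranked[:alternatives]
    if ranked[0] == correct:
        return "correct"
    if correct in ranked:
        return "soft"
    return "hard"

# Hypothetical network output for a single residue.
output = {"alpha": 0.50, "epsilon": 0.30, "alpha*": 0.15, "epsilon*": 0.05}
labels = [classify_prediction(output, c) for c in ("alpha", "epsilon", "epsilon*")]
```

Because only a sort over four values is needed per residue, the cost grows linearly with the chain length.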

Predicting φ and ψ angles of BPTI

Bovine Pancreatic Trypsin Inhibitor (BPTI, 5PTI) is a much studied protein that has 58 residues. The prediction by the network has been analysed by finding the fragments of the protein chain that have (near) correct φ and ψ angles. In Figure 6 the predicted backbone structure of BPTI is shown together with fragments of the native BPTI structure from the X-ray data. About a half of the residues fall into correctly predicted regions. Both α helices (residues 2-7 and 47-56) and the β sheet fragment (residues 29-35) are correct. Also four ends (residues 5, 14, 38 and 51) of the three sulphur bridges (S1, S2, and S3) are inside these regions. The predicted structure for BPTI is not globular but elongated. However, since the cysteine residue pairs that form the sulphur bridges are normally known, it "should be possible" to fold the structure into a more globular shape by forcing the correct cysteine pairs together and at the same time trying to maintain the predicted dihedral angles. The native and the predicted structures of BPTI are shown in Figure 7.

Figure 6. Fragments of the BPTI backbone from X-ray data aligned with the predicted structure. Solid line shows the correctly predicted regions. The numbers refer to the amino acid sequence positions. The three sulphur bridges S1-S3 are also shown.

Figure 7. The native structure (a) and the predicted structure (b) of BPTI.

The distance matrices shown here display all distances between the α-carbons of the protein chain. The matrix is symmetric, thus only one half of it is shown. This makes it easy to compare two structures by placing them on each side of the diagonal. The distances are gray scale coded. A change of colour corresponds to a distance difference of 2 ångströms. The 16 shades cover a region from 0 to 32 Å. The distance matrix representation has some advantages over displaying the structures on the computer screen. It is rotation invariant, so that the structures do not have to be aligned. It can show both local small scale details (close to the diagonal) and the overall conformation in the large (the overall pattern); the scale depends on how far away the picture is viewed from. It is insensitive to a few big errors in dihedral angles. Inspecting the three dimensional structures visually on the computer screen can be very difficult, since although most of the predicted angles are relatively close to the correct ones, there are a few angles that are predicted with almost the maximum error, 180 degrees. This distorts the overall structure, and the corresponding residues may end up in completely different places and rotations.

The distance matrix of BPTI is shown in Figure 8. The two sides close to the diagonal match rather nicely. The α helices, which are shown in light colour, are otherwise predicted correctly, but there is an extra piece of helix in the lower right part. Only the very start of the β sheet structure (light strand perpendicular to the diagonal) is predicted. One could also imagine seeing parts of the two spherical "eyes" on both sides of the β sheet. The overall structure is elongated, thus the upper right corner is black, which tells that the residues far from each other in the sequence are also far from each other in the three dimensional structure.

Figure 8. The distance matrix of BPTI.

Prediction of the φ and ψ angles of LZ

Human Lysozyme (1LZ1) is an example of a somewhat longer protein chain. It has 130 residues. Again the overall structure of the prediction seems to be nonglobular, Figure 9a. It seems to be put together from smaller regions that are connected by long strands of random coil. The distance matrix in Figure 10 shows the same interesting features. The closely packed regions have good correspondence to the native protein. Especially the upper left corner of the distance matrix, which shows the start of the protein chain, makes almost an exact match. α helices are correctly identified. The β structures in the middle of the matrix seem to have some relation to the native side.

Figure 9. The predicted structure of 1LZ1 (a) and 1PPT (b).

Figure 10. The distance matrix of 1LZ1.

Prediction of the φ and ψ angles of PPT

Avian Pancreatic Polypeptide (1PPT) is an example of a small protein. It has only 36 residues. The native structure of 1PPT is a fold of one long α helix and a shorter strand of β sheet. This can also be seen from the lower left part of the distance matrix, Figure 11. The prediction has correctly produced the helix and the β sheet, but their relative positions are wrong. The secondary structures are correct but their packing is not. This can also be seen in Figure 9b.

Figure 11. The distance matrix of 1PPT.

The user interface

The arrangement of the elements in the graphical user interface for the protein tertiary structure prediction system is shown in Figure 12. This version is built using the OpenWindows Graphical User Interface Design Editor, GUIDE (SunSoft 1991).

The Protein structures list shows the structures that are available on the computer system. Each structure is in a separate file in PDB-format. The system uses only the SEQRES and ATOM records. The training set for the neural network is created by copying structures from the Protein structures list to the Training set list. This is done with the Insert button. First a structure name is highlighted by clicking the mouse button on that entry and then clicking on the Insert button. The system will copy the structure name to the Training set list. If a wrong structure is accidentally inserted into the training set, it can be removed by highlighting the structure name and clicking the Delete button. The PDB dataset is first loaded by giving its location on the computer file system (i.e. the directory containing the files) on the line next to the Load button and then clicking the button. The Training set list shows the names of the structures that will be used when training the neural network. The list can be saved for later use with the Save button. The saved set can be retrieved from the saved file with the Load button. In this way several different training sets can easily be maintained for e.g. predicting the structures in a known protein family.

[Figure 12: window with the Log, Training set, and Protein structures panes, a training time gauge from 0 to 100 %, and fields for the network file, the sequence file in with its type, and the structure file out.]

Figure 12. The graphical user interface.

When the training set has been created, the network can be trained simply by clicking the Train button. Since the training may take longer than one is willing to wait at the keyboard, there is a gauge that shows how far the training has proceeded. The trained network is saved into a file whose name must be given on the line below the Network file heading. In case the network has been trained before with the same training set, there is no sense in training the network again. Thus the network can be retrieved from a previously saved file with the Load button. If the network is already trained or loaded from a file before the Train button is clicked, the training is continued from this old state.

A new structure can be generated by first giving the name of the file containing the sequence information. The file may be either in PDB-format or written with the 1-letter code or the 3-letter code. The type must be indicated by clicking the appropriate button. The name of the file for the prediction result, which is always in PDB-format (with only SEQRES and ATOM records), must also be given. The structure is generated by clicking the Predict button. The prediction phase is very fast compared to the training phase, thus there is no gauge to show its progress.

Everything that is done is echoed in the Log window. Every time a button is pressed or a file name is given the log is updated. You can also make your own notes in the log. The log can be saved into a file for later reference with the Save button.

Discussion and future development

We recall that Holley and Karplus and Qian and Sejnowski have attempted a coding based on physicochemical properties of amino acids. They were not successful in improving over the simple method using singular coding. Perhaps this has blurred the network's view, so that the identity of residues is no longer visible to the network. On the other hand we know that the stereochemical structure is of importance especially in the short range interactions, e.g. in forming the hydrogen bonds, as has been shown by Presta and Rose (1988). Another feasible explanation might be that the performance of the network is not limited by the network's ability to extract the information from the training set, whatever the input coding is, but rather by some other factor inherent in the approach or in the training set.

Using neural network methods for predicting the tertiary structure of a globular protein has its limitations. Some of these are inherent to the neural network approach itself and some arise from the nature of the application. The main limitation is probably the amount of currently available protein structure data. Rooman and Wodak (1988) show that some 1500 proteins with an average length of 180 residues would be needed in their approach to obtain good results in structural prediction. It will take several years to collect the required structural data, and even then there is no guarantee that the new proteins contain the missing information of the sequence-structure mapping.

Also, for a neural network there is always a trade-off between memorization and the ability to generalize. The size of the network plays a major role. As a rule of thumb (which also has theoretical foundations as described by Baum and Haussler 1989), in order to get a good degree of generalization there should be about 10 times as many training examples in the training set as there are weights in the network. In practice this rule holds for every fitting of parameters, if they are nearly linearly independent. The smallest network we are currently using has 2540 weights (154 input nodes, 10 hidden nodes and 100 output nodes). Thus there should be about 25000 training examples. The training set with its 7000 examples is only about one third of the theoretical minimum.
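The arithmetic behind these figures can be checked with a short calculation. The sketch counts only the connection weights between fully connected layers, which reproduces the 2540 quoted above; leaving out bias terms is an assumption made here because it matches that number. The function name is illustrative.

```python
def weight_count(layer_sizes):
    """Number of connection weights in a fully connected layered network."""
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))

weights = weight_count([154, 10, 100])   # 154*10 + 10*100
needed = 10 * weights                    # rule of thumb: ten examples per weight
coverage = 6964 / needed                 # fraction supplied by the 6964 residues
```

With 6964 residues in the training set the coverage is a little over a quarter of the 25400 examples suggested by the rule, in line with the "about one third" stated in the text.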

We conclude that the back propagation neural network has had limited success in predicting the protein backbone dihedral angles. Prediction can be quite close to the correct one over a short sequence of residues. For example, α helices, which are energetically the most favourable structures, are often predicted in their correct places. By now it has become apparent that an attempt to reproduce the whole three dimensional structure from the backbone dihedral angles will be too sensitive to errors in them. What is needed is a representation that is more robust and contains more redundancy. In this respect the distance matrix representation seems to be promising.

As a bottom line we would like to emphasize that the neural network (or in a broader context the artificial intelligence) approach is not per se unsuitable for the prediction of molecular phenomena. Our research is only taking its first steps, and it may have a long way to go before it will prove its feasibility and later yield significant results.

References

Baum, E.B., and Haussler, D. 1989. What Size Net Gives Valid Generalization? Advances in Neural Information Processing Systems 1, Touretzky, D.S. ed., Morgan Kaufmann Publishers, San Mateo, CA.

Bernstein, F.C., Koetzle, T.F., Williams, G.J.B., Meyer, E.F.Jr., Brice, M.D., Rodgers, J.R., Kennard, O., Shimanouchi, T., and Tasumi, M. 1977. The Protein Data Bank: a computer-based archival file for macromolecular structures. J. Mol. Biol. 112: 535-542.

Bohr, H., Bohr, J., Brunak, S., Cotterill, R.M.J., Lautrup, B., Norskov, L., Olsen, O.H., and Petersen, S.B. 1988. Protein secondary structure and homology by neural networks. FEBS Lett. 241: 223-228.

Bohr, H., Bohr, J., Brunak, S., Cotterill, R.M.J., Fredholm, H., Lautrup, B., and Petersen, S.B. 1990. A novel approach to prediction of the 3-dimensional structures of protein backbones by neural networks. FEBS Lett. 261: 43-46.

Cantor, C.R., and Schimmel, P.R. 1980. Biophysical Chemistry, Part 1: The conformation of biological macromolecules. W.H. Freeman and Company.

Clementi, E. ed. 1991. MOTECC Modern Techniques in Computational Chemistry. ESCOM, Leiden.

Friedrichs, M.S., and Wolynes, P.G. 1989. Toward Protein Tertiary Structure Recognition by Means of Associative Memory Hamiltonians. Science 246: 371-373.

Holley, L.H., and Karplus, M. 1989. Protein secondary structure prediction with a neural network. Proc. Natl. Acad. Sci. U.S.A. 86: 152-156.

Kabsch, W., and Sander, C. 1983. How good are predictions of protein secondary structure? FEBS Lett. 155: 179-182.

Lambert, M.H., and Scheraga, H.A. 1989a. Pattern Recognition in the Prediction of Protein Structure. I. Tripeptide Conformational Probabilities Calculated from the Amino Acid Sequence. Journal of Computational Chemistry 10: 770-797.

Lambert, M.H., and Scheraga, H.A. 1989b. Pattern Recognition in the Prediction of Protein Structure. II. Chain Conformation from a Probability-Directed Search Procedure. Journal of Computational Chemistry 10: 798-816.

Lambert, M.H., and Scheraga, H.A. 1989c. Pattern Recognition in the Prediction of Protein Structure. III. An Importance-Sampling Minimization Procedure. Journal of Computational Chemistry 10: 817-831.

Mejia, C., and Fogelman-Soulie, F. 1990. Incorporating knowledge in multilayer networks: The example of proteins secondary structure prediction. Rapport de recherche, Laboratoire de Recherche en Informatique, Univ. de Paris-Sud.

Momany, F.A., McGuire, R.F., Burgess, A.W., and Scheraga, H.A. 1975. Energy Parameters in Polypeptides. VII. Geometric Parameters, Partial Atomic Charges, Nonbonded Interactions, Hydrogen Bond Interactions, and Intrinsic Torsional Potentials for the Naturally Occurring Amino Acids. The Journal of Physical Chemistry 79: 2361-2381.

Presta, L.G., and Rose, G.D. 1988. Helix Signals in Proteins. Science 240: 1632-1641.

Qian, N., and Sejnowski, T.J. 1988. Predicting the Secondary Structure of Globular Proteins Using Neural Network Models. J. Mol. Biol. 202: 865-884.

Rooman, M.J., and Wodak, S.J. 1988. Identification of predictive sequence motifs limited by protein structure data base size. Nature 335: 45-49.

SunSoft 1991. OpenWindows Developer's GUIDE 3.0, User Guide. SunSoft, Mountain View, CA.

Wilcox, G.L., and Poliac, M.O. 1989. Generalization of Protein Structure From Sequence Using A Large Scale Back propagation Network. Proceedings of the International Joint Conference on Neural Networks, IJCNN 1989, Washington, D.C.