ABSTRACT OF THESIS

A NEW SCALABLE SYSTOLIC ARRAY PROCESSOR ARCHITECTURE FOR

DISCRETE CONVOLUTION

Two-dimensional discrete convolution is an essential operation in digital image processing. A targeted performance goal is the ability to simultaneously convolve an (i×j) pixel input image plane with more than one Filter Coefficient Plane (FC) in a scalable manner. Assuming k FCs, each of size (n×n), an additional goal is that the system be able to output k convolved pixels each clock cycle. To achieve these performance goals, an architecture that utilizes a new systolic array arrangement is developed, and the final architecture design is captured using the VHDL hardware description language. The architecture is shown to be scalable when convolving multiple FCs with the same input image plane. The architecture design is validated for function and performance through VHDL post-synthesis and post-implementation simulation testing. In addition, the design was implemented on a Field Programmable Gate Array (FPGA) experimental hardware prototype for further functional and performance testing and evaluation.

KEYWORDS: Systolic Array Processor, Discrete Convolution, Hardware Prototyping,

Scalable Architecture, Parallel Architecture.

________________________

________________________

A NEW SCALABLE SYSTOLIC ARRAY PROCESSOR ARCHITECTURE FOR

DISCRETE CONVOLUTION

By

Albert Tung-Hoe Wong

____________________________

Director of Thesis

____________________________

Director of Graduate Studies

____________________________

RULES FOR THE USE OF THESIS

Unpublished theses submitted for the Master’s degree and deposited in the University of

Kentucky Library are as a rule open for inspection, but are to be used only with due

regard to the rights of the authors. Bibliographical references may be noted, but

quotations or summaries of parts may be published only with permission of the author,

and with the usual scholarly acknowledgements.

Extensive copying or publication of the thesis in whole or in part also requires the

consent of the Dean of the Graduate School of the University of Kentucky.

A library that borrows this thesis for use by its patrons is expected to secure the signature

of each user.

Name

Date

________________________________________________________________________

THESIS

Albert Tung-Hoe Wong

The Graduate School

University of Kentucky

2003

A NEW SCALABLE SYSTOLIC ARRAY PROCESSOR ARCHITECTURE FOR

DISCRETE CONVOLUTION

THESIS

A thesis submitted in partial fulfillment of the

requirements for the degree of Master of Science in Electrical

Engineering in the College of Engineering

at the University of Kentucky

By

Albert Tung-Hoe Wong

Lexington, Kentucky

Director: Dr. J. Robert Heath, Associate Professor of Electrical and Computer

Engineering

Lexington, Kentucky

2003

MASTER’S THESIS RELEASE

I authorize the University of Kentucky

Libraries to reproduce this thesis in

whole or in part for purposes of research.

Signed: _____________________________________

Date: _______________________________________

ACKNOWLEDGEMENTS

The following thesis, while an individual work, benefited from the insights and

direction of several people. First, my Thesis Chair, Dr. J. Robert Heath, exemplifies the

high quality scholarship to which I aspire. In addition, Dr. J. Robert Heath provided

timely and instructive comments and evaluation at every stage of the thesis process,

allowing me to complete this project. Next, I wish to thank the complete Thesis

Committee: Dr. J. Robert Heath, Dr. Hank Dietz, and Dr. William R. Dieter. Each

individual provided insights that guided and challenged my thinking, substantially

improving the finished product. I would also like to thank Dr. Michael Lhamon from

Lexmark Inc. for his technical insights, guidance and comments.

In addition to the technical and instrumental assistance above, I received equally important assistance from family and friends. My wife, Sze Ying Ng, provided ongoing support throughout the thesis process, which enabled me to finish the thesis.


CONTENTS

Acknowledgements............................................................................................................ iii

List of Tables ..................................................................................................................... vi

List of Figures ................................................................................................................... vii

Chapter 1 Introduction .........................................................................................................1

Chapter 2 Background and Convolution Architecture Requirements .................................2

Chapter 3 Version 1 Convolution Architecture ...................................................................7

3.1. Arithmetic Unit (AU) .......................................................................................... 7

3.2. Coefficient Shifters (CSs) ................................................................................. 10

3.3. Input Data Shifters (IDSs)................................................................................. 11

3.3.1. Register Bank (RB) ............................................................................... 12

3.3.2. Pattern Generator Pointers (PGPs) ....................................................... 12

3.3.3. Delay Units (DU).................................................................................. 15

3.4. Systolic Flow of Version 1 Convolution Architecture. .................................... 15

3.5. Data Memory Interface (DM I/F) ..................................................................... 17

3.6. Output Correction Unit ..................................................................................... 19

3.7. Controller .......................................................................................................... 19

Chapter 4 Revised Architectural Requirements and Resulting Version 2 Convolution

Architecture...............................................................................................................21

4.1. Version 2 Convolution Architecture for (k = 1) ............................................... 21

4.2. Arithmetic Unit (AU) ........................................................................................ 22

4.2.1. Multiplication Unit (MU) of Multiplication and Add Unit (MAU) ...... 26

4.2.2. Delay Units (DU).................................................................................. 31

4.3. Input Data Shifters (IDS) .................................................................................. 31

4.4. Data Memory Interface (DM I/F) ..................................................................... 32

4.5. Memory Pointers Unit (MPU) .......................................................................... 32

4.6. Systolic Flow of Version 2 Convolution Architecture ..................................... 34

4.7. Controller .......................................................................................................... 35

4.8. Multiple Filter Coefficient Sets when (k > 1) ................................................... 43

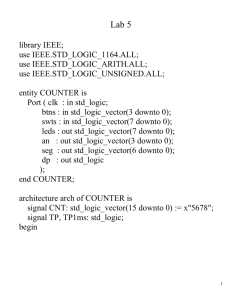

Chapter 5 VHDL Description of Version 2 Convolution Architecture..............................45

iv

Chapter 6 Version 2 Convolution Architecture Validation via Virtual Prototyping

(Post-Synthesis and Post-Implementation Simulation Experimentation).................47

6.1. Post-Synthesis Simulation ................................................................................ 48

6.1.1. Adders ................................................................................................... 48

6.1.2. Multiplication Unit................................................................................ 51

6.1.3. Version 2 Convolution Architecture (with k = 1) ................................. 52

6.2. Post-Implementation Simulation ...................................................................... 61

6.2.1. Synthesis and Implementation of Version 2 Convolution Architecture

(with k = 1)...................................................................................................... 61

6.2.2. Version 2 Convolution Architecture (with k = 1) ................................. 62

6.2.3. Synthesis and Implementation of Version 2 Convolution Architecture (k

= 3) .................................................................................................................. 65

6.2.4. Validation of Version 2 Convolution Architecture (with k = 3)........... 66

Chapter 7 Hardware Prototype Development and Testing ................................................72

7.1. Board Utilization Modules and Prototype Setup .............................................. 73

7.2. Hardware Prototyping Flow.............................................................................. 76

7.3. Test Cases ......................................................................................................... 80

Chapter 8 Conclusion.........................................................................................................84

Appendix A VHDL Code for Version 2 Discrete Convolution Architecture ....................86

Appendix B VHDL codes, C++ source codes and Script file for Post-Synthesis

Simulation ...............................................................................................................133

Appendix C C++ Source Codes for Programs Used During Post-Implementation

Simulation ...............................................................................................................140

Appendix D C++ Source Codes for Programs Used During Hardware Prototype

Implementation .......................................................................................................143

Appendix E VHDL Files for Modules External to the Convolution Architecture...........149

References........................................................................................................................157

Vita .................................................................................................................................159


LIST OF TABLES

Table 3.1. Filter coefficient array.
Table 3.2. 5×5 Filter size (with one output pointer).
Table 3.3. 5×5 Filter size (Convolution with two output pointers).
Table 4.1. Gate count comparison between CSA and CLA.
Table 4.2. A summary of the multiplication.
Table 4.3. Partial Product Selection Table.
Table 4.4. Comparison between method I and method II.
Table 6.1. Details of FPGA on the XESS protoboard.
Table 6.2. Resource utilization of Version 2 Convolution Architecture (with k = 1).
Table 6.3. Resource utilization of Version 2 Convolution Architecture (with k = 3).


LIST OF FIGURES

Figure 2.1. Pictorial view of Input Image Plane (IP), Filter Coefficient Plane (FC), and Output Image Plane (OI).
Figure 2.2. Example showing how two consecutive output pixels are generated. This example is shown with a 3×3 size FC.
Figure 2.3. Example showing that only (n-1) previous rows plus n input image pixels need to be stored. In this example, 2 previous rows (shaded rows in addition to IP23,..,IP25, IP33,..,IP35) plus 3 additional input image pixels (IP43,..,IP45) are needed for a 3×3 filter size.
Figure 3.1. Top-level view of Version 1 of the convolution architecture (d is assumed to be 8 in this example).
Figure 3.2. A MAU and included functional units.
Figure 3.3. Systolic array structures of MAUs, where IDSs are outputs from Input Data Shifters and CSs are the outputs from Coefficient Shifters.
Figure 3.4. Functional units within CSs.
Figure 3.5. Arrangement of the filter coefficients within the Coefficient Shifters.
Figure 3.6. Functional units within IDSs.
Figure 3.7. Generalized RB for n×n filter size. (d denotes number of bits for the input pixels).
Figure 3.8. Additional hardware and modification for convolution of x output pixels in parallel for (x ≤ n) (Functional units shaded in gray are additional hardware required for processing two convolutions in parallel).
Figure 3.9. Organization of Flip-flops within the Delay Unit (DU). R within the figure denotes one flip-flop.
Figure 3.10. Pictorial view of the data flow within the MAUs for one output pixel.
Figure 3.11. Basic functional units within Data Memory I/F.
Figure 3.12. A more detailed look at DM I/F.
Figure 3.13. Time line for activities, where W denotes Write or R denotes Read (from external memory device) registers indicated in boxes directly below.
Figure 3.14. Output pattern for two convolutions in parallel.
Figure 4.1. A top level view of Version 2 of the convolution architecture for one distinct filter coefficient set with n = 5 and d = 8 (MAA denotes Multiplication and Add Array and AT denotes Adder Tree).
Figure 4.2. Functional units within the Multiplication and Add Array (MAA).
Figure 4.3. A MAU and its functional units.
Figure 4.4. A possible arrangement of the AT (R denotes a single flip-flop pipeline stage; a pipeline stage is included within each CSA and CLA) for a 5×5 FC.
Figure 4.5. One possible arrangement of the AT when CLA is utilized within the MAUs.
Figure 4.6. Illustration of the paper and pencil multiplication technique (s on each row of the partial products denotes sign extension of that particular row of partial product).
Figure 4.7. One possible arrangement of Multilevel CSA Tree for six partial products.
Figure 4.8. Multiplier based on Modified Booth’s Algorithm and Wallace Tree Structure.
Figure 4.9. Illustration of multiplication technique based on Modified Booth’s Algorithm.
Figure 4.10. Partial Product’s sign extension reduced for hardware saving.
Figure 4.11. Functional units within the DU for the case of n = 5 and two pipeline stages within each MAU (PL denotes a pipeline stage composed of flip-flop registers).
Figure 4.12. Structural view of the IDS with n = 5 and d = 8.
Figure 4.13. External memory devices organization for n = 5 and d = 8.
Figure 4.14. Functional units within the Memory Pointers Unit (MPU).
Figure 4.15. Pictorial view of the data flow within the MAAs for one output pixel (td denotes the time delay between each MAU).
Figure 4.16. Top level view of the Controller Unit (CU).
Figure 4.17. Functional Units that receive control signals from the CU.
Figure 4.18. System flow chart for Version 2 convolution architecture’s Controller Unit (CU).
Figure 4.19. Modified Version 2 system flow chart.
Figure 4.20. Version 2 architecture for k (n×n) filter coefficient sets (where k can be any number).
Figure 5.1. Version 2 Convolution Architecture organization.
Figure 6.1. Testing model for lower level functional components.
Figure 6.2. Post-Synthesis simulation for 14-bit CLA.
Figure 6.3. A close up view of one segment of Figure 6.2.
Figure 6.4. Post-Synthesis simulation for 15-bit CLA.
Figure 6.5. Post-Synthesis simulation for 16-bit CLA.
Figure 6.6. Post-Synthesis simulation for 17-bit CLA.
Figure 6.7. Post-Synthesis simulation for 19-bit CLA.
Figure 6.8. Post-Synthesis simulation for all possible inputs for the Multiplication Unit (MU).
Figure 6.9. A close up view of one segment of the simulation in Figure 6.8 above.
Figure 6.10. Test case 1 with IP and OI of size 5×60 (however, only the first seven columns of both IP and OI are shown due to report page width limit).
Figure 6.11. The source code for C++ program that generates test vectors to program the filter coefficients into MAUs.
Figure 6.12. Arrangement of the Filter Coefficients within the Arithmetic Unit.
Figure 6.13. First phase of operation; programming of FCs into MAUs.
Figure 6.14. First phase of operation; receiving the first two rows of the IP (shown in figure above is the beginning of the second row of the input pixels).
Figure 6.15. Second phase of operation; output pixels generated.
Figure 6.16. Second phase of operation; output pixels of the second row of OI (superimposed).
Figure 6.17. Third phase of operation; output pixels of the last row of OI (superimposed).
Figure 6.18. Test case 2; IP, FCs and expected OI (the first seven columns).
Figure 6.19. First phase of operation for test case 2.
Figure 6.20. Second phase of operation for test case 2; output pixels shown are the first six of row one of OI (superimposed).
Figure 6.21. Third phase of operation for test case 2; output pixels shown are the first six of the last row for OI (superimposed).
Figure 6.22. Second phase of operation for test case 1 (post-implementation simulation); output pixels of the second row of OI (superimposed).
Figure 6.23. Third phase of operation for test case 1 (post-implementation simulation); output pixels of the last row of OI (superimposed).
Figure 6.24. Second phase of operation for test case 2 (post-implementation simulation); output pixels shown are the first six of row one of OI (superimposed).
Figure 6.25. Third phase of operation for test case 2 (post-implementation simulation); output pixels shown are the first six of the last row for OI (superimposed).
Figure 6.26. Test Case 1: FC planes, IP plane and the predicted OI planes.
Figure 6.27. Superimposed output image pixels (start from the 3rd pixel) for first row of the OIs for test case 1.
Figure 6.28. Superimposed output image pixels (from 3rd pixel onward) of the second row of the OIs for test case 1.
Figure 6.29. Test case 2: FC planes, IP plane and the predicted OI planes.
Figure 6.30. Superimposed output image pixels (start from the 3rd pixel) for third row of the OIs for test case 2.
Figure 6.31. Superimposed output image pixels (from 3rd pixel onward) of the fourth row of the OIs for test case 2.
Figure 6.32. A plot of equivalent system gates versus number of FC planes.
Figure 7.1. Convolution Architecture hardware implementation.
Figure 7.2. XSV-800 prototype board featuring Xilinx Virtex 800 FPGA (picture obtained from XESS Co. website, http://www.xess.com).
Figure 7.3. Top level view of the prototyping hardware.
Figure 7.4. Example of a VHDL file for creating an internal Block RAM containing input image pixels for the convolution system (seed number of 1 is provided to the program).
Figure 7.5. FPGA configuration and bit stream download program, gxsload from XESS Co.
Figure 7.6. Execution of the FCs configuration program.
Figure 7.7. Upload SRAM content using gxsload utility, the high address indicates the upper bound of the SRAM address space whereas the low address indicates the lower bound of the SRAM address space.
Figure 7.8. Uploaded SRAM contents stored in a file (Intel hex file format). There are two segments due to the fact that the program wrote the right bank of the SRAM (16-bit) first and the left bank of the SRAM next (16 MSB bits).
Figure 7.9. SRAM contents retrieved for first OI plane for test case 1.
Figure 7.10. SRAM contents retrieved for second OI plane for test case 1.
Figure 7.11. SRAM contents retrieved for third OI plane for test case 1.
Figure 7.12. SRAM contents retrieved for first OI plane for test case 2.
Figure 7.13. SRAM contents retrieved for second OI plane for test case 2.
Figure 7.14. SRAM contents retrieved for third OI plane for test case 2.

Chapter 1

Introduction

Performance and cost are both important criteria for today’s computing system components, whether the component is an entire computer or a computer accessory or peripheral such as a printer. Consumers’ ever-increasing desire for higher performance has driven printer manufacturers to develop and incorporate performance enhancements into their products at the lowest possible price. Cost generally rises with performance, but manufacturers constantly pursue higher performance at lower cost.

The ability to scan and print exceedingly clear images at a maximum page-per-minute rate and at the lowest cost is a performance metric that printer manufacturers aim for. To produce highly enhanced, clear images, the discrete convolutional-filtering algorithm must be implemented within the scanner or printer. General-purpose signal processors from various vendors are widely used to implement the convolutional-filtering algorithm. Often, manufacturers do not need all of the functionality offered by general-purpose signal processors, so the unused functionality becomes a cost overhead. In addition, commercially available general-purpose processors often cannot meet the desired performance/cost requirement of the highest performance at the lowest cost. Thus, a special-purpose signal/image processor architecture is desired to implement the discrete convolutional-filtering algorithm. The subject of this thesis is the development of an efficient, high-performance, special-purpose signal/image processor architecture that may be used to implement the discrete convolutional-filtering algorithm at the lowest cost.


Chapter 2

Background and Convolution Architecture Requirements

Convolution is one of the essential operations in digital image processing required for image enhancement [15,16]. It is used in linear filtering operations such as smoothing, denoising, and edge detection [15,16]. In general, image processing is carried out in a two-dimensional space/array [16]. A digital image can be represented as a two-dimensional array of numbers. Each number (or pixel) has an associated row and column that indicate its coordinates (position) in the two-dimensional space, and the number’s value represents the gray level at that coordinate [15]. Gray levels are usually represented with a byte, an 8-bit unsigned binary number ranging from 0 to 255 in decimal. Equation 1 shows the two-dimensional discrete convolution algorithm, where IP is the Input Image Plane, FC is the Filter Coefficient Plane, and OI is the Output Image Plane [16].

$$
OI[x,y] = FC[x,y] * IP[x,y] = \sum_{I=0}^{n-1} \sum_{J=0}^{n-1} FC[I,J] \, IP\!\left[x - I + \frac{n-1}{2},\; y - J + \frac{n-1}{2}\right] \tag{1}
$$

Figure 2.1 below shows the basic definitions for the Input Image Plane (IP), Filter Coefficient Plane (FC), and Output Image Plane (OI). If the IP has a size of i×j pixels and the FC has a size of n×n, then the OI also has a size of i×j pixels. In most cases, n<i and n<j.
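As a minimal software sketch of Equation 1 (not the hardware architecture developed in this thesis), the double summation can be evaluated directly for every output pixel. The function name `convolve2d` is hypothetical, n is assumed to be odd, and input pixels that fall outside the IP are assumed to contribute zero; the text above does not specify a boundary treatment, so that choice is purely illustrative.

```python
def convolve2d(ip, fc):
    """Directly evaluate Equation 1 for an i x j image and an n x n filter (n odd)."""
    i, j = len(ip), len(ip[0])
    n = len(fc)
    half = (n - 1) // 2                      # the (n-1)/2 offset in Equation 1
    oi = [[0] * j for _ in range(i)]         # OI has the same i x j size as IP
    for x in range(i):
        for y in range(j):
            acc = 0
            for I in range(n):
                for J in range(n):
                    r = x - I + half
                    c = y - J + half
                    # Assumption: pixels outside the image contribute zero.
                    if 0 <= r < i and 0 <= c < j:
                        acc += fc[I][J] * ip[r][c]
            oi[x][y] = acc
    return oi
```

With a filter whose only nonzero coefficient is a 1 at the center, this sketch returns the input image unchanged, which is a quick sanity check on the index arithmetic.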

Figure 2.1. Pictorial view of Input Image Plane (IP), Filter Coefficient Plane (FC), and Output Image Plane (OI).

Digital convolution can be thought of as a moving window of operations [16]. As shown in Equation 1, one output pixel OI[x,y] can be obtained by rotating the FC 180 degrees around its center point (denoted c within the FC in Figure 2.1) and placing it over the IP with the center point on top of IP[x,y]. Each overlapping IP pixel is multiplied by the corresponding filter coefficient of the FC, and all the products are summed to generate the single pixel OI[x,y]. The next output pixel is obtained by sliding the FC plane one pixel to the right and repeating the process. Figure 2.2 illustrates the idea of the moving window of operations. The FC is first centered at IP[3,4] to compute OI[3,4] and then moves to IP[3,5] to compute OI[3,5].

From Figure 2.2 below, one can deduce that computing an output pixel requires access to entire previous rows, or portions of previous rows, of input pixels. Hence, previous input image pixels must be stored for this purpose. However, not all of the previous rows of input image pixels are needed. Instead, only (n-1) rows plus n input image pixels are required, as the example in Figure 2.3 below shows. Another important observation from Figure 2.2 is that, between consecutive convolutions, only n input image pixels become obsolete and require updating. This observation influences the design of the convolution architecture. Figure 2.3 shows an example for a 3×3 filter size in which the shaded areas of the IP and the area under the FC plane are the input image pixels that need to be stored. These pixels can be stored in a memory device, either on chip or off chip.

[Figure 2.2 diagram: the 3×3 Filter Coefficients (FC00 to FC22) shown centered at IP[3,4] and then centered at IP[3,5].]

Current output, OI[3,4] = FC00IP45 + FC01IP44 + FC02IP43
                        + FC10IP35 + FC11IP34 + FC12IP33
                        + FC20IP25 + FC21IP24 + FC22IP23

Next output, OI[3,5] = FC00IP46 + FC01IP45 + FC02IP44
                     + FC10IP36 + FC11IP35 + FC12IP34
                     + FC20IP26 + FC21IP25 + FC22IP24

Figure 2.2. Example showing how two consecutive output pixels are generated. This example is shown with a 3×3 size FC.
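The two sums in Figure 2.2 follow mechanically from the index arithmetic of Equation 1. The short sketch below regenerates them and also checks the observation that moving the window one pixel to the right brings in only n new input pixels. The helper names `terms`, `as_text`, and `window` are hypothetical and exist only for this illustration; single-digit indices are assumed so that the labels read like those in the figure.

```python
def terms(x, y, n):
    """Pair each FC index (I, J) with the IP index it multiplies in Equation 1."""
    half = (n - 1) // 2
    return [((I, J), (x - I + half, y - J + half))
            for I in range(n) for J in range(n)]

def as_text(x, y, n=3):
    """Render the sum of products in the notation used in Figure 2.2."""
    return " + ".join(f"FC{I}{J}IP{r}{c}" for (I, J), (r, c) in terms(x, y, n))

def window(x, y, n=3):
    """Set of IP positions covered by the FC when it is centered at IP[x, y]."""
    return {pos for _, pos in terms(x, y, n)}

print(as_text(3, 4))
# Input pixels needed for OI[3,5] that were not needed for OI[3,4]:
print(sorted(window(3, 5) - window(3, 4)))
```

For the 3×3 case, the set difference contains three positions, all in column 6, which matches the statement that only n input image pixels need updating between consecutive output pixels.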

Beginning of the

rows

End of the rows

•

•

•

•

•

•

•

•

•

•

IP34

•

IP35

•

IP43

•

IP25

•

IP33

•

•

IP24

IP23

•

•

•

IP44

IP45

Current input image pixel.

The input image pixels

coming in row by row.

Figure 2.3. Example showing that only (n-1) previous rows plus n input image pixels

need to be stored. In this example, 2 previous rows (shaded rows in addition to

IP23,..,IP25, IP33,..,IP35) plus 3 additional input image pixels (IP43,..,IP45) are needed

for a 3×3 filter size.
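
The storage argument above can be checked with a small sketch. The helper names `stored_pixels` and `window` are hypothetical, and the row length of 5100 pixels (8½ in at 600 dpi, taking the short edge as the row direction) is an assumption used only for illustration.

```python
def stored_pixels(n: int, row_length: int) -> int:
    """Pixels to retain: (n - 1) full rows plus n pixels of the current row."""
    return (n - 1) * row_length + n


def window(center_row: int, center_col: int, n: int) -> set:
    """(row, col) indices of the input pixels covered by an n x n FC."""
    half = n // 2
    return {(r, c)
            for r in range(center_row - half, center_row + half + 1)
            for c in range(center_col - half, center_col + half + 1)}


# Two consecutive 3x3 convolutions, as in Figure 2.2.
current = window(3, 4, 3)
following = window(3, 5, 3)
obsolete = current - following   # pixels no longer needed
new = following - current        # pixels that must be supplied

print(sorted(obsolete))          # [(2, 3), (3, 3), (4, 3)]
print(sorted(new))               # [(2, 6), (3, 6), (4, 6)]
print(stored_pixels(3, 5100))    # assumed row length of 5100 pixels
```

Only n = 3 pixels change between the two windows, which agrees with the observation that n input image pixels become obsolete per consecutive convolution.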

Convolution is a vital part of image processing and it can be done through both

software and hardware [1]. Much effort has been directed towards speeding up the

convolution process through hardware implementation [1,2,3,9,14]. This is because

convolution is a computation intensive algorithm as shown in Equation 1. For example,

with a 5×5 filter size, each output pixel requires 25 Multiplication and Addition

Operations (MAOPS). Thus, as the total number of pixels for an image increases,

MAOPS will increase substantially. Bosi and Bois in [1] propose the use of FPGAs

programmed with a 2D convolver as a coprocessor to an existing digital signal processor

(DSP) to speed up the convolution process. In [2,3,9,14], special purpose convolution

architectures are designed to meet real-time image processing requirements. Hsieh and

Kim in [2] proposed a highly pipelined VLSI convolution architecture. Parallel one

dimensional convolutions and a circular processing module were the approaches used in

the architecture for performance gain and the architecture required n×n processing

elements, each being a multiplier and adder. Both [3] and [14] propose convolution

architectures based on systolic arrays which operate on real time images with a size of

512×512 pixels. Also, both of the architectures performed bit-serial arithmetic. The

architecture of [14] requires on chip memory to store the necessary input pixels.

The focus of this thesis is the development of a high performance real time special

purpose convolution architecture which is desired for scanning and printing applications.

The requirement for the final “production” version (implemented with ASIC technology)

of this architecture is the capability to perform convolution with a 5×5 FC size on input

images of size 8½”×11” at a rate of 60 Pages Per Minute (PPM) at 600 dpi (dots per

inch). A total of 33.66M pixels are generated when a standard paper size of 8½”×11” is

scanned at a resolution of 600 dpi. Combined with the requirement to process 60 scanned

PPM, this results in 1.69G MAOPS per second. The multiplication operands are each 8 bits in

width, generating 16-bit products which must be summed. The method proposed in [1] is

not feasible from a cost standpoint and also some of the functionality of the DSP may not

be required. The architectures presented in [2] and [9] are special purpose architectures

for convolution, and both architectures require n×n processing elements which could

potentially occupy a large chip area. The architectures of [3] and [14] are both systolic

array architectures employing bit-serial arithmetic operations and hence may not be able

to meet performance requirements mentioned above. In [7] the authors point out the well

known fact that for most applications bit-parallel arithmetic has a performance edge over

bit-serial arithmetic. However, the processing architecture of [7] is based on bit-serial

arithmetic since it is sufficient for their requirements and has less gate count.
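
The throughput requirement quoted above can be reproduced with a short calculation. This is a sketch only: the variable names are hypothetical, and the reading that each multiplication and each addition is counted as a separate operation is an assumption made here to relate the product of pixels and filter taps to the quoted 1.69G figure; the text does not state how the figure was derived.

```python
from fractions import Fraction

PAGE_WIDTH_IN = Fraction(17, 2)   # 8.5 in
PAGE_HEIGHT_IN = 11               # 11 in
DPI = 600
PAGES_PER_MINUTE = 60
FILTER_SIZE = 5                   # 5x5 FC

pixels_per_page = PAGE_WIDTH_IN * DPI * PAGE_HEIGHT_IN * DPI
pixels_per_second = pixels_per_page * Fraction(PAGES_PER_MINUTE, 60)

# One multiply-add pair per filter coefficient per output pixel.
mac_pairs_per_second = pixels_per_second * FILTER_SIZE ** 2

# Assumption: multiplications and additions counted separately.
separate_ops_per_second = 2 * mac_pairs_per_second

print(int(pixels_per_page))          # 33660000
print(int(mac_pairs_per_second))     # 841500000
print(int(separate_ops_per_second))  # 1683000000
```

Under that assumption the result is about 1.68 × 10^9 operations per second, close to the 1.69G MAOPS stated in the text.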

Two hardware architectures, version 1 and version 2, are proposed for the

implementation of the two dimensional discrete convolution algorithm shown as

Equation 1. Version 1 of the architecture, proposed in the next section, is based on a linear

systolic array structure. Version 2 of the architecture, based on an extension of version 1,

will be shown in a later section. Version 2 of the architecture was developed to meet

some functional and performance requirements different from those of Version 1 of the

architecture.

Version 1 is a special purpose convolution architecture. Unlike [2] and [9], the

architecture will not use n×n processing elements and it will be scalable in order to meet

variable performance requirements. A scalable architecture, when implemented in

Field Programmable Gate Array (FPGA) technology, allows users to

implement the architecture to meet their specific performance needs. Parallel arithmetic

operations will be utilized in the version 1 architecture for performance gain.

Chapter 3

Version 1 Convolution Architecture

Specially designed hardware to implement convolution can offer a performance

gain over a general-purpose Digital Signal Processor (DSP). Since the convolution

algorithm requires a large number of multiplications and additions, a multiprocessor

architecture is desired. Multiprocessor architectures offer the benefit of processing

multiple operations at a given instant.

A specific type of multiprocessor architecture will be utilized for implementation

of convolution in hardware. It is referred to as a systolic array structure [6]. The

advantages of this type of structure include its modularity and regularity in structure and

the ease of pipelining. In other words, the systolic structure has the ability to fully and

simultaneously utilize all computational units within the architecture. A major challenge

in developing systolic array architectures can be in providing data simultaneously to the

multiple computational units in correct order. Figure 3.1 shows the top-level view of

version 1 of the developed hardware convolution architecture with the basic functional

units indicated. An external memory device (external to an FPGA or ASIC chip) will be

utilized to hold a portion of the scanned input image plane during the convolution

process. A more detailed description of the interface between the main system and the

external memory will be presented later. The functionality of all basic functional units of

the architecture of Figure 3.1 will now be described.

3.1. Arithmetic Unit (AU)

The Arithmetic Unit (AU) is the core of version 1 of the convolution architecture.

As shown in Figure 3.1 above, the AU consists of an Accumulator plus Multiplication

and Add Units (MAUs). As the name implies, the basic building blocks within MAUs are

multiplication units and adders. Each MAU consists of one multiplication unit and one

adder as shown in Figure 3.2 below.

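[Figure 3.1 diagram: the Input Image Plane (IP) enters the Data Memory Interface (DM I/F), which connects to the External Memory Device over a 40-bit bus and feeds the Input Data Shifters (IDS0 to IDSn-1). The IDS outputs and the outputs of the Coefficient Shifters (CS0 to CSn-1, loaded with the Filter Coefficients, FC) drive the Multiplication and Add Units (MAU0 to MAUn-1) of the Arithmetic Unit (AU), followed by the Accumulator and the Output Correction Unit, which delivers the Outputs (OI). The Controller coordinates all units. Labeled bus widths: 8, 19, 21, and 40 bits.]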

Figure 3.1. Top-level view of Version 1 of the convolution architecture (d is assumed

to be 8 in this example).

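[Figure 3.2 diagram: the 8-bit IDS output and the 8-bit CS output feed the Multiplication Unit; the Adder sums the 16-bit product with the output from the previous MAU and passes the result to the next MAU. Labeled bus widths: 8, 16, and 17 bits.]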

Figure 3.2. A MAU and included functional units.

As depicted in Figure 3.2 the multiplication unit will multiply two 8-bit binary

numbers, and then the adder will add the product to input from the previous MAU. Output

from the adder will be used as the input to the next MAU. In order to achieve high

performance it is important to utilize high-speed adders and multiplication units within

the architecture. It is also of interest to adopt a multiplication technique that is suitable for

pipelining for performance enhancement. For example, the Wallace Tree multiplication

or array multiplication techniques can be easily pipelined into multiple stages. The

implementation platform influences performance as well. For instance, it is now common

to find high performance built-in core adders and multiplication units within FPGA

technology chips.

[Figure 3.3 diagram: MAU0 to MAUn-1 connected in a chain, each taking one IDS output (IDS0 to IDSn-1) and one CS output (CS0 to CSn-1), with pipeline registers Register0 to Registern-1 clocked by Clk; the last MAU delivers the partial result to the accumulator.]

Figure 3.3. Systolic array structures of MAUs, where IDSs are outputs from Input

Data Shifters and CSs are the outputs from Coefficient Shifters.

The MAUs are arranged in such a way that they create the systolic array structure.

Figure 3.3 shows the systolic array structure of the MAUs. The total number of MAUs

used is determined by the size of the coefficient filter. For an n×n filter size, n MAUs

would be utilized.

Use of the systolic array structure will require the outputs from the Input Data

Shifter (IDS) and Coefficient Shifter (CS) functional units to be skewed. This is to ensure

that the products are added together in the correct sequence. An accumulator is needed

at the end of the structure to add the necessary partial results generated by the MAUs to

form an output pixel. For example, for an n×n filter size, the accumulator must

accumulate n partial results generated by the MAUs in the generation of one output pixel.

This requires n clock cycles if each MAU takes one clock cycle to complete its operation.

The registers to the left of each MAU serve as pipeline stage registers. If necessary,

additional pipeline stages can be implemented within each MAU to increase performance

[8].
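
The bus widths labeled in Figures 3.1 and 3.2 can be cross-checked by computing how many bits a sum of products needs. The sketch below assumes unsigned 8-bit pixels and unsigned 8-bit coefficients, which the text does not state explicitly; the function name `sum_width` is hypothetical.

```python
def sum_width(pixel_bits: int, coeff_bits: int, terms: int) -> int:
    """Bits needed to hold the largest possible sum of `terms` unsigned products."""
    max_product = (2 ** pixel_bits - 1) * (2 ** coeff_bits - 1)
    return (max_product * terms).bit_length()


n = 5  # 5x5 filter
print(sum_width(8, 8, 1))      # 16: a single product
print(sum_width(8, 8, 2))      # 17: a product plus one upstream product
print(sum_width(8, 8, n))      # 19: partial result leaving the last MAU
print(sum_width(8, 8, n * n))  # 21: accumulated output pixel
```

Under the unsigned assumption, these values agree with the 16-, 17-, 19-, and 21-bit labels in the figures for n = 5.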

3.2. Coefficient Shifters (CSs)

Coefficient shifters are a group of parallel register shifters that can be

programmed to retain the values of the filter coefficients. CSs are responsible for

generating a skewed output of filter coefficients to MAU inputs. Figure 3.4 shows a

structural view of the CSs. The number of shifters within the CSs is also dependent on the

coefficient filter size. For an n×n filter size, there will be n coefficient shifters. Each CS

stores n coefficients as seen in Figure 3.4. Once programmed with the filter coefficients,

the CSs will retain the filter coefficients throughout the convolution process. In order to

provide the MAUs with the skewed input from the CS, as the convolution process starts,

CS0 will shift after the first clock cycle, the next clock cycle CS1 and CS0 will shift, the

following clock cycle CS2, CS1, and CS0 will shift. The process continues until all the CSs

are shifting every clock cycle. This will ensure that the MAUs will receive required

skewed input. Figure 3.5 shows the arrangement of the filter coefficients within the CSs

for the convolution algorithm corresponding to the filter coefficients shown in Table 3.1.
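
The start-up schedule of the coefficient shifters described above can be sketched as follows. Clock cycles are numbered from zero here, and the function names are hypothetical; the sketch models only which shifters advance, not the coefficient values they hold.

```python
def shifters_advancing_after(cycle: int, n: int) -> list:
    """Indices of the CSs that shift at the end of the given 0-based clock cycle."""
    return list(range(min(cycle, n - 1) + 1))


def positions_advanced(cs_index: int, cycle: int, n: int) -> int:
    """How far CS `cs_index` has rotated (mod n) at the start of `cycle`."""
    return max(0, cycle - cs_index) % n


n = 5
for cycle in range(6):
    print(cycle, shifters_advancing_after(cycle, n))
```

After cycle 0 only CS0 shifts, after cycle 1 both CS0 and CS1 shift, and from cycle n-1 onward all n shifters advance every cycle, so CSi lags CS0 by i positions.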

[Figure 3.4 diagram: the 8-bit FC input is loaded into the coefficient shifters CS0 to CSn-1; each shifter holds n 8-bit entries (0 to n-1), is clocked by clk, and drives one 8-bit CS output.]

Figure 3.4. Functional units within CSs.

Table 3.1. Filter coefficient array.

FC(0, 0)      FC(0, 1)      ...   FC(0, n-2)      FC(0, n-1)
FC(1, 0)      ...                                 FC(1, n-1)
...
FC(n-1, 0)    FC(n-1, 1)    ...   FC(n-1, n-2)    FC(n-1, n-1)

[Figure 3.5 diagram: the filter coefficients FC(0,0) to FC(n-1,n-1) of Table 3.1 loaded into the n Coefficient Shifters CS0 to CSn-1, each with an 8-bit output.]

Figure 3.5. Arrangement of the filter coefficients within the Coefficient Shifters.

3.3. Input Data Shifters (IDSs)

The main function of the Input Data Shifters (IDSs) is to generate a proper

sequence of input image pixels for the MAUs. Figure 3.6 shows the basic functional units

within the IDSs of Figure 3.1.

[Figure 3.6 diagram: input from the Data Memory Interface enters the Register Bank (RB, n×n registers), which is addressed by the Pattern Generator Pointer(s) (PGPs); the RB output passes through the Delay Units to the MAU inputs.]

Figure 3.6. Functional units within IDSs.

3.3.1. Register Bank (RB)

Due to the structure of the convolution algorithm, each successive output

pixel requires access to the previous ((n×(n-1))+n-1) input image pixels. Hence, the RB

of Figure 3.6 is used to provide the correct input image pixels for successive convolution.

Figure 3.7 shows the detail of the RB. The RB consists of n registers and each register has

a length of n input image pixels or (n×d) bits assuming each input image pixel is d bits in

length. Thus, the RB has the capacity to hold n² input image pixels that are needed for each

convolution. This functional unit and its structure also improve the scalability of the

architecture.

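[Figure 3.7 diagram: input from the Data Memory passes through a demultiplexer (log2 n select lines) into one of the n registers (0 to n-1) of the Register Bank; each register is n×d bits wide (bits 0 to (d×n)-1), and a multiplexer (log2 n select lines) routes the selected register to the delay units.]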

Figure 3.7. Generalized RB for n×n filter size. (d denotes number of bits for the

input pixels).

3.3.2. Pattern Generator Pointers (PGPs)

In order to provide the MAUs with the correct sequence of input image pixels for

each convolution, the Pattern Generator Pointers (PGPs) of Figure 3.6 are utilized. As the

RB fully fills up for each convolution, only one register needs to be updated with new

input image pixels. Thus, the update sequence for the RB (Input image pixels coming

from the Data Memory Interface) will repeatedly go from top to bottom (repeating from

zero to (n-1)). As for the output sequence from the RB, each convolution requires the contents of all

registers to be fetched to the Delay Units. Hence, all functional units except the Data Memory

Interface (DM I/F) run at a frequency n times higher (for an n×n filter size). The DM I/F

operates at the same frequency as the input image pixel rate. Table 3.2 below shows the

output sequence for one output pointer. The output sequence is 0, 1, 2, 3, 4 for n = 5. The

example in Table 3.2 is based on a 5×5 filter size. It is found that the output pattern will

repeat itself every five convolutions.

Table 3.2. 5×5 Filter size (with one output pointer).

Convolution    Reading order from the RB
1st            0, 1, 2, 3, 4
2nd            1, 2, 3, 4, 0
3rd            2, 3, 4, 0, 1
4th            3, 4, 0, 1, 2
5th            4, 0, 1, 2, 3

The architecture can be scaled up to process up to x convolutions in parallel where

(x ≤ n). This is made possible by adding (x-1) additional output pointer(s), Delay Unit(s)

and AU(s) to the existing architecture. As in the example above, the output sequence for

each pointer can be predetermined and they repeat after every five convolutions. Table

3.3 below shows an example for a 5×5 filter size with two output pointers. Figure 3.8

shows the additional hardware required if two convolutions are to be done in parallel and

the figure implies the additional hardware required to convolute x = n points in parallel. In

addition, an Output Correction Unit (OCU) will be needed for convolution of two or

more output pixels in parallel (See OCU in Figure 3.1). The function of the OCU will be

explained in a following section.

As the architecture is scaled up to process more than one convolution in parallel,

all functional units within the architecture except the DM I/F (which runs at the same

frequency as the input image pixels’ rate) can operate at a lower frequency. If the

architecture is scaled up to process n convolutions in parallel, then the whole architecture

operates at the same clock rate as the input image pixels rate. Thus, on average one

convolution can be achieved in every clock cycle. However, the RB needs modification in

order to process n convolutions in parallel. To process n convolutions in parallel, on

every clock cycle all n registers within the RB will be read at once. Thus, the current

input from the DM I/F, which updates one of the registers within the RB, must also be

fetched to the MAUs at the same instant. For this case, n pointers are utilized and each

pointer will have only one sequence instead of the five shown in Table 3.2 and Table 3.3.

Table 3.3. 5×5 Filter size (Convolution with two output pointers).

Convolution    Reading order from the RB    Reading order from the RB
               (pointer 0)                  (pointer 1)
1st            0, 1, 2, 3, 4                1, 2, 3, 4, 0
2nd            2, 3, 4, 0, 1                3, 4, 0, 1, 2
3rd            4, 0, 1, 2, 3                0, 1, 2, 3, 4
4th            1, 2, 3, 4, 0                2, 3, 4, 0, 1
5th            3, 4, 0, 1, 2                4, 0, 1, 2, 3

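[Figure 3.8 diagram: the IDS inputs fill the Register Bank (registers 0 to 4); Pointer0 to Pointern-1 select the registers read out to the Delay Units DU0 to DUn-1, which feed AU0 to AUn-1 together with the CS outputs; the AU outputs pass to the Output Correction Unit.]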

Figure 3.8. Additional hardware and modification for convolution of x output pixels

in parallel for (x ≤ n) (Functional units shaded in gray are additional hardware

required for processing two convolutions in parallel).

The PGPs can be synthesized by using a finite state machine model. Another

modeling possibility is by storing the predetermined sequences into RAM and reading

them out sequentially as needed.
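
The predetermined pointer sequences, whether realized as a finite state machine or stored in RAM, follow a simple modular pattern. The sketch below generates them; the function names are hypothetical, and the rule that pointer p starts its s-th read at register (s·x + p) mod n is inferred from the 5×5 examples in Tables 3.2 and 3.3 rather than stated in the text.

```python
def read_order(start: int, n: int) -> list:
    """Order in which the n RB registers are read, beginning at `start`."""
    return [(start + i) % n for i in range(n)]


def pointer_sequences(n: int, x: int = 1) -> list:
    """For each of the x output pointers, its n successive read orders."""
    if not 1 <= x <= n:
        raise ValueError("x must satisfy 1 <= x <= n")
    return [[read_order((step * x + p) % n, n) for step in range(n)]
            for p in range(x)]


for p, sequence in enumerate(pointer_sequences(5, 2)):
    print("pointer", p)
    for order in sequence:
        print("  ", order)
```

With n = 5 the call `pointer_sequences(5, 1)` yields the single-pointer read orders and `pointer_sequences(5, 2)` the two-pointer read orders; with x = n each pointer repeats one fixed order.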

3.3.3. Delay Units (DU)

Output from the Register Bank (RB of Figure 3.7 and Figure 3.8) will go through

the Delay Units (DU) of Figure 3.8 before being fetched into MAUs within the AUs of

Figure 3.8. The delay units consist of a series of flip-flops placed in a manner that will

generate a skewed input to the MAUs. This is necessary for the AU to generate the correct

outputs. Figure 3.9 below shows the internal structure of a DU.

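[Figure 3.9 diagram: the 40-bit output of the IDS is split into five 8-bit lanes, IDS(39 – 32), IDS(31 – 24), IDS(23 – 16), IDS(15 – 8), and IDS(7 – 0); the lanes pass through a total of ten flip-flops (R), arranged to skew the lanes, before reaching MAU0 to MAU4.]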

Figure 3.9. Organization of Flip-flops within the Delay Unit (DU). R within the

figure denotes one flip-flop.
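
The skewing performed by a Delay Unit can be modeled behaviorally. The sketch below assumes that the lane feeding MAUi passes through i flip-flops, so that MAU0 is undelayed; this mapping is consistent with the ten flip-flops shown for n = 5 (0 + 1 + 2 + 3 + 4) but is an assumption, as is the class name `DelayUnit`.

```python
from collections import deque


class DelayUnit:
    """n lanes; lane i is delayed by i clock cycles (assumed mapping)."""

    def __init__(self, n: int):
        # Each lane starts with i empty (None) flip-flop stages.
        self.lanes = [deque([None] * i) for i in range(n)]

    def clock(self, word):
        """Shift in one n-pixel word; return the skewed word seen by the MAUs."""
        out = []
        for lane, pixel in zip(self.lanes, word):
            lane.append(pixel)
            out.append(lane.popleft())
        return out


du = DelayUnit(3)
print(du.clock(["a0", "a1", "a2"]))  # ['a0', None, None]
print(du.clock(["b0", "b1", "b2"]))  # ['b0', 'a1', None]
print(du.clock(["c0", "c1", "c2"]))  # ['c0', 'b1', 'a2']
```

Pixels that entered together on one cycle therefore reach successive MAUs one cycle apart, which is the skew the systolic array requires.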

3.4. Systolic Flow of Version 1 Convolution Architecture.

In order to further demonstrate how the data flow within the MAUs occurs, Figure

3.10 may be used. As the input image pixels pass through the DU from the RB, skewed

input image pixels are generated and fed to the MAUs of the AU. At the same time,

skewed filter coefficients are also input into MAUs by the CSs. Figure 3.10 below shows

an example of how an output pixel is obtained as it flows through the MAUs with a 5×5

filter coefficient size.

[Figure 3.10 diagram: the 5×5 Filter Coefficient plane (FC00 to FC44) centered at IP[3,4] over input image pixels IP12 to IP56, followed by the partial sums held in MAU0 to MAU4 at clock cycles (c.c.) t0 to (t0 + 8).]

output, OI[3,4] = FC00·IP56 + FC01·IP55 + FC02·IP54 + FC03·IP53 + FC04·IP52
                + FC10·IP46 + FC11·IP45 + FC12·IP44 + FC13·IP43 + FC14·IP42
                + FC20·IP36 + FC21·IP35 + FC22·IP34 + FC23·IP33 + FC24·IP32
                + FC30·IP26 + FC31·IP25 + FC32·IP24 + FC33·IP23 + FC34·IP22
                + FC40·IP16 + FC41·IP15 + FC42·IP14 + FC43·IP13 + FC44·IP12

t0 c.c.:
  MAU0: FC44·IP12
(t0 + 1) c.c.:
  MAU0: FC43·IP13
  MAU1: FC34·IP22 + FC44·IP12
(t0 + 2) c.c.:
  MAU0: FC42·IP14
  MAU1: FC33·IP23 + FC43·IP13
  MAU2: FC24·IP32 + FC34·IP22 + FC44·IP12
(t0 + 3) c.c.:
  MAU0: FC41·IP15
  MAU1: FC32·IP24 + FC42·IP14
  MAU2: FC23·IP33 + FC33·IP23 + FC43·IP13
  MAU3: FC14·IP42 + FC24·IP32 + FC34·IP22 + FC44·IP12
(t0 + 4) c.c.:
  MAU0: FC40·IP16
  MAU1: FC31·IP25 + FC41·IP15
  MAU2: FC22·IP34 + FC32·IP24 + FC42·IP14
  MAU3: FC13·IP43 + FC23·IP33 + FC33·IP23 + FC43·IP13
  MAU4: FC04·IP52 + FC14·IP42 + FC24·IP32 + FC34·IP22 + FC44·IP12
(t0 + 5) c.c.:
  MAU1: FC30·IP26 + FC40·IP16
  MAU2: FC21·IP35 + FC31·IP25 + FC41·IP15
  MAU3: FC12·IP44 + FC22·IP34 + FC32·IP24 + FC42·IP14
  MAU4: FC03·IP53 + FC13·IP43 + FC23·IP33 + FC33·IP23 + FC43·IP13
(t0 + 6) c.c.:
  MAU2: FC20·IP36 + FC30·IP26 + FC40·IP16
  MAU3: FC11·IP45 + FC21·IP35 + FC31·IP25 + FC41·IP15
  MAU4: FC02·IP54 + FC12·IP44 + FC22·IP34 + FC32·IP24 + FC42·IP14
(t0 + 7) c.c.:
  MAU3: FC10·IP46 + FC20·IP36 + FC30·IP26 + FC40·IP16
  MAU4: FC01·IP55 + FC11·IP45 + FC21·IP35 + FC31·IP25 + FC41·IP15
(t0 + 8) c.c.:
  MAU4: FC00·IP56 + FC10·IP46 + FC20·IP36 + FC30·IP26 + FC40·IP16

Figure 3.10. Pictorial view of the data flow within the MAUs for one output pixel.

As shown in Figure 3.10 above, the convolution starts at t0 clock cycle (cc) when

the first input image pixel (IP12) is multiplied by filter coefficient FC44 in MAU0. During

the next clock cycle, (t0 + 1), the previous product from MAU0 is added with the product

of the IP22 and FC34 multiplication in MAU1 while a new product is generated in MAU0

(FC43 and IP13). The sum of the two products in MAU1 will be propagated into MAU2 the

next clock cycle (t0 + 2) and it is then summed with the product generated within MAU2.

The process continues as shown in Figure 3.10 above. An output pixel is generated

during (t0 + 9) cc when partial results from MAU4 at (t0 + 4), (t0 + 5), (t0 + 6), (t0 + 7), and

(t0 + 8) cc are summed by the accumulator. Once the first output pixel is generated on the

9th cc, from then on a new output pixel will be generated every five cc’s.
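
The data flow described for Figure 3.10 can be reproduced with a cycle-by-cycle behavioral model. The sketch below is not the VHDL design; it assumes one pipeline register per MAU, a window indexed so that `window[m][j]` is the pixel MAUm consumes on its j-th active cycle, and coefficient pairing `fc[n-1-m][n-1-j]` as read from the figure. The function names are hypothetical.

```python
def direct_convolve(window, fc):
    """Reference result: the sum of all n*n coefficient-pixel products."""
    n = len(fc)
    return sum(fc[n - 1 - m][n - 1 - j] * window[m][j]
               for m in range(n) for j in range(n))


def systolic_convolve(window, fc):
    """One output pixel computed by a chain of n MAUs plus an accumulator."""
    n = len(fc)
    regs = [0] * n      # pipeline register at the output of each MAU
    acc = 0
    for t in range(2 * n - 1):               # cycles t0 .. t0 + 2n - 2
        new_regs = regs[:]
        for m in range(n):
            j = t - m                        # MAU m lags MAU0 by m cycles
            if 0 <= j < n:
                product = fc[n - 1 - m][n - 1 - j] * window[m][j]
                upstream = regs[m - 1] if m > 0 else 0
                new_regs[m] = upstream + product
        regs = new_regs
        if t >= n - 1:                       # last MAU holds a valid partial result
            acc += regs[n - 1]
    return acc                               # available on cycle t0 + 2n - 1


window = [[r * 5 + c + 1 for c in range(5)] for r in range(5)]
fc = [[(r + 2 * c) % 7 for c in range(5)] for r in range(5)]
print(systolic_convolve(window, fc) == direct_convolve(window, fc))  # True
```

For n = 5 the last MAU delivers partial results on cycles (t0 + 4) to (t0 + 8) and the accumulated pixel is available on (t0 + 9), as in the description above.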

3.5. Data Memory Interface (DM I/F)

It is anticipated that external memory devices will be utilized for IP pixel storage

since the cost of having an on chip memory within the single-chip convolution

architecture of Figure 3.1 is high for any implementation platform. (The following

assessment is made on the assumption that a 5×5 filter size is desired.) The bus for

data transfer between the external memory device and the DM I/F will be 40 bits wide. This will

ensure that each access to the external memory device can yield five input image pixels.

However, since memory devices such as the SRAM devices on the market only come in

widths of 8, 16, and 32 bits, two memory devices will be used: one 8-bit and one 32-bit.

Because consecutive convolutions require only five input image pixels to

be updated, only one access to an external memory device will be required. Figure 3.11

below shows the basic functional units within the DM I/F.

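[Figure 3.11 diagram: the 8-bit input from the scanning device passes through the Zero Padding Hardware; the Cache Unit exchanges 40-bit words with the External Memory Device and provides the 40-bit output to the IDSs.]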

Figure 3.11. Basic functional units within Data Memory I/F.
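
The 40-bit transfer described above, with five 8-bit pixels per access split across a 32-bit and an 8-bit memory device, can be illustrated as follows. The bit assignment (first pixel in bits 7 to 0, upper byte in the 8-bit device) and the function names are assumptions for illustration only.

```python
def pack_pixels(pixels) -> int:
    """Pack five 8-bit pixels into one 40-bit word; pixels[0] occupies bits 7..0."""
    if len(pixels) != 5 or any(not 0 <= p <= 0xFF for p in pixels):
        raise ValueError("expected five 8-bit pixel values")
    word = 0
    for i, p in enumerate(pixels):
        word |= p << (8 * i)
    return word


def split_word(word: int):
    """Split a 40-bit word into the parts stored in the 32-bit and 8-bit devices."""
    return word & 0xFFFF_FFFF, (word >> 32) & 0xFF


def join_word(low32: int, high8: int) -> int:
    """Recombine the two device reads into one 40-bit word."""
    return (high8 << 32) | low32


def unpack_pixels(word: int) -> list:
    """Recover the five 8-bit pixels from a 40-bit word."""
    return [(word >> (8 * i)) & 0xFF for i in range(5)]


word = pack_pixels([1, 2, 3, 4, 5])
low32, high8 = split_word(word)
print(hex(low32), hex(high8))                  # 0x4030201 0x5
print(unpack_pixels(join_word(low32, high8)))  # [1, 2, 3, 4, 5]
```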

A cache unit is utilized to reduce the penalty of accessing external memory

devices. Figure 3.12 shows a more detailed DM I/F. Register Files A and B each consist

of four shift registers, namely Registers b, c, d, and e. Each register is 40 bits in size and

holds five input image pixels. In order to prevent data starvation, Register Files A and B

are used alternately. While Register File A is providing input image pixels to the IDSs,

Register File B is being filled with input image data from the external memory devices, and

vice versa. While either one of the Register Files is outputting input image pixels to the IDSs,

each internal register will shift an input image pixel out by shifting right.

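[Figure 3.12 diagram: 8-bit pixels from the scanning device, or '0' from the zero padding hardware, are collected in Register a and written as 40-bit words to the External Memory Device; 40-bit words read from the External Memory Device fill Register File A or Register File B, one of which supplies the output to the IDSs. Labeled bus widths: 8, 32, and 40 bits.]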

Figure 3.12. A more detailed look at DM I/F.

Observing Equation 1, there are instances where references are made outside the

range of the input image. For these accesses zero pixel values will be used. To address

these boundary conditions, zero padding hardware is incorporated into the DM I/F.

Whenever the end of a row of input image pixels is reached, (n − 1)/2 zero-valued pixels are appended.

Thus, a column counter is needed within the main controller of the architecture.
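
A minimal sketch of this boundary handling is given below. It models only the behavior stated above, appending (n − 1)/2 zeros when the column counter reaches the end of a row; the generator name `padded_stream` is hypothetical, and n is assumed to be odd.

```python
def padded_stream(rows, n: int):
    """Yield pixels row by row, appending (n - 1) // 2 zeros after each row."""
    pad = (n - 1) // 2
    for row in rows:
        column = 0                  # column counter, reset at the start of each row
        for pixel in row:
            yield pixel
            column += 1
        # The column counter now equals the row length: end of row reached.
        for _ in range(pad):
            yield 0


print(list(padded_stream([[1, 2, 3], [4, 5, 6]], 5)))
# [1, 2, 3, 0, 0, 4, 5, 6, 0, 0]
```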

Register a of Figure 3.12 is a register that can hold up to five input image pixels.

As Register a is filled, the contents will be stored into External Memory Devices. There

are also five address pointers needed for addressing the External Memory Devices for

storage of input image pixels. Each addressable location of the two External Memory

Devices can hold up to five input image pixels (40 bits). Figure 3.13 shows the time line

for activities within the DM I/F. For every four reads from External Memory Devices

(read from each pointer once) and one write to store input image pixels stored in Register

a, five output pixels (OI) of Figure 3.1 will be produced.

Figure 3.13. Time line for activities, where W denotes Write or R denotes Read

(from external memory device) registers indicated in boxes directly below.

3.6. Output Correction Unit

This unit is responsible for correcting the output sequence when the architecture is

scaled to process two or more convolutions in parallel. Figure 3.14 below shows an

example of the output pixel sequence when two convolutions are processed in parallel.

Instead of one output on each output clock cycle, two output pixels are generated on a

single clock cycle every two output clock cycles. Thus, the Output Correction Unit may

be needed to correct the output sequence back to one output pixel per one output clock

cycle. Whether the OCU is needed can be addressed at a later time.
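
One way the output sequence could be corrected is with a small first-in first-out buffer that accepts a burst of pixels and releases one per output clock cycle. The sketch below illustrates that idea only; it is not taken from the design, and the function name `correct_output` is hypothetical.

```python
from collections import deque


def correct_output(bursts):
    """Take the pixels produced on each output clock cycle (possibly none)
    and yield one pixel per cycle, or None when nothing is available."""
    fifo = deque()
    for burst in bursts:
        fifo.extend(burst)
        yield fifo.popleft() if fifo else None
    while fifo:                     # drain anything left after the last cycle
        yield fifo.popleft()


# Two convolutions in parallel: two pixels every second output clock cycle.
bursts = [("OI0", "OI1"), (), ("OI2", "OI3"), ()]
print(list(correct_output(bursts)))  # ['OI0', 'OI1', 'OI2', 'OI3']
```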

Figure 3.14. Output pattern for two convolutions in parallel.

3.7. Controller

This is the functional unit of Figure 3.1 that will coordinate all the other

functional units within the architecture. The main controller of the architecture will be

implemented in finite state machine form. Within the main controller, there will be a row

and a column counter to keep track of the row and column counts so that it knows when

the end of a row is encountered. There can be two separate controlling units within the

main controller, one controller responsible for the DM I/F (controller DM) and the other

responsible for the rest of the architecture (controller R). The DM I/F will be running at

the same rate as the input image pixels while the rest of the architecture will be running at

least n times faster assuming convolution of a single point. However, as the architecture

is scaled up to handle x convolutions at the same time (see Figure 3.8), then the controller

R can be run at a correspondingly lower frequency rate.
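
The relationship between the two clock domains can be expressed as a ratio. The text gives the end points (n times the pixel rate for a single convolution, and the pixel rate itself when n convolutions run in parallel); the formula n/x used below for intermediate values of x is an assumption consistent with those end points, and the function name is hypothetical.

```python
from fractions import Fraction


def core_clock_ratio(n: int, x: int = 1) -> Fraction:
    """Ratio of the controller R clock to the input pixel clock (assumed n / x)."""
    if not 1 <= x <= n:
        raise ValueError("x must satisfy 1 <= x <= n")
    return Fraction(n, x)


for x in (1, 2, 5):
    print(x, core_clock_ratio(5, x))
```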

Basically the controller can be divided into three main stages. The first stage is

mainly devoted to storing the first few rows of input image pixels and waiting until there

are enough input image pixels for convolution to start. The second stage is responsible

for filling up the pipeline and making sure that the convolution starts in the correct

manner. The last stage deals with shutting down of the system.

Chapter 4

Revised Architectural Requirements and Resulting Version 2 Convolution

Architecture

The convolution architecture proposed in the previous section is scalable and

suitable for applications that require scalable performance and hardware. In this section a

more stringent performance requirement is addressed for which a convoluted OI pixel is

expected on each clock cycle of 7.3 ns (for a final “production” model based on ASIC

technology). In addition, k distinct n×n FCs are required to be simultaneously convoluted

with each Input Image Plane (IP) resulting in a performance requirement of k OI pixels

on each 7.3 ns clock cycle. The performance requirement of k convoluted OI pixels on

each 7.3 ns clock cycle can only be expected from final high speed production

technologies. In Version 1 of the architecture, filter coefficients (FCs) were assumed to

be 8 bits in length. Filter coefficients will now be 6 bits in length. Even though the

convolution architecture proposed in the previous section can be scaled up and pipeline

stages within the MAUs can also be increased to meet all the above requirements, a

specially tailored architecture can save hardware and reduce the architecture’s controller

complexity. For example, as shown in Figure 3.1, within each AU there is an accumulator

in front of the MAUs. As the architecture is scaled up to process n convolutions in

parallel, n accumulators within the architecture will be required which can be costly from

a hardware standpoint. Furthermore, a simplified controller for the IDSs can also

contribute to a hardware saving. Hence, in this section a modified and specially tailored

convolution architecture will be presented and it is referred to as Version 2 of the

convolution architecture.

4.1. Version 2 Convolution Architecture for (k = 1)

Since the desired output rate is the convolution of one OI pixel per clock cycle,

for an n×n FC size, a total of n² MAUs are needed for one distinct filter coefficient set.

Figure 4.1 below shows a top level view of Version 2 of the architecture where n and d

(width of input image pixels) are assumed to be 5 and 8 respectively. Buses shown in

Figure 4.1 with a width of 40 bits result from (n×d). Each functional unit in this

architecture will implement required functionality as will be addressed below.

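[Figure 4.1 diagram: the Input Image Plane (IP, d bits) enters the Data Memory Interface (DM I/F), which exchanges n×d-bit words with the External Memory Device (Data Memory) and feeds the Input Data Shifters (IDS0 to IDSn-1); each IDS drives one Multiplication and Add Array (MAA0 to MAAn-1) of the Arithmetic Unit (AU) over an n×d-bit bus; the Adder Tree (AT) combines the MAA results into the 19-bit Outputs (OI). The 6-bit Filter Coefficients (FC) are loaded into the AU, and the Controller Unit (CU) coordinates all units.]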

Figure 4.1. A top level view of Version 2 of the convolution architecture for one

distinct filter coefficient set with n = 5 and d = 8 (MAA denotes Multiplication and

Add Array and AT denotes Adder Tree).

4.2. Arithmetic Unit (AU)

As shown in Figure 4.1 above, the Arithmetic Unit (AU) consists of n

Multiplication and Add Arrays (MAAs) plus an Adder Tree Structure (AT) at the end of

the MAAs. Within each MAA there are n Multiplication and Add Units (MAUs) arranged

in a systolic array structure. Figure 4.2 shows the arrangement of the n MAUs within each

MAA.

The basic functional units within each MAU remain the same as in the previous

section. In Version 2 of the architecture, filter coefficients will be held within the MAUs,

therefore, an additional register is needed to hold the filter coefficient value assigned to a

specific MAU. Since Version 2 of the convolution architecture will feature n²

MAUs, the Coefficient Shifters (CSs) shown in Version 1 of the architecture (see Figure

3.1, Figure 3.4 and Figure 3.5) of the previous section can be eliminated. Hence, each of the n²

filter coefficients will be assigned to a specific MAU. Figure 4.3 shows the functional

units within each MAU.

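[Figure 4.2 diagram: within a Multiplication and Add Array (MAA), the IDS output passes through the Delay Units DU0 to DUn-1 to MAU0 to MAUn-1; each MAU holds its filter coefficient and is followed by a pipeline register (Register0 to Registern-1) clocked by Clk; the last MAU delivers the partial result to the Adder Tree.]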

Figure 4.2. Functional units within the Multiplication and Add Array (MAA).

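[Figure 4.3 diagram: the 8-bit DU output and the 6-bit filter coefficient held in the Register feed the Multiplication Unit; the Adder sums the 14-bit product with the output from the previous MAU and passes the result to the next MAU. Labeled bus widths: 6, 8, 14, and 15 bits.]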

Figure 4.3. A MAU and its functional units.

In order to achieve the desired performance, it will be necessary to pipeline all

MAUs beyond the minimum pipeline stages shown in Figure 4.2 (the register to the right

of each MAU represents a pipeline stage). Thus it is important to employ multiply

techniques that can easily be pipelined into multiple stages. It is possible to combine the

multiplication unit and the adder shown in Figure 4.3 into one unit. For the most part, a

multiplication unit usually consists of an adder tree that adds all the generated partial

products. As shown in Figure 4.3 an adder is required to sum the previous MAU output

with the product generated by the multiplier. It is possible to use a Carry Save Adder and

generate the output as two separate outputs (a sum output and a carry output). This will

eliminate the need for another high speed adder at the end of each MAU.
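
The carry-save idea can be illustrated at the bit level. The sketch below shows a standard 3:2 carry-save step on non-negative integers: three operands are reduced to a sum word and a carry word without propagating carries, and the two words together still represent the same total. The function name is hypothetical.

```python
def carry_save_add(a: int, b: int, c: int):
    """3:2 compression: a + b + c == sum_word + carry_word."""
    sum_word = a ^ b ^ c                              # per-bit sum, no carry propagation
    carry_word = ((a & b) | (a & c) | (b & c)) << 1   # per-bit majority, shifted left
    return sum_word, carry_word


# Product from the multiplier plus the sum and carry words from the previous MAU.
product, prev_sum, prev_carry = 13, 7, 9
s, cy = carry_save_add(product, prev_sum, prev_carry)
print(s, cy, s + cy)  # 3 26 29
```

The final carry-propagating addition of the two words is deferred, which is why each MAU would pass two buses instead of one to the next stage.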

The Adder Tree (AT) within the AU is responsible for adding all the n partial

results from the MAAs to form the output image pixel. The AT can be constructed with

Carry Save Adders (CSAs) and a Carry Look Ahead Adder (CLA). In addition, the AT

will be pipelined into multiple stages as well for performance. Figure 4.4 shows a

possible arrangement of CSAs and CLA within the AT. This example is based on a 5×5

FC size.

[Figure 4.4 diagram: the 16-bit sum and carry outputs of MAA0 to MAA4 are reduced by eight CSAs, with flip-flops (R) balancing the pipeline, and a final CLA produces the 19-bit output image pixel.]

Figure 4.4. A possible arrangement of the AT (R denotes a single flip-flop pipeline

stage; a pipeline stage is included within each CSA and CLA) for a 5×5 FC.

Other basic functional units within the AU are the Delay Units (DUs), which are

responsible for generating the skewed input image pixels for the MAUs. However, the

DUs will need to be pipelined as well with the same pipeline stages that the MAA has.

Upon further investigation, even though replacing the high speed adder within a MAU
with a CSA saves a small amount of hardware, the replacement is not as beneficial when
the architecture is reviewed at the highest level. Table 4.1 shows a direct comparison of
the number of gates required for a 14-bit CSA and a 14-bit CLA (an EX-OR gate is
counted as five gates). The 28 gates saved per adder are not as significant as the resulting
increase in hardware for the AT. Figure 4.5 shows a possible arrangement of the AT if a
CLA is utilized within the MAUs.

Table 4.1. Gate count comparison between CSA and CLA.

              CSA    CLA
Gate Count    182    210

[Figure 4.5 diagram: the single 17-bit outputs from MAA0 through MAA4 are reduced by three CSAs, with R registers on the paths that bypass a stage, and a final CLA produces the 19-bit output image pixel.]

Figure 4.5. One possible arrangement of the AT when CLA is utilized within the
MAUs.

First and foremost, a comparison between Figure 4.4 and Figure 4.5 shows that the
arrangement of Figure 4.5 requires fewer CSAs (three instead of eight) and fewer pipeline
stages. This results in a large amount of hardware savings. If a CSA is used within each
MAU, the number of bits (or bus lines) running from one MAU to another is doubled.
Hence, when implemented, the CSA approach will require more real estate within the chip
(especially when implemented as an ASIC) than the CLA approach, thus reinforcing the
need to reduce the number of CSA units. Another important hardware reduction is that the
number of flip-flops required for the pipeline is halved, since only one bus (one output
from each MAA) is required.
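The difference in CSA count between the two arrangements follows from the fact that each CSA takes three operands and produces two, so reducing m operands to the two inputs of the final CLA takes m - 2 CSAs. The short Python sketch below works this out for the ten operands of Figure 4.4 (a sum and a carry from each of five MAAs) and the five operands of Figure 4.5. The function name `csa_count` is a hypothetical helper, not part of the design.

```python
def csa_count(operands):
    """Number of 3:2 CSAs needed to reduce `operands` words to two words."""
    count = 0
    while operands > 2:
        operands -= 1   # each CSA consumes three words and emits two
        count += 1
    return count


n = 5                                   # 5x5 FC, so five MAAs feed the AT
csas_with_csa_maus = csa_count(2 * n)   # sum and carry word from each MAA
csas_with_cla_maus = csa_count(n)       # one resolved word from each MAA
print(csas_with_csa_maus, csas_with_cla_maus)   # prints: 8 3
```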


In conclusion, the adder within each MAU of Figure 4.3 will be a CLA type and

the Adder Tree (AT) of Figure 4.1 will be implemented as shown in Figure 4.5 for the

case of n = 5.

4.2.1. Multiplication Unit (MU) of Multiplication and Add Unit (MAU)

The Multiplication Unit (MU) of Figure 4.3 is one of the most important

arithmetic components within the proposed convolution architecture. Thus, it is important

that a high speed and area efficient multiplication technique be derived and implemented

since the architecture requires 25 MUs for one convolution set. For each MU, an 8-bit

unsigned binary number (IP) is to be multiplied by a 6-bit signed binary number (FC)

and a 14-bit signed binary output (OI) is generated. Table 4.2 below shows a summary of

all the elements involved in the multiplication. All signed binary numbers will be

represented as 2’s complement numbers.

Table 4.2. A summary of the multiplication.

Description     Representation                                  Range (Decimal)
Multiplicand    8-bit unsigned binary number                    0 to 255
Multiplier      6-bit signed binary number (2’s complement)     -32 to 31
Product         14-bit signed binary number (2’s complement)    -8192 to 8191
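The ranges in Table 4.2 can be checked with a few lines of arithmetic. The Python sketch below, in which the helper name `signed_range` is an assumption made for illustration, confirms that every product of an 8-bit unsigned multiplicand and a 6-bit 2’s complement multiplier fits in a 14-bit 2’s complement word.

```python
def signed_range(bits):
    """Inclusive (min, max) range of a 2's complement number with `bits` bits."""
    return -(1 << (bits - 1)), (1 << (bits - 1)) - 1


ip_min, ip_max = 0, (1 << 8) - 1        # 8-bit unsigned multiplicand
fc_min, fc_max = signed_range(6)        # 6-bit signed multiplier
oi_min, oi_max = signed_range(14)       # 14-bit signed product

# The extreme products occur at the largest multiplicand.
smallest = ip_max * fc_min              # 255 * -32 = -8160
largest = ip_max * fc_max               # 255 * 31  =  7905

fits = oi_min <= smallest and largest <= oi_max
print(smallest, largest, fits)
```

Both extremes lie inside the 14-bit range, so the product width in Table 4.2 is sufficient without any overflow handling.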

Multiplication in binary can be done using the same technique as the commonly used
paper and pencil method. Partial products are generated based on each bit of the
multiplier and then all the partial products are summed to generate the product. The
number of partial products required depends on the number of bits of the multiplier.
Hence, as shown in Table 4.2, the 6-bit signed binary number is used as the multiplier
instead of the 8-bit unsigned binary number, because a multiplier with fewer bits results
in fewer partial products. However, since the multiplier in this case is a signed binary
number, for the regular paper and pencil method to work when the multiplier is negative,
both the multiplicand and the multiplier need to be 2’s complemented before the
multiplication. Otherwise, all the partial products are positive and hence the result
generated will be positive as well, which is not correct since a negative multiplier should
yield a negative result. By complementing both the multiplicand and the multiplier, the
signs are switched between the two, but the result remains the same negative value. In
addition, all the partial products need to be sign extended for the multiplication to be
correct. Figure 4.6 illustrates the multiplication concept described above. If the respective
multiplier bit is one, a copy of the multiplicand is placed into the partial product with
sign extension(s); otherwise all zeros are placed.

[Figure 4.6 diagram: the 8-bit multiplicand B7 to B0 is multiplied by the 6-bit multiplier A5 to A0. Six partial products, one for each multiplier bit A0 through A5, are each shifted one bit position to the left of the previous one, sign extended (s), and summed to give the 14-bit product P13 to P0.]

Figure 4.6. Illustration of the paper and pencil multiplication technique (s on each
row of the partial products denotes sign extension of that particular row of partial
product).
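A minimal behavioral sketch of the scheme described above is given below in Python. It assumes that complementing means 2’s complement negation, that the negated multiplier is treated as a 6-bit magnitude (so that -32 becomes 32), and that partial products are sign extended to the 14-bit product width. The function names are hypothetical.

```python
PRODUCT_BITS = 14
MASK = (1 << PRODUCT_BITS) - 1


def to_signed(word, bits=PRODUCT_BITS):
    """Interpret an unsigned `bits`-wide word as a 2's complement value."""
    return word - (1 << bits) if word & (1 << (bits - 1)) else word


def paper_pencil_multiply(ip, fc):
    """Multiply 8-bit unsigned `ip` by 6-bit signed `fc` via partial products."""
    assert 0 <= ip <= 255 and -32 <= fc <= 31
    if fc < 0:
        multiplicand, multiplier = -ip, -fc   # negate both operands
    else:
        multiplicand, multiplier = ip, fc
    total = 0
    for k in range(6):                        # one partial product per multiplier bit
        if (multiplier >> k) & 1:
            # Masking a negative Python int yields its sign-extended 14-bit form.
            partial = (multiplicand << k) & MASK
        else:
            partial = 0
        total = (total + partial) & MASK
    return to_signed(total)
```

For example, `paper_pencil_multiply(255, -32)` negates both operands, forms a single sign-extended partial product from bit 5 of the multiplier, and returns -8160.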

Figure 4.6 shows that the most expensive operation is summing all the partial products
into the final result. An adder that adds six operands at once is difficult to design and
may not be speed efficient. However, a method such as the Multilevel Carry Save Adder
(CSA) Tree [10] can be employed to add all the partial products into a final result. The
Multilevel CSA Tree uses multiple stages of CSAs to reduce the operands to two, and a
final adder stage sums these two operands to generate the final result. Depending on the
speed requirement, the Multilevel CSA Tree can easily be pipelined into multiple stages
to increase throughput. In addition, the final stage adder can be implemented as a fast
adder such as a Carry Lookahead Adder (CLA) to reduce the latency. Figure 4.7 below
shows a possible arrangement of the Multilevel CSA Tree for adding six operands. A five
stage pipeline can be implemented with this configuration.
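The reduction described above can be sketched in Python as follows. The sketch models each CSA as a bitwise 3:2 compressor on 14-bit words and uses an ordinary addition in place of the final CLA. It does not reproduce the exact wiring of Figure 4.7, and the names `csa` and `csa_tree_sum` are illustrative assumptions.

```python
WIDTH = 14
MASK = (1 << WIDTH) - 1


def csa(a, b, c):
    """Carry-save add three words; return (sum_word, carry_word)."""
    sum_word = (a ^ b ^ c) & MASK
    carry_word = (((a & b) | (a & c) | (b & c)) << 1) & MASK
    return sum_word, carry_word


def csa_tree_sum(operands):
    """Reduce operands to two words with CSA levels, then do one final add.

    Returns (result, levels), where levels is the number of CSA levels used.
    """
    words = [w & MASK for w in operands]
    levels = 0
    while len(words) > 2:
        next_words = []
        # Group words in threes; leftover words pass through to the next level.
        full = len(words) - len(words) % 3
        for i in range(0, full, 3):
            next_words.extend(csa(words[i], words[i + 1], words[i + 2]))
        next_words.extend(words[full:])
        words = next_words
        levels += 1
    result = sum(words) & MASK    # stands in for the final-stage adder (CLA)
    return result, levels
```

For six operands this particular grouping uses three CSA levels before the final addition; the grouping and pipeline partitioning in Figure 4.7 may differ.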

It is possible to reduce the hardware count if the number of partial products can be

reduced. This can be done through use of the Modified Booth’s Algorithm (MBA) [13].
The MBA inspects three multiplier bits at a time and generates the respective partial
product selections. In contrast, the original Booth’s Algorithm (BA) [4] inspects two bits
of the multiplier at a time, and hence the number of partial products generated remains
equal to the number of multiplier bits. The MBA can reduce the number of partial
products required to $(\frac{x}{2} + 1)$, assuming $x$ is the number of bits for the

multiplier. Thus, for a 6-bit multiplier, the partial products generated will be reduced

from six to four. However, the Partial Products Generator’s (PPG) complexity is