From: AAAI Technical Report WS-00-03. Compilation copyright © 2000, AAAI (www.aaai.org). All rights reserved.

Resolving Redundancy: A Recurring Problem in a Lessons Learned System

John O. Everett

Daniel G. Bobrow

{jeverett, bobrow}@parc.xerox.com

Xerox Palo Alto Research Center

3333 Coyote Hill Road

Palo Alto, California, 94304

Abstract

The value of a lessons learned collection depends on how readily the right lesson can be retrieved at the right time. In this paper, we discuss one such collection, from the Eureka system for exchanging tips on photocopier repair. Feedback from Xerox photocopier repair technicians using Eureka indicates that redundancy in the knowledge base is a significant impediment to effective use of the collection. To address this, we are starting a project to develop knowledge-intensive approaches to resolving the redundancies that naturally accumulate in a focused document collection with many authors.

Introduction

The Eureka system is a technician-authored, expert-technician-validated set of lessons learned dealing with the repair and maintenance of photocopiers and printers. This paper first describes the origins of this lessons-learned system, and then discusses a significant problem that arises in the use of this successfully fielded system as its knowledge base continues to grow over time: the accumulation of redundant and stale content.

Photocopiers comprise computational, optical, electro-mechanical, and chemical subsystems, which renders field diagnosis and repair a challenging task. Xerox has historically spent much money to provide its technical service force with excellent documentation that guides the technician through diagnosis to repair. Unfortunately, the books were so heavy that technicians left them in their cars unless they ran into an unfamiliar or extremely difficult problem. And since the documentation was so expensive to prepare, it was revised very seldom, and hence became out-of-date in crucial places.

To provide a lower-cost and lighter-weight solution with comparable diagnostic capability, a group of researchers at Xerox PARC embarked on a research program to build an automated diagnostic system that would run on a laptop computer. Based on an abstract model of the copier, the system guided technicians through an optimal path to diagnose problems, computing possible probes from probabilities of failures and expected costs.

A model of one of the more complex copier subsystems was constructed, and a prototype of the system was shown to technicians in the field. They were amazed by what they saw. However, they pointed out that the only problems for which the system would provide guidance were those that arose from known faults, which is where experienced technicians need the least help. Difficult problems arise from the interaction of multiple factors, such as machine age, local climate, and usage patterns, and not from known single point failures. The subtly nuanced reasoning and knowledge of interactions required for a practical diagnosis system were beyond the state of the art in artificial intelligence.

The PARC team then started to study and work with the technicians to understand their work practices. The technicians themselves pointed out (as documented by Julian Orr in Talking About Machines) that war stories exchanged at parts drops and after work often helped them solve their hardest problems. They frequently shared cheat sheets on the current hard problems within their local workgroups. PARC researchers suggested that if they had a way of sharing with other more remote communities, they could all do a better job more easily. The PARC team put together some technology integrated with a process designed with the technicians that would help meet their knowledge sharing needs [Bell et al 1997; Bobrow, Cheslow and Whalen, 1999].

This effort evolved into the Eureka system, which today interconnects thousands of widely distributed technicians with a laptop-based repository of technician-authored tips. Technicians periodically log in to update their tips, and to submit new tips to a set of validators. These validators are themselves senior technicians, who attempt to ensure that only high-quality tips are entered into the tip repository. Each tip includes the author’s name and contact information, which provides a community incentive for sharing through increased visibility and reputation enhancement. It also encourages responsibility, much like the scientific publishing process. Unlike the scientific publishing process, the validators’ names are also provided, since they bear primary responsibility for the quality of the document collection, and continued interaction with them has value to the community.

The Eureka knowledge base is an example both of a lessons learned system and of a more general class that we call a focused document collection. Focused document collections deal with a specific domain (here, copier repair problems). They are used for specific tasks, and grow relatively slowly. Eureka, for example, contains about 30,000 documents, and is growing by 10-20% a year. It is also a valuable corporate asset, since the use of Eureka is estimated to have saved Xerox roughly $50 million since its inception. Technicians unanimously like the system, and Knowledge Management World magazine named Xerox Company of the Year for 1999 based on Eureka.

The Nature of the Problem

As the Eureka document collection continues to grow, the validators who maintain the quality of the knowledge base have found it increasingly difficult to locate potentially redundant tips. This redundancy is problematic because a technician searching for a relevant tip can be overwhelmed by a large number of documents, or confused by similar but not completely consistent ones. Redundancies arise for several reasons. In some cases, several technicians encounter the same problem at about the same time and submit similar tips on how to address it. In other cases, early tips that hypothesize inaccurate diagnoses are corrected by later tips. Validators try to retrieve and take into account earlier tips about the same problem, to minimize redundancy in the database. But relevant tips may not be easily retrieved given the search tools available, and the validators are under time pressure and may not always be able to check thoroughly.

Finally, different authors emphasize different aspects of the problem. For example, some of the tips provide explanations of the issue, others focus on workarounds (what to do until field engineering comes up with a new part to replace one that is unexpectedly failing), and yet others provide only a terse description of the symptoms, how to confirm the problem, and what field engineering specifies as the official fix. We have found many different relationships among similar tips, including contradictions, elaborations, alternative hypotheses, and hints on how to work within the Xerox corporate environment.

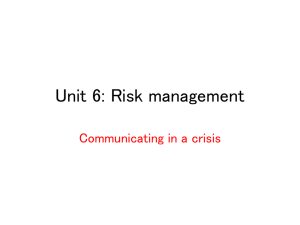

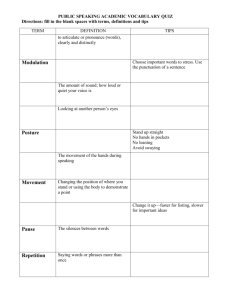

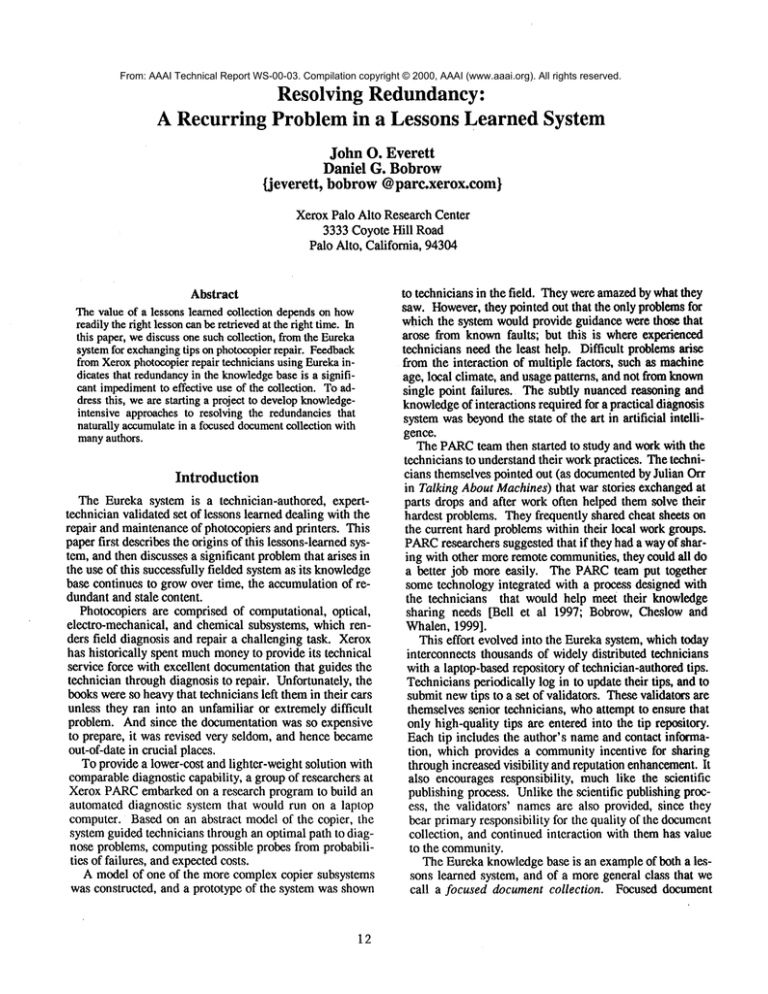

Figure 1 shows three similar (expurgated) tips for a mid-volume photocopier. The first of the three tips postulates a problem in a particular subsystem that is expensive to manufacture and time-consuming to replace. Naturally, field engineering was keen to pinpoint the root cause of problems with this subsystem, so the tip encourages technicians to return defective ones for examination. In the middle tip, however, there is a note toward the end, to the effect that this subsystem is not the problem. Apparently, moving the wiring harness in order to remove the subsystem caused an intermittent connection to work again for a time, leading technicians to believe the subsystem was at fault, and in fact, 95% of those returned were fine. The final tip tersely summarizes the situation as "bimetallic corrosion" (i.e., tin pins going into gold plugs), without any reference to the prior tips.

Clearly, having all three tips in the knowledge base is problematic, especially if a technician were only to look at the first one. Although the first tip should probably be deleted, the second tip contains useful elaborations on the situation that may be of use to new technicians.

There is substantial evidence that this problem is pervasive. There are an increasing number of active users of the database, and they uniformly like the system, but requests for tools to manage the redundancy have increased as well. These requests have also been pressed upon us by many different validators, and over the past eighteen months have taken on a tone of increased urgency. Our own analyses support the field’s contention of significant redundancies in the corpus. A manual analysis of 650 tips turned up 15 pairs (or triples in a couple of cases) of conceptually similar tips. Because technicians tend to use coarse search terms (e.g., "fuser"), search results tend to be large and to contain any similar tips that exist in the database.

To maintain the value of the Eureka system as it continues to scale up, it would be useful to automate (at least partially) the identification and resolution of such redundancies. Whereas people excel at making fine distinctions among small numbers of natural language documents, computers excel at processing larger numbers of documents. There is a clear need to develop tools that play to both of these strengths, by automating the identification of similar tips, but leaving the resolution of redundancy up to the maintainers of the system.

There are two obvious approaches to handling this problem, neither of which works in practice. The first is to ask the technicians to characterize the tip during entry, by selecting keyword terms and the like, or even to have editors "clean up" their work. This would make the tips indistinguishable from other corporate documentation. Of all the information sources available to the technicians, only Eureka is completely under their control. A general refusal to select terms from pull-down lists of metadata intended to more accurately characterize and index the tips arises from the same sentiment.

The second approach is to use standard information retrieval methods, such as word overlap, perhaps extended by latent semantic analysis. We have tried this, and it is of limited value for this corpus, which has characteristics that render it generally impervious to statistical analyses.

The average tip is terse (a little over a hundred words) and liberally sprinkled with jargon, misspellings, and creative departures from generally accepted punctuation and grammar. Similar tips may overlap by as few as fourteen words. Although tips are structured in Problem, Cause, and Solution sections, authors vary in their use of this convention.
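To make the word-overlap baseline concrete, the following minimal Python sketch scores two tips by the fraction of distinct words they share (Jaccard overlap). It is an illustration only, not the method we evaluated: the function names, the tokenization rule, and the two sample texts (paraphrased from the tips in Figure 1) are assumptions introduced here.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase a tip and return its set of distinct word tokens.

    Hyphenated forms such as "e-12" or "bi-metallic" are kept whole
    (an assumed tokenization rule, chosen for this illustration).
    """
    return set(re.findall(r"[a-z0-9]+(?:-[a-z0-9]+)*", text.lower()))


def word_overlap(tip_a: str, tip_b: str) -> float:
    """Jaccard overlap: shared distinct words / all distinct words."""
    a, b = tokenize(tip_a), tokenize(tip_b)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


# Hypothetical paraphrases of two Figure 1 tips about the same fault.
tip_early = "Subsystem failing intermittently with error code E-12"
tip_late = "Intermittent faults in subsystem caused by bi-metallic corrosion"

shared = tokenize(tip_early) & tokenize(tip_late)
score = word_overlap(tip_early, tip_late)
print(sorted(shared), round(score, 3))
```

Under these assumptions, the two texts describe the same fault yet share only the word "subsystem", which illustrates why raw overlap is a weak signal for terse tips.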

Our Approach

The Eureka system is similar in some respects to a case-based reasoning system, but there are significant differences. Although tips may be construed as cases, they are authored by technicians, not knowledge engineers, and they adhere to no common format or metadata convention.

Tip 36321. Problem: Subsystem failing intermittently with error code E-12. Cause: Unknown. Solution: If the subsystem responds to the following procedure, replace it and send the defective one to field

Tip 41023. Problem: Error code E-12. Cause: ...The tin plating on the wire harness pins oxidizes and creates a poor connection. Solution: ..."slide" the connectors on and off. This will scrape the pins and provide a better contact. Long Term: Harnesses with gold plated pins will be available... The subsystem is usually NOT

Tip 46248. Problem: Intermittent faults in subsystem. Cause: Bi-metallic corrosion. Solution: A new kit containing harness with gold plated pins to replace old

Figure 1: Evolution of Knowledge in Eureka

The associated search engine, SearchLite, is a full-text keyword-based system tuned to the particularities of the domain. For example, it recognizes fault codes and part numbers as such, which improves the accuracy of its tokenizer, but it does not rely on explicitly encoded domain knowledge.
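A domain-tuned tokenizer of this kind might be sketched as follows. This is not SearchLite's implementation, which is not described here. The fault-code pattern is modeled on the "E-12" code in Figure 1, and the part-number pattern and the sample sentence are hypothetical.

```python
import re

# Assumed formats, for illustration only: fault codes look like "E-12"
# (as in Figure 1); the part-number shape "123K45678" is hypothetical.
TOKEN_PATTERN = re.compile(
    r"(?P<fault_code>\b[A-Z]-\d{1,3}\b)"
    r"|(?P<part_number>\b\d{3}[A-Z]\d{5}\b)"
    r"|(?P<word>[A-Za-z]+)"
)


def tokenize(text: str) -> list[tuple[str, str]]:
    """Return (kind, token) pairs, keeping codes and part numbers whole."""
    return [(m.lastgroup, m.group()) for m in TOKEN_PATTERN.finditer(text)]


tokens = tokenize("Error code E-12 after replacing harness 123K45678")
for kind, token in tokens:
    print(f"{kind:12s} {token}")
```

Trying the code patterns before the generic word pattern is what keeps "E-12" from being split into a letter and a number; no domain knowledge beyond these surface shapes is encoded.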

Several attempts to have technicians structure their entries (e.g., by tagging them with metadata from pull-down menus) ended in failure. To the technicians, pressed for time and often writing tips after hours, the advantages of natural language text in terms of rapid entry and ease of use were so great that they systematically refused to use other interfaces.

We believe that unstructured but focused document collections such as Eureka present a large class of interesting and relevant problems that will yield to knowledge-intensive methods. Finding the resources for skilled knowledge engineers to maintain such collections is often difficult in organizations under budgetary pressures, so automating aspects of this maintenance may prove quite valuable.

In contrast to much of the on-going research in case-based reasoning, the field most closely allied to this work, our research is deliberately challenging the conventional wisdom that it is not possible to extract useful representations of the underlying contents from unstructured text via natural language processing (NLP). Limited task-focused dialog understanding systems have existed since the 70’s [Bobrow et al 1977], and are now incorporated into commercial speech understanding systems for limited interfaces. However, difficulties encountered in providing a fuller understanding of a significant domain seemed insurmountable at the time. We believe, however, that enough progress has been made in the underlying theory and technologies to warrant another principled attack on this understanding problem.

We intend to apply deep parsing and knowledge representation techniques to the problem of maintaining the quality of the Eureka knowledge base for three reasons. First, we believe that recent technological advances have brought us to the verge of making such techniques commercially feasible, especially when applied to document collections focused on a particular domain, such as photocopier repair. Recent implementations of Lexical Functional Grammar theory [Kaplan and Bresnan 1982] have demonstrated cubic performance in parsing a wide and increasing range of sentences, with small enough constants that an average fifty word sentence can be parsed in a second or less.

Second, the brevity of the tips and the high degree of common knowledge assumed reduce the effectiveness of statistical approaches, while making it more likely that the contents can be represented in terms from a limited domain. We expect techniques for indexing, retrieval, and representation developed by researchers in analogical reasoning, statistical language processing, and case-based reasoning to be complementary. Our hope is that we can achieve higher accuracy with deep representations than would be possible with current state of the art techniques.

Third, we believe that a knowledge-intensive approach will provide the basis for automating other document collection tasks. We will be investigating the viability of creating composite documents from parts of existing tips, in a process we call knowledge fusion.

Related Work

There is an extensive literature on case-based reasoning (CBR) systems, some of which touches directly on the issues of redundancy and knowledge base management that we are addressing. Time and space preclude a thorough review of this literature, so we can only highlight relevant research here. The primary difference between CBR work and our research is that CBR systems generally presume the existence of an infrastructure for encoding cases, whereas we are starting with a large unstructured corpus.

Racine and Yang [1997] address the issue of maintaining a largely unstructured corpus of cases via basic information retrieval methods, such as fuzzy string matching. Although we have found some instances of what could only be multiple edited versions of the same text, for the most part we are looking for conceptual similarities that tend to manifest in texts that have surprisingly little overlap in words used.

Mario Lenz has done some relevant work in textual CBR, in particular defining a layered architecture that includes keyword, phrase (e.g., noun phrase), thesaurus, glossary, feature value, domain structure, and information extraction layers [Lenz 1998]. He and others have implemented several systems based on this architecture, including FAllQ [Lenz and Burkhard 1997] and ExperienceBook [Kunze and Hübner, 1998], and a system for Siemens called SIMATIC Knowledge Manager [www.ad.siemens.de]. An ablation study [Lenz 1998] shows little degradation in performance if the information extraction layer is not used, but significant degradation if domain structure is not utilized, and a major loss in recall and precision if domain-specific phrases aren’t used.

FAQFinder [Burke et al., 1997] attempts to match a query to a particular FAQ (set of frequently asked questions about a topic) and extract from that FAQ a useful response. In contrast to other research here, this system does no a priori structuring of the FAQ corpora, and in this regard is similar to our research. FAQFinder uses a two-stage retrieval mechanism, in which the first stage is a statistical similarity metric on the terms of the query and a shallow representation of lexical semantics utilizing WordNet [Miller, 1995]. This enables the system to match "ex-spouse" with "ex-husband," but it fails to capture causal relations, such as the relation between getting a divorce and having an ex-spouse. The authors point out that developing such a representation for the entirety of the USENET corpora would not be feasible. This supports our strategy of limiting our initial efforts to focused document collections, where we have a reasonable chance of developing sufficient representations for our task. An ablation study shows that the semantic and statistical methods both contribute independently to recall, as their combination produces a higher recall (67%) than either used alone (55% and 58%, respectively).

SPIRE combines information retrieval (IR) methods with case-based reasoning to help users formulate more effective queries [Daniels and Rissland, 1997]. SPIRE starts by retrieving a small set of cases similar to the user’s inquiry, then feeds this to an IR search engine, which extracts terms from these cases to form a query, which is run against the target corpus to produce a ranked retrieval set. SPIRE then locates relevant passages within this set by generating queries from actual text excerpts (marked as relevant by a domain expert during preparation of the knowledge base). The user may add relevant excerpts to the case-base from the retrieval set, thus continuing the knowledge acquisition process during actual use.

Information extraction [Riloff and Lehnert, 1994] is another area of relevant research that may be considered as a mid-point between word-based approaches and deep natural language parsing. By limiting the goal of the system to extracting specific types of information, the natural language processing problem is considerably simplified. Desired information is encoded in a frame that specifies roles, enabling conditions, and constraints.

Leake and Wilson [1998] have proposed a framework for ongoing case-base maintenance, which they define as "policies for revising the organization or contents...of the case-base in order to facilitate future reasoning for a particular set of performance objectives." The dimensions of this framework include data collection on case usage (none, snap-shot, or trend), timing of maintenance activities (periodic, conditional, or ad hoc), and scope of changes to be made (broad or narrow).
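The three dimensions above can be written down as a small data structure, which makes the space of candidate policies explicit. The sketch below is our illustrative encoding in Python, not code from Leake and Wilson; the class and field names are assumptions, and the sample policy is hypothetical rather than a decision we have made for Eureka.

```python
from dataclasses import dataclass
from enum import Enum
from itertools import product


class DataCollection(Enum):
    NONE = "none"
    SNAPSHOT = "snap-shot"
    TREND = "trend"


class Timing(Enum):
    PERIODIC = "periodic"
    CONDITIONAL = "conditional"
    AD_HOC = "ad hoc"


class Scope(Enum):
    BROAD = "broad"
    NARROW = "narrow"


@dataclass(frozen=True)
class MaintenancePolicy:
    """One point in the three-dimensional policy space named in the text."""
    data_collection: DataCollection
    timing: Timing
    scope: Scope

    def describe(self) -> str:
        return (f"usage data: {self.data_collection.value}; "
                f"timing: {self.timing.value}; scope: {self.scope.value}")


# Enumerate every combination of the three dimensions (3 * 3 * 2).
all_policies = [MaintenancePolicy(d, t, s)
                for d, t, s in product(DataCollection, Timing, Scope)]

# Hypothetical example policy, chosen only to show usage.
policy = MaintenancePolicy(DataCollection.TREND, Timing.PERIODIC, Scope.NARROW)
print(len(all_policies), "|", policy.describe())
```

Enumerating the combinations shows that the framework, read this way, admits eighteen distinct policies, so choosing among them against stated performance objectives is a tractable exercise.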

This line of research is important to our project for two reasons. First, there is an extensive body of literature on CBR maintenance techniques which should be directly applicable to our research when we have reached the point of automatically generating reasonable conceptual representations. For example, Smyth and Keane [1995] have investigated methods for determining which cases to delete without impacting performance, many researchers [e.g., Domingos 1995, Aha, Kibler & Albert 1991] have presented methods for automatically generalizing cases, and Aha and Breslow [1997] present a method for revising case indices.

Second, we need to develop a maintenance policy that respects the current work practices and resource constraints of the Eureka validators. Leake and Wilson stress the importance of setting a maintenance policy with respect to a set of performance objectives, which is a key consideration for our project. Watson [1997] provides guidelines for human maintenance of CBR systems.

Project Status

This project is just getting started. We have to date hand-coded knowledge representations for one pair of similar tips, to serve as a benchmark for system development. We hope by year-end to show this working all the way through for a small number of tips from this domain. While it remains to be seen whether or not our approach will produce systems with demonstrably better performance, we believe that a careful reexamination of the conventional wisdom in this area is in order, and has the potential to produce an exciting new direction in symbolic AI research.

References

Aha, D., and Breslow, L. 1997. Refining conversational case libraries. In Proceedings of the Second International Conference on Case-Based Reasoning, 267-278. Berlin: Springer Verlag.

Aha, D.; Kibler, D.; and Albert, M. 1991. Instance-based learning algorithms. Machine Learning 6:37-66.

Bell, David, Daniel G. Bobrow, Olivier Raiman, Mark Shirley. Dynamic Documents and Distributed Processes: Building on local knowledge in field service. In Information and Process Integration in Enterprises: Rethinking Documents, eds. Wakayama, T. et al. Kluwer Academic Publishers, Norwell MA, 1997.

Bobrow, Daniel G., Robert Cheslow, Jack Whalen. Community Knowledge Sharing in Practice. Submitted to Organization Science, 1999.

Bobrow, Daniel G., Ronald M. Kaplan, Martin Kay, Donald A. Norman, Henry Thompson, Terry Winograd. GUS, a frame-driven dialog system. Artificial Intelligence, 8(2):155-173, 1977.

Burke, R., K. Hammond, V. Kulyukin, S. Lytinen, N. Tomuro, and S. Schoenberg. Question Answering from Frequently Asked Question Files. AI Magazine, 18(2):57-66, 1997.

Daniels, J.J.; Rissland, E.L. Integrating IR and CBR to locate relevant texts and passages. Proceedings of the 8th International Workshop on Database and Expert Systems Applications (DEXA), 1997.

Domingos, P. 1995. Rule induction and instance-based learning. In Proceedings of the Thirteenth International Joint Conference on Artificial Intelligence, pp. 1226-1232. San Francisco, CA: Morgan Kaufmann.

Kaplan, Ronald M. and Joan Bresnan. 1982. Lexical-Functional Grammar: A formal system for grammatical representation. In The Mental Representation of Grammatical Relations, ed. Joan Bresnan. The MIT Press, Cambridge, MA, pages 173-281. Reprinted in Formal Issues in Lexical-Functional Grammar, eds. Mary Dalrymple, Ronald M. Kaplan, John Maxwell, and Annie Zaenen, 29-130. Stanford: Center for the Study of Language and Information. 1995.

Kunze, M. and A. Hübner. CBR on Semi-structured Documents: The ExperienceBook and the FAllQ Project. In Proceedings 6th German Workshop on CBR, 1998.

Leake, D.B. and D. C. Wilson. Advances in Case-Based Reasoning: Proceedings of EWCBR-98, Springer-Verlag, Berlin.

Lenz, M. Defining Knowledge Layers for Textual Case-Based Reasoning. In Advances in Case-Based Reasoning, Lecture Notes in Artificial Intelligence 1488, eds. B. Smyth and P. Cunningham, pp 298-309. Springer Verlag, 1998.

Lenz, M. and H.-D. Burkhard. CBR for Document Retrieval - The FAllQ Project. In Case-Based Reasoning Research and Development, Proceedings ICCBR-97, Lecture Notes in Artificial Intelligence 1266, eds. D. Leake and E. Plaza, pages 84-93. Springer Verlag, 1997.

Miller, G. A. 1995. WordNet: A lexical database for English. Communications of the ACM 38(11):39-41.

Racine, K., and Yang, Q. 1997. Maintaining unstructured case bases. In Proceedings of the Second International Conference on Case-Based Reasoning, 553-564. Berlin: Springer Verlag.

Riloff, E., and Lehnert, W. 1994. Information extraction as a basis for high-precision text classification. ACM Transactions on Information Systems 12(3):296-333.

Watson, I. D. Applying Case-Based Reasoning: Techniques for Enterprise Systems. Morgan Kaufmann, 1997.