2.830J / 6.780J / ESD.63J Control of Manufacturing Processes (SMA...


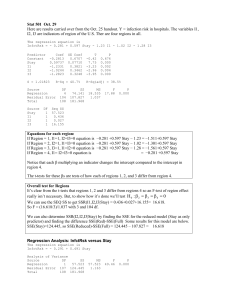

MIT OpenCourseWare
http://ocw.mit.edu

2.830J / 6.780J / ESD.63J Control of Manufacturing Processes (SMA 6303)
Spring 2008

For information about citing these materials or our Terms of Use, visit: http://ocw.mit.edu/terms.

MIT 6.780J 2008: Problem set 8 solutions

Problem 1 (example solution courtesy of M. Imani Nejad)

a) First we calculate the averages and then use the formulas in May and Spanos to find $b$ and $b_0$:

$$\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i, \qquad \bar{y} = \frac{1}{n}\sum_{i=1}^{n} y_i$$

[The numerical values of $\bar{x}$ and $\bar{y}$ are illegible in the source.]

$$b = \frac{\sum (x_i - \bar{x})(y_i - \bar{y})}{\sum (x_i - \bar{x})^2} = \frac{2397.779}{2482.148} = 0.96601, \qquad b_0 = \bar{y} - b\,\bar{x}$$

$$y = 35.387 + 0.966\,x$$

[Figure: Fitted line plot, y = 35.39 + 0.9660 x, showing the regression line with 99% CI and 99% PI bands; S = 9.80695, R-Sq = 45.4%, R-Sq(adj) = 43.5%.]

[Figure: Residual plots for y: normal probability plot, residuals versus fits, histogram of residuals, residuals versus observation order.]

In the above graph the interval is calculated at each point from the following formula:

$$y_0 \pm t_{\alpha/2}\sqrt{V(y)}, \qquad V(y) = \left[\frac{1}{n} + \frac{(x - \bar{x})^2}{\sum (x_i - \bar{x})^2}\right] s^2$$

where the confidence level is 99% (that is, $\alpha = 0.01$).

The regression equation is y = 35.39 + 0.9660 x

S = 9.80695, R-Sq = 45.4%, R-Sq(adj) = 43.5%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 2316.28 | 2316.28 | 24.08 | 0.000 |
| Error | 29 | 2789.11 | 96.18 | | |
| Total | 30 | 5105.39 | | | |

b) We can see in the above graph that 6 points lie outside the 99% confidence interval. So we remove them from the data set and, again using the formula and procedure in part (a), we get new results.
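The part (a) procedure can be sketched in a few lines of Python. This is only an illustration of the slope and intercept formulas, not the code used for the solution: the function name `fit_line` and the small data set are made up for the example, since the problem-set data are not reproduced here. The last line simply redoes the division quoted in part (a).

```python
def fit_line(xs, ys):
    """Least-squares intercept b0 and slope b for the model y = b0 + b*x."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # Sum of cross-products and sum of squares about the means
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    b = s_xy / s_xx
    b0 = y_bar - b * x_bar
    return b0, b


# Hypothetical data lying exactly on y = 1 + 2x (NOT the problem-set data)
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
b0, b = fit_line(xs, ys)

# Slope for part (a), recomputed from the two sums quoted in the text
b_part_a = 2397.779 / 2482.148
print(b0, b, round(b_part_a, 5))
```

Applying the same function to the reduced data set is all that "the formula and procedure in part (a)" requires.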
$$b = 1.2432, \qquad b_0 = 31.84, \qquad y = 31.84 + 1.2432\,x$$

[Figure: Fitted line plot, y = 31.84 + 1.243 x, showing the regression line with 99% CI and 99% PI bands; S = 2.85224, R-Sq = 94.1%, R-Sq(adj) = 93.8%.]

[Figure: Residual plots for y: normal probability plot, residuals versus fits, histogram of residuals, residuals versus observation order.]

In the reduced data the residuals are close to normal, which means we can rely on the fit more than before. We can also run an ANOVA test, with the following result:

The regression equation is y = 31.84 + 1.243 x

S = 2.85224, R-Sq = 94.1%, R-Sq(adj) = 93.8%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 2977.78 | 2977.78 | 366.03 | 0.000 |
| Error | 23 | 187.11 | 8.14 | | |
| Total | 24 | 3164.90 | | | |

The standard error is also smaller than before (2.85 versus 9.81). This is a good indication that the reduced data set is more reasonable, so $b$ and $b_0$ are estimated better from the reduced data.

c) Again we reduce the data by deleting the points that fall outside the 99% confidence level. We get the following results:

$$y = 32.05 + 1.265\,x$$

[Figure: Fitted line plot, y = 32.05 + 1.265 x, showing the regression line with 99% CI and 99% PI bands; S = 1.36336, R-Sq = 98.3%, R-Sq(adj) = 98.2%.]

[Figure: Residual plots for y: normal probability plot, residuals versus fits, histogram of residuals, residuals versus observation order.]

We can see that the residuals are normal, but the new fitted model differs only slightly from the one in part (b). So we perform an ANOVA test and calculate the standard error.
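The entries in these ANOVA tables are linked by simple arithmetic: each mean square is a sum of squares divided by its degrees of freedom, F is the ratio of the mean squares, S is the square root of the error mean square, and R-Sq is the regression share of the total sum of squares. The sketch below checks this against the part (b) table; the function name `anova_summary` is an assumption made for the example, and the default of two fitted parameters reflects the straight-line model.

```python
import math


def anova_summary(ss_regression, ss_error, n_points, n_params=2):
    """F, S and R-Sq for a regression, computed from its sums of squares."""
    df_regression = n_params - 1
    df_error = n_points - n_params
    ms_regression = ss_regression / df_regression
    ms_error = ss_error / df_error
    return {
        "F": ms_regression / ms_error,
        "S": math.sqrt(ms_error),
        "R_sq": ss_regression / (ss_regression + ss_error),
    }


# Sums of squares from the part (b) table; 25 points give 24 total DF
summary_b = anova_summary(2977.78, 187.11, n_points=25)
print(summary_b)
```

The computed values agree with the reported F = 366.03, S = 2.85224 and R-Sq = 94.1% to within the rounding of the tabulated sums of squares.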
The regression equation is y = 32.05 + 1.265 x

S = 1.36336, R-Sq = 98.3%, R-Sq(adj) = 98.2%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 1327.56 | 1327.56 | 714.23 | 0.000 |
| Error | 12 | 22.30 | 1.86 | | |
| Total | 13 | 1349.87 | | | |

So the standard error is even smaller than in part (b). If we continue this cycle we get a better-fitting model each time and eventually a perfect fit, but we should keep in mind that each fit is based on less data. The procedure can be repeated until the standard error reaches an acceptable value.

Problem 2

This was a hard problem and effort will be generously rewarded.

First: proof of May and Spanos Eqn. 8.38.

Let the true model be $y = X\beta + \varepsilon$ and the estimate for $y$ be $\hat{y} = Xb$, where $b$ are the estimates of the model coefficients made using May and Spanos Eqn. 8.37. (Note that $b$ should be $\mathbf{b}$ in the text.) Since $b = (X^T X)^{-1} X^T y$, we have $b - \beta = (X^T X)^{-1} X^T \varepsilon$. Then:

$$
\begin{aligned}
\mathrm{Var}(b) &= E\left[(b - \beta)(b - \beta)^T\right] \\
&= E\left[(X^T X)^{-1} X^T \varepsilon \,\varepsilon^T X (X^T X)^{-1}\right] \\
&= (X^T X)^{-1} X^T \, E(\varepsilon \varepsilon^T) \, X (X^T X)^{-1} \\
&= (X^T X)^{-1} X^T \, \sigma^2 I \, X (X^T X)^{-1} \quad \text{[noise is homoskedastic (has constant variance) and not autocorrelated]} \\
&= \sigma^2 (X^T X)^{-1} X^T I X (X^T X)^{-1} \\
&= \sigma^2 (X^T X)^{-1} = \Sigma_b .
\end{aligned}
$$

$\Sigma_b$ is the variance-covariance matrix for the estimated parameter set.

Then, following the rules for expressing the variance of sums of variables, the variance of the point estimate $\hat{y}^*$ made at $x^* = [1 \;\; x_1 \;\; x_1^2 \;\; x_2]$ is:

$$\mathrm{Var}(\hat{y}^*) = \mathrm{Var}(x^* b) = x^* \Sigma_b \, x^{*T}$$

and the confidence interval is $\hat{y}^* \pm t_{\alpha/2}\,[\mathrm{Var}(\hat{y}^*)]^{1/2}$. The number of degrees of freedom for the $t$ value is [(number of data) − 4] in this case.

Thanks to Shawn Shen for his excellent work on this problem.

Problem 3 (Drain problem 4)

(a), (d)

(b) [courtesy M. Imani Nejad] First line: M = 6, W = 2; second line: M = 2, W = 3:

(c) 0.0016 0.00212 0.0016 0.00264

Problem 4

a) From the lecture, for $c$ control parameters and $n$ noise parameters, the number of tests is given by:

Crossed array: $3^c \cdot 2^n$

Response surface: $2^c + (c+1)\,n$

b) A half-fraction can be used when main effects are not aliased with two-factor interactions. For 2-level (linear model) experiments, this occurs for a $2^{4-1}$ test.
The number of tests is thus:

Response surface: $2^4 + (4+1)\cdot 1 = 21$

Half-fraction response surface: $2^{4-1} + (4+1)\cdot 1 = 13$

Crossed array: $3^4 \cdot 2^1 = 162$

c) There must be an interaction between the noise factors and the control factors; otherwise, the process variation won't depend on the inputs.

d) Run several replicates at each of the controllable input settings. This gives two useful response variables: mean and variance. Least-squares models would then be fit for the mean and the variance, and response surface methods used to find the optimum.
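The run counts in Problem 4(b) follow directly from the part (a) formulas, and a short Python sketch makes the arithmetic easy to repeat for other values of $c$ and $n$. The function names and the `fraction` argument are assumptions made for this example; `fraction=1` stands for the half-fraction $2^{c-1}$ design.

```python
def crossed_array_runs(c, n):
    """Crossed array: 3-level control factors crossed with 2-level noise factors."""
    return 3**c * 2**n


def response_surface_runs(c, n, fraction=0):
    """Response surface: 2**(c - fraction) factorial runs plus (c + 1) * n extra runs."""
    return 2 ** (c - fraction) + (c + 1) * n


# Part (b): c = 4 control parameters, n = 1 noise parameter
print(response_surface_runs(4, 1))              # full factorial response surface
print(response_surface_runs(4, 1, fraction=1))  # half-fraction response surface
print(crossed_array_runs(4, 1))                 # crossed array
```

With $c = 4$ and $n = 1$ the three calls reproduce the counts 21, 13 and 162 given in part (b).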