A Computational Analysis of the Maltese Broken Plural

advertisement

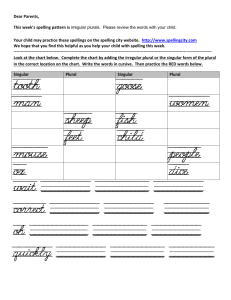

Alex Farrugia
Department of Artificial Intelligence, University of Malta
Email: alexfar@gmail.com

Michael Rosner
Department of Artificial Intelligence, University of Malta
Email: mike.rosner@um.edu.mt

Abstract

Maltese broken plurals have traditionally been treated as a mechanism totally lacking in rules or structure: the accepted view has been that there is simply no relation between the singular and broken plural forms. Tamara Schembri [1], in her B.A. thesis, argues that this view is not entirely correct and offers evidence for regularities governing the transformations between the two forms. In this paper we describe an attempt to examine the computational implications of Schembri’s work for classifying singular nouns and generating the corresponding plural forms. The solution adopted is based on artificial neural networks and involves a pattern associator flanked by an encoding unit on one side and a decoding unit on the other. We experimented with various encoding schemes and parameter settings; after optimisation, the network produces results that correlate closely with Schembri’s theory. The results indicate that, although we are still far from a complete computational model of the broken plural, classification of nouns in their singular form is not impossible and can be achieved with a relatively high degree of accuracy using machine learning techniques.

Index Terms: computational morphology, artificial neural networks, broken plural

1. Introduction

Within Semitic morphology, there are two types of noun and adjective plural forms: sound (regular) plurals and broken (irregular) plurals. Sound plurals are formed by systematically adding a suffix, e.g. omm, ommijiet (mother, mothers), or by changing a final vowel, e.g. karozza, karozzi (car, cars). The relation between singular and sound plural forms is regular. Broken plurals, on the other hand, involve a more diverse set of morphological transformations, including internal vowel changes, e.g. tifel, tfal (boy, boys) and qamar, qmura (moon, moons), redoubling of consonants, e.g. ġdid, ġodda (new), and combinations thereof. Moreover, the relation between the forms is apparently not systematic. This is clearly shown by simple examples such as ratal, rtal (a unit of weight) and ħawt, ħwat (trough, troughs), which have the same plural pattern but different singular patterns.

This view is further substantiated by other texts. For example, “Dwar il-plural miksur m’hemmx regoli” (“There are no rules regarding the broken plural”) is the position taken on Maltese broken plurals in the book Ir-Regoli tal-Kitba tal-Malti (1998). Sutcliffe [2] also points out that it is impossible to induce any rules for their structure. Mons. L. Cachia [3] follows suit: “Il plurali miksura huma varji ħafna u ma nistgħux nagħtu regoli dwarhom” (“The broken plurals display a significant degree of variety and we cannot infer any rules from them”). Cremona [4] further strengthens this point: “Għalhekk fil-Malti l-Plural Miksur bħala regula ma jistax jinbena fuq il-għamla tas-Singular” (“Therefore, as a rule, in Maltese the broken plural cannot be constructed on the basis of the singular form”).

This pessimistic outlook towards inducing rules for the plural miksur has become the accepted one. Borg and Azzopardi-Alexander’s authoritative work on Maltese [5] states that there is no particular connection between the singular form and the plural pattern, and since Sutcliffe’s observations the position has hardly been challenged at all.

Nevertheless, Tamara Schembri [1] has recently offered solid evidence against this position. Although she did not solve the problem of generating the correct plural from the corresponding singular form, she does provide a measure of generalisation by dividing the set of all forms into eleven classes and showing that only some transformations between subclasses are possible. She concludes that the broken plural formation process is not entirely irregular. This partial evidence for a more systematic account of the broken plural is interesting from a computational perspective and is the underlying raison d’être of the present project.

The rest of this paper is organised as follows. Section 2 describes the project’s aims and objectives. Section 3 discusses the main design issues, followed in Section 4 by an account of the implementation. The remainder of the paper presents results and conclusions (Sections 5 and 6 respectively).

The work reported in this paper was carried out by the first author as a final-year project for the B.Sc. IT (Hons) degree at the University of Malta. Some of the material in the paper is drawn verbatim from the project’s final report [6].

2. Aims and Objectives

The main aim of the project is to incorporate the linguistic regularities hinted at by Schembri into a computer program which actually computes the broken plural. Normally, when designing such programs, we already have in mind a set of rules describing regularities, and we then encode those rules in a form the computer can process. Here, no such rules are available; instead, we have a somewhat fragmentary, partial and semi-technical theory of the phenomena at hand. Our first objective was therefore to decide on a computational model suited to this situation. Not surprisingly, we looked toward a data-driven solution based on machine learning, as described further in the next section.
All machine learning systems require an encoding of the problem, and the representation chosen can radically affect the difficulty of learning and hence, given limited resources, the quality of the solution. To illustrate, suppose you have to perform long division using only Roman numerals: the solution procedure is much harder to express than with Arabic numerals, which are therefore a “better” representation for this problem. In the case at hand we are looking for an optimal representation of the linguistic data. A second objective was therefore to investigate which kind of representation works best for learning a solution from the data. The main hypothesis we wanted to test was that a linguistically-based representation, i.e. one based on linguistic features of the word, produces better results than a non-linguistic encoding. We tested this by pitting two encoding methods based on linguistic features against a non-linguistic encoding method. Apart from the linguistic aspect, we also experimented with the neural networks themselves, trying different network architectures and parameter settings in order to investigate their effect on the performance of the system.

3. Design Issues

We considered a number of computational issues before adopting the final design. First of all, we required an underlying computational model for carrying out mappings between singular and plural forms. Finite-state transducers are well known, mathematically elegant and computationally sufficient for this job. Moreover, they have been successfully applied to Semitic morphology (cf. Kiraz [7]). However, the power of related formalisms, such as Beesley and Karttunen’s xfst [8], lies in their ability to express complex algebraic operations like composition over transducers, not in their ability to express generalised transformations.
So in one sense, the xfst formalism is too powerful: we do not need to express such complex operations. In another sense it is too weak since, being finite-state, it requires every form to be either explicitly present in the lexicon or generated dynamically by applying a replace rule to a base form. Generalised transformations, for example those involving variables, cannot be expressed.

We eventually decided on a machine-learning approach that would attempt to learn the regularities present in the original dataset provided by Schembri. This dataset comprises a few hundred words together with their (broken) plurals, and a classification scheme for the plural forms. The basic design adopted was similar to that of Rumelhart and McClelland [9] and, in detail, matched that of Plunkett and Nakisa [10].

The model used to generate the plural forms of singular nouns or adjectives is shown in Figure 1. Words are input to the Encoding Layer, which converts each word to its phonetic representation and then transforms this into a binary feature string. This string is passed to the input layer of the network, which feeds the inputs forward through the computational units of the hidden and output layers. The resultant binary string is then passed from the output layer to the Decoding Unit, which transforms it into a grapheme string.

Figure 1. Architecture of the network used in the generation process

Similarly, the networks created for categorisation purposes have identical encoding and input layers (see Figure 2). However, the architecture then proceeds in a cascading fashion: the first hidden layer is followed by two smaller hidden layers and an output layer containing one output node for each category provided by Schembri’s theory.

Figure 2. Architecture of the network used in the categorisation process

Both types of network made use of a template similar to that used by Plunkett and Nakisa [10] for aligning words in the input layer.
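As a rough illustration of this kind of alignment, the Python sketch below fills a fixed template of alternating vowel (V) and consonant (C) slots, starting with a vowel slot, and leaves an empty string in any slot that does not match the next segment of the word. It is a hypothetical reconstruction, not the project’s code: the simplified vowel inventory, the treatment of the digraphs ‘ie’ and ‘għ’ as single segments, and the names `segment`, `slot_type` and `align` are all assumptions, and the conversion of each slot into binary features is omitted.

```python
# Hypothetical sketch of template alignment; not the original implementation.
VOWELS = {"a", "e", "i", "o", "u", "ie"}  # assumption: simplified vowel inventory
DIGRAPHS = ("ie", "għ")                   # assumption: digraphs kept as single segments
TEMPLATE = "VC" * 10 + "V"                # 21 slots: "VCVCVCVCVCVCVCVCVCVCV"


def segment(word: str) -> list[str]:
    """Split a word into graphemes, keeping the digraphs 'ie' and 'għ' whole."""
    segments, i = [], 0
    while i < len(word):
        pair = word[i:i + 2]
        if pair in DIGRAPHS:
            segments.append(pair)
            i += 2
        else:
            segments.append(word[i])
            i += 1
    return segments


def slot_type(seg: str) -> str:
    """Classify a segment as a vowel ('V') or a consonant ('C')."""
    return "V" if seg in VOWELS else "C"


def align(word: str, template: str = TEMPLATE) -> list[str]:
    """Fill template slots left to right; a slot whose type does not match
    the next segment of the word receives an empty string."""
    segments = segment(word.lower())
    aligned, k = [], 0
    for slot in template:
        if k < len(segments) and slot_type(segments[k]) == slot:
            aligned.append(segments[k])
            k += 1
        else:
            aligned.append("")
    if k < len(segments):
        raise ValueError(f"{word!r} does not fit the template")
    return aligned
```

For example, `align("kaxxa")` begins `['', 'k', 'a', 'x', '', 'x', 'a']` and pads the remaining slots with empty strings, so the root consonants k, x, x always land in consonant slots regardless of the surrounding vowel pattern.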
The template consisted of an alternating series of vowel and consonant slots (VCVCVCVCVCVCVCVCVCVCV). A word is aligned to it by inserting an empty string wherever a slot does not correspond to the next segment of the word; for example, ‘kaxxa’ produces: ‘’, ‘k’, ‘a’, ‘x’, ‘’, ‘x’, ‘a’, ‘’, ‘’, ‘’, ‘’, ‘’, ‘’, ‘’, ‘’, ‘’, ‘’. This is useful because of the root-and-pattern morphology of broken plurals: in most forms, the root consonants of the singular are retained in the plural. The same template was also used in the output layer of the generation networks.

4. Implementation

Three main methods of encoding were used.

4.1. Phonetic Features

The first, labelled the “Phonetic Features Approach”, relies on a grapheme-to-phoneme conversion process which offers a very faithful rendition of the underlying phonetics of each word. The decoding process (phoneme-to-grapheme conversion) is less clear-cut, partly due to a lack of linguistic data. For this reason we decided to extend the set of Maltese phonemes handled, so that the encoding process could be fully reversed. Examples of such cases include silent graphemes such as ‘h’ or ‘għ’, and words which make use of the word-final devoicing rule (see Borg and Azzopardi-Alexander [5]). This approach made use of a set of 74 distinct phoneme symbols, most of which were actually geminates, slight variants or allophones of particular phonemes.

For the implementation we adapted MGPT, a grapheme-to-phoneme transcriber created by Sinclair Calleja [11], to include the extended alphabet required by this encoding method. We also created a phoneme-to-grapheme component which made use of most of the rules used by the MGPT module, together with some extra rules needed to complete the reverse conversion. Each phoneme had a unique binary representation made up of 27 distinct linguistic features.

4.2. Features Lite

The second encoding method, similar to the first, was dubbed “Features Lite”. It took a much more general approach to converting from graphemes to phonemes and vice versa, based on a 1:1 mapping of graphemes to phonemes, which produced a much smaller set of unique phonemes. Consequently, the feature set was also different and slightly smaller, with a total of 23 distinct features needed to fully represent our alphabet. Refer to Figure 3 for a graphic explanation of this encoding process.

Figure 3. Features Lite Encoding Process

4.3. Grapheme Encoding

Finally, the “Grapheme Encoding” method is based on Maltese orthography rather than on any linguistic principles: it encodes a word without considering the grapheme-to-phoneme process, by assigning an arbitrary binary string to each possible character. Six bits were necessary to uniquely represent each grapheme. This method was designed specifically to test the hypothesis that an encoding method based on phonetics is more likely to produce better results than an arbitrary one.

The networks used the back-propagation algorithm (first described by Werbos [12] and subsequently adopted by the connectionist community) for learning. We also made use of parameters such as momentum and input gain in order to optimise performance.

5. Results

5.1. Cross Validation

The main method used to test both the generation and the classification networks is known as cross-validation. The dataset is partitioned into a number of subsets; the system is trained on all of the subsets except one, which is used to test the system, and this is repeated for each subset in turn.
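The partitioning just described can be sketched in a few lines of Python. This is an illustrative assumption rather than the project’s actual procedure: the text does not say whether or how the data were shuffled, so the fixed-seed shuffle and the function name `k_fold_splits` are hypothetical. With `k` equal to the number of items, the same function performs leave-one-out cross-validation.

```python
import random
from typing import Iterator, Sequence, TypeVar

T = TypeVar("T")


def k_fold_splits(items: Sequence[T], k: int = 10,
                  seed: int = 0) -> Iterator[tuple[list[T], list[T]]]:
    """Yield (train, test) pairs, holding out each of k folds in turn."""
    if not 2 <= k <= len(items):
        raise ValueError("k must lie between 2 and the number of items")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)        # assumption: one shuffle, fixed seed
    folds = [shuffled[i::k] for i in range(k)]   # fold sizes differ by at most one
    for i, test in enumerate(folds):
        # Training data: every item from every fold except the held-out one.
        train = [x for j, fold in enumerate(folds) if j != i for x in fold]
        yield train, test
```

For instance, calling `k_fold_splits(pairs, k=5)` on the five singular/plural pairs quoted in the introduction yields five splits of four training pairs and one test pair each, which is exactly leave-one-out; each network would be trained on `train` and scored on `test`.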
Ideally, leave-one-out cross-validation would have been used (this entails partitioning the dataset into as many subsets as it has members). However, on a system such as ours this would require a prohibitive amount of training time, so we opted for k-fold cross-validation instead, in which the training set is divided into k equally sized subsets. We chose k = 10, since this value balances the small size of our dataset against the time needed to train the whole system.

We also made use of an extra dataset of nonsense words taken from Tamara Schembri’s project. Although it only spanned the first four categories, it helped us test the system on new forms.

Throughout training, an important assumption was made regarding nouns with several different plural forms. Any one of these forms was deemed correct as far as the network’s output was concerned; however, if the network’s output for such a noun did not exactly match any of its possible forms, we used the first form (according to the class hierarchy) to train the system and calculate its error. We based this assumption on the fact that, statistically speaking, the most important forms are clearly the ones at the very top of the hierarchy; in fact, the classes appear to be labelled in descending order of frequency.

Throughout the project, the feature sets evolved gradually, either in response to new linguistic information we had uncovered or as experiments to assess how removing or adding a particular feature affected the output. One of the main conclusions we can draw from the linguistically-based approaches is that, in phonetics, certain defaults are much more important than previously assumed.
For example, most consonants are by default central, and vowels are considered to be both sonorant and voiced. We noticed that specifying these as defaults improved the performance of the system radically.

5.2. Generation Model Performance

We now report on the performance of the generation model. Initially we tested our model using a hidden layer containing twice as many neurons as the input and output layers. This delivered relatively good generalisation results. However, further testing showed that increasing the hidden layer to three times the size of the other layers increased performance on novel forms by about 3% [...].

We also noticed that increasing the number of neurons increases the amount of variance between forms and hence adds to the complexity of the function to be learnt, so the learning rate had to be decreased. A value of -0.03 was assigned, offering a good trade-off between the speed of convergence and the ability of the network to learn the whole set. Given much more time, the optimal value would most probably be around -0.01; however, the number of connections in this type of network made training a very time-consuming process. Momentum, on the other hand, was set to 0.9 to compensate for the lower learning rate and speed up the process.

5.3. Performance of Different Encoding Methods

We also tested the different encoding methods on separate networks. The “Grapheme Encoding” method managed to learn about 52% of the set after around 3000 epochs, whereas the “Phonetic Features” method did not manage to learn more than around 40% [...] used. We think that the former was restricted by its simplicity and lack of representational power, whereas the latter went to the other extreme, offering a representation too rich and specific for such a dataset and such a problem.
The Features Lite method, on the other hand, offered a good balance, learning very close to all of the set (around 98% [...]). Unfortunately, this model did not generalise to new forms as well as the categorisation model, providing an average of 26.6% correct generations.

The results indicate that Schembri’s analysis of the singular-to-plural correspondences was correct. Category B, although less productive than Category A, yielded better performance. As Category H shows, some forms were barely significant statistically, yet they were still learnt reasonably well. We also noted that Category B-type plurals were sometimes produced from singular nouns or adjectives belonging to other forms.

The types of error generated suggest that the encoding method, coupled with the input and output layer template, was not the best way of representing words and presenting them to the network. The fact that even the radicals were sometimes changed when mapping from the singular to the plural form suggests that our system did not place enough emphasis on their importance. We are also unsure why the vowel patterns are sometimes confused. However, in Schembri’s work on nonsense words, native speakers generated plurals for the same novel forms using different vowel patterns, so one might say that these results emulate this sort of behaviour.

6. Conclusion

These results show that the Maltese broken plural is a highly complex system and that modelling it is easier said than done. However, we have also seen that most of the regularities present in a given dataset can be learnt by a neural network given a suitable encoding. We have also shown that categorisation of nouns in their singular form is not impossible and can be achieved with a relatively good degree of accuracy.
We are confident that, with further improvements to the design reported in this paper, a system which performs even better at categorisation can be built.

We also confirmed the hypothesis that a phonetically-based encoding can yield much better results than a non-linguistic one. This seems to confirm Rumelhart and McClelland’s [9] belief that phonetics plays an important role in learning and acquiring a language. However, this conclusion is relative to the data at hand and is underspecified, since there are many potential phonetic representations; finding out which phonetic representation is best requires further investigation on larger datasets. This work involved improving Calleja’s MGPT system [11], an existing grapheme-to-phoneme conversion component which is not only efficient but also includes a rule set that is accessible and easy for non-programmers to understand. We also changed and adapted its rules so that the process could be reversed for phoneme-to-grapheme conversion.

Overall, the evidence suggests that a neural approach to Maltese morphological analysis is promising, at least for broken plurals, in that it allows us to avoid explicitly programming the classification task as rules. The generation model, however, did not perform very well when generalising to unseen examples. The real questions to investigate next are how much of Maltese morphology can be covered in this way, and how a machine learning approach can be integrated with those parts of Maltese morphology that at first sight are better handled by explicit morphological rules composed by experts in linguistics.

References

[1] T. Schembri, “The broken plural in Maltese: an analysis of the Maltese broken plural,” University of Malta, Tech. Rep., 2006, unpublished B.Sc. thesis.
[2] E. Sutcliffe, A Grammar of the Maltese Language. Progress Press, 1924.
[3] L. Cachia, Regoli Tal-Kitba Tal-Malti. Valletta: Klabb Kotba Maltin, 1997.
[4] A. Cremona, Tagħlim Fuq il-Kitba Maltija. Valletta: Union Press, 1975.
[5] A. Borg and M. Azzopardi-Alexander, Maltese. London: Routledge, 1997.
[6] A. Farrugia, “Maltimorph: A computational analysis of the Maltese broken plural,” University of Malta, Tech. Rep., 2008, unpublished B.Sc. thesis.
[7] G. Kiraz, Computational Nonlinear Morphology, with Emphasis on Semitic Languages. Cambridge, UK: Cambridge University Press, 2001.
[8] K. R. Beesley and L. Karttunen, Finite State Morphology, ser. CSLI Studies in Computational Linguistics. Stanford: CSLI Publications, 2003. [Online]. Available: http://linguistlist.org/pubs/books/getbook.cfm?BookID=6754
[9] D. Rumelhart and J. L. McClelland, Parallel Distributed Processing: Explorations in the Microstructure of Cognition. Cambridge, MA: MIT Press, 1986.
[10] K. Plunkett and R. C. Nakisa, “A connectionist model of the Arabic plural system,” Language and Cognitive Processes, vol. 12, no. 5–6, pp. 807–836, 1997.
[11] S. Calleja, “Maltese speech recognition over mobile telephony,” Master’s thesis, University of Malta, 2004.
[12] P. J. Werbos, The Roots of Backpropagation: From Ordered Derivatives to Neural Networks and Political Forecasting. New York, NY, USA: Wiley-Interscience, 1994.