SOCIAL MEDIA AND SELECTION: HOW DOES NEW TECHNOLOGY CHANGE AN

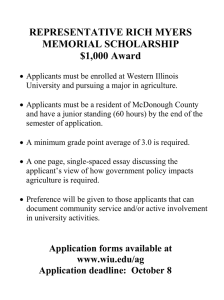
