

Learning to Negotiate Optimally in Non-stationary Environments

Vidya Narayanan

Nick Jennings

University of Southampton

CIA Workshop, Edinburgh, September 11th-13th, 2006.

Overview

• Agent negotiation

• Machine learning techniques

• Model description

• Algorithm description

• Results

• Conclusion

• Future Work

Agent Negotiation

• Large multi-agent systems

• Different goals

• Resolving conflicts

Agent Negotiation

• Operating in Non-Stationary Environments

– In real-world applications, everything is dynamic

• Operational objectives

• Parameters

• Constraints

Agent Negotiation

• Effective Performance

– Learning

– Adaptation

Machine Learning in Literature

• Reinforcement Learning

– Advantages

• Models interactions in multi-agent systems

• Q-learning: model-free learning

– Drawbacks

• Difficult to model a specific process such as negotiation

• Computationally intensive

Machine Learning in Literature

• Bayesian Learning.

• Belief Update Process

– Data or information from environment

– Prior Distribution

– Update prior using data

• Negotiation as a Bayesian learning process.

Description of the Environment

• Memoryless Property

• Justification for assumption

• How does it help?

Non-Stationary Environment

• Determine the current strategy profile

– Given initial strategy profile

– Markov Property

– Chapman-Kolmogorov equation

• Computing the current probability distribution in non-stationary environments

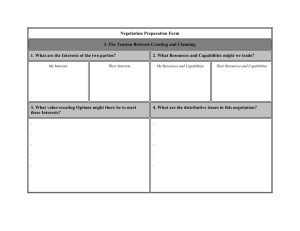
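
As a rough illustration of computing the current distribution in a non-stationary environment, the sketch below propagates an initial distribution through one transition matrix per time step. The function names are hypothetical, and reusing two of the example strategy-profile matrices from the "Inputs to the Algorithm" slide as successive per-step matrices is an assumption made only for this example.

```python
def vec_mat(p, P):
    """Multiply row vector p by matrix P."""
    return [sum(p[j] * P[j][k] for j in range(len(p))) for k in range(len(P[0]))]


def current_distribution(p0, matrices):
    """Propagate p0 through a sequence of (possibly different) transition matrices."""
    p = list(p0)
    for P in matrices:
        p = vec_mat(p, P)
    return p


# Illustrative values only (assumption): one matrix per time step.
P_step0 = [[0.5, 0.25, 0.25], [0.75, 0.0, 0.25], [0.2, 0.1, 0.7]]
P_step1 = [[0.5, 0.2, 0.3], [0.1, 0.0, 0.9], [0.6, 0.2, 0.2]]

p0 = [1.0, 0.0, 0.0]  # start in the first state with certainty (assumption)
p2 = current_distribution(p0, [P_step0, P_step1])
print(p2)
```

Because each matrix is row-stochastic, the propagated vector remains a probability distribution at every step.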

Description of Model

• Information about agent

– Payoff function

• Information known about opponent

– Initial strategy profile

– Offer at each stage of negotiation

• Need to compute

– Strategy yielding maximum payoff

• What varies?

– Opponent’s strategy profile

– Agent’s own payoff function

• Objective

– Determine opponent’s strategy profile and maximise own payoff

• Solution outline

– Data --- Offer price

– Strategy Profile --- Transition probability matrices

– Prior --- Arbitrary distribution over strategy profiles

– Bayesian learning --- True distribution

– Compute own strategy profile to maximise payoff

Algorithm

Inputs to the Algorithm

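• Offer price of opponent

– Offer price range: 100 -- 500

– Initial offer: 110

• Initial strategy profile

$$H_0^1(t) = \begin{pmatrix} 0.5 & 0.25 & 0.25 \\ 0.75 & 0 & 0.25 \\ 0.2 & 0.1 & 0.7 \end{pmatrix}$$

$$H_0^2(t) = \begin{pmatrix} 0.5 & 0.2 & 0.3 \\ 0.1 & 0 & 0.9 \\ 0.6 & 0.2 & 0.2 \end{pmatrix}$$

$$H_0^3(t) = \begin{pmatrix} 0.1 & 0.8 & 0.1 \\ 0.7 & 0.15 & 0.15 \\ 0.1 & 0.7 & 0.2 \end{pmatrix}$$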

• Distribution over strategy profile: (0.4, 0.2, 0.4)

$$\Pr\{H_0^1(0)\} = 0.4, \quad \Pr\{H_0^2(0)\} = 0.2, \quad \Pr\{H_0^3(0)\} = 0.4$$

• Assumptions

– Relationship --- Strategy and offer price of opponent

$$\Pr\{O_0^p(t) \mid H_0^1(t)\} = 0.4, \quad \Pr\{O_0^p(t) \mid H_0^2(t)\} = 0.1, \quad \Pr\{O_0^p(t) \mid H_0^3(t)\} = 0.5$$

– Set of arbitrary strategy profiles

– Initial distribution over strategies, e.g., equally likely

– Markov property

• Compute updated probabilities over strategy profiles.
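
The update of the probabilities over strategy profiles can be sketched with Bayes' rule, using the prior (0.4, 0.2, 0.4) and the offer likelihoods (0.4, 0.1, 0.5) from this slide. The function name `bayes_update` is hypothetical; this is a minimal sketch of one update, not the authors' implementation.

```python
def bayes_update(prior, likelihood):
    """Return the posterior over hypotheses given a prior and per-hypothesis likelihoods."""
    joint = [p * l for p, l in zip(prior, likelihood)]
    evidence = sum(joint)
    if evidence == 0:
        raise ValueError("observation has zero probability under every hypothesis")
    return [j / evidence for j in joint]


prior = [0.4, 0.2, 0.4]        # Pr{H^i(0)} from the slide
likelihood = [0.4, 0.1, 0.5]   # Pr{O^p(t) | H^i(t)} from the slide
posterior = bayes_update(prior, likelihood)
print([round(x, 4) for x in posterior])
```

With these numbers the unnormalised products are 0.16, 0.02 and 0.20, so most of the posterior mass moves to the third hypothesis and the second is strongly discounted.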

Steps of the Algorithm

• Compute new hypothesis

$$H_0^{new}(t) = \sum_{i=0}^{k} \Pr\{H_0^i(t) \mid O_0^p(t)\} \, H_0^i(t)$$

• Compute current opponent strategy profile using result.

$$P_0^0(t) = H_0^{new}(t)$$

• Compute own strategy profile from payoff function for maximum payoff by linear programming.

$$u_0^x = \begin{pmatrix} 1 & 0 & 2 \\ 2.5 & 1 & 2 \\ 1.5 & 1.5 & 1 \end{pmatrix}$$
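
The first step, forming the new hypothesis as a posterior-weighted combination of the candidate strategy profiles, might look like the following sketch. It uses the three example matrices, the prior and the likelihoods from the "Inputs to the Algorithm" slide. The helper names are hypothetical, and treating the posterior as the mixing weights follows the formula on this slide.

```python
def normalise(values):
    """Scale non-negative values so they sum to one."""
    total = sum(values)
    if total <= 0:
        raise ValueError("cannot normalise a vector with non-positive sum")
    return [v / total for v in values]


def mix_hypotheses(weights, hypotheses):
    """Entry-wise weighted sum of equally sized square matrices."""
    size = len(hypotheses[0])
    return [
        [sum(w * H[r][c] for w, H in zip(weights, hypotheses)) for c in range(size)]
        for r in range(size)
    ]


H1 = [[0.5, 0.25, 0.25], [0.75, 0.0, 0.25], [0.2, 0.1, 0.7]]
H2 = [[0.5, 0.2, 0.3], [0.1, 0.0, 0.9], [0.6, 0.2, 0.2]]
H3 = [[0.1, 0.8, 0.1], [0.7, 0.15, 0.15], [0.1, 0.7, 0.2]]

prior = [0.4, 0.2, 0.4]
likelihood = [0.4, 0.1, 0.5]
posterior = normalise([p * l for p, l in zip(prior, likelihood)])

H_new = mix_hypotheses(posterior, [H1, H2, H3])
for row in H_new:
    print([round(x, 4) for x in row])
```

Since the weights sum to one and every candidate matrix is row-stochastic, the mixed matrix is again a valid transition matrix.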

Learning in the algorithm

• Repeat steps for each stage of negotiation.

• Learning over repeated negotiations.

• Convergence --- True probability distribution over repeated negotiations.
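
To give a feel for how repeated updates can converge, the toy simulation below carries a belief over three hypotheses across many observed offers. Everything specific here is an assumption made for illustration: the `emission` table (how likely each hypothesis makes each of three offer buckets), the choice of the true hypothesis, the uniform starting belief, and the number of observations. It is not the setup used in the talk.

```python
import random


def bayes_update(prior, likelihood):
    """One Bayesian update of a belief over hypotheses."""
    joint = [p * l for p, l in zip(prior, likelihood)]
    evidence = sum(joint)
    return [j / evidence for j in joint]


# Hypothetical model: emission[i][o] = Pr{offer bucket o | hypothesis i}.
emission = [
    [0.5, 0.25, 0.25],
    [0.2, 0.1, 0.7],
    [0.1, 0.8, 0.1],
]
true_index = 1              # the opponent actually follows hypothesis 1 (assumption)
rng = random.Random(0)      # fixed seed so the run is repeatable

belief = [1 / 3, 1 / 3, 1 / 3]  # equally likely initial distribution
for _ in range(200):
    offer = rng.choices([0, 1, 2], weights=emission[true_index])[0]
    belief = bayes_update(belief, [emission[i][offer] for i in range(3)])

print([round(b, 4) for b in belief])
```

Renormalising after every observation keeps the belief numerically stable, and the mass concentrates on the hypothesis that generated the offers.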

Learning in the Algorithm

[Diagram: learning across repeated negotiations (1st negotiation, 2nd negotiation, ..., convergence). At each step (1st step, 2nd step, ...), a random initial distribution is replaced by an updated distribution in each subsequent negotiation until it converges to the correct distribution.]

Results

Conclusions

• New framework – Non-stationary negotiation.

• Theorem --- Computing current distribution in non-stationary environments.

• Algorithm for negotiation.

• Theorem --- Convergence of algorithm to optimal.

Conclusions

• Evaluated by varying

– Number of Hypotheses

– Strategy Profiles

– Payoff Functions

• Shown empirically that convergence is rapid.

Future Work

• Analytical relationship between offer price and strategy profile.

• Comparison of RL and BL.

Questions??

Markov property:

$$\Pr\{X_{n+1} = x \mid X_n = x_n, X_{n-1} = x_{n-1}, \ldots\} = \Pr\{X_{n+1} = x \mid X_n = x_n\}$$

Chapman-Kolmogorov equation:

$$P_{ij}^{n} = \sum_{k=0}^{m} P_{ik}^{r} \, P_{kj}^{s}, \qquad n = r + s$$

Bayesian update of the hypothesis probabilities:

$$\Pr\{H_n^i(t) \mid O_n^p(t)\} = \frac{\Pr\{H_n^i(t)\} \, \Pr\{O_n^p(t) \mid H_n^i(t)\}}{\sum_{j=0}^{k} \Pr\{H_n^j(t)\} \, \Pr\{O_n^p(t) \mid H_n^j(t)\}}$$

Current distribution in a non-stationary environment:

$$p_k^n(t) = \sum_{j=0}^{m} p_j^0(0) \, Q_{jk}^n(t)$$

$$Q_{jk}^n(t) = [P^0(0)] \, [P^1(1)] \cdots [P^{n-1}(t-1)]$$

Compute:

$$s_{max}^{n}(t) = \max \; [s_1(t), s_2(t), \ldots, s_m(t)]^{n} \; u_n^x(t) \; [s_1^0(t), s_2^0(t), \ldots, s_m^0(t)]^{T}$$

s.t.

$$\sum_{i=0}^{m} s_i = 1, \qquad s_i \ge 0 \;\; \forall i$$
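
The optimisation above is linear in the agent's own strategy once the opponent's distribution is fixed, so over the probability simplex its maximum is attained at a pure strategy. The sketch below uses that fact in place of a general linear programming solver. The payoff matrix is the example from the "Steps of the Algorithm" slide. The opponent distribution, the function name `best_response`, and reading the payoff rows as the agent's own actions are assumptions made for illustration.

```python
def best_response(payoff, opponent_dist):
    """Maximise s * payoff * q^T over the simplex by checking each vertex."""
    expected = [sum(u * q for u, q in zip(row, opponent_dist)) for row in payoff]
    best = max(range(len(expected)), key=expected.__getitem__)
    strategy = [1.0 if i == best else 0.0 for i in range(len(expected))]
    return strategy, expected[best]


# Example payoff matrix from the slides; rows = own actions (assumption).
payoff = [
    [1.0, 0.0, 2.0],
    [2.5, 1.0, 2.0],
    [1.5, 1.5, 1.0],
]
opponent_dist = [0.425, 0.15, 0.425]  # hypothetical estimate of the opponent's strategy

strategy, value = best_response(payoff, opponent_dist)
print(strategy, round(value, 4))
```

If further linear constraints were placed on the strategy, a general LP solver would be needed instead of the vertex check.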