Middleware Working Group Report Initial Evaluation & Future Directions Andrew N. Jackson


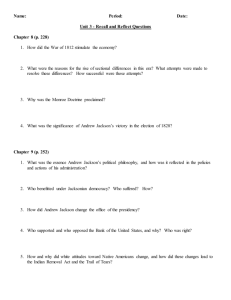

Andrew N. Jackson, Chip Watson
ILDG2, 2nd May 2003

Middleware Recap

- ILDG: A Grid-Of-Grids
  - An aggregation of a number of datagrids.
  - Using a standardised web service interface.
  - Initially linking U.S. and U.K. datagrids.
  - To demonstrate basic content listing (‘ls’) by Lat03.
- Structure
  - U.K.’s QCDgrid as a single entity.
    - With many read-only (RO) nodes and one read-write (RW) node.
  - Every U.S. storage facility appears as a separate entity.
  - Gateway running on lqcd.org provides the overall interface.

[Diagram labels: Client; ildgFileService (a web service); U.S. SRM (three instances); QCDgrid & others...]

Progress

- Only gentle progress so far...
  - Added basic WG overview on http://www.lqcd.org/ildg/.
  - Andrew Jackson & Chip Watson (co-conveners of the MWG) have been discussing overall status and direction.
  - Andrew Jackson has (with James Perry’s help) written some experimental code to test the Grid-Of-Grids concept.
- The ILDG-Middleware mailing list has been set up (this week!).
  - ILDG Middleware WG <ildg-middleware@epcc.ed.ac.uk>
  - Please contact me, Andrew Jackson <A.N.Jackson@ed.ac.uk>, to be added to this mailing list.

Required Interfaces

The ILDG Services will be built on...

- Replica Catalogue
  - Currently there are different, incompatible implementations.
- Metadata Catalogue
  - QCDgrid currently has its own metadata catalogue based on the eXist XML database, queried via XPath. http://exist.sourceforge.net/
  - Note that EDG-WP2 is creating a combined ‘ReplicaMetadataCatalog’.
- Storage Resource Manager
  - EDG-PPDG are developing a standard.
  - Implementation code will follow shortly afterwards.
  - Such standards can hide the differences between Replica Catalogues.
  - And perhaps Metadata Catalogues too.
- File-transfer daemons
  - A solved problem, though there are many to choose from/support: http, ftp, gridftp, jparss, bbftp....

SRM 2.x

- The Storage Resource Manager Interface
  - An emerging standard: v2.1 is currently being co-written by EDG and PPDG.
- Broad functionality:
  - Maps logical filenames (LFN) to:
    - One or more physical locations (Storage File Name, SFN, of the form sfn://srm.hostname/physical/path/file.name).
    - (And perhaps) a Global Unique Identifier (GUID/GlobalFileID), used to refer to a specific file.
  - Provides Space Management Functions.
    - e.g. request free space in the future.
  - Provides Directory and File Functions.
    - e.g. srmLs lists directory contents. (Very useful for ILDG).
    - srmMv/srmCp for moves and copies, etc...
  - Provides Data Transfer Functions. (Very useful for ILDG).
- See http://sdm.lbl.gov/srm for more...

EDG ReplicaMetadataCatalog

- Aims to unify the metadata and replica management.
  - Associating extra data with each entry in the replica catalogue.
- Still very early in its development.
  - Can store ‘attributes’, and associate them with a given LFN.
  - Examples include file size, date of creation, etc.
  - It is not clear how our XML Schema would fit into this model.
- References
  - The ‘RMC’, under EDG http://www.eu-datagrid.org/ WP2: http://edg-wp2.web.cern.ch/edg-wp2/.
  - See http://proj-grid-data-build.web.cern.ch/proj-grid-data-build/edg-metadatacatalog/ for more details.

ILDG Services

To build on top of these tools:

- Create an SRM 2.x interface to the QCDgrid.
  - Implement (a subset of) the SRM 2.x spec. on top of the Globus Java CoG to allow QCDgrid to ‘speak the same language’ as the U.S. SRMs.
- Define an aggregation scheme for pooling SRM contents:
  - ildgURLs: the ILDG file namespace.
- Build the ILDG Web Services
  - Providing an interface based on SRM 2.x.
    - Extra information will be needed.
  - Providing web services to browse the ILDG file namespace:
    - Directly.
    - Or via the metadata.
- Build an ILDG Web Portal
  - Public access via www.lqcd.org.
  - Hooking into the ILDG Web Services.

ildgURLs

- Whatever interface we use, we need a way to identify files on the ILDG Grid-Of-Grids.
- Suggest using an ‘ildgURL’: ildg://srm.host/dir/name.ext
  - ildg:// is a unique prefix.
  - srm.host is the hostname of the SRM machine.
    - For QCDgrid, the entire system appears as a single SRM.
      - qcdgrid.ac.uk and backup mirror on qcdgrid-mirror.ac.uk?
    - For U.S. machines, each store appears as a separate SRM.
  - /dir/name.ext is a unique filename of a file for that SRM.
    - Unique per SRM, or over the entire ILDG?
- Note that this scheme assumes one SRM catalogue per machine.
  - Under Globus, we could have many catalogues, each with a set of collections of files.
  - But this functionality is probably not needed.

The ILDG Services

- The ildgFileService
  - Based on a (read-only?) subset of SRM 2.x.
    - Uses ildgURLs instead of LFNs.
    - Contains extra information about the SRM hosts, e.g. the WS URL.
    - Adds extra aggregation information.
    - Later, as SRM matures, adopt more of the protocol (and indeed software!).
  - The aggregate service browses the ildgURL-space.
  - But an ildg:// URL can be mapped onto a physical host and the allowed access protocol(s), which will then allow file downloads:
    - gsiftp://trumpton.ph.ed.ac.uk/grid/dir/filename
    - http://www.qcdgrid.ac.uk/ildgFileServlet?file=grid/dir/filename
- The ildgMetadataService
  - Implement a metadata-based interface.
  - Devise a web-form and/or browse-tree interface?
  - Extend QCDgrid’s Schema-based browser?

Testing A Grid-Of-Grids

- A Simple Grid-Of-Globuses
  - A web interface to a set of Globus 2.x replica catalogues.
    - (Could not get access to U.S. SRMs in the available time).
  - Structure of the code is essentially as ILDG requires.
- Code Overview:
  - org.lqcd.srm.* package defines an abstract version of a cut-down SRM.
  - org.lqcd.srm.globus.* is an implementation of that SRM using the Java CoG.
  - org.lqcd.ildg.ildgFileService defines the aggregated service.
  - Currently accessed via a Java Servlet Page instead of as a Web Service.
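The layering described in the code overview (an abstract cut-down SRM, a concrete implementation, and an aggregated service addressed by ildgURLs) might be sketched roughly as follows. This is a minimal illustration only and not the actual org.lqcd code: the names `CutDownSrm`, `InMemorySrm` and `IldgFileServiceSketch` are hypothetical, the in-memory catalogue stands in for a Globus- or SRM-backed implementation, and only an `ls`-style listing is modelled.

```java
import java.net.URI;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical cut-down SRM: only a directory listing, in the spirit of srmLs. */
interface CutDownSrm {
    /** Returns the entry names directly under the given directory path. */
    List<String> ls(String path);
}

/** In-memory stand-in for a real catalogue (illustration only). */
final class InMemorySrm implements CutDownSrm {
    private final Map<String, List<String>> dirs = new LinkedHashMap<>();

    InMemorySrm add(String dir, String... names) {
        dirs.computeIfAbsent(dir, k -> new ArrayList<>()).addAll(Arrays.asList(names));
        return this;
    }

    @Override
    public List<String> ls(String path) {
        return dirs.getOrDefault(path, Collections.<String>emptyList());
    }
}

/** Hypothetical aggregate: one SRM per hostname, addressed as ildg://srm.host/dir/name.ext. */
final class IldgFileServiceSketch {
    private final Map<String, CutDownSrm> srms = new LinkedHashMap<>();

    void register(String host, CutDownSrm srm) {
        srms.put(host, srm);
    }

    /** The root of the aggregated namespace: one ildgURL per registered SRM host. */
    List<String> roots() {
        List<String> out = new ArrayList<>();
        for (String host : srms.keySet()) {
            out.add("ildg://" + host + "/");
        }
        return out;
    }

    /** Lists the entries under an ildgURL, returning them as ildgURLs. */
    List<String> ls(String ildgUrl) {
        URI uri = URI.create(ildgUrl);
        if (!"ildg".equals(uri.getScheme()) || uri.getHost() == null) {
            throw new IllegalArgumentException("Not an ildgURL: " + ildgUrl);
        }
        CutDownSrm srm = srms.get(uri.getHost());
        if (srm == null) {
            throw new IllegalArgumentException("Unknown SRM host: " + uri.getHost());
        }
        // Normalise the path: an empty path means the root, and trailing slashes are dropped.
        String path = uri.getPath().isEmpty() ? "/" : uri.getPath();
        if (path.length() > 1 && path.endsWith("/")) {
            path = path.substring(0, path.length() - 1);
        }
        String base = path.equals("/") ? "/" : path + "/";
        List<String> out = new ArrayList<>();
        for (String name : srm.ls(path)) {
            out.add("ildg://" + uri.getHost() + base + name);
        }
        return out;
    }
}
```

Registering one `CutDownSrm` under a hostname such as qcdgrid.ac.uk (the hostname floated on the ildgURLs slide) and another under a placeholder U.S. host then lets a client call `roots()` and walk down with `ls(...)`. In this sketch the catalogues are joined only at the root of the namespace, which matches the limitation noted in the suggestions.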
- Generally successful, with three main suggestions arising:
  - We need a public access mechanism to get into the U.S. SRMs.
  - Directory structure and file information should be cached.
    - The demo caches in the browser session.
    - The first visit is very slow: a full lookup takes about 10 minutes!
  - We need a generic metadata-based interface.
    - Without it, the different catalogues can only be unified at the root of the aggregated directory structure (by the definition of the ildgURL).

Metadata

- A proper SRM supplies lots of information about files.
- Globus Replica Catalogues only supply filenames.
  - No file size, ownership, creation date, etc., so this must be looked up on the remote physical host and/or in the metadata catalogue.
- Therefore, QCDgrid MUST hook its metadata catalogue into the SRM Web Service API.
  - Initially provide just basic file information.
  - But we may as well also plan how the generic metadata-browsing might fit in, to avoid making life difficult later.
- But browsing by metadata requires standardising access to our metadata catalogues.
  - QCDgrid essentially maps XPath queries to logical filenames.
  - But what should we be doing?

Plan

- Goal by Lattice 2003: a simple system based on SRM 2.1.
  - An srmLs interface to browse each other’s files via the ildgURL namespace.
  - Support at least enough of the SRM interface to allow simple file transfer.
- To do this...
  - First, set up actual Web Services.
    - Move the test code from the service into web services. (Currently, the test code queries the Globus RC directly).
  - Polish the code:
    - e.g. it needs a better caching mechanism. Currently once-per-session. Should cache and refresh in the background.
  - Then, develop portals to be hosted on www.lqcd.org/ildg
    - Browsing and transfer, looking rather like a normal http/ftp directory structure?

For Discussion...

- The Plan:
  - Implement an SRM 2.x subset for QCDgrid: srmLs.
  - Implement the ildgFileService and a simple web portal.
- The File Space Browser:
  - Agree a ‘Namespace’ of the Grid-Of-Grids: ildg://srm.hostname/global/file/id ?
    - Should we also support multiple collections at a single SRM?
    - Should we ensure that the GlobalFileIDs are unique across the ILDG?
  - Access Policies...
    - Public general access?
    - Administration Privileges & Actions?
  - Use ‘Catalogue-Caching’ in the ildgFileService?
    - Cache metadata too?
  - Portal/User Interface ideas?
- Metadata API (mapping queries to ildgURLs):
  - XPath using the Schema?
  - Or ‘Attributes’ associated with the GlobalFileIDs?

Appendixes...

Global File IDs

- Use an ID to precisely identify a file.
  - As well as a logical filename?!
- Quoted in the metadata catalogues, allowing expression of relationships between files.
- But still permit changing global file names.

Policies

- Public (Read-Only) Access.
  - We must define and implement our general access policy.
    - Currently, QCDgrid allows public read-only access, but only to the replica catalogue.
    - Then we can work out how to access U.S. SRMs.
  - Completely Public?
    - No registration required to read the public data?
  - Public, with simple registration?
    - Like NERSC’s Gauge Connection, http://qcd.nersc.gov/.
    - Certificates used to grant privileges?
  - Certified Individuals Only?
    - No anonymous access at all?
- Additional Authorisation.
  - Grid Certificates used to authorise richer access/functionality.
    - e.g. a certificate held in your browser can be used to authorise you to move data between grids.
- ‘Local’ Administration.
  - All potentially destructive acts restricted to the local administrators?
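
A note on the ‘Catalogue-Caching’ question: the behaviour asked for under the Plan (cache, and refresh in the background rather than once per browser session) could be sketched along the following lines. This is only an illustration under stated assumptions. `RefreshingCache` is a hypothetical name, the slow catalogue lookup is abstracted as a `Supplier`, and the refresh period is left to the caller. It is not taken from the existing test code.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

/** Hypothetical shared cache: serves the last snapshot and reloads it in the background. */
final class RefreshingCache<T> {
    private final Supplier<T> slowLookup;   // e.g. a full catalogue walk
    private volatile T snapshot;            // last successfully loaded value
    private ScheduledExecutorService scheduler;

    RefreshingCache(Supplier<T> slowLookup) {
        this.slowLookup = slowLookup;
    }

    /** Returns the cached value; only the very first caller pays for the slow lookup. */
    T get() {
        T current = snapshot;
        if (current == null) {
            synchronized (this) {
                if (snapshot == null) {
                    snapshot = slowLookup.get();
                }
                current = snapshot;
            }
        }
        return current;
    }

    /** Re-runs the lookup and swaps in the result; readers see the old snapshot until then. */
    void refreshNow() {
        snapshot = slowLookup.get();
    }

    /** Starts a daemon thread that refreshes the snapshot at a fixed delay. */
    synchronized void startBackgroundRefresh(long period, TimeUnit unit) {
        if (scheduler != null) {
            return; // already running
        }
        scheduler = Executors.newSingleThreadScheduledExecutor(r -> {
            Thread t = new Thread(r, "cache-refresh");
            t.setDaemon(true);
            return t;
        });
        scheduler.scheduleWithFixedDelay(this::refreshSafely, period, period, unit);
    }

    /** Keeps the previous snapshot if a refresh fails, so one bad lookup does not stop the schedule. */
    private void refreshSafely() {
        try {
            refreshNow();
        } catch (RuntimeException e) {
            // Deliberately ignored in this sketch: the stale snapshot stays in place.
        }
    }

    synchronized void stop() {
        if (scheduler != null) {
            scheduler.shutdownNow();
            scheduler = null;
        }
    }
}
```

Because the snapshot lives in the service rather than in the browser session, only the first request after start-up would wait for the full lookup. Whether metadata should be cached the same way remains an open question for discussion.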