Middleware Working Group Report Initial Evaluation & Future Directions Andrew N. Jackson


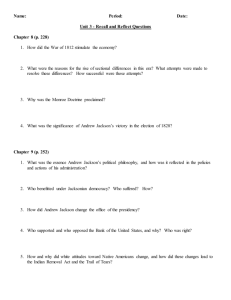

Andrew N. Jackson and Chip Watson, ILDG2, 2nd May 2003

Middleware Recap

- ILDG: A Grid-Of-Grids
  - An aggregation of a number of datagrids.
  - Using a standardised web service interface.
  - Initially linking U.S. and U.K. datagrids.
  - To demonstrate basic content listing (‘ls’) by Lat03.
- Structure
  - U.K.’s QCDgrid as a single entity.
    - With many read-only (RO) nodes and one read-write (RW) node.
  - Every U.S. storage facility appears as a separate entity.
  - Gateway running on lqcd.org provides the overall interface.

[Diagram: Client, ildgFileService (a web service), three U.S. SRMs, QCDgrid & others...]

Progress

- Only gentle progress so far...
  - Added basic WG overview on http://www.lqcd.org/ildg/.
  - Andrew Jackson & Chip Watson (co-conveners of the MWG) have been discussing overall status and direction.
  - Andrew Jackson has (with James Perry’s help) written some experimental code to test the Grid-Of-Grids concept.
  - The ILDG-Middleware mailing list has been set up (this week!).
    - ILDG Middleware WG <ildg-middleware@epcc.ed.ac.uk>
    - Please contact me, Andrew Jackson <A.N.Jackson@ed.ac.uk>, to be added to this mailing list.

Required Interfaces

- The ILDG Services will be built on...
  - Replica Catalogue
    - Currently different incompatible implementations.
  - Metadata Catalogue
    - QCDgrid currently has its own metadata catalogue based on the eXist XML database (http://exist.sourceforge.net/), queried via XPath. Note that EDG-WP2 is creating a combined ‘ReplicaMetadataCatalog’.
  - Storage Resource Manager
    - EDG-PPDG are developing a standard.
    - Implementation code will follow shortly afterwards.
    - Such standards can hide the differences between Replica Catalogues.
    - And perhaps Metadata Catalogues too.
  - File-transfer daemons
    - A solved problem, though there are many to choose from/support:
      - http, ftp, gridftp, jparss, bbftp....
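
The Metadata Catalogue item above mentions XPath queries against an XML database. As a rough illustration only, the sketch below runs an XPath-style query over a tiny in-memory XML document with Python's standard library and returns the matching logical filenames. The element names (`catalogue`, `configuration`, `lfn`, `beta`), the sample filenames, and the `find_lfns` helper are all hypothetical: they are not the QCDgrid schema or the eXist API, and the working group's own test code is written in Java.

```python
import xml.etree.ElementTree as ET

# Hypothetical metadata records: element names and values are made up for
# illustration and do not follow the QCDgrid or ILDG schema.
CATALOGUE_XML = """
<catalogue>
  <configuration>
    <lfn>example/beta520/cfg_0010.dat</lfn>
    <beta>5.20</beta>
  </configuration>
  <configuration>
    <lfn>example/beta520/cfg_0020.dat</lfn>
    <beta>5.20</beta>
  </configuration>
  <configuration>
    <lfn>example/beta526/cfg_0010.dat</lfn>
    <beta>5.26</beta>
  </configuration>
</catalogue>
"""


def find_lfns(xml_text, xpath):
    """Return the logical filenames of all records matched by an XPath query.

    ElementTree implements only a subset of XPath, which is enough here.
    """
    root = ET.fromstring(xml_text)
    return [record.findtext("lfn") for record in root.findall(xpath)]


if __name__ == "__main__":
    # Select every record whose <beta> child has the text "5.20".
    for lfn in find_lfns(CATALOGUE_XML, "./configuration[beta='5.20']"):
        print(lfn)
```

A real catalogue would sit behind a database and a far richer schema; the point is only that a metadata query reduces to a list of logical filenames, which the file services can then resolve.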

SRM 2.x

- The Storage Resource Manager Interface
  - An emerging standard:
    - v2.1 is currently being co-written by EDG and PPDG.
  - Broad functionality:
    - Maps logical filenames (LFN) to:
      - One or more physical locations (Storage File Name, SFN, of the form sfn://srm.hostname/physical/path/file.name).
      - (And perhaps) a Global Unique Identifier (GUID/GlobalFileID), used to refer to a specific file.
    - Provides Space Management Functions.
      - e.g. request free space in the future.
    - Provides Directory and File Functions.
      - e.g. srmLs lists directory contents. (Very useful for ILDG).
      - srmMv/srmCp for moves and copies.
      - etc...
    - Provides Data Transfer Functions. (Very useful for ILDG).
  - See http://sdm.lbl.gov/srm for more...

EDG ReplicaMetadataCatalog

- Aims to unify the metadata and replica management.
  - Associating extra data with each entry in the replica catalogue.
- Still very early in its development.
- Can store ‘attributes’, and associate them with a given LFN.
  - Includes examples like filesize, date of creation, etc.
- It is not clear how our XML Schema would fit into this model.
- References
  - The ‘RMC’, under EDG http://www.eu-datagrid.org/
  - WP2: http://edg-wp2.web.cern.ch/edg-wp2/.
  - See http://proj-grid-data-build.web.cern.ch/proj-grid-data-build/edgmetadata-catalog/ for more details.

ILDG Services

- To build on top of these tools
  - Create SRM2.x interface to the QCDgrid.
    - Implement (a subset of) the SRM 2.x spec. on top of the Globus Java CoG to allow QCDgrid to ‘speak the same language’ as the U.S. SRMs.
  - Define an aggregation scheme for pooling SRM contents:
    - ildgURLs: The ILDG file namespace.
- Build the ILDG Web Services
  - Providing an interface based on SRM2.x.
    - Extra information will be needed.
  - Providing web services to browse the ILDG file namespace:
    - Directly.
    - Or via the metadata.
- Build an ILDG Web Portal
  - Public access via www.lqcd.org.
  - Hooking into the ILDG Web Services.

ildgURLs

- Whatever interface we use, we need a way to identify files on the ILDG Grid-Of-Grids:
  - Suggest using an ‘ildgURL’: ildg://srm.host/dir/name.ext.
    - ildg:// is a unique prefix.
    - srm.host is the hostname of the SRM machine.
      - For QCDgrid, the entire system appears as a single SRM.
        - qcdgrid.ac.uk and backup mirror on qcdgrid-mirror.ac.uk?
      - For U.S. machines, each store appears as a separate SRM.
    - /dir/name.ext is a unique filename of a file for that SRM.
      - Unique per SRM, or over the entire ILDG?
- Note that this scheme assumes one SRM catalogue per machine.
  - Under Globus, we could have many catalogues each with a set of collections of files.
  - But this functionality is probably not needed.

The ILDG Services

- The ildgFileService
  - Based on a (read-only?) subset of SRM2.x.
    - Uses ildgURLs instead of LFNs.
    - Contains extra information about the SRM hosts, e.g. the WS URL.
    - Adds extra aggregation information.
  - Later, as SRM matures, adopt more of the protocol (and indeed software!).
  - The aggregate service browses the ildgURL-space.
  - But an ildg:// URL can be mapped onto a physical host and allowed access protocol(s), which will then allow file downloads.
    - gsiftp://trumpton.ph.ed.ac.uk/grid/dir/filename
    - http://www.qcdgrid.ac.uk/ildgFileServlet?file=grid/dir/filename
- The ildgMetadataService
  - Implement a Metadata-based interface.
  - Devise a web-form and/or browse-tree interface?
  - Extend QCDgrid’s Schema-based browser?

Testing A Grid-Of-Grids

- A Simple Grid-Of-Globuses
  - A web interface to a set of Globus 2.x replica catalogues.
    - (Could not get access to U.S. SRMs in the available time).
  - Structure of the code is essentially as ILDG requires.
- Code Overview:
  - org.lqcd.srm.* package defines an abstract version of a cut-down SRM.
  - org.lqcd.srm.globus.* is an implementation of that SRM using the Java CoG.
  - org.lqcd.ildg.ildgFileService defines the aggregated service. Currently accessed via a Java Servlet Page instead of as a Web Service.
- Generally successful, with three main suggestions arising:
  - We need a public access mechanism to get into the U.S. SRMs.
  - Directory structure and file information should be cached.
    - The demo caches in the browser session. The first visit is very, very slow!
    - It takes about 10 minutes to do a full lookup!
  - We need a generic metadata-based interface.
    - Without it, the different catalogues can only be unified at the root of the aggregated directory structure (by the definition of the ildgURL).

Metadata

- A proper SRM supplies lots of information about files.
- Globus Replica Catalogues only supply filenames.
  - No file size, ownership, creation date etc., and so this must be looked up on the remote physical host and/or the metadata catalogue.
- Therefore, QCDgrid MUST hook its metadata catalogue into the SRM Web Service API.
  - Initially provide just basic file information.
  - But we may as well also plan how the generic metadata browsing might fit in, to avoid making life difficult later.
- But browsing by metadata requires standardising access to our metadata catalogues.
  - QCDgrid essentially maps XPath queries to logical filenames.
  - But what should we be doing?

Plan

- Goal by Lattice 2003:
  - Simple system based on SRM 2.1.
  - srmLs interface to browse each other’s files via the ildgURL namespace.
  - Support at least enough of the SRM interface to allow simple file transfer.
- To do this...
  - First set up actual Web Services.
    - Move the test code from the service to a web service.
    - (Currently, the test code queries the Globus RC directly).
  - Polish the code:
    - e.g. needs a better caching mechanism.
      - Currently once-per-session.
      - Should cache and refresh in the background.
  - Then, develop portals to be hosted on www.lqcd.org/ildg.
    - Browsing and transfer, looking rather like a normal http/ftp directory structure?

For Discussion...

- The Plan:
  - Implement SRM2.x subset for QCDgrid: srmLs.
  - Implement the ildgFileService and a simple web portal.
- The File Space Browser:
  - Agree a ‘Namespace’ of the Grid-Of-Grids:
    - ildg://srm.hostname/global/file/id ?
    - Should we also support multiple collections at a single SRM?
    - Should we ensure that the GlobalFileIDs are unique across the ILDG?
  - Access Policies...
    - Public general access?
    - Administration Privileges & Actions?
  - Use ‘Catalogue-Caching’ in the ildgFileService? Cache metadata too?
  - Portal/User Interface ideas?
- Metadata API (mapping queries to ildgURLs):
  - XPath using the Schema? Or ‘Attributes’ associated with the GlobalFileIDs?

Appendixes...

Global File IDs

- Use an ID to precisely identify a file.
  - As well as a logical filename?!
  - Quoted in the metadata catalogues, allowing expression of relationships between files.
  - But still permit changing global file names.

Policies

- Public (Read-Only) Access.
  - We must define and implement our general access policy.
    - Currently, QCDgrid allows public read-only access, but only to the replica catalogue.
    - Then we can work out how to access U.S. SRMs.
  - Completely Public?
    - No registration required to read the public data?
  - Public, with simple registration?
    - Like NERSC’s Gauge Connection, http://qcd.nersc.gov/.
    - Certificates used to grant privileges?
  - Certified Individuals Only?
    - No anonymous access at all?
- Additional Authorisation.
  - Grid Certificates used to authorise richer access/functionality.
    - e.g. a certificate held in your browser can be used to authorise you to move data between grids.
- ‘Local’ Administration.
  - All potentially destructive acts restricted to the local administrators?
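
As a closing illustration of the ildgURL proposal from the main slides, the sketch below splits an `ildg://srm.host/dir/name.ext` URL into its SRM host and per-SRM path, then maps it onto candidate download URLs. It is a minimal sketch under stated assumptions, not the working group's Java code: the function names and the `ACCESS_TEMPLATES` table are hypothetical, and the association of `qcdgrid.ac.uk` with the two access URLs is assumed purely so that the output matches the examples quoted on the ILDG Services slide.

```python
from urllib.parse import urlsplit

# Hypothetical lookup table: SRM host -> templates for the allowed access
# protocols. The host-to-template pairing is an assumption for illustration.
ACCESS_TEMPLATES = {
    "qcdgrid.ac.uk": [
        "gsiftp://trumpton.ph.ed.ac.uk/{path}",
        "http://www.qcdgrid.ac.uk/ildgFileServlet?file={path}",
    ],
}


def parse_ildg_url(url):
    """Split an ildgURL into (srm_host, path_within_that_srm)."""
    parts = urlsplit(url)
    if parts.scheme != "ildg":
        raise ValueError("not an ildgURL: " + url)
    path = parts.path.lstrip("/")
    if not parts.netloc or not path:
        raise ValueError("ildgURL needs both an SRM host and a file path: " + url)
    return parts.netloc, path


def access_urls(url, templates=ACCESS_TEMPLATES):
    """Map an ildgURL onto download URLs; unknown hosts give an empty list."""
    host, path = parse_ildg_url(url)
    return [template.format(path=path) for template in templates.get(host, [])]


if __name__ == "__main__":
    for candidate in access_urls("ildg://qcdgrid.ac.uk/grid/dir/filename"):
        print(candidate)
```

Keeping the host-to-protocol table separate from the parser reflects the slide's point that the ildgFileService carries extra information about each SRM host; whether filenames are unique per SRM or across the whole ILDG is left open, as it is in the slides.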