Discovery Net e-Science made easy


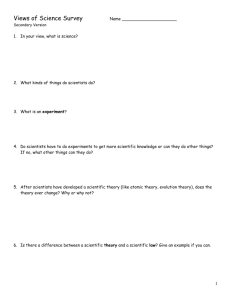

Discovery Net is funded by the EPSRC under the UK e-Science Core Programme.

“e-Science is about global collaboration in key areas of science, and the next generation of infrastructure that will enable it”
John Taylor, Director General of the Research Councils, OST

Knowledge Discovery & e-Science

Discovery Net is building the software infrastructure and tools for providing Knowledge Discovery Services that allow scientists to conduct and manage complex data analysis and knowledge discovery activities on the new generation Internet. In the same way the web has revolutionised publishing and searching for information, Knowledge Discovery Services will revolutionise how the analysis of distributed scientific data is conducted over the Internet.

High Throughput Discovery Informatics

Discovery Net addresses the complexities faced by scientists from all information-intensive fields where:

• Modern high throughput devices are routinely generating and capturing large amounts of data.
• Data sets are not analysed in isolation, but dynamically integrated during the analysis.
• New data analysis methods and software components are continually being developed.
• Knowledge discovery procedures are complex multistep procedures conducted by interdisciplinary teams of scientists.

To address the challenges of high throughput discovery informatics, the Discovery Net project is building the architecture and the associated application-level middleware and tools for conducting and managing complex, distributed knowledge discovery activities.

A Grid of Knowledge Discovery Services

Discovery Net provides a service-oriented computing model for knowledge discovery, allowing users to connect to and use data analysis software as well as data sources that are made available online by third parties.
In particular, Discovery Net defines the standards, architecture and tools that:

• Allow scientists to plan, manage, share and execute complex knowledge discovery and data analysis procedures available as remote services.
• Allow service providers to publish and make available data mining and data analysis software components as services to be used in knowledge discovery procedures.
• Allow data owners to provide interfaces and access to scientific databases, data stores, sensors and experimental results as services so that they can be integrated in knowledge discovery processes.

Discovery Net Architecture and Knowledge Discovery Services

The Discovery Net architecture provides open standards for specifying:

• Knowledge Discovery Adapters: used to declare the properties of analytical software components and scientific data stores including their input/output types, performance and accuracy characteristics.
• Knowledge Discovery Services look-up and registration: allowing scientists to retrieve and compose Knowledge Discovery Services in their discovery procedures.
• Integrated Scientific Database Access: allowing the integration of structured and semi-structured data from different data sources within a discovery procedure using XML schemas.
• Knowledge Discovery Process Management: including DPML (Discovery Process Markup Language) as a standard specification language for constructing and managing knowledge discovery procedures, as well as recording their history.
• Knowledge and Discovery Process Storage: allowing discovery procedures to be stored, shared and re-executed.
• Knowledge Discovery Process Deployment: allowing users to deploy and publish their existing knowledge discovery procedures as new services.

Figure 1: Application of Discovery Net in Life Sciences

From Information Search to Knowledge Discovery Services

Consider how easy it is to use Google to conduct a text search over information already published on the Internet.
Discovery Net aims to extend Internet usage from this simple model of publishing and retrieving information to a more powerful model of publishing, retrieving and using Knowledge Discovery Services for scientific data analysis.

Data Analysis Services

A life scientist, for example, may have access to an automated laboratory experiment where a range of sensors produces a large amount of data about the activities of genes in cancerous cells and their response to the introduction of a possible drug. The scientist analysing the data searches through the latest set of data mining software components available on the Internet. These software components, as well as their remote execution, are provided by service providers.

Knowledge Discovery Process Composition

From the available services, scientists choose a software component for data normalisation, apply it to the data and execute the computation. The results are then fed into another software component that performs a clustering analysis to segment the data into sub-groups of genes exhibiting similar behaviour. The software components used may have come from different providers but, for scientists, their integration and execution is a matter of a few mouse clicks.

Data Access and Integration

Using the analysis results, scientists then proceed to verify that the biological significance of the sub-groups can be explained by referring to existing available information about related genes, proteins, metabolic activities and regulation. Such information is available from various online databases. Each of the databases and its querying interface is accessible as a remote service. Scientists search for and find the appropriate service, connect to it, pull out the relevant data, and finally integrate the data into their Knowledge Discovery Process. Following this, they can proceed to perform further analysis on the integrated data.
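The composition walked through above (look up services, normalise, cluster, then integrate database annotations) can be illustrated with a small Python sketch. It is only an illustration under stated assumptions: the names `Service`, `Registry`, `run_process`, the type labels, the gene names and the annotation table are all hypothetical and are not part of any Discovery Net API or of DPML. A deliberately trivial "rising or falling" grouping rule stands in for a real clustering algorithm, and an in-memory dictionary stands in for an online database.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Service:
    """Hypothetical stand-in for a published service with declared I/O types."""
    name: str
    input_type: str
    output_type: str
    run: Callable


class Registry:
    """Hypothetical look-up and registration of services by name."""

    def __init__(self) -> None:
        self._services: Dict[str, Service] = {}

    def register(self, service: Service) -> None:
        self._services[service.name] = service

    def lookup(self, name: str) -> Service:
        return self._services[name]


def normalise(profiles):
    """Scale each gene's expression profile to the range [0, 1]."""
    out = {}
    for gene, values in profiles.items():
        lo, hi = min(values), max(values)
        span = (hi - lo) or 1.0  # avoid division by zero for flat profiles
        out[gene] = [(v - lo) / span for v in values]
    return out


def cluster(profiles):
    """Toy grouping rule (not a real clustering algorithm): rising vs falling."""
    groups = {"rising": [], "falling": []}
    for gene, values in sorted(profiles.items()):
        key = "rising" if values[-1] >= values[0] else "falling"
        groups[key].append(gene)
    return groups


# Made-up annotations standing in for an online database service.
ANNOTATIONS = {"geneA": "cell cycle", "geneB": "apoptosis", "geneC": "cell cycle"}


def annotate(groups):
    """Attach an annotation to every gene in every group."""
    return {
        label: [(gene, ANNOTATIONS.get(gene, "unknown")) for gene in genes]
        for label, genes in groups.items()
    }


def run_process(registry: Registry, step_names: List[str], data, data_type: str):
    """Run services in order, checking that declared types line up."""
    for name in step_names:
        service = registry.lookup(name)
        if service.input_type != data_type:
            raise TypeError(f"{name} expects {service.input_type}, got {data_type}")
        data = service.run(data)
        data_type = service.output_type
    return data


registry = Registry()
registry.register(Service("normalise", "expression_profiles", "normalised_profiles", normalise))
registry.register(Service("cluster", "normalised_profiles", "gene_groups", cluster))
registry.register(Service("annotate", "gene_groups", "annotated_groups", annotate))

# Made-up expression measurements for three genes at three time points.
profiles = {"geneA": [1.0, 2.0, 4.0], "geneB": [9.0, 5.0, 3.0], "geneC": [0.5, 0.7, 1.5]}

result = run_process(registry, ["normalise", "cluster", "annotate"], profiles, "expression_profiles")
print(result)
```

Declaring input and output types on each service lets the composition step reject a mis-ordered process (for example, clustering before normalisation) before any computation runs, which loosely mirrors the role the text assigns to adapters that declare input/output types.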
Knowledge Management and Deployment

Once the process is complete, scientists publish their results in a database, thus making them available to the community. More importantly, they also publish the details of the discovery processes (discovery workflows) in a Discovery Process Store. This makes them available to other scientists, who can verify the results and methods used, as well as re-execute the published processes as services in their own Knowledge Discovery Processes.

Discovery Net Testbeds

Discovery Net is a multi-disciplinary project. In addition to developing the software infrastructure for Knowledge Discovery Services, the project is also developing a series of testbeds and demonstrators for using the technology in the areas of life sciences, environmental modelling and geo-hazard prediction.

Life Science Research Testbed

The first testbed implemented in the Discovery Net project is an integrated platform for life science researchers, integrating the analysis of data from novel high throughput sequencing technologies and novel protein chips with a wide range of existing data sources including:

• DNA microarray experiments.
• NMR data for metabolite analysis.
• Online genomic, proteomic and metabolic databases.

To realise this testbed, Discovery Net provides implementations of:

• Specialised Knowledge Discovery Clients and Web-based Clients for interactive visual analysis and discovery process creation.
• Knowledge Discovery Servers and their associated adapters providing a variety of data analysis and visualisation tools, including tools for data classification, clustering and time series analysis, as well as adapters to the semi-structured life-science data sources available online.
• Life-Science Discovery Process Data Store for the publication of domain-specific complex compositions of Knowledge Discovery Processes, and allowing the retrieval and deployment of such processes.
• Knowledge Discovery Deployment Engine for the execution of discovery processes and Knowledge Reporting Tools for dissemination of the results of the related discoveries.

The Knowledge Discovery Services provided by Discovery Net are a set of application-level services. Their implementation will be coupled with emerging grid standards and technologies such as the OGSA (Open Grid Services Architecture) standard that provides services for managing lower-level grid computational infrastructure and resources.

“The essential message of the OGSA is an abstraction of the grid architecture in terms of a set of basic grid services. The hope is that these services may be easily composed with higher level services to deliver complex applications and workflows”.
Tony Hey, Director of the UK e-Science Core Programme

The Discovery Net project is conducted at Imperial College of Science, Technology and Medicine.

Principal Investigator: Dr. Yike Guo (Dept. of Computing).

Discovery Net Team: Prof. Tony Cass (Dept. of Biological Sciences), Prof. John Darlington (Dept. of Computing), Dr. John Hassard (Dept. of Physics), Dr. Jian Guo Liu (Dept. of Earth Sciences), Dr. Daniel Ruckert (Dept. of Computing), Prof. Robert Spence (Dept. of Electrical Engineering).

Discovery Net Collaborations: Discovery Net is currently collaborating with the National Centre for Data Mining (NCDM) at the University of Illinois at Chicago for the creation of the Global Discovery Net project.

Contact Information:
Dr. Moustafa M. Ghanem, Discovery Net Project Manager
Department of Computing, Imperial College, London SW7 2BZ, United Kingdom
Email: mmg@doc.ic.ac.uk
Phone: +44 (0) 20 7594 8357
Fax: +44 (0) 20 7594 8246
www.discovery-on-the.net