Provenance and pragmatics

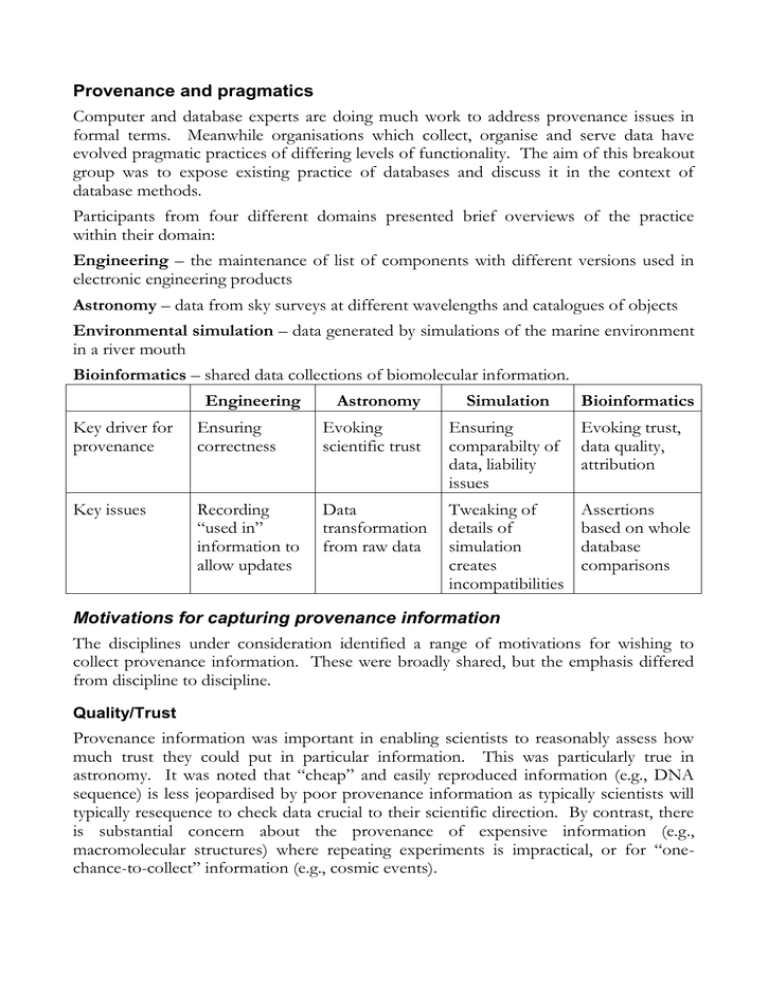

Computer and database experts are doing much work to address provenance issues in formal terms. Meanwhile, organisations that collect, organise and serve data have evolved pragmatic practices with differing levels of functionality. The aim of this breakout group was to expose existing database practice and to discuss it in the context of database methods.

Participants from four different domains presented brief overviews of the practice within their domain:

- Engineering – the maintenance of lists of components, in different versions, used in electronic engineering products
- Astronomy – data from sky surveys at different wavelengths, and catalogues of objects
- Environmental simulation – data generated by simulations of the marine environment in a river mouth
- Bioinformatics – shared data collections of biomolecular information

| | Engineering | Astronomy | Simulation | Bioinformatics |
|---|---|---|---|---|
| Key driver for provenance | Ensuring correctness | Inspiring scientific trust | Ensuring comparability of data; liability issues | Inspiring trust; data quality; attribution |
| Key issues | Recording “used in” information to allow updates | Data transformation from raw data | Tweaking the details of a simulation creates incompatibilities | Assertions based on whole-database comparisons |

Motivations for capturing provenance information

The disciplines under consideration identified a range of motivations for wishing to collect provenance information. These were broadly shared, but the emphasis differed from discipline to discipline.

Quality/Trust

Provenance information was important in enabling scientists to assess reasonably how much trust they could put in particular information. This was particularly true in astronomy. It was noted that “cheap” and easily reproduced information (e.g., DNA sequence) is less jeopardised by poor provenance information, as scientists will typically resequence to check data crucial to their scientific direction.
By contrast, there is substantial concern about the provenance of expensive information (e.g., macromolecular structures), where repeating experiments is impractical, and of “one-chance-to-collect” information (e.g., cosmic events).

Attribution

Correct attribution of scientific findings depends on the databases that include them carrying provenance information. In the biological arena this is seen as an important part of scientific credit, though it breaks down where composite objects are derived from the work of hundreds of scientists. It seems less of an issue for large-scale genome data or sky surveys, where the attribution is fairly clear. Liability (blame assignment) was seen as the dark side of attribution.

Priority

In the biological world, patent searches may need to establish exactly who created what, and when. At the EBI, special systems to support this have been developed (funded by the European Patent Office).

Interpretation

Provenance can be important to ensuring that information is interpreted correctly. This requirement was particularly important for the environmental simulation data, where one would like to draw conclusions by comparing periodic data generated over a long timescale by evolving simulation methods.

Interoperability

Connections between different data collections depend on the ability to identify equivalent (or related) objects.

Roll-back

Often the data presented are the result of refinement, reduction or transformation of “raw” data. If this pre-processing turns out to be suspect, it is necessary to re-examine or recompute the data.

Cost/Benefit/Risk

It became clear that, in practice, data curation centres are, implicitly or explicitly, making cost/benefit decisions about the collection and propagation of provenance information, alongside an intuitive assessment of the risks of failing to do so.
Thus:

- Cheap, reproducible data carry little risk, as they can be redetermined, so there is less pressure to record provenance.
- High-aggregation provenance (e.g., information derived from comparison with large, changing databases) seems expensive to collect well, and its suppliers simply give up on it.
- Expensive data (e.g., sky surveys, macromolecular structures) create pressure to capture provenance, because doing so is cheap by comparison with recollecting the data.
- Unique event data (e.g., cosmic events) cannot be verified after the fact, so they are only as good as the associated provenance information.
- Life-critical data (e.g., drug ingredient pedigree, aircraft component versions) create pressure to capture provenance because of the high risk associated with not doing so. We are therefore prepared to tolerate a fairly high cost in capturing the information.

Reducing the cost of provenance information

A defeatist might conclude that we effectively create provenance information only when it is essential or easy, and give up otherwise. However, the group took the view that there are some obvious strategies for reducing the burden of collecting the information, and accepted that there might also be some non-obvious ones.

Archive rather than serve provenance

Sometimes provenance information is needed so rarely that it merits little more than being secured so that it can be reworked later if required. For example, old versions of sequence databases are typically retained, but retrieving them takes substantial work.

Automate

If the tools that data producers use in creating their data record what they do in a standard way, then this information can be “harvested” to create high-quality provenance information with less effort.

…and the non-obvious

Can the task of recording the provenance of a complex and ever-changing database be turned into a database engineering problem rather than a scientific domain problem?
Could the very technology used to maintain the database also maintain the provenance information? The domain specialists look to the computer scientists for help here.
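
As a purely illustrative sketch of what "letting the database maintain provenance" might mean at its simplest, the example below uses SQLite triggers (through Python's built-in `sqlite3` module) so that every insert, update and delete on a curated table is logged by the database itself, whatever application makes the change. The table and column names (`structures`, `provenance_log`, `value`) are hypothetical. The log records only *what* changed and *when*; it does not capture *why*, *by whom*, or from which upstream data a value was derived, which is the harder part the domains above care about.

```python
from __future__ import annotations

import sqlite3

# Hypothetical schema: a curated table plus a provenance log that the
# database fills in itself via triggers, so no application code has to
# remember to record changes.
SCHEMA = """
CREATE TABLE structures (
    id    INTEGER PRIMARY KEY,
    name  TEXT NOT NULL,
    value TEXT NOT NULL
);

CREATE TABLE provenance_log (
    log_id     INTEGER PRIMARY KEY AUTOINCREMENT,
    record_id  INTEGER NOT NULL,
    action     TEXT NOT NULL,   -- 'insert', 'update' or 'delete'
    old_value  TEXT,            -- NULL for inserts
    new_value  TEXT,            -- NULL for deletes
    changed_at TEXT NOT NULL DEFAULT (datetime('now'))
);

CREATE TRIGGER structures_ins AFTER INSERT ON structures
BEGIN
    INSERT INTO provenance_log (record_id, action, new_value)
    VALUES (NEW.id, 'insert', NEW.value);
END;

CREATE TRIGGER structures_upd AFTER UPDATE ON structures
BEGIN
    INSERT INTO provenance_log (record_id, action, old_value, new_value)
    VALUES (NEW.id, 'update', OLD.value, NEW.value);
END;

CREATE TRIGGER structures_del AFTER DELETE ON structures
BEGIN
    INSERT INTO provenance_log (record_id, action, old_value)
    VALUES (OLD.id, 'delete', OLD.value);
END;
"""


def open_db() -> sqlite3.Connection:
    """Create an in-memory database with the schema and triggers installed."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def history(conn: sqlite3.Connection, record_id: int) -> list[tuple[str, str | None, str | None]]:
    """Return (action, old_value, new_value) entries for one record, oldest first."""
    cur = conn.execute(
        "SELECT action, old_value, new_value FROM provenance_log "
        "WHERE record_id = ? ORDER BY log_id",
        (record_id,),
    )
    return cur.fetchall()


if __name__ == "__main__":
    db = open_db()
    db.execute("INSERT INTO structures (id, name, value) VALUES (1, 'example', 'v1')")
    db.execute("UPDATE structures SET value = 'v2' WHERE id = 1")
    db.execute("DELETE FROM structures WHERE id = 1")
    for entry in history(db, 1):
        print(entry)
```

Running the script prints three entries for record 1: `('insert', None, 'v1')`, `('update', 'v1', 'v2')` and `('delete', 'v2', None)`. The log outlives the record itself, which is the property an archive of provenance needs. The design choice here is to push capture into the database layer so it cannot be skipped; richer provenance (derivation from other databases, the processing steps applied to raw data) would still need input from the domain tools.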