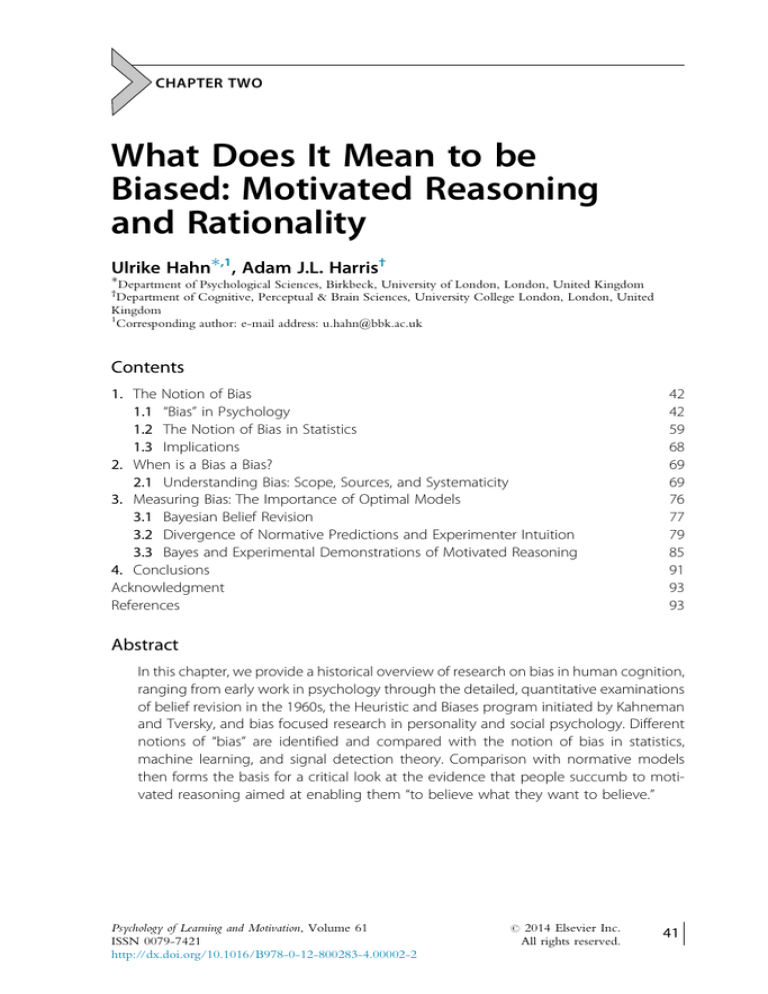

What Does It Mean to be Biased: Motivated Reasoning and Rationality Hahn
