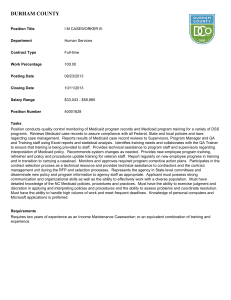

CHCS Performance Measurement in

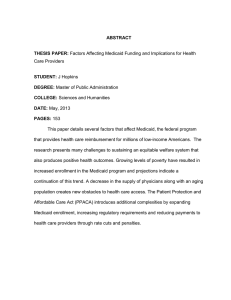
