JOINTLY ESTIMATING 3D TARGET SHAPE AND MOTION FROM RADAR DATA

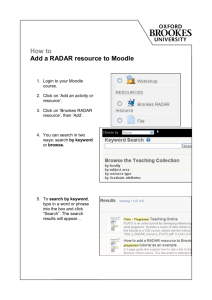
