3D Segmentation and Reconstruction of Endobronchial Ultrasound


Xiaonan Zang,¹ Mikhail Breslav,¹˒² and William E. Higgins¹∗

¹Department of Electrical Engineering, Penn State University, University Park, PA 16802

²Department of Computer Science, Boston University, Boston, MA 02215

ABSTRACT

State-of-the-art practice for lung-cancer staging bronchoscopy often draws upon a combination of endobronchial ultrasound (EBUS) and multidetector computed-tomography (MDCT) imaging. While EBUS offers real-time in vivo imaging of suspicious lesions and lymph nodes, its low signal-to-noise ratio and tendency to exhibit missing region-of-interest (ROI) boundaries complicate diagnostic tasks. Furthermore, past efforts did not incorporate automated analysis of EBUS images and a subsequent fusion of the EBUS and MDCT data. To address these issues, we propose near real-time automated methods for three-dimensional (3D) EBUS segmentation and reconstruction that generate a 3D ROI model along with ROI measurements. Results derived from phantom data and lung-cancer patients show the promise of the methods. In addition, we present a preliminary image-guided intervention (IGI) system example, whereby EBUS imagery is registered to a patient’s MDCT chest scan.

Keywords: endobronchial ultrasound, 3D reconstruction, bronchoscopy, lung cancer, image segmentation

1. INTRODUCTION

State-of-the-art practice for lung-cancer diagnosis and staging often consists of two steps: MDCT image assessment followed by bronchoscopy. During image assessment, the physician manually examines the stack of two-dimensional (2D) slices constituting a given 3D MDCT study to identify diagnostic ROIs, such as lymph nodes and nodules.1 Subsequently, with help from live endobronchial video feedback, the physician navigates a flexible bronchoscope through the patient’s airway tree toward the diagnostic ROI. Once the bronchoscope reaches the vicinity of the diagnostic target, the localization phase of bronchoscopy begins: the physician inserts a device, such as a needle, through the bronchoscope’s working channel to perform tissue biopsies.

In a recent study, Merritt et al. showed that, with standard videobronchoscopy practice, a physician could navigate to the correct airway 78% of the time but achieved a biopsy success rate of only 43% during localization.2 This large drop in performance at the localization stage greatly compromises diagnostic yield.

To improve the diagnostic yield of bronchoscopy, researchers have developed IGI systems.3–11 For example, motivated by the field of virtual bronchoscopy (VB),3, 12, 13 our laboratory has been devising an IGI system called the Virtual Navigator Suite (VNS).4, 5, 14 The VNS uses the preoperative 3D MDCT data to generate a virtual space of the chest (Figure 1). During follow-on bronchoscopy, the VNS enables image-guided bronchoscopic navigation, in addition to some rudimentary biopsy-site localization, by registering the intraoperative bronchoscopic video to VB renderings. Unfortunately, our current system, along with all other state-of-the-art IGI systems, cannot provide live visualizations of extraluminal structures to assist biopsy-site selection.9, 15, 16 Furthermore, the lack of live extraluminal guidance information could put patient safety at risk (e.g., a major blood vessel could be punctured).

Endobronchial ultrasound (EBUS) is a new, cost-effective, radiation-free, real-time imaging modality that can help localize ROIs and highlight other extraluminal anatomical structures such as blood vessels.17, 18 During bronchoscopic localization, the physician invokes EBUS and examines the EBUS video stream to decide on suitable ROI biopsy sites. EBUS uses the transmission and reflection of ultrasound waves to visualize anatomical structures with different acoustic impedances. Based on this imaging characteristic, the physician can qualitatively localize extraluminal targets such as lymph nodes and nodules by recognizing island-like homogeneous dark regions on 2D EBUS video frames.19, 20 The physician must then mentally reconstruct a 3D EBUS-based volume from the EBUS video stream for ROI inspection and biopsy-site selection.

∗Correspondence: Email: weh2@psu.edu; WWW: http://mipl.ee.psu.edu/; Telephone: 814-865-0186; Fax: 814-863-5341.

Medical Imaging 2013: Ultrasonic Imaging, Tomography, and Therapy, edited by Johan G. Bosch, Marvin M. Doyley, Proc. of SPIE Vol. 8675, 867505 · © 2013 SPIE · CCC code: 1605-7422/13/$18 · doi: 10.1117/12.2004901

Proc. of SPIE Vol. 8675 867505-1

Downloaded From: http://spiedigitallibrary.org/ on 03/05/2014 Terms of Use: http://spiedl.org/terms

Figure 1. Virtual and real spaces involved in using EBUS during image-guided bronchoscopy. This process is illustrated using human case 20349.3.66 along with the VNS.4, 5, 14 Prior to bronchoscopy, the physician identifies an ROI (magenta) on 2D MDCT scan images (top middle), and an airway route leading to the ROI is computed automatically. During bronchoscopy, the VNS can provide image-guided bronchoscopy by registering the live 2D bronchoscopic video to the VB renderings (left). Moreover, the VNS can overlay ROI biopsy-site information (green) on both the video frame and the VB rendering. Once the bronchoscope reaches the vicinity of the ROI (localization phase), the physician invokes EBUS to search for the optimal sampling location. In the bronchoscopy figure (bottom middle), the red circle marks the local biopsy-site search region, while the blue object and black triangle represent the ROI and EBUS scanning area, respectively. The real space is then derived from the EBUS video stream consisting of 2D EBUS frames (right). (The bronchoscopy representation is a drawing by Terese Winslow, Bronchoscopy, NCI Visuals Online, National Cancer Institute.)

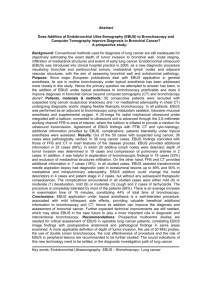

Two types of EBUS devices exist for bronchoscopy (Figure 2): (1) radial probes, which provide 360° cross-sectional views of the bronchial walls and adjacent structures to assist in the diagnosis of peripheral lesions;15, 21, 22 and (2) integrated bronchoscopes, which provide standard endobronchial video along with fan-shaped EBUS imagery for guiding central-chest transbronchial needle aspiration (TBNA).23 For central-chest lymph-node staging, recent studies have shown that EBUS TBNA is more accurate in predicting lymph-node status than CT and positron emission tomography (PET).23 Because of these strengths, many researchers have attempted to incorporate EBUS into IGI systems for ROI localization.15, 16

Unfortunately, EBUS usage has two major limitations. First, during EBUS visualization, a wave-interference phenomenon called “speckle” arises and often results in noisy, low-contrast EBUS images corrupted by dropouts.24 Second, while the reflection of an ultrasound wave highlights region borders on an EBUS image, the reflected values depend on the incident angle between the ultrasound-signal transmission and the medium interface. This orientation dependence frequently results in missing ROI boundaries. These limitations complicate diagnostic tasks, because ROI dimension estimates, which are important evaluation factors, become subjective and inaccurate.

Figure 2. Examples of 2D EBUS frames. (a) 2D EBUS scan from an Olympus UM-S20-17S radial EBUS probe for human case 21405.91. This scan provides a 360° view of extraluminal structures (pointed to by arrow). (b) 2D EBUS scan from an Olympus BF-UC160F-OL8 integrated EBUS bronchoscope for human case 20349.3.68. In this EBUS example, a biopsy needle (emanating from the green dot) appears as a white line and passes through a mass (pointed to by arrow).

To address these drawbacks, researchers have suggested numerous methods for reconstructing a 3D volume from 2D ultrasound (US) cross-sectional scans. Among these methods, the pixel nearest-neighbor (PNN) approach has been recommended for real-time or near real-time 3D US visualization because of its simplicity and fast execution time.25–27 This approach consists of two steps: (a) acquire 2D US images and associate them with their capture positions; and (b) based on this positional information, transform all pixels from the 2D images to their correct 3D locations to define a 3D EBUS-based real space (Figure 1).
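The two PNN steps can be made concrete with a minimal Python/NumPy sketch for the simplest acquisition discussed below: equidistant, parallel frames whose pixel grids are aligned with the volume axes. The function name `pnn_parallel_frames`, the placement of frame `i` at depth `i * frame_spacing_mm`, and the averaging of pixels that fall into the same voxel are illustrative assumptions, not details of the cited methods. Voxels that no pixel reaches stay `NaN`; such holes are what a later filling step must handle.

```python
import numpy as np

def pnn_parallel_frames(frames, frame_spacing_mm, pixel_mm, voxel_mm):
    """Pixel nearest-neighbor (PNN) bin filling for equidistant parallel frames.

    frames: array of shape (n_frames, height, width).
    Hypothetical geometry: frame i lies in the plane z = i * frame_spacing_mm,
    and pixel (row, col) sits at (row * pixel_mm, col * pixel_mm) in that plane.
    Returns a volume indexed (z, y, x); voxels hit by no pixel are NaN.
    """
    frames = np.asarray(frames, dtype=float)
    n, h, w = frames.shape
    # Step (a): capture positions -> volume size in voxels.
    nz = int(round((n - 1) * frame_spacing_mm / voxel_mm)) + 1
    ny = int(round((h - 1) * pixel_mm / voxel_mm)) + 1
    nx = int(round((w - 1) * pixel_mm / voxel_mm)) + 1
    total = np.zeros((nz, ny, nx))
    hits = np.zeros((nz, ny, nx))
    rows, cols = np.mgrid[0:h, 0:w]
    vy = np.rint(rows * pixel_mm / voxel_mm).astype(int)  # nearest voxel row
    vx = np.rint(cols * pixel_mm / voxel_mm).astype(int)  # nearest voxel column
    # Step (b): drop every pixel into its nearest voxel (unbuffered accumulation).
    for i in range(n):
        vz = int(round(i * frame_spacing_mm / voxel_mm))
        np.add.at(total, (vz, vy, vx), frames[i])
        np.add.at(hits, (vz, vy, vx), 1.0)
    with np.errstate(invalid="ignore"):
        return total / hits  # mean of colliding pixels; 0/0 gives NaN for empty voxels

vol = pnn_parallel_frames(np.ones((3, 4, 4)), frame_spacing_mm=2.0, pixel_mm=1.0, voxel_mm=1.0)
print(vol.shape)  # (5, 4, 4): slices 0, 2, 4 are filled, slices 1 and 3 are holes
```

`np.add.at` is used instead of fancy-index assignment so that several pixels landing in one voxel all contribute to the sum.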

Based on the PNN method, Molin et al. proposed a device-controlled 3D endoscopic ultrasound (EUS) reconstruction approach.28 They first collected a sequence of equidistant parallel 2D EUS images inside a patient’s gastrointestinal tract and then stacked these images together to perform 3D EUS analysis. Recently, Inglis et al. used a more flexible probe-control mechanism and developed a 3D freehand EUS pixel-based reconstruction technique for use in the upper gastrointestinal tract.29 Their reconstructed 3D EUS-based models provided excellent characterization of oesophageal structures along with accurate dimension and volume measurements. In the field of EBUS, Andreassen et al. collected sequences of parallel 2D EBUS images by continuously retracting a radial EBUS probe through the water-filled airways of five corpses, and reconstructed 3D EBUS-based image volumes to provide helpful anatomical visualizations and measurements.30 All of the aforementioned approaches, however, required considerable manual effort to generate 3D visualizations of the diagnostic ROI. Moreover, US analysis, such as ROI segmentation, took place only after the US reconstruction was complete. Consequently, these approaches were suitable only for postoperative US analysis.

While previous research could be applicable to EBUS analysis,25–31 past efforts have not incorporated automated analysis of EBUS images and a subsequent fusion of the EBUS and MDCT data, nor has any successful attempt been made to reconstruct 3D information from EBUS in vivo. To offer intraoperative guidance, reduce the subjective interpretation of conventional EBUS, and enable true 3D image analysis, we propose near real-time automated methods for 3D EBUS segmentation and reconstruction. These methods generate a 3D ROI model along with ROI measurements. In addition, we present a preliminary IGI-system example, whereby EBUS imagery is registered to a patient’s MDCT chest scan. Section 2 discusses the proposed methods, Section 3 provides results that validate the methods, and Section 4 offers concluding comments.


2. METHODS

As mentioned earlier, once the bronchoscope reaches the correct airway branch of an ROI, the physician invokes EBUS to scan the vicinity of the ROI and often freezes the EBUS video stream for in vivo verification. During this verification process, we employ an EBUS segmentation method to extract ROI contours from the frozen EBUS frame to help the physician detect the ROI. Subsequently, as the physician probes potential biopsy sites to decide on an optimal sampling location, we use a 3D EBUS reconstruction method to assemble the sequence of 2D EBUS frames into a 3D volume. This volume offers the physician a comprehensive 3D understanding of the ROI region. The following discussion lays out the 3D EBUS segmentation and reconstruction procedures in detail.

2.1 EBUS Segmentation

To offer a real-time interpretation of the EBUS data during live bronchoscopy, our segmentation method must be accurate and fast. To ensure accuracy, we customize the segmentation process to address the two major limitations of EBUS imagery noted above. In particular, the process must include filtering operations to increase the signal-to-noise ratio of the input EBUS frames, and it must be able to generate a complete ROI from ROI boundary fragments separated by gaps. Moreover, because the EBUS segmentation method is integrated with the 3D reconstruction algorithm to generate 3D ROI models, it must be able to extract ROI contours automatically from a sequence of 2D EBUS images.

[Figure 3 block diagram: 2D EBUS scan sequence → annular search region initialization → annular search region conversion → ROI contour extraction → ROI contour conversion → 2D ROI contours sequence]

Figure 3. EBUS segmentation method. The left column gives a block diagram of the method, while the right column depicts sample outputs during segmentation. In the top right figure, an annular search region is defined between two green circles, while the yellow cross represents the start node. The middle two figures are “straightened” versions of the search region. In the bottom two figures, ROI contour points are extracted and marked in green.


Overall, our semi-automatic segmentation method uses a graph-search algorithm to find locally optimal ROI contours within predefined search regions on given 2D EBUS frames (Figure 3). To extract a complete ROI contour on a given 2D EBUS frame $I_{US}$, our method first locates an annular search region that fully covers the boundary of an ROI. Given a sequence of 2D EBUS frames $I_{US}(i)$, $i = 0, 1, 2, \ldots$, we interactively initialize a center point $X_c$, an inner radius $r_i$, and an outer radius $r_o$ to define a search region on the first frame $I_{US}(0)$. This user-defined search region must have its inner circle falling within the ROI and its outer circle lying among background pixels only.

For each subsequent frame $I_{US}(i)$, the method automatically locates an annular search region based on both the previous frame’s segmentation and features from the current image. From the previous frame’s result, we initialize $X_c$, $r_i$, and $r_o$ to determine an annular search region on the current image. Because background pixels are typically brighter than ROI pixels in an EBUS image, we distinguish ROI pixels from background pixels using a threshold $T_r$.20 We then adjust $X_c$, $r_i$, and $r_o$ based on both the number of background pixels $n_b$ on the inner circle and the number of ROI pixels $n_r$ on the outer circle, as detailed in Algorithm 1. In Algorithm 1, $T_1$ and $T_2$ are user-specified thresholds, while $w_i$ and $w_o$ are adjustable weights. Figure 4 demonstrates how the process adjusts a search region to extract a complete ROI contour.

Algorithm 1. Search Region Adjustment Algorithm.

Input:
$I$: Intensity matrix of the input 2D EBUS frame $I_{US}$
$X_c$: Center point of an annular search region
$r_i$, $r_o$: Inner and outer circle radii

Output:
$X_c$, $r_i$, $r_o$

Algorithm:
1. Initialize an annular search region based on $X_c$, $r_i$, and $r_o$
2. loop
3.     $n_b \leftarrow 0$; $n_r \leftarrow 0$   /* Reset counters for the current search region */
4.     for all pixels $p$ on the inner circle do
5.         if $I(p) \ge T_r$ then $n_b \leftarrow n_b + 1$   /* p is a background pixel; count background pixels on the inner circle */
6.     end for
7.     for all pixels $p$ on the outer circle do
8.         if $I(p) < T_r$ then $n_r \leftarrow n_r + 1$   /* p is an ROI pixel; count ROI pixels on the outer circle */
9.     end for
10.    if $n_b < T_1$ and $n_r < T_2$ then exit loop   /* Search region is acceptable */
11.    if $n_b \ge T_1$ then
12.        Calculate the center $B$ of the background pixel cluster on the inner circle
13.        Move $X_c$ away from $B$ by half of the vector from the original $X_c$ to $B$
14.        Decrease $r_i$ by a factor of $w_i$
15.    end if
16.    if $n_r \ge T_2$ then
17.        Calculate the center $D$ of the ROI pixel cluster on the outer circle
18.        Move $X_c$ to the midpoint between the old $X_c$ and $D$
19.        Increase $r_o$ by a factor of $w_o$
20.    end if
21. end loop
22. Output $X_c$, $r_i$, $r_o$
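A minimal Python/NumPy sketch of the adjustment loop follows. Several details are assumptions not specified in the text: circles are sampled at roughly one point per pixel of arc and rounded to integer pixels, the cluster centers $B$ and $D$ are taken as the mean coordinates of the offending pixels, the default weights `wi = 0.9` and `wo = 1.1` are hypothetical, and a `max_iter` cap guards against non-termination. Coordinates are (row, column).

```python
import numpy as np

def circle_pixels(center, radius, shape):
    """Integer (row, col) pixels on a circle, clipped to the image bounds."""
    n = max(8, int(np.ceil(2 * np.pi * radius)))  # about one sample per pixel of arc
    t = np.linspace(0.0, 2 * np.pi, n, endpoint=False)
    rr = np.rint(center[0] + radius * np.sin(t)).astype(int)
    cc = np.rint(center[1] + radius * np.cos(t)).astype(int)
    ok = (rr >= 0) & (rr < shape[0]) & (cc >= 0) & (cc < shape[1])
    return np.unique(np.stack([rr[ok], cc[ok]], axis=1), axis=0)

def adjust_search_region(I, xc, ri, ro, Tr, T1, T2, wi=0.9, wo=1.1, max_iter=50):
    """Sketch of Algorithm 1: shift/resize the annulus until it brackets the ROI."""
    xc = np.asarray(xc, dtype=float)
    for _ in range(max_iter):
        inner = circle_pixels(xc, ri, I.shape)
        outer = circle_pixels(xc, ro, I.shape)
        bg_on_inner = inner[I[inner[:, 0], inner[:, 1]] >= Tr]   # background pixels
        roi_on_outer = outer[I[outer[:, 0], outer[:, 1]] < Tr]   # ROI pixels
        nb, nr = len(bg_on_inner), len(roi_on_outer)
        if nb < T1 and nr < T2:
            break                                  # annulus is acceptable
        if nb >= T1:
            B = bg_on_inner.mean(axis=0)
            xc = xc - 0.5 * (B - xc)               # move away from B by half of (B - Xc)
            ri *= wi                               # shrink the inner circle
        if nr >= T2:
            D = roi_on_outer.mean(axis=0)
            xc = 0.5 * (xc + D)                    # midpoint of Xc and D
            ro *= wo                               # grow the outer circle
    return xc, ri, ro

# Synthetic frame: bright background with a dark disk of radius 20 at (50, 50).
img = np.full((100, 100), 200.0)
yy, xx = np.mgrid[0:100, 0:100]
img[(yy - 50) ** 2 + (xx - 50) ** 2 <= 400] = 20.0
# The initial outer circle cuts into the disk on its right side, as in Figure 4(a).
xc, ri, ro = adjust_search_region(img, (50, 40), 5, 25, Tr=100, T1=3, T2=3)
```

In the example, one adjustment moves $X_c$ toward the dark cluster and enlarges $r_o$, after which neither circle crosses the ROI boundary.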

Within the defined annular search region, the segmentation method selects the pixel with maximum gradient magnitude $G$ as the start node $X_s$. The method then converts the annular search region into a trapezoidal section perpendicular to the line defined by $X_c$ and $X_s$ (Figure 5). Sonka et al. used this conversion procedure for intravascular ultrasound (IVUS) segmentation.31 In the trapezoidal region, each row of nodes consists of pixels on the same circle centered at $X_c$, while the first column of nodes consists of pixels on the line defined by $X_c$ and $X_s$. Thus, each node of the trapezoidal region corresponds to one pixel in the original EBUS frame.
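The conversion can be sketched as a polar resampling. In this minimal NumPy version, row `k` of the output holds samples on the circle of radius `ri + k` about $X_c$ and column 0 lies on the ray from $X_c$ through $X_s$, matching the row and column roles described above. As a simplifying assumption, every circle receives the same number of angular samples, so the result is rectangular rather than trapezoidal and inner circles are oversampled. The function also returns the source pixel of every node so that a path found in the grid can be mapped back to the frame; `unwrap_annulus` is a hypothetical name.

```python
import numpy as np

def unwrap_annulus(I, xc, xs, ri, ro, n_angles=None):
    """Resample the annulus ri <= radius <= ro about xc into a (radius, angle) grid.

    xc, xs: (row, col) of the center point and the start node.
    Returns (values, coords) with values[k, j] = I at coords[k, j] = (row, col).
    """
    cy, cx = float(xc[0]), float(xc[1])
    theta0 = np.arctan2(xs[0] - cy, xs[1] - cx)        # direction of the start node
    radii = np.arange(int(np.ceil(ri)), int(np.floor(ro)) + 1)
    if n_angles is None:
        n_angles = int(np.ceil(2 * np.pi * ro))        # ~1 pixel of arc on the outer circle
    theta = theta0 + 2 * np.pi * np.arange(n_angles) / n_angles
    rows = np.rint(cy + radii[:, None] * np.sin(theta)[None, :]).astype(int)
    cols = np.rint(cx + radii[:, None] * np.cos(theta)[None, :]).astype(int)
    rows = np.clip(rows, 0, I.shape[0] - 1)             # stay inside the frame
    cols = np.clip(cols, 0, I.shape[1] - 1)
    return I[rows, cols], np.stack([rows, cols], axis=-1)

frame = np.random.default_rng(0).integers(0, 256, size=(480, 640))
values, coords = unwrap_annulus(frame, xc=(240, 320), xs=(240, 360), ri=20, ro=60)
# values[:, 0] runs outward along the ray through the start node.
```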

Within the “straightened” version of the annular search region, the segmentation method applies a graph-search algorithm to find the optimal path from the start node $X_s$ to a set of end nodes. The algorithm uses a local-cost function $l(p, r)$ defined as follows:32

$$
l(p, r) = w_G f_G(r) + w_Z f_Z(r) + w_{D1} f_{D1}(p, r) + w_{D2} f_{D2}(p, r) \tag{1}
$$


Figure 4. Search-region adjustment example for a tissue-mimicking phantom. (a) An initial search region is defined between two green circles on a 2D EBUS frame $I_{US}(i)$ based on the segmentation outcome of the previous frame $I_{US}(i-1)$. The left side of the outer circle, however, includes ROI (dark) pixels. (b) The center point $X_c$ is moved toward the dark pixel cluster, so that the outer circle falls only within the background domain. (c) After search-region adjustment, a complete ROI contour is extracted.


Figure 5. Annular search region conversion. An annular search region is transformed into a trapezoidal section. After this transformation, the start node $X_s$ must correspond to the first column to ensure that a complete ROI contour is extracted.

where $p$ is the seed pixel, $r$ is an 8-connected neighbor pixel of $p$, $w_G$, $w_Z$, $w_{D1}$, and $w_{D2}$ are user-specified weights, and $f_G(r)$, $f_Z(r)$, $f_{D1}(p, r)$, and $f_{D2}(p, r)$ are cost components. This cost function is especially appropriate for segmentation tasks involving weak boundaries.

We briefly overview the cost components of (1) below; for more discussion of these components, please refer to Lu and Higgins.32 Let $I$ be the intensity matrix of an input image, and let $I_x = \partial I / \partial x$ and $I_y = \partial I / \partial y$ represent the horizontal and vertical components of the gray-scale gradient of $I$. The gradient-magnitude cost $f_G(r)$ is given by

$$
f_G(r) = 1 - \frac{G(r)}{G_{max}} \tag{2}
$$

where $G = \sqrt{I_x^2 + I_y^2}$ and $G_{max}$ is the maximum value of $G$ within the image. Next, the Laplacian zero-crossing cost $f_Z(r)$ is given by

$$
f_Z(r) = \begin{cases} 0, & \text{if } I_L(r) \le T_l \\ 1, & \text{if } I_L(r) > T_l \end{cases} \tag{3}
$$

where $I_L(r)$ is the Laplacian of $I$ at pixel $r$ and $T_l$ is a threshold.33 Both costs $f_G(r)$ and $f_Z(r)$ are small when pixel $r$ is on or close to ROI edges.
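A minimal NumPy sketch of (2) and (3) follows, assuming the gradient components and the Laplacian have already been computed; here `np.gradient` stands in for the filtered derivatives defined later in this section. Setting every cost to 1 on a perfectly flat image is an added assumption that avoids dividing by zero.

```python
import numpy as np

def gradient_magnitude_cost(Ix, Iy):
    """f_G = 1 - G / G_max with G = sqrt(Ix^2 + Iy^2), eq. (2)."""
    G = np.hypot(Ix, Iy)
    g_max = G.max()
    if g_max == 0:
        return np.ones_like(G)     # no edge evidence anywhere (assumption)
    return 1.0 - G / g_max

def zero_crossing_cost(IL, Tl):
    """f_Z = 0 where I_L <= T_l and 1 where I_L > T_l, eq. (3)."""
    return (IL > Tl).astype(float)

# Vertical step edge between columns 2 and 3.
img = np.zeros((5, 6))
img[:, 3:] = 10.0
Iy, Ix = np.gradient(img)          # derivatives along rows (axis 0), then columns (axis 1)
print(gradient_magnitude_cost(Ix, Iy)[0])   # [1. 1. 0. 0. 1. 1.]: lowest cost on the edge
```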

Because EBUS imagery has a low signal-to-noise ratio, the ROI edge information provided by $f_G$ and $f_Z$ is often weak. Therefore, to assist ROI boundary detection, two gradient-direction costs, $f_{D1}$ and $f_{D2}$, are calculated:

$$
f_{D1}(p, r) = \frac{2}{3\pi}\left[\theta_1 + \theta_2\right] \tag{4}
$$

$$
f_{D2}(p, r) = \frac{1}{\pi}\left[\theta_1 - \theta_2\right] \tag{5}
$$

where

$$
\theta_1 = \cos^{-1}\left[d_p(p, r)\right], \qquad \theta_2 = \cos^{-1}\left[d_r(p, r)\right]
$$

are angles derived from the vector dot products $d_p(p, r)$ and $d_r(p, r)$ given by

$$
d_p(p, r) = D'(p) \cdot L(p, r), \qquad d_r(p, r) = D'(r) \cdot L(p, r),
$$

$$
D'(p) = \left[I_y(p), -I_x(p)\right], \qquad D'(r) = \left[I_y(r), -I_x(r)\right],
$$

and

$$
L(p, r) = \frac{1}{\lVert p - r \rVert} \begin{cases} r - p, & \text{if } D'(p) \cdot (r - p) \ge 0 \\ p - r, & \text{if } D'(p) \cdot (r - p) < 0 \end{cases}
$$

Costs $f_{D1}$ and $f_{D2}$ are small when pixels $p$ and $r$ have similar gradient directions.
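The direction costs can be sketched for a single link as below. Two assumptions go beyond the printed formulas: $D'(\cdot)$ is normalized to unit length so that the dot products lie in $[-1, 1]$ and $\cos^{-1}$ is defined (the usual convention in live-wire cost functions), and pixel positions are given as $(x, y)$ pairs in the same frame as $[I_x, I_y]$. The `grad` callable is a hypothetical hook that returns $(I_x, I_y)$ at a pixel.

```python
import numpy as np

def direction_costs(p, r, grad):
    """Return (f_D1, f_D2) for the link p -> r, following eqs. (4) and (5)."""
    def unit_perp(q):
        ix, iy = grad(q)
        d = np.array([iy, -ix], dtype=float)    # D'(q) = [I_y(q), -I_x(q)]
        n = np.linalg.norm(d)
        return d / n if n > 0 else d            # assumption: unit length

    p = np.asarray(p, dtype=float)
    r = np.asarray(r, dtype=float)
    dp_vec, dr_vec = unit_perp(p), unit_perp(r)
    step = r - p
    link = step if dp_vec @ step >= 0 else -step  # orientation rule of L(p, r)
    link = link / np.linalg.norm(link)
    theta1 = np.arccos(np.clip(dp_vec @ link, -1.0, 1.0))
    theta2 = np.arccos(np.clip(dr_vec @ link, -1.0, 1.0))
    f_d1 = 2.0 / (3.0 * np.pi) * (theta1 + theta2)
    f_d2 = (theta1 - theta2) / np.pi
    return f_d1, f_d2

def grad(q):
    """Uniform horizontal gradient, so edges run vertically."""
    return (1.0, 0.0)

along = direction_costs((0, 0), (0, -1), grad)   # link along the edge: both costs 0
across = direction_costs((0, 0), (1, 0), grad)   # link across the edge: f_D1 = 2/3
```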

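On a related front, the segmentation method integrates a Gaussian filter into the calculations of $I_x$, $I_y$, and $I_L$ to increase the signal-to-noise ratio. In particular,

$$
I_x \cong F_x * I \tag{6}
$$

$$
I_y \cong F_y * I \tag{7}
$$

where $x$ and $y$ are the row and column indices of $I$, and $F_x$ and $F_y$ are 2D filters defined by

$$
F_x = G_y \cdot D_x = \left[e^{-\frac{1}{2\sigma^2}} \;\; 1 \;\; e^{-\frac{1}{2\sigma^2}}\right]^T \cdot \left[-1 \;\; 0 \;\; 1\right]
$$

$$
F_y = D_y \cdot G_x = \left[-1 \;\; 0 \;\; 1\right]^T \cdot \left[e^{-\frac{1}{2\sigma^2}} \;\; 1 \;\; e^{-\frac{1}{2\sigma^2}}\right]
$$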

where $D_x$ and $D_y$ are two one-dimensional (1D) difference operators that approximate the first-order derivatives in the row and column directions, $G_x$ and $G_y$ are 1D Gaussian smoothing filters, and $\sigma$ is an empirically defined standard-deviation (scale) parameter. Each filter $F_x$ and $F_y$ smooths an image in one direction while calculating the gradient in the orthogonal direction. This filter implementation is similar to the two operators of a Canny detector.34 Next, we calculate $I_L$ by

$$
I_L = LoG(k, l) * I \tag{8}
$$

where $LoG$ is the Laplacian-of-Gaussian operator, approximated by the $3 \times 3$ mask

$$
LoG(k, l) = -\frac{1}{\pi\sigma^4}\left[1 - \frac{k^2 + l^2}{2\sigma^2}\right] e^{-\frac{k^2 + l^2}{2\sigma^2}}, \qquad k, l \in \{-1, 0, 1\}
$$
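The filter definitions translate directly into small NumPy arrays. In this minimal sketch the masks are applied as a correlation (no kernel flip) so that $F_x$ responds positively where intensity increases with column index; a true convolution would only flip the signs of $I_x$ and $I_y$ everywhere, which leaves $G$ and the direction costs unchanged. The edge-replicating border and the helper names are assumptions.

```python
import numpy as np

def gaussian_derivative_filters(sigma):
    """F_x = G_y . D_x and F_y = D_y . G_x as 3x3 outer products."""
    e = np.exp(-1.0 / (2.0 * sigma ** 2))
    g = np.array([e, 1.0, e])            # 1D Gaussian samples at -1, 0, 1
    d = np.array([-1.0, 0.0, 1.0])       # 1D central difference
    return np.outer(g, d), np.outer(d, g)

def log_mask(sigma):
    """3x3 Laplacian-of-Gaussian mask LoG(k, l) for k, l in {-1, 0, 1}."""
    k, l = np.mgrid[-1:2, -1:2]
    s = (k ** 2 + l ** 2) / (2.0 * sigma ** 2)
    return -(1.0 / (np.pi * sigma ** 4)) * (1.0 - s) * np.exp(-s)

def filter3x3(I, K):
    """Same-size correlation of image I with 3x3 mask K (edge-replicated border)."""
    P = np.pad(np.asarray(I, dtype=float), 1, mode="edge")
    h, w = np.shape(I)
    out = np.zeros((h, w))
    for a in range(3):
        for b in range(3):
            out += K[a, b] * P[a:a + h, b:b + w]
    return out

img = np.zeros((5, 6))
img[:, 3:] = 10.0                        # vertical step edge
Fx, Fy = gaussian_derivative_filters(sigma=1.0)
Ix, Iy = filter3x3(img, Fx), filter3x3(img, Fy)
IL = filter3x3(img, log_mask(sigma=1.0))
```

With $\sigma = 1$ the corner entries of the LoG mask vanish, because $k^2 + l^2 = 2 = 2\sigma^2$ there.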

Our graph-search procedure (Algorithm 2) uses a Dijkstra-based search method,35 similar to the one presented by Lu and Higgins.32 When examining the neighbors of a pixel $p$, however, our method considers only the three forward neighbors of $p$ rather than all of its 8-connected neighbors, as Lu and Higgins did. This constraint, together with the annular search region conversion, substantially reduces computation. We also believe the approach is well suited to extracting thin ROI borders, such as those arising for vessels and nodules.

Lastly, the segmentation method marks each node on the optimal path as an ROI contour point and maps it from the “straightened” version of the annular search region back to its corresponding location on the original EBUS frame. The method then generates the ROI contour by connecting these contour points. The method also calculates the Euclidean distance between every pair of ROI contour points and uses the largest such distance as the maximum diameter of the ROI.
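The maximum-diameter computation is a brute-force search over all point pairs, which is inexpensive for contours of a few hundred points. A minimal NumPy sketch follows; the `mm_per_pixel` scaling is an illustrative parameter (at the 0.1 mm/pixel resolution reported in Section 3.1, a 1.13 cm diameter corresponds to 113 pixels).

```python
import numpy as np

def max_diameter(points, mm_per_pixel=1.0):
    """Largest pairwise Euclidean distance among contour points, O(N^2).

    Returns the distance (scaled by mm_per_pixel) and the indices of the
    two points that realize it (useful for drawing the diameter line).
    """
    P = np.asarray(points, dtype=float)
    diff = P[:, None, :] - P[None, :, :]           # all pairwise difference vectors
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    i, j = np.unravel_index(np.argmax(dist), dist.shape)
    return dist[i, j] * mm_per_pixel, (int(i), int(j))

contour = [(0, 0), (0, 3), (4, 0), (4, 3)]         # corners of a 4 x 3 pixel rectangle
diameter_mm, endpoints = max_diameter(contour, mm_per_pixel=0.1)
```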


Algorithm 2. Automatic Graph-search Algorithm

Input:
$X_s$: Start node
$l(p, r)$: Local cost function for the link between pixels $p$ and $r$

Data Structures:
$L$: List of active pixels sorted by total cost (initially empty)
$N(p)$: Neighborhood set of pixel $p$ (the three forward neighbors of $p$)
$e(p)$: Boolean function indicating whether $p$ has been processed
$g(p)$: Total cost from the seed point to pixel $p$
$pt(p)$: Back pointer of $p$, used to trace the min-cost path

Output:
$P(X_e)$: Min-cost path from an end pixel $X_e$ to the start node

Algorithm:
/* Initialize active pixel list with zero-cost seed pixel */
1. $g(X_s) \leftarrow 0$; $L \leftarrow \{X_s\}$;
2. while $L \neq \emptyset$ do   /* Iterate while active pixel list is not empty */
3.     $p \leftarrow \min(L)$;   /* Remove min-cost pixel from L and assign it to p */
4.     $e(p) \leftarrow$ TRUE;   /* Mark p as processed */
5.     for each $r \in N(p)$ such that $\neg e(r)$ do
6.         $g_{temp} \leftarrow g(p) + l(p, r)$;   /* Compute total cost to a neighbor */
7.         if $r \in L$ and $g_{temp} < g(r)$ then   /* Update total cost and set back pointer */
8.             $g(r) \leftarrow g_{temp}$; $pt(r) \leftarrow p$;
9.         else if $r \notin L$ then   /* If neighbor not on list */
10.            $g(r) \leftarrow g_{temp}$;   /* Assign neighbor’s total cost */
11.            $pt(r) \leftarrow p$;   /* Set back pointer */
12.            $L \leftarrow L \cup \{r\}$;   /* Place neighbor on active list */
13.        end if
14.    end for
15. end while
16. for each pixel $r$ at the right edge of the trapezoidal region do
17.    if $X_e$ is not assigned then
18.        Set $r$ as $X_e$
19.    else if $g(r) < g(X_e)$ then
20.        Set $r$ as $X_e$
21.    end if
22. end for
/* Output min-cost path from an end node to the start node */
23. Output $P(X_e)$
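Algorithm 2 can be sketched in Python with a binary heap standing in for the sorted active list $L$. In this minimal version, nodes are (row, column) indices of the straightened region, the three forward neighbors of $(k, j)$ are taken to be $(k-1, j+1)$, $(k, j+1)$, and $(k+1, j+1)$ (an assumption about how “forward” is realized), and `link_cost(p, r)` is a caller-supplied stand-in for $l(p, r)$. Stale heap entries are skipped instead of being updated in place, a standard substitute for the explicit list update of steps 7–8.

```python
import heapq
import math

def forward_graph_search(shape, start, link_cost):
    """Min-cost path from `start` (in column 0) to the cheapest node in the last column."""
    n_rows, n_cols = shape
    g = {start: 0.0}                 # total cost g(p)
    back = {}                        # back pointers pt(p)
    done = set()                     # processed pixels, e(p) = TRUE
    heap = [(0.0, start)]            # active list L, keyed by total cost
    while heap:
        g_p, p = heapq.heappop(heap)
        if p in done:
            continue                 # stale entry: p was already finalized
        done.add(p)
        k, j = p
        if j == n_cols - 1:
            continue                 # right edge has no forward neighbors
        for dk in (-1, 0, 1):        # the three forward neighbors N(p)
            r = (k + dk, j + 1)
            if not 0 <= r[0] < n_rows or r in done:
                continue
            g_tmp = g_p + link_cost(p, r)
            if g_tmp < g.get(r, math.inf):
                g[r] = g_tmp
                back[r] = p
                heapq.heappush(heap, (g_tmp, r))
    # Steps 16-22: pick the cheapest reached node on the right edge as X_e.
    x_e = min(((k, n_cols - 1) for k in range(n_rows) if (k, n_cols - 1) in g),
              key=lambda node: g[node])
    path = [x_e]
    while path[-1] != start:         # follow back pointers to the start node
        path.append(back[path[-1]])
    return path[::-1], g[x_e]

cost = [[1.0] * 6 for _ in range(5)]
cost[2] = [0.0] * 6                  # a cheap "boundary" along row 2
path, total = forward_graph_search((5, 6), (0, 0), lambda p, r: cost[r[0]][r[1]])
print(path, total)  # [(0, 0), (1, 1), (2, 2), (2, 3), (2, 4), (2, 5)] 1.0
```

Because each node links only to the next column, every path visits each column exactly once, which is what lets a single left-to-right sweep trace a closed contour around $X_c$.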

2.2 EBUS Reconstruction

Before starting the reconstruction process, we must know the dimensions and resolution of the EBUS-based real space. To do this, we first examine the given MDCT sections and define the physical area of interest for reconstruction. We then determine the dimensions of the real space based on the physical volume size and the resolution of the input EBUS frames. Finally, we carry out the reconstruction process (Figure 6):

1. Map each 2D ROI contour point to a voxel in the EBUS-based real space.
2. Traverse the EBUS-based real space and generate a 3D ROI model by marking every voxel surrounded by or belonging to contour points as an ROI voxel.
3. Apply a surface-extraction algorithm to generate a 3D surface from the reconstructed ROI model.36

[Figure 6 block diagram: 2D EBUS-based ROI contour sequence → 2D ROI contours transformation → 3D ROI model reconstruction → 3D ROI surface extraction → 3D EBUS-based ROI model]

Figure 6. 3D EBUS reconstruction method.

In a 2D EBUS image $I_{US}$, we denote the set of ROI contour points $P$ as

$$
P = \{p_j = (x_j, y_j) \mid j = 0, 1, 2, \ldots, N - 1\},
$$

where $N$ is the total number of contour points. We represent the position of $I_{US}$ in the real space by the pose

$$
\Theta^R = \{\mu_x^R, \mu_y^R, \mu_z^R, \alpha^R, \beta^R, \gamma^R\},
$$

where $(\mu_x^R, \mu_y^R, \mu_z^R)$ denotes the 3D spatial location and $(\alpha^R, \beta^R, \gamma^R)$ denotes the Euler angles specifying the EBUS frame’s orientation with respect to the real-space coordinate system $R$. To map a 2D ROI contour point $p$ from its location $(x, y)$ in $I_{US}$ to its corresponding coordinates $(X, Y, Z)$ inside the real space, we must know $\Theta^R$ and calculate $(X, Y, Z)$ by37

$$
\begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix}
=
\begin{bmatrix}
\cos\alpha^R \cos\gamma^R - \sin\alpha^R \cos\beta^R \sin\gamma^R & -\sin\alpha^R \cos\beta^R \cos\gamma^R - \cos\alpha^R \sin\gamma^R & \sin\alpha^R \sin\beta^R & \mu_x^R \\
\cos\alpha^R \cos\beta^R \sin\gamma^R + \sin\alpha^R \cos\gamma^R & \cos\alpha^R \cos\beta^R \cos\gamma^R - \sin\alpha^R \sin\gamma^R & -\cos\alpha^R \sin\beta^R & \mu_y^R \\
\sin\beta^R \sin\gamma^R & \sin\beta^R \cos\gamma^R & \cos\beta^R & \mu_z^R \\
0 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} x \\ y \\ 0 \\ 1 \end{bmatrix}
\tag{9}
$$
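Equation (9) is a rigid transform built from a $z$–$x$–$z$ Euler rotation, $R = R_z(\alpha^R)\,R_x(\beta^R)\,R_z(\gamma^R)$, followed by the translation $(\mu_x^R, \mu_y^R, \mu_z^R)$; expanding that product reproduces the 3×3 block above. The NumPy sketch below builds the 4×4 matrix and maps contour points. It assumes $(x, y)$ have already been converted from pixel indices to the physical units of the real space (e.g., multiplied by the frame’s mm/pixel resolution); the function names are hypothetical.

```python
import numpy as np

def pose_matrix(mu, alpha, beta, gamma):
    """4x4 transform of eq. (9): rotation Rz(alpha) Rx(beta) Rz(gamma), then translation mu."""
    ca, sa = np.cos(alpha), np.sin(alpha)
    cb, sb = np.cos(beta), np.sin(beta)
    cg, sg = np.cos(gamma), np.sin(gamma)
    T = np.eye(4)
    T[:3, :3] = [
        [ca * cg - sa * cb * sg, -sa * cb * cg - ca * sg,  sa * sb],
        [ca * cb * sg + sa * cg,  ca * cb * cg - sa * sg, -ca * sb],
        [sb * sg,                 sb * cg,                  cb],
    ]
    T[:3, 3] = mu
    return T

def map_contour_points(points_xy, T):
    """Map in-plane points (x, y) to real-space (X, Y, Z) via [x, y, 0, 1]^T."""
    P = np.asarray(points_xy, dtype=float)
    homog = np.column_stack([P[:, 0], P[:, 1], np.zeros(len(P)), np.ones(len(P))])
    return (homog @ T.T)[:, :3]

# With zero rotation the frame is simply translated by mu.
T = pose_matrix(mu=(1.0, 2.0, 3.0), alpha=0.0, beta=0.0, gamma=0.0)
print(map_contour_points([(4.0, 5.0)], T))   # [[5. 7. 3.]]
```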

In practice, by predetermining the pullback path and speed of the EBUS device, we can collect a sequence of 2D EBUS video frames and obtain the pose $\Theta^R(i)$ for each EBUS frame $I_{US}(i)$. We can then apply the mapping procedure of (9) to place each EBUS frame into the real space. During the mapping process, we mark the voxels closest to the 2D ROI points as ROI voxels. Let $v$ and $I_R(v)$ represent a voxel in the real space and its value, respectively, where

$$
I_R(v) = \begin{cases} 1, & \text{if } v \text{ is an ROI voxel} \\ 0, & \text{otherwise} \end{cases}
$$

After the mapping step, we traverse the EBUS-based real space and assess the unmarked voxels based on their 8-connected neighbors. For an unmarked voxel $v$, we calculate its value by

$$
I_R(v) = \sum_{r \in N(v)} \frac{I_R(r)}{w_r} \tag{10}
$$

where $N(v)$ is the set of 8-connected neighbor voxels of $v$ and $w_r$ is the Euclidean distance between voxels $v$ and $r$. Next, we use a predefined threshold $T$ to determine whether the voxel is an ROI or a background voxel. After marking all voxels inside the real space, we apply the marching-cubes algorithm to generate the 3D ROI surface.36 Overall, our reconstruction process is derived from the PNN reconstruction method.25–27, 38 Algorithm 3 summarizes the reconstruction process.

Algorithm 3. 3D EBUS Reconstruction Algorithm

Input:
$V$: EBUS-based real space
$i$: Frame index in a sequence of 2D EBUS video frames
$I_{US}(i)$: 2D EBUS frame
$P_i$: The set of ROI contour points on $I_{US}(i)$
$\Theta^R(i)$: The pose of $I_{US}(i)$

Output:
$S$: 3D ROI surface

Algorithm:
1. for all EBUS frames $I_{US}(i)$ do
2.     for all ROI contour points $p \in P_i$ do
3.         Map $p$ into $V$ based on $\Theta^R(i)$ using (9)
4.         Mark the voxel $v$ closest to $p$ as an ROI voxel
5.     end for
6. end for
7. for all voxels $v \in V$ do
8.     if $v$ is NOT marked then
9.         Assign $v$ a value $I_R(v)$ using (10)
10.        if $I_R(v) \ge T$ then
11.            Mark $v$ as an ROI voxel
12.        end if
13.        if $I_R(v) < T$ then
14.            Mark $v$ as a background voxel
15.        end if
16.    end if
17. end for
18. Use a surface-extraction algorithm to generate a 3D ROI surface $S$
19. Output $S$
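Steps 7–17 of Algorithm 3 amount to one pass of (10) followed by thresholding. The vectorized NumPy sketch below makes two assumptions the text leaves open: in 3D it uses the 26 surrounding voxels as the neighborhood (the text says “8-connected”; pass a different `offsets` list to change this), and every unmarked voxel is scored from the marks produced by the mapping step only, so newly labeled voxels do not propagate within the same pass. The resulting binary volume could then be passed to a marching-cubes implementation such as scikit-image’s `skimage.measure.marching_cubes`.

```python
import itertools
import numpy as np

def fill_unmarked(marked, T, offsets=None):
    """One pass of eq. (10) plus thresholding on a binary volume of mapped ROI voxels.

    marked: boolean array (z, y, x), True where a contour point was mapped.
    Returns a boolean ROI volume.
    """
    V = np.asarray(marked, dtype=float)
    if offsets is None:   # assumption: 26-neighborhood in 3D
        offsets = [o for o in itertools.product((-1, 0, 1), repeat=3) if o != (0, 0, 0)]
    P = np.pad(V, 1)      # voxels outside the volume count as background (I_R = 0)
    nz, ny, nx = V.shape
    score = np.zeros_like(V)
    for dz, dy, dx in offsets:
        w = np.sqrt(dz * dz + dy * dy + dx * dx)    # Euclidean distance w_r
        score += P[1 + dz:1 + dz + nz, 1 + dy:1 + dy + ny, 1 + dx:1 + dx + nx] / w
    unmarked = V == 0
    return (V == 1) | (unmarked & (score >= T))

vol = np.zeros((3, 3, 3), dtype=bool)
vol[1, 1, 1] = True
print(fill_unmarked(vol, T=1.0).sum())   # 7: the seed plus its 6 face neighbors
```

With $T = 1$, a single marked voxel recruits only its face neighbors (weight $1/1$), since edge and corner neighbors contribute $1/\sqrt{2}$ and $1/\sqrt{3}$.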


3. RESULTS

3.1 Segmentation Results

We tested the EBUS segmentation method on three data sets. Two were collected with an Olympus UM-S20-17S radial EBUS probe, one from a tissue-mimicking phantom (Figure 7) and one from a live patient procedure, while the third was obtained with an Olympus BF-UC160F-OL8 integrated EBUS bronchoscope during another live human bronchoscopy.

The phantom has been an invaluable tool for developing our EBUS segmentation and reconstruction techniques. It enabled controlled data collection, such as freehand retraction of a radial EBUS probe at constant speed. It also provided a simple ground-truth test, in that it produced excellent EBUS images with an echo texture similar to that of standard-practice EBUS.


Figure 7. Tissue-mimicking phantom. We made this phantom using the ingredients suggested by Bude and Adler.39 In particular, the background mixture of this phantom consisted of agar and Metamucil powder, while the ROI component included only agar powder. (a) Cross-sectional view of the phantom, which shows a hollow observation channel (pointed to by arrow) and ROIs (marked by red circle). (b) External view of the constructed phantom with a hollow plastic tube inserted. During our tests, we removed the plastic tube and inserted the EBUS probe into the hollow observation channel. (c) 2D EBUS scan of the phantom showing echolucent ROIs and echoic background.

From each data set, we selected one 2D EBUS frame as the input image. All images measured 640×480 pixels, with a pixel resolution of 0.1 mm/pixel. From these images, we first chose ROIs $R_1$, $R_2$, and $R_3$ (Figure 8(a–c)). $R_1$ was a cross-sectional view of a cylindrical object inside the tissue-mimicking phantom, while $R_2$ and $R_3$ were cross-sectional views of extraluminal diagnostic targets imaged during live human bronchoscopy.

To evaluate method performance, we used the accuracy evaluation approach suggested by Lu and Higgins.32 We first manually extracted all ROI contours to produce the ground-truth data $G_j$, $j = 1, 2, 3$, where $G_j$ is a binary image representation of ROI $R_j$: $G_j(x, y) = 1$ when the corresponding pixel $(x, y)$ is inside or on a manually extracted ROI boundary; otherwise, $G_j(x, y) = 0$. Next, we applied our segmentation method to the input frames, with binary image $B_j$, $j = 1, 2, 3$, representing the segmentation result for $R_j$. Lastly, we defined the segmentation accuracy $a(R_j)$ of ROI $R_j$ as

$$
a(R_j) = 1 - \frac{|B_j \oplus G_j|}{|B_j| + |G_j|} \tag{11}
$$

where $|B_j|$ represents the sum of the elements constituting $B_j$ and $\oplus$ is the exclusive-or operator. Figure 8 shows segmentation results, while Table 1 lists our accuracy results.
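Because $|B_j \oplus G_j| = |B_j| + |G_j| - 2|B_j \cap G_j|$, the accuracy in (11) equals $2|B_j \cap G_j| / (|B_j| + |G_j|)$, i.e., the Dice similarity coefficient. A minimal NumPy sketch of (11) with a toy example follows; the function name is hypothetical.

```python
import numpy as np

def segmentation_accuracy(B, G):
    """a(R) = 1 - |B xor G| / (|B| + |G|), eq. (11), for binary masks B and G."""
    B = np.asarray(B, dtype=bool)
    G = np.asarray(G, dtype=bool)
    return 1.0 - np.logical_xor(B, G).sum() / (B.sum() + G.sum())

B = np.zeros((4, 4), dtype=bool)
G = np.zeros((4, 4), dtype=bool)
B[0, 0:4] = True                 # 4 segmented pixels
G[0, 1:4] = True                 # 3 of them match the ground truth...
G[1, 0] = True                   # ...and the ground truth has 1 pixel the segmentation missed
print(segmentation_accuracy(B, G))   # 0.75, equal to Dice: 2 * 3 / (4 + 4)
```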

Table 1. Segmentation accuracy results $a(R_j)$, per (11).

| | $R_1$ | $R_2$ | $R_3$ |
|---|---|---|---|
| Accuracy | 0.990 | 0.964 | 0.949 |


Figure 8. ROIs and the corresponding segmentation results. (a) ROI $R_1$, a cross-sectional view of a cylindrical object (pointed to by arrow) inside the tissue-mimicking phantom. (b) ROI $R_2$, a cross-sectional view of a diagnostic target (pointed to by arrow) from human case 21405.91. (c) ROI $R_3$, a cross-sectional view of a diagnostic target (pointed to by arrow) from human case 20349.3.66. (d) Segmentation result of $R_1$. (e) Segmentation result of $R_2$. (f) Segmentation result of $R_3$. Yellow pixels represent matching pixels between the ground truth and segmentation results. Red pixels belong only to the ground truth, green pixels belong only to the segmentation results, and yellow dotted lines indicate the maximum ROI diameters. In these results, the segmented ROIs closely match the ground-truth versions of the ROIs.

Figure 9. Manually measured maximum diameter of ROI R3 from human case 20349.3.66.


For all ROIs, we obtained accuracy rates a(Rj) ≥ 0.949, suggesting that the EBUS segmentation method can reliably extract ROIs from EBUS video frames. In addition, for R3, the automatically calculated maximum ROI diameter of 1.13 cm agreed closely with the value of 1.17 cm measured manually by the physician during live bronchoscopy (Figure 9), a difference of 0.04 cm (about 3%). Thus, the automatic method could conceivably replace manual measurement. As Figure 8(d–f) shows, the differences between the ground truth and the automatic segmentation results arose near irregularities in the ROI contours. These irregularities may stem from the noisy nature of EBUS imaging, and their manual delineation in the ground truth may itself be subjective.
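This section does not specify how the maximum ROI diameter (the yellow dotted lines in Figure 8) is computed. The sketch below assumes one common definition: the largest Euclidean distance between any two points on the segmented contour (the maximum Feret diameter), scaled by the in-plane pixel spacing. The function name and the 0.1 mm/pixel spacing in the example are hypothetical. The brute-force pair search is O(n²), which is adequate for contours of a few hundred points. For larger contours, restricting the search to the convex hull would be the usual speedup.

```python
import math
from itertools import combinations
from typing import Iterable, Tuple

Point = Tuple[float, float]  # (x, y) contour vertex in pixel coordinates


def max_diameter(contour: Iterable[Point], mm_per_pixel: float) -> float:
    """Largest distance between any two contour points, converted to mm."""
    pts = list(contour)
    if len(pts) < 2:
        return 0.0
    longest_px = max(math.dist(p, q) for p, q in combinations(pts, 2))
    return longest_px * mm_per_pixel


# Hypothetical contour: a circle of radius 40 pixels sampled at 360 points,
# with an ASSUMED pixel spacing of 0.1 mm/pixel, so the diameter is 8 mm.
circle = [(40.0 * math.cos(math.radians(t)), 40.0 * math.sin(math.radians(t)))
          for t in range(360)]
print(round(max_diameter(circle, 0.1), 3))
```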

3.2 Reconstruction Results

We next integrated the EBUS segmentation method into the EBUS reconstruction process to generate a 3D ROI model of a cylindrical object inside the tissue-mimicking phantom. This object had a diameter of 8 mm, and its centerline was parallel to the observation channel of the phantom. We first retracted a radial EBUS probe along the observation channel at a constant speed of 2.4 mm/second and collected a sequence of 80 EBUS video frames. Next, we applied the proposed method to the EBUS sequence to generate a 3D ROI model. In particular, after we manually initialized an annular search region on the first EBUS frame and started the ROI reconstruction process, the segmentation method continuously extracted ROI contours from the EBUS frames while the reconstruction method assembled these contours into a 3D ROI (Figure 10). The reconstruction process ran at an analysis rate of 5 frames/second. The reconstructed ROI model offers a useful volumetric understanding of the cylindrical target. Also, over the segmentation results of these 80 frames, the average cross-sectional maximum diameter of 8.4 ± 0.2 mm (range: 7.9 to 8.8 mm) agreed well with the ground-truth diameter of 8 mm (Figure 11).
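The text does not describe how the contours are assembled. The following Python sketch shows only the simplest possible scheme and should not be read as the authors' reconstruction method. It assumes a pure pullback: frames are parallel and equally spaced along the channel, with no probe rotation or tilt. Under this assumption, the filled per-frame ROI masks can be stacked into a voxel volume whose axial spacing is the pullback speed divided by the frame rate. The 2.4 mm/second speed, the 80 frames, and the 8 mm target diameter come from the text. The 30 frames/second acquisition rate and the 0.1 mm/pixel in-plane spacing are assumptions, and all function names are hypothetical. A surface mesh for display could then be extracted from such a volume, for example with marching cubes.36

```python
import math
from typing import List

Frame = List[List[int]]  # filled binary ROI mask of one frame, indexed [row][col]


def stack_frames(frames: List[Frame]) -> List[Frame]:
    """Stack per-frame ROI masks into a volume indexed [z][row][col].

    ASSUMES parallel, equally spaced frames (pure pullback, no rotation/tilt).
    """
    if not frames:
        raise ValueError("need at least one frame")
    rows, cols = len(frames[0]), len(frames[0][0])
    for f in frames:
        if len(f) != rows or any(len(r) != cols for r in f):
            raise ValueError("all frames must have the same size")
    return [[list(r) for r in f] for f in frames]


def slice_spacing_mm(pullback_mm_per_s: float, frames_per_s: float) -> float:
    """Axial distance between consecutive frames for a constant-speed pullback."""
    return pullback_mm_per_s / frames_per_s


def roi_volume_mm3(volume: List[Frame], dx: float, dy: float, dz: float) -> float:
    """ROI volume = (number of foreground voxels) x (voxel size in mm^3)."""
    count = sum(v for f in volume for r in f for v in r)
    return count * dx * dy * dz


def disk(size: int, radius_px: float) -> Frame:
    """Filled disk centred in a size x size frame (stand-in for a segmented ROI)."""
    c = (size - 1) / 2
    return [[1 if (r - c) ** 2 + (q - c) ** 2 <= radius_px ** 2 else 0
             for q in range(size)] for r in range(size)]


# 2.4 mm/s pullback (from the text); 30 frames/s and 0.1 mm/pixel are ASSUMED.
dz = slice_spacing_mm(2.4, 30.0)            # about 0.08 mm between frames
vol = stack_frames([disk(101, 40.0)] * 80)  # 80 frames, disks 8 mm across
expected = math.pi * 4.0 ** 2 * (80 * dz)   # ideal cylinder: pi * r^2 * length
print(round(roi_volume_mm3(vol, 0.1, 0.1, dz), 1), round(expected, 1))
```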


Figure 10. EBUS analysis example for tissue-mimicking phantom. (a) Annular search region located on a 2D EBUS frame.

(b) Extracted live-wire contour from “straightened” annular search region. (c) Final result after segmentation and 3D

reconstruction. Left panel displays a segmented contour overlaid on the original EBUS frame. Right panel depicts a

complete 3D reconstructed model from a sequence of 80 EBUS frames.
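Figure 10(b) refers to extracting a live-wire contour from a "straightened" annular search region. The Python sketch below illustrates that idea under our own assumptions.

First, the annulus is resampled in polar coordinates (rows = radius, columns = angle) by nearest-neighbour lookup. Second, a boundary is traced as a minimum-cost left-to-right path using dynamic programming.

This dynamic program is a simplified stand-in for a graph-search live wire (compare Refs. 32 and 35), not the authors' algorithm. It assumes that the ROI boundary is bright and uses cost = 1 − intensity. It also does not force the path to close, meaning that the first and last columns need not lie at the same radius. A full implementation would need that constraint. All names, the synthetic ring image, and the annulus parameters are hypothetical.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]


def straighten_annulus(img: Grid, center: Tuple[float, float], r_in: float,
                       r_out: float, n_radii: int, n_angles: int) -> Grid:
    """Resample an annulus into a rectangle: rows = radius, columns = angle.

    Nearest-neighbour sampling; samples falling outside the image get 0.
    """
    cy, cx = center
    h, w = len(img), len(img[0])
    strip: Grid = []
    for i in range(n_radii):
        r = r_in + (r_out - r_in) * i / (n_radii - 1)
        row = []
        for j in range(n_angles):
            theta = 2.0 * math.pi * j / n_angles
            y = int(round(cy + r * math.sin(theta)))
            x = int(round(cx + r * math.cos(theta)))
            row.append(img[y][x] if 0 <= y < h and 0 <= x < w else 0.0)
        strip.append(row)
    return strip


def min_cost_path(cost: Grid) -> List[int]:
    """Minimum-cost left-to-right path; the row may change by at most 1 per column.

    Returns the row (radius index) selected in each column (angle).
    """
    rows, cols = len(cost), len(cost[0])
    acc = [[math.inf] * cols for _ in range(rows)]
    back = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        acc[r][0] = cost[r][0]
    for c in range(1, cols):
        for r in range(rows):
            for pr in (r - 1, r, r + 1):
                if 0 <= pr < rows and acc[pr][c - 1] + cost[r][c] < acc[r][c]:
                    acc[r][c] = acc[pr][c - 1] + cost[r][c]
                    back[r][c] = pr
    r = min(range(rows), key=lambda k: acc[k][cols - 1])
    path = [r]
    for c in range(cols - 1, 0, -1):  # follow back-pointers to column 0
        r = back[r][c]
        path.append(r)
    path.reverse()
    return path


# Synthetic frame: bright ring (value 1) of radius about 10 px around (20, 20).
img = [[1.0 if abs(math.hypot(y - 20, x - 20) - 10.0) <= 1.0 else 0.0
        for x in range(41)] for y in range(41)]
strip = straighten_annulus(img, (20.0, 20.0), 5.0, 15.0, n_radii=11, n_angles=36)
cost = [[1.0 - v for v in row] for row in strip]  # bright boundary -> low cost
path = min_cost_path(cost)
radii = [5.0 + k for k in path]  # row k corresponds to radius 5 + k pixels
print(radii)
```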

[Figure 11 plot: vertical axis, maximum ROI diameter (7.4 to 9); horizontal axis, Frame Index (0 to 75).]

Figure 11. Maximum ROI diameter measurements from the segmentation results of a sequence of 80 EBUS frames.


We also tested the reconstruction method using synthetic cross-sectional frames. In this test, we first generated an airway tree from a 3D MDCT scan for case 21405.95. Subsequently, we defined the reconstruction volume and chose a segment of the left main bronchus as the reconstruction target Rr (Figure 12). The reconstruction volume had dimensions 81 mm × 38 mm × 94 mm. Next, we simulated the retraction of a virtual EBUS device at 15 mm/second along the centerline of the bronchus segment, generating 188 cross-sectional frames that show the bronchus contours. Each frame measured 80×80 pixels at 0.5 mm/pixel. Finally, we reconstructed the bronchus segment Rr from these frames using the proposed method.
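One way to produce such synthetic frames is to resample the segmented airway volume on planes perpendicular to the path. The Python sketch below shows one simple way to do this. It builds in-plane axes from the path tangent and a helper vector, then applies nearest-neighbour sampling. This procedure is our assumption, not necessarily the authors' generator. The sketch reuses the 80×80-pixel frame size and the 0.5 mm/pixel spacing from the text, as well as the MDCT voxel spacing (Δx = Δy = 0.79 mm, Δz = 0.5 mm) from Figure 12. The function name, the straight-tube test object, and the [z][y][x] indexing convention are all hypothetical. In practice, the tangent at each view position could be estimated from neighbouring centerline points.

```python
import math
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]  # (x, y, z) in mm


def _unit(a: Vec) -> Vec:
    n = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    return (a[0] / n, a[1] / n, a[2] / n)


def _cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def cross_section(volume: Sequence[Sequence[Sequence[int]]], spacing: Vec,
                  point: Vec, tangent: Vec, size: int = 80,
                  pixel_mm: float = 0.5) -> List[List[int]]:
    """Sample a binary volume (indexed [z][y][x]) on the plane through `point`
    perpendicular to `tangent`, using nearest-neighbour lookup."""
    t = _unit(tangent)
    helper = (1.0, 0.0, 0.0) if abs(t[0]) < 0.9 else (0.0, 1.0, 0.0)
    u = _unit(_cross(t, helper))  # first in-plane axis
    v = _cross(t, u)              # second in-plane axis (unit, since t is perpendicular to u)
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    sx, sy, sz = spacing
    half = (size - 1) / 2.0
    frame: List[List[int]] = []
    for i in range(size):
        row = []
        for j in range(size):
            a, b = (j - half) * pixel_mm, (i - half) * pixel_mm
            px, py, pz = (point[k] + a * u[k] + b * v[k] for k in range(3))
            x, y, z = round(px / sx), round(py / sy), round(pz / sz)
            inside = 0 <= x < nx and 0 <= y < ny and 0 <= z < nz
            row.append(volume[z][y][x] if inside else 0)
        frame.append(row)
    return frame


# Hypothetical test object: a straight tube of radius 5 mm along z, stored on a
# 40 x 40 x 40 grid with the Figure 12 voxel spacing (0.79, 0.79, 0.5) mm.
sp = (0.79, 0.79, 0.5)
tube = [[[1 if math.hypot(x * sp[0] - 15.8, y * sp[1] - 15.8) <= 5.0 else 0
          for x in range(40)] for y in range(40)] for _ in range(40)]
frame = cross_section(tube, sp, point=(15.8, 15.8, 10.0), tangent=(0.0, 0.0, 1.0))
print(sum(map(sum, frame)), "pixels inside; ideal disk is about",
      round(math.pi * 10 ** 2), "pixels")
```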

We used (11) to evaluate the accuracy a(Rr) of our reconstruction result, where Br and Gr are the reconstruction result and the ground truth, respectively. Br(x, y, z) = 1 when voxel (x, y, z) lies inside or on the surface of the reconstructed target; otherwise, Br(x, y, z) = 0, and Gr is defined analogously for the ground-truth target. |Br| is the sum of the voxel values of Br, i.e., the number of voxels inside the reconstructed target. In this case, the accuracy a(Rr) was 1.0, meaning that the reconstruction matched the ground truth voxel for voxel. Overall, these experiments suggest that the proposed reconstruction method could give the physician useful 3D visualizations of diagnostic targets during bronchoscopy.


Figure 12. Synthetic 3D reconstruction example based on airway-tree data obtained from a 3D MDCT scan of patient 21405.95. The scan, acquired on a Siemens Sensation 40 MDCT scanner, has dimensions of 512×512×707 voxels with resolutions Δx = Δy = 0.79 mm and Δz = 0.5 mm. (a) The left main bronchus segment (red) of the airway tree (green) served as the 3D reconstruction target. (b) Given a precalculated path through the target segment, we generated a sequence of 80×80 synthetic cross-sectional frames, one for each bronchoscope view position along the path. (c) Reconstructed left main bronchus segment.

4. CONCLUSION

We have proposed a novel EBUS analysis approach consisting of automatic 3D segmentation and subsequent reconstruction of ROIs depicted in a 2D EBUS sequence. Results indicate that our EBUS segmentation algorithm generates well-defined 2D ROI contours, yielding diameter estimates that closely match those measured intraoperatively. In addition, our 3D reconstruction method produces ROI models that could give physicians a useful volumetric understanding of important diagnostic regions, which could reduce the subjectivity of interpreting conventional 2D EBUS scans. Moreover, by combining the proposed methods with ongoing preliminary work on registering EBUS imagery to MDCT chest data (Figure 13), we have devised an early prototype of an EBUS-based IGI system that could potentially assist live ROI localization during cancer-staging bronchoscopy.

Our current 3D EBUS-based ROI models, however, omit many important extraluminal structures, such as blood vessels, and this omission could compromise patient safety during tissue sampling. Also, we need to implement our EBUS segmentation and reconstruction methods on a graphics processing unit (GPU) to greatly reduce their execution time. Furthermore, we must validate the methods on both retrospectively collected and live bronchoscopy studies. We plan to address these issues in future work.


Figure 13. Preliminary fusion of EBUS and MDCT data (case 20349.3.67). The left panel displays the ground-truth ROI overlaid on the original EBUS frame; this ground-truth ROI was segmented from the associated patient MDCT scan. The right panel depicts the corresponding MDCT cross section registered to the EBUS frame, with the ground-truth ROI overlaid (green).

ACKNOWLEDGMENTS

This work was partially supported by NIH grant R01-CA151433 from the National Cancer Institute.

REFERENCES

[1] Kazerooni, E. A., “High resolution CT of the lungs,” Am. J. Roentgenology 177, 501–519 (Sept. 2001).

[2] Merritt, S. A., Gibbs, J. D., Yu, K. C., Patel, V., Rai, L., Cornish, D. C., Bascom, R., and Higgins, W. E., “Real-time image-guided bronchoscopy for peripheral lung lesions: A phantom study,” Chest 134, 1017–1026 (Nov. 2008).

[3] Higgins, W. E., Ramaswamy, K., Swift, R., McLennan, G., and Hoffman, E. A., “Virtual bronchoscopy for 3D pulmonary image assessment: State of the art and future needs,” Radiographics 18, 761–778 (May-June 1998).

[4] Helferty, J. P., Sherbondy, A. J., Kiraly, A. P., and Higgins, W. E., “Computer-based system for the virtual-endoscopic guidance of bronchoscopy,” Comput. Vis. Image Underst. 108, 171–187 (Oct.-Nov. 2007).

[5] Higgins, W. E., Helferty, J. P., Lu, K., Merritt, S. A., Rai, L., and Yu, K. C., “3D CT-video fusion for image-guided bronchoscopy,” Comput. Med. Imaging Graph. 32, 159–173 (Apr. 2008).

[6] McAdams, H. P., Goodman, P. C., and Kussin, P., “Virtual bronchoscopy for directing transbronchial needle aspiration of hilar and mediastinal lymph nodes: a pilot study,” Am. J. Roentgenology 170, 1361–1364 (May 1998).

[7] Gildea, T. R., Mazzone, P. J., Karnak, D., Meziane, M., and Mehta, A. C., “Electromagnetic navigation diagnostic bronchoscopy: a prospective study,” Am. J. Resp. Crit. Care Med. 174, 982–989 (Nov. 2006).

[8] Deguchi, D., Feuerstein, M., Kitasaka, T., Suenaga, Y., Ide, I., Murase, H., Imaizumi, K., Hasegawa, Y., and Mori, K., “Real-time marker-free patient registration for electromagnetic navigated bronchoscopy: a phantom study,” Int. J. Computer-Assisted Radiol. Surgery 7, 359–369 (Jun. 2012).

[9] Asano, F., “Virtual bronchoscopic navigation,” Clin. Chest Med. 31, 75–85 (Mar. 2010).

[10] Merritt, S. A., Rai, L., and Higgins, W. E., “Real-time CT-video registration for continuous endoscopic guidance,” in [SPIE Medical Imaging 2006: Physiology, Function, and Structure from Medical Images], Manduca, A. and Amini, A. A., eds., 6143, 370–384 (2006).

[11] Schwarz, Y., Greif, J., Becker, H. D., Ernst, A., and Mehta, A., “Real-time electromagnetic navigation bronchoscopy to peripheral lung lesions using overlaid CT images: the first human study,” Chest 129, 988–994 (Apr. 2006).

[12] Summers, R. M., Feng, D. H., Holland, S. M., Sneller, M. C., and Shelhamer, J. H., “Virtual bronchoscopy: segmentation method for real-time display,” Radiology 200 (Sept. 1996).

[13] Kiraly, A. P., Helferty, J. P., Hoffman, E. A., McLennan, G., and Higgins, W. E., “3D path planning for virtual bronchoscopy,” IEEE Trans. Medical Imaging 23, 1365–1379 (Nov. 2004).

[14] Graham, M. W., Gibbs, J. D., Cornish, D. C., and Higgins, W. E., “Robust 3D Airway-Tree Segmentation for Image-Guided Peripheral Bronchoscopy,” IEEE Trans. Medical Imaging 29, 982–997 (Apr. 2010).

[15] Eberhardt, R., Anantham, D., Ernst, A., Feller-Kopman, D., and Herth, F., “Multimodality bronchoscopic diagnosis of peripheral lung lesions: A randomized controlled trial,” Am. J. Respir. Crit. Care Med. 176, 36–41 (Jul. 2007).

[16] Asano, F., Matsuno, Y., Tsuzuku, A., Anzai, M., Shinagawa, N., Moriya, H., et al., “Diagnosis of peripheral pulmonary lesions using a bronchoscope insertion guidance system combined with endobronchial ultrasonography with a guide sheath,” Lung Cancer 60, 366–373 (Jun. 2008).


[17] Herth, F. J., Eberhardt, R., Becker, H. D., and Ernst, A., “Endobronchial ultrasound-guided transbronchial lung biopsy in fluoroscopically invisible solitary pulmonary nodules: a prospective trial,” Chest 129, 147–150 (Jan. 2006).

[18] Yasufuku, K., Nakajima, T., Chiyo, M., Sekine, Y., Shibuya, K., and Fujisawa, T., “Endobronchial ultrasonography: current status and future directions,” J. Thoracic Oncol. 2, 970–979 (Oct. 2007).

[19] Ernst, A. and Herth, F., eds., [Endobronchial Ultrasound: An Atlas and Practical Guide], Springer Verlag, New York, NY (2009).

[20] Miyazu, Y., Miyazawa, T., Kurimoto, N., Iwamoto, Y., Kanoh, K., and Kohno, N., “Endobronchial ultrasonography in the assessment of centrally located early-stage lung cancer before photodynamic therapy,” Am. J. Respir. Crit. Care Med. 165, 832–837 (Mar. 2002).

[21] Kurimoto, N., Miyazawa, T., Okimasa, S., Maeda, A., Oiwa, H., Miyazu, Y., and Murayama, M., “Endobronchial ultrasonography using a guide sheath increases the ability to diagnose peripheral pulmonary lesions endoscopically,” Chest 126, 959–965 (Sept. 2004).

[22] Yoshikawa, M., Sukoh, N., Yamazaki, K., Kanazawa, K., Fukumoto, S., Harada, M., Kikuchi, E., Munakata, M., Nishimura, M., and Isobe, H., “Diagnostic value of endobronchial ultrasonography with a guide sheath for peripheral pulmonary lesions without x-ray fluoroscopy,” Chest 131, 1788–1793 (Sept. 2007).

[23] Yasufuku, K., Nakajima, T., Motoori, K., Sekine, Y., Shibuya, K., Hiroshima, K., and Fujisawa, T., “Comparison of endobronchial ultrasound, positron emission tomography, and CT for lymph node staging of lung cancer,” Chest 130, 710–718 (Sept. 2006).

[24] Abbott, J. and Thurstone, F., “Acoustic speckle: theory and experimental analysis,” Ultrasonic Imaging 1, 303–324 (Oct. 1979).

[25] Solberg, O. V., Lindseth, F., Torp, H., Blake, R. E., and Nagelhus Hernes, T. A., “Freehand 3D ultrasound reconstruction algorithms: a review,” Ultrasound Med. Biol. 33, 991–1009 (Jul. 2007).

[26] Rohling, R. N., [3D Freehand Ultrasound: Reconstruction and Spatial Compounding], PhD thesis, Engineering Department, University of Cambridge, U.K. (1998).

[27] Rohling, R., Gee, A., and Berman, L., “A comparison of freehand three-dimensional ultrasound reconstruction techniques,” Med. Image Anal. 3, 339–359 (Dec. 1999).

[28] Molin, S., Nesje, L., Gilja, O., Hausken, T., Martens, D., and Odegaard, S., “3D-endosonography in gastroenterology: methodology and clinical applications,” Eur. J. Ultrasound 10, 171 (Nov. 1999).

[29] Inglis, S., Christie, D., and Plevris, J., “A novel three-dimensional endoscopic ultrasound technique for the freehand examination of the oesophagus,” Ultrasound Med. Biol. 37, 1779–1790 (Nov. 2011).

[30] Andreassen, A., Ellingsen, I., Nesje, L., Gravdal, K., and Ødegaard, S., “3-D endobronchial ultrasonography: a post mortem study,” Ultrasound Med. Biol. 31, 473–476 (Apr. 2005).

[31] Sonka, M., Zhang, X., Siebes, M., Bissing, M., DeJong, S., Collins, S., and McKay, C., “Segmentation of intravascular ultrasound images: A knowledge-based approach,” IEEE Trans. Medical Imaging 14, 719–732 (Dec. 1995).

[32] Lu, K. and Higgins, W. E., “Interactive segmentation based on the live wire for 3D CT chest image analysis,” Int. J. Computer Assisted Radiol. Surgery 2, 151–167 (Dec. 2007).

[33] Gonzalez, R. C. and Woods, R. E., [Digital Image Processing], Addison Wesley, Reading, MA, 2nd ed. (2002).

[34] Canny, J., “A computational approach to edge detection,” IEEE Trans. Pattern Anal. Mach. Intell. 8(6), 679–698 (Nov. 1986).

[35] Dijkstra, E., “A note on two problems in connexion with graphs,” Numerische Mathematik 1(1), 269–271 (1959).

[36] Lorensen, W. and Cline, H., “Marching cubes: A high resolution 3D surface construction algorithm,” in [ACM SIGGRAPH Computer Graphics], 21(4), 163–169, ACM (1987).

[37] Rai, L., Helferty, J. P., and Higgins, W. E., “Combined video tracking and image-video registration for continuous bronchoscopic guidance,” Int. J. Computer Assisted Radiol. Surgery 3, 315–329 (Sept. 2008).

[38] Breslav, M., [3D Reconstruction of 2D Endobronchial Ultrasound], Master’s thesis, The Pennsylvania State University (2010).

[39] Bude, R. and Adler, R., “An easily made, low-cost, tissue-like ultrasound phantom material,” J. Clin. Ultrasound 23, 271–273 (May 1995).
