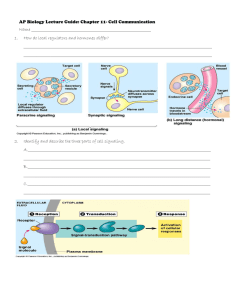

Quantitative Analysis of Signaling Networks
