
International Journal of Engineering Trends and Technology (IJETT) – Volume 18 Number 5 – Dec 2014

A Secure and Dynamic Group Auditing Over Cloud

D.Chandrika1, N.Satyanarayana2, A. Phani Sridhar3

1Final M.Tech Student, 2Assistant Professor, 3Head of the Department

1,2,3Dept. of CSE, Sanketika Vidya Parishad Engg. College, PothinaMallayyaPalem, Visakhapatnam, AP, India

Abstract: Data owners outsource their data to cloud databases operated by cloud service providers, where many users access it. This raises several security issues for the outsourced data. We therefore introduce a secure method that protects data in the cloud and supports frequent verification across multiple clouds using signature verification and symmetric cryptographic techniques. The method provides strong security for outsourced data and secure authentication for all data owners in the cloud service.

I. INTRODUCTION

In storage network systems, ensuring the confidentiality and security of stored data is critical. The growing number of cloud service users increases the risk of corruption of the data stored in the cloud.

The main security requirements include authentication and authorization, availability, confidentiality and integrity, key sharing and key management, and auditing and intrusion detection [1].

Data should be secured during its entire life cycle. Authentication and authorization are the most basic security services that any storage system should support. Authentication is the process of corroborating the identity of an entity (called entity authentication or identification) or the source of a message (called message authentication). The storage servers should verify the identity of producers, consumers, and administrators before granting them appropriate access (e.g., read or write) to the data. The act of granting appropriate privileges to users is called authorization.

Authentication can be mutual; that is, the producers and consumers of the data may also want to authenticate the storage servers to establish a reciprocal trust relationship. Most businesses require continuous data availability. System failures and denial-of-service (DoS) attacks are very difficult to prevent. A system that embeds strong cryptographic techniques but does not ensure availability, backup, and recovery is of little use. Typically, systems are made fault tolerant by replicating data or entities that are considered central points of failure. However, replication incurs a high cost for maintaining consistency between replicas.

Storage systems must maintain audit logs of important activities. Audit logs are important for system recovery, intrusion detection, and computer forensics.

ISSN: 2231-5381

Extensive research has been done in the field of intrusion detection [8]. Intrusion detection systems (IDS) use various logs (e.g., network logs and data access logs) and network streams (e.g., RPCs, network flows) to detect and report attacks. Deploying IDS at various levels and correlating these events is important [10].
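The text does not say how the audit logs themselves are protected. One common approach, shown here as an assumption rather than the authors' design, is a hash chain: each entry stores the SHA-256 of the previous entry's hash concatenated with its own serialized event, so editing any earlier entry breaks every later link. The names `append` and `verify_log` are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _link(prev: str, event: dict) -> str:
    # Canonical JSON (sorted keys) so the same event always hashes the same way.
    body = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev + body).encode()).hexdigest()

def append(log: list, event: dict) -> None:
    """Append an event, chaining it to the hash of the previous entry."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev, "hash": _link(prev, event)})

def verify_log(log: list) -> bool:
    """Recompute every link; any edited, removed, or reordered entry breaks the chain."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _link(prev, entry["event"]):
            return False
        prev = entry["hash"]
    return True
```

A chain alone does not stop an attacker who rewrites the entire log and recomputes every hash; in practice the latest hash would also be stored somewhere the attacker cannot modify, or protected with a MAC.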

As data is produced, transferred, and stored at one or more remote storage servers, it becomes vulnerable to unauthorized disclosure, unauthorized modification, and replay attacks. An attacker can modify the data while it travels through the network or while it is stored on disks or tapes. Further, a malicious server can replace current files with valid old versions [4,6]. Therefore, securing data both in transit and at rest on physical media is crucial. Confidentiality against unauthorized users can be achieved using encryption, while data integrity (which addresses unauthorized alteration of data) can be achieved using digital signatures and message authentication codes. Replay attacks, in which an adversary replays old sessions, can be prevented by ensuring the freshness of data, that is, by making each instance of the data unique.
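The integrity and freshness requirements above can be illustrated with Python's standard `hmac` module. In this sketch (an assumption, not a protocol from the text), the owner computes a MAC over each file together with a version number it increments on every write, and remembers the latest version. A modified record fails the MAC check, while a correctly MACed but older record (the "replace current files with valid old versions" attack) is flagged as stale. Confidentiality would additionally require encrypting `data`, which is omitted here. `seal` and `Owner` are hypothetical names.

```python
import hashlib
import hmac

def seal(key: bytes, file_id: str, version: int, data: bytes) -> bytes:
    """MAC over (file_id, version, data); the length prefix keeps the encoding unambiguous."""
    fid = file_id.encode()
    msg = len(fid).to_bytes(2, "big") + fid + version.to_bytes(8, "big") + data
    return hmac.new(key, msg, hashlib.sha256).digest()

class Owner:
    """Hypothetical data owner that remembers the latest version it wrote per file."""

    def __init__(self, key: bytes):
        self.key = key
        self.latest = {}  # file_id -> latest version number

    def write(self, file_id: str, data: bytes):
        version = self.latest.get(file_id, 0) + 1  # each instance is made unique
        self.latest[file_id] = version
        return file_id, version, data, seal(self.key, file_id, version, data)

    def check(self, record) -> str:
        file_id, version, data, tag = record
        if not hmac.compare_digest(tag, seal(self.key, file_id, version, data)):
            return "modified"  # integrity failure: data or metadata altered
        if version != self.latest.get(file_id):
            return "stale"     # valid MAC, but an old version was replayed
        return "ok"
```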

II. RELATED WORK

Protecting data in the cloud involves several challenges, such as:

• The data is widely distributed across the network, which increases management complexity and introduces more vulnerabilities.
• In a decentralized system, the storage system is shared by users in different security domains with different policies.
• Long retention periods give attackers a larger window of opportunity and raise compatibility issues during data migration, including moving to new encryption algorithms.

We now briefly review some common data storage protection mechanisms. Access control typically includes both authentication and authorization. Centralized and decentralized access management are the two models for distributed storage systems.

Both models require entities to be validated against predefined policies before accessing sensitive data.

http://www.ijettjournal.org

Page 204


These access privileges need to be periodically reviewed or re-granted. Encryption is the standard method for providing confidentiality protection. It relies on the encryption keys being carefully managed, including processes for flexible key sharing, refreshing, and revocation. In distributed environments, secret sharing mechanisms can also be used to split sensitive data into multiple component shares. Storage integrity violations can be either accidental (from hardware/software malfunctions) or the result of malicious attacks [3,9]. Accidental modification of data is typically guarded against by mirroring or by the use of basic parity or erasure codes.
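As a minimal sketch of splitting sensitive data into component shares, the snippet below uses n-of-n XOR splitting, the simplest secret-sharing construction: all n shares are needed to reconstruct, and any n−1 of them are independent random bytes that reveal nothing about the secret. This is an illustrative choice, not a mechanism the text prescribes; threshold schemes such as Shamir's allow any k of n shares to reconstruct. `split` and `combine` are hypothetical names.

```python
import secrets
from functools import reduce

def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))

def split(secret: bytes, n: int) -> list:
    """n-of-n XOR splitting: n-1 random pads plus one share that XORs back to the secret."""
    if n < 1:
        raise ValueError("need at least one share")
    pads = [secrets.token_bytes(len(secret)) for _ in range(n - 1)]
    return pads + [reduce(xor_bytes, pads, secret)]

def combine(shares: list) -> bytes:
    """XOR all shares together to recover the secret."""
    return reduce(xor_bytes, shares)
```

For example, `combine(split(b"data-encryption-key", 3))` returns `b"data-encryption-key"`, and each share could be stored with a different provider.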

The detection of unauthorized data modification requires the use of message authentication codes (MACs) or digital signature schemes. The latter provide the stronger notion of non-repudiation, which prevents an entity from successfully denying an unauthorized modification of data. Data availability mechanisms include replication and redundancy. Recovery mechanisms may also be required to repair damaged data, for example re-encrypting data when a key is lost. Intrusion detection or prevention mechanisms detect or prevent malicious activities that could result in theft or sabotage of data. Audit logs can also be used to assist recovery and to provide evidence of security breaches, as well as being important for compliance.

General threats to confidentiality include sniffing

storage traffic, snooping on buffer caches and de-allocated

memory. File system profiling is an attack that uses access

type, timestamps of last modification, file names, and other

file system metadata to gain insight about the storage

system operation. Storage and backup media may also be

stolen in order to access data.

General threats to integrity include storage jamming (a malicious but surreptitious modification of stored data) to modify or replace the original data, metadata modification to disrupt a storage system, subversion attacks to gain unauthorized OS-level access in order to modify critical system data, and man-in-the-middle attacks to change data contents in transit.

General threats to availability include (distributed) denial-of-service, disk fragmentation, network disruption, hardware failure, and file deletion. Centralized data location management or indexing servers can be points of failure for denial-of-service attacks, which can be launched using malicious code. Long-term data archiving systems present additional challenges, such as long-term key management and backward compatibility, which threaten availability if they are not handled carefully [4,2].

General threats to authentication include wrapping attacks on SOAP messages to access unauthorized data, federated authentication through browsers, which can open a door to stealing authentication tokens, and replay attacks that deceive the system into processing unauthorized operations. Cloud-based storage and virtualization pose further threats. For example, outsourcing leads to data owners losing physical control of their data, raising issues of auditing, trust, obtaining support for investigations, accountability, and compatibility of security systems.

Multi-tenant virtualization environments can result in

applications losing their security context, enabling an

adversary to attack other virtual machine instances hosted

on the same physical server.

III. PROPOSED SYSTEM

In the proposed work, we design a protocol with three roles: clients, the cloud service provider, and the verifier. Clients store their data with the cloud service provider, and multiple data owners may be present in the cloud network. Each client stores its data in encrypted form at the cloud service provider. The data is encrypted with the random alphabetical encryption process shown below:

Random Alphabetical Encryption and Decryption Algorithm:

Encryption: P = plaintext

Key with variable length (128, 192, or 256 bits)

• Represented as a matrix (array) of bytes with 4 rows and Nk columns, Nk = key length / 32
• Key of 128 bits = 16 bytes, Nk = 4
• Key of 192 bits = 24 bytes, Nk = 6
• Key of 256 bits = 32 bytes, Nk = 8

Block of length 128 bits = 16 bytes

• Represented as a matrix (array) of bytes with 4 rows and Nb columns, Nb = block length / 32
• Block of 128 bits = 16 bytes, Nb = 4

Number of rounds: Nr = 10, 12, or 14 for Nk = 4, 6, or 8 (Nr = Nk + 6)

State = X (one 128-bit block of P)

1. AddRoundKey(State, Key_0)
for r = 1 to (Nr - 1)
    a. SubBytes(State, S-box)
    b. ShiftRows(State)
    c. MixColumns(State)
    d. AddRoundKey(State, Key_r)
end for
2. SubBytes(State, S-box)
3. ShiftRows(State)
4. AddRoundKey(State, Key_Nr)

Y = State (the corresponding ciphertext block)
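The round structure listed above (SubBytes, ShiftRows, MixColumns, AddRoundKey, with Nk = 4, 6, or 8 and Nr = Nk + 6) is that of the AES block cipher specified in FIPS-197. The following is a minimal educational sketch of single-block encryption under that reading. The function names are hypothetical, the code is not constant-time, and it implements no mode of operation, so a real deployment should rely on a vetted cryptographic library. The S-box is computed from its definition (GF(2^8) inverse followed by the affine transform) rather than stored as a table.

```python
def xtime(a):
    """Multiply a byte by x (i.e. 2) in GF(2^8) modulo x^8 + x^4 + x^3 + x + 1."""
    a <<= 1
    return a ^ 0x11B if a & 0x100 else a

def gf_mul(a, b):
    """Multiply two bytes in GF(2^8) by shift-and-add."""
    p = 0
    while b:
        if b & 1:
            p ^= a
        a = xtime(a)
        b >>= 1
    return p

def gf_inv(a):
    """Multiplicative inverse as a^254 (maps 0 to 0, as the S-box requires)."""
    result, e = 1, 254
    while e:
        if e & 1:
            result = gf_mul(result, a)
        a = gf_mul(a, a)
        e >>= 1
    return result

def rotl8(x, k):
    return ((x << k) | (x >> (8 - k))) & 0xFF

# S-box: affine transform applied to the GF(2^8) inverse of each byte.
SBOX = [b ^ rotl8(b, 1) ^ rotl8(b, 2) ^ rotl8(b, 3) ^ rotl8(b, 4) ^ 0x63
        for b in (gf_inv(x) for x in range(256))]

def expand_key(key):
    """Key schedule: 4 * (Nr + 1) four-byte words; Nk = len(key) / 4."""
    nk = len(key) // 4
    nr = nk + 6
    w = [list(key[4 * i:4 * i + 4]) for i in range(nk)]
    rcon = 1
    for i in range(nk, 4 * (nr + 1)):
        temp = list(w[i - 1])
        if i % nk == 0:
            temp = [SBOX[b] for b in temp[1:] + temp[:1]]  # RotWord, then SubWord
            temp[0] ^= rcon
            rcon = xtime(rcon)
        elif nk > 6 and i % nk == 4:
            temp = [SBOX[b] for b in temp]                 # extra SubWord for 256-bit keys
        w.append([a ^ b for a, b in zip(w[i - nk], temp)])
    return w

def round_key(w, r):
    """Key_r as 16 bytes in column-major order (matches the state layout)."""
    return [b for word in w[4 * r:4 * r + 4] for b in word]

def shift_rows(s):
    """State byte (row r, column c) sits at index r + 4c; row r rotates left by r."""
    return [s[r + 4 * ((c + r) % 4)] for c in range(4) for r in range(4)]

def mix_columns(s):
    """Multiply each column by the fixed matrix [[2,3,1,1],[1,2,3,1],[1,1,2,3],[3,1,1,2]]."""
    out = []
    for c in range(4):
        a0, a1, a2, a3 = s[4 * c:4 * c + 4]
        out += [xtime(a0) ^ xtime(a1) ^ a1 ^ a2 ^ a3,
                a0 ^ xtime(a1) ^ xtime(a2) ^ a2 ^ a3,
                a0 ^ a1 ^ xtime(a2) ^ xtime(a3) ^ a3,
                xtime(a0) ^ a0 ^ a1 ^ a2 ^ xtime(a3)]
    return out

def encrypt_block(block: bytes, key: bytes) -> bytes:
    if len(key) not in (16, 24, 32) or len(block) != 16:
        raise ValueError("key must be 16, 24 or 32 bytes and block must be 16 bytes")
    nr = len(key) // 4 + 6                                         # Nr = 10, 12 or 14
    w = expand_key(key)
    state = [s ^ k for s, k in zip(block, round_key(w, 0))]        # step 1
    for r in range(1, nr):
        state = mix_columns(shift_rows([SBOX[b] for b in state]))  # steps a-c
        state = [s ^ k for s, k in zip(state, round_key(w, r))]    # step d
    state = shift_rows([SBOX[b] for b in state])                   # steps 2-3
    return bytes(s ^ k for s, k in zip(state, round_key(w, nr)))   # step 4
```

With the FIPS-197 Appendix C.1 inputs (key `000102030405060708090a0b0c0d0e0f`, plaintext `00112233445566778899aabbccddeeff`), `encrypt_block` should return the ciphertext `69c4e0d86a7b0430d8cdb78070b4c55a`.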


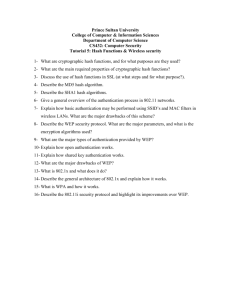

5. Collect signatures from receivers

6. Monitor files

Auditor

User

7. Send Status

4. Receive signatures

from multiple

owners file

7. Send Status

8. Decrypted file

2. Send meta details

Data Owner 1

3. Send encrypted File

Cloud service

Data owner 2

………..

1.Encrypt file, generate signature

Signature Generation Algorithm:

KeyGeneration(Ks) → (pt, st, sh). The key generation algorithm takes no input other than the implicit security parameter Ks. It chooses two random numbers and uses them to generate two prime numbers from P. It then computes a primitive root of each prime; these two primitive roots, st and sh, belong to the multiplicative group modulo the corresponding prime and serve as the tag key and the hash key, respectively. It outputs the public tag key $p_t = g_2^{s_t} \in G_2$, the secret tag key st, and the secret hash key sh. A hash of sh is then computed with a simple hash function, that is, the second random value is given as input to the hash function, as follows. Suppose each input is an integer I in the range 0 to N−1 and the output must be an integer h in the range 0 to n−1, where N is much larger than n. Then the hash function could be h = I mod n (the remainder of I divided by n), or h = ⌊(I × n) / N⌋ (the value I scaled down by n/N and truncated to an integer), among many other formulas.
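The key-generation description above is informal, so the following is only one hypothetical reading of it, at toy sizes: pick two random primes, take a primitive root of each as st and sh, and show the two simple hash functions h = I mod n and h = ⌊I·n/N⌋. It relies on the standard test that g generates the multiplicative group mod p exactly when g^((p−1)/q) ≠ 1 (mod p) for every prime factor q of p−1. It omits the pairing group G2 and the public key pt entirely, uses trial division (adequate only for small numbers), and the simple hashes are illustrative rather than cryptographic. All function names are hypothetical.

```python
import random

def is_prime(n):
    """Trial division; adequate only for the toy sizes used here."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True

def random_prime(lo, hi, rng):
    """Draw random integers in [lo, hi) until one is prime."""
    while True:
        c = rng.randrange(lo, hi)
        if is_prime(c):
            return c

def prime_factors(n):
    """Set of distinct prime factors of n."""
    factors, d = set(), 2
    while d * d <= n:
        while n % d == 0:
            factors.add(d)
            n //= d
        d += 1
    if n > 1:
        factors.add(n)
    return factors

def primitive_root(p):
    """Smallest g with g^((p-1)/q) != 1 (mod p) for every prime q dividing p-1."""
    qs = prime_factors(p - 1)
    for g in range(2, p):
        if all(pow(g, (p - 1) // q, p) != 1 for q in qs):
            return g
    raise ValueError("p must be an odd prime")

def toy_key_generation(rng=None, lo=1_000, hi=5_000):
    """Hypothetical reading of KeyGeneration: two random primes, one primitive root each."""
    if rng is None:
        rng = random.Random()
    p1, p2 = random_prime(lo, hi, rng), random_prime(lo, hi, rng)
    s_t, s_h = primitive_root(p1), primitive_root(p2)
    return (p1, s_t), (p2, s_h)

def hash_mod(i, n):
    """h = I mod n."""
    return i % n

def hash_scale(i, n, big_n):
    """h = floor(I * n / N): I scaled down by n/N and truncated."""
    return (i * n) // big_n
```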

Signature Generation(M, st, sh) → T. The signature generation algorithm takes each data component M, the secret tag key st, and the secret hash key sh as inputs. It first chooses s random values $x_1, x_2, \ldots, x_s \in \mathbb{Z}_p$ and computes $u_j = g_1^{x_j} \in G_1$ for all $j \in [1, s]$. For each data block $m_i$ ($i \in [1, n]$), it computes a data tag as

$$t_i = \Big( h(s_h, W_i) \cdot \prod_{j=1}^{s} u_j^{m_{ij}} \Big)^{s_t},$$

where $W_i = FID \| i$ (the "||" denotes concatenation), in which FID is the identifier of the data and i is the block number of $m_i$. It outputs the set of data tags $T = \{t_i\}_{i \in [1, n]}$.

Chall(Minfo) → C. The algorithm takes the brief information of the data, Minfo, as input. It selects some data blocks to construct the challenge set Q and generates a random number $v_i$ for each chosen data block $m_i$ ($i \in Q$). It computes the challenge stamp $R = (p_t)^r$ by randomly choosing a number $r \in \mathbb{Z}_p^*$. It outputs the challenge as

$$C = \big( \{i, v_i\}_{i \in Q}, R \big).$$

Proof(M, T, C) → P. The proving algorithm takes as inputs the data M, the tags T, and the received challenge C. The proof consists of the tag proof TP and the data proof DP. The tag proof is generated as

$$T_P = \prod_{i \in Q} t_i^{v_i}.$$

To generate the data proof, it first computes the sector linear combination $MP_j$ of all the challenged data blocks for each $j \in [1, s]$ as

$$MP_j = \sum_{i \in Q} v_i \cdot m_{ij}.$$

Then it generates the data proof DP as

$$D_P = \prod_{j=1}^{s} e(u_j, R)^{MP_j}.$$

It outputs the proof $P = (T_P, D_P)$.

Verify(C, P, sh, pt, Minfo) → 0/1. The verification algorithm takes as inputs the challenge C, the proof P, the secret hash key sh, the public tag key pt, and the abstract information of the data component. It first computes the identifier hash values $h(s_h, W_i)$ of all the challenged data blocks using SHA-256, and then computes the challenge hash $H_{chal}$ as

$$H_{chal} = \prod_{i \in Q} h(s_h, W_i)^{r v_i}.$$

It then verifies the proof from the server with the following verification equation:

$$D_P \cdot e(H_{chal}, p_t) = e(T_P, g_2^{r}).$$

If the equation holds, it outputs 1; otherwise it outputs 0.
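Bilinear pairings e(·,·) are not available in Python's standard library, so the sketch below swaps them for a private-key linear analogue over a prime field, in the style of the privately verifiable scheme of Shacham and Waters. It mirrors the Chall/Proof/Verify data flow above (challenge set Q with coefficients $v_i$, tag proof TP, sector combinations $MP_j$, challenge hash $H_{chal}$), but tags are $t_i = h(s_h, W_i) + \sum_j \alpha_j m_{ij} \bmod P$ with secret $\alpha_j$, and verification needs those secrets, unlike the public pairing check in the text. The modulus, HMAC-SHA256 as $h$, and all names are assumptions.

```python
import hashlib
import hmac
import random

P = 2**127 - 1  # prime modulus for the toy field (assumption)

def h(s_h: bytes, fid: bytes, i: int) -> int:
    """Stand-in for h(s_h, W_i) with W_i = FID || i: HMAC-SHA256 reduced mod P."""
    w_i = fid + i.to_bytes(8, "big")
    return int.from_bytes(hmac.new(s_h, w_i, hashlib.sha256).digest(), "big") % P

def tag_gen(blocks, fid, s_h, alphas):
    """t_i = h(s_h, W_i) + sum_j alpha_j * m_ij (mod P); blocks[i-1][j] holds m_ij."""
    return [(h(s_h, fid, i) + sum(a * m for a, m in zip(alphas, blk))) % P
            for i, blk in enumerate(blocks, start=1)]

def chall(n, c, rng):
    """Challenge set Q of c block indices, each with a random coefficient v_i."""
    return {i: rng.randrange(1, P) for i in rng.sample(range(1, n + 1), c)}

def prove(blocks, tags, chal):
    """Server side: TP = sum v_i * t_i and MP_j = sum v_i * m_ij over i in Q."""
    s = len(blocks[0])
    tp = sum(v * tags[i - 1] for i, v in chal.items()) % P
    mp = [sum(v * blocks[i - 1][j] for i, v in chal.items()) % P for j in range(s)]
    return tp, mp

def verify(chal, proof, fid, s_h, alphas) -> int:
    """Auditor side: 1 if TP == H_chal + sum_j alpha_j * MP_j (mod P), else 0."""
    tp, mp = proof
    h_chal = sum(v * h(s_h, fid, i) for i, v in chal.items())
    return int(tp == (h_chal + sum(a * m for a, m in zip(alphas, mp))) % P)
```

The check works because $\sum_i v_i t_i = \sum_i v_i h(s_h, W_i) + \sum_j \alpha_j MP_j$; altering any challenged sector shifts $MP_j$ without a matching change in TP, so verification returns 0.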

IV. CONCLUSION

In this paper, we proposed an efficient, secure, and dynamic verification protocol. It protects data privacy against the auditor by using cryptographic methods rather than masking techniques. Our multi-cloud batch verification protocol does not require any additional organizer, and it also supports batch auditing for multiple owners. Our auditing scheme reduces the communication cost and the computation complexity of the auditor by moving the auditing computations from the auditor to the server, which greatly improves auditing efficiency and makes the scheme applicable to large-scale cloud storage systems.

REFERENCES

[1] P. Mell and T. Grance, “The NIST Definition of Cloud Computing,” technical report, Nat’l Inst. of Standards and Technology, 2009.

[2] M. Armbrust, A. Fox, R. Griffith, A.D. Joseph, R.H. Katz, A. Konwinski, G. Lee, D.A. Patterson, A. Rabkin, I. Stoica, and M. Zaharia, “A View of Cloud Computing,” Comm. ACM, vol. 53, no. 4, pp. 50-58, 2010.

[3] T. Velte, A. Velte, and R. Elsenpeter, Cloud Computing: A Practical Approach, first ed., ch. 7. McGraw-Hill, 2010.

[4] J. Li, M.N. Krohn, D. Mazières, and D. Shasha, “Secure Untrusted Data Repository (SUNDR),” Proc. Sixth Conf. Symp. Operating Systems Design Implementation, pp. 121-136, 2004.

[5] G.R. Goodson, J.J. Wylie, G.R. Ganger, and M.K. Reiter, “Efficient Byzantine-Tolerant Erasure-Coded Storage,” Proc. Int’l Conf. Dependable Systems and Networks, pp. 135-144, 2004.

[6] V. Kher and Y. Kim, “Securing Distributed Storage: Challenges, Techniques, and Systems,” Proc. ACM Workshop Storage Security and Survivability (StorageSS), V. Atluri, P. Samarati, W. Yurcik, L. Brumbaugh, and Y. Zhou, eds., pp. 9-25, 2005.

[7] L.N. Bairavasundaram, G.R. Goodson, S. Pasupathy, and J. Schindler, “An Analysis of Latent Sector Errors in Disk Drives,” Proc. ACM SIGMETRICS Int’l Conf. Measurement and Modeling of Computer Systems, L. Golubchik, M.H. Ammar, and M. Harchol-Balter, eds., pp. 289-300, 2007.

[8] B. Schroeder and G.A. Gibson, “Disk Failures in the Real World: What Does an MTTF of 1,000,000 Hours Mean to You?” Proc. USENIX Conf. File and Storage Technologies, pp. 1-16, 2007.

[9] M. Lillibridge, S. Elnikety, A. Birrell, M. Burrows, and M. Isard, “A Cooperative Internet Backup Scheme,” Proc. USENIX Ann. Technical Conf., pp. 29-41, 2003.

[10] Y. Deswarte, J. Quisquater, and A. Saidane, “Remote Integrity Checking,” Proc. Sixth Working Conf. Integrity and Internal Control in Information Systems (IICIS), Nov. 2004.

[11] M. Naor and G.N. Rothblum, “The Complexity of Online Memory Checking,” J. ACM, vol. 56, no. 1, article 2, 2009.

[12] A. Juels and B.S. Kaliski Jr., “PORs: Proofs of Retrievability for Large Files,” Proc. ACM Conf. Computer and Comm. Security, P. Ning, S.D.C. di Vimercati, and P.F. Syverson, eds., pp. 584-597, 2007.

[13] T.J.E. Schwarz and E.L. Miller, “Store, Forget, and Check: Using Algebraic Signatures to Check Remotely Administered Storage,” Proc. 26th IEEE Int’l Conf. Distributed Computing Systems, p. 12, 2006.

[14] D.L.G. Filho and P.S.L.M. Barreto, “Demonstrating Data Possession and Uncheatable Data Transfer,” IACR Cryptology ePrint Archive, vol. 2006, p. 150, 2006.
