

International Journal of Engineering Trends and Technology (IJETT) – Volume 6 Number 3- Dec 2013

Database Oriented Validation of Theorems on Approximations of Classifications in Optimistic and Pessimistic Multigranulation Based Rough Sets

R. Raghavan

SITE, VIT University, Vellore-632014, Tamil Nadu, India

Abstract: Granular computing, introduced by Zadeh, deals with granules and includes all methods and tools that provide flexibility and adaptability in the resolution at which knowledge or information is extracted and represented. According to granular computing, an equivalence relation on the universe can be regarded as a granulation, and a partition on the universe can be regarded as a granulation space. Two types of multigranulation rough sets have been defined from the viewpoint of granular computing. The first, now called the optimistic multigranular rough set, was introduced by Qian et al. [4]. At the time it was the only type of multigranulation and was not yet called optimistic. After a second type of multigranulation was developed, the first came to be called the optimistic multigranulation rough set and the second the pessimistic multigranulation rough set. For granulations of higher order (more than two), the definitions and properties are similar. Several theorems on approximations of classifications using multigranular rough sets were introduced in [11]. This paper validates the theorems on approximations of classifications in [11] with the support of a database, for both optimistic and pessimistic multigranular rough sets.

Keywords: validation, approximations of classification, rough sets, optimistic multi-granular rough sets, pessimistic multi-granular rough sets

I. INTRODUCTION

In rough set theory, the classification of universes is considered especially important because of its use in classifying objects. The concept of approximations of classifications was introduced and studied by Busse [1], whose work revealed some important and interesting results. Analysis has shown that not all the properties of basic rough sets can be extended to the category of classifications. In [1] Busse established four theorems as properties of classifications, and the results obtained could be used in rule generation. The four theorems of Busse were unified by Tripathy et al. [7, 8], who established two theorems of the necessary and sufficient type from which several results, including the four theorems of Busse, can be derived. Their results also conform to the prediction of Pawlak. From the viewpoint of information systems, these four theorems were found to be useful in deriving rules from such systems. It was observed that Busse considered only five of the eleven possible cases of types of classifications; the other types reduce either directly or indirectly to the five cases considered by Busse. Another interesting aspect of the results in [7, 8] is the enumeration of possible types [2, 3, 9] of elements in a classification, which is based upon the types of rough sets introduced by Pawlak [3] and carried further by Tripathy et al. [7, 8]. From the viewpoint of granular computing, the above results on approximations of classifications were based upon a single granulation. Two types of multigranulation using rough sets were recently introduced in the literature.

ISSN: 2231-5381

http://www.ijettjournal.org


These are termed optimistic [4] and pessimistic [5] multigranulation.

The results of Tripathy et al. [7, 8] are extended to the multigranulation context. The types of basic rough sets were studied from the viewpoint of optimistic multigranulation rough sets in [6] and from the pessimistic multigranulation viewpoint in [10].

A classification $F = \{X_1, X_2, \ldots, X_n\}$ of a universe $U$ is such that $X_i \cap X_j = \emptyset$ for $i \neq j$ and $\bigcup_{k=1}^{n} X_k = U$. The approximations (lower and upper) of a classification $F$ were defined by Busse as

$\underline{R}F = \{\underline{R}X_1, \underline{R}X_2, \ldots, \underline{R}X_n\}$ and $\overline{R}F = \{\overline{R}X_1, \overline{R}X_2, \ldots, \overline{R}X_n\}$.

For any two equivalence relations $R$ and $S$ over $U$, the lower and upper optimistic multigranular rough approximations and pessimistic multigranular approximations of $F$ are defined in a natural manner as

$\underline{R+S}F = \{\underline{R+S}X_1, \underline{R+S}X_2, \ldots, \underline{R+S}X_n\}$ and $\overline{R+S}F = \{\overline{R+S}X_1, \overline{R+S}X_2, \ldots, \overline{R+S}X_n\}$  (1.1)

$\underline{R*S}F = \{\underline{R*S}X_1, \underline{R*S}X_2, \ldots, \underline{R*S}X_n\}$ and $\overline{R*S}F = \{\overline{R*S}X_1, \overline{R*S}X_2, \ldots, \overline{R*S}X_n\}$  (1.2)

II. DEFINITIONS

2.1 DEFINITION: Let $K = (U, \mathbf{R})$ be a knowledge base, $\mathbf{R}$ be a family of equivalence relations, $X \subseteq U$ and $R, S \in \mathbf{R}$. The optimistic multigranular lower approximation and optimistic multigranular upper approximation of $X$ with respect to $R$ and $S$ in $U$ are defined as

$\underline{R+S}X = \{x \mid [x]_R \subseteq X \text{ or } [x]_S \subseteq X\}$ and $\overline{R+S}X = \sim(\underline{R+S}(\sim X))$.

2.2 DEFINITION: Let $K = (U, \mathbf{R})$ be a knowledge base, $\mathbf{R}$ be a family of equivalence relations, $X \subseteq U$ and $R, S \in \mathbf{R}$. The pessimistic multigranular lower approximation and pessimistic multigranular upper approximation of $X$ with respect to $R$ and $S$ in $U$ are defined as

$\underline{R*S}X = \{x \mid [x]_R \subseteq X \text{ and } [x]_S \subseteq X\}$ and $\overline{R*S}X = \sim(\underline{R*S}(\sim X))$.

III. PROPERTIES OF MULTIGRANULATIONS

Some properties of multigranulations that are used in this work to establish the results are given below.

3.1 PROPERTIES OF OPTIMISTIC MULTIGRANULAR ROUGH SETS

The following properties of the optimistic multigranular rough sets were established in [4].

$\underline{R+S}(X) \subseteq X \subseteq \overline{R+S}(X)$  (3.1)

$\underline{R+S}(\emptyset) = \emptyset = \overline{R+S}(\emptyset)$, $\underline{R+S}(U) = U = \overline{R+S}(U)$  (3.2)

$\underline{R+S}(\sim X) = \sim\overline{R+S}(X)$  (3.3)

$\underline{R+S}(X) = \underline{R}X \cup \underline{S}X$  (3.4)

$\overline{R+S}(X) = \overline{R}X \cap \overline{S}X$  (3.5)

$\underline{R+S}(X) = \underline{S+R}(X)$, $\overline{R+S}(X) = \overline{S+R}(X)$  (3.6)

$\underline{R+S}(X \cap Y) \subseteq \underline{R+S}X \cap \underline{R+S}Y$  (3.7)

$\overline{R+S}(X \cup Y) \supseteq \overline{R+S}X \cup \overline{R+S}Y$  (3.8)

$\underline{R+S}(X \cup Y) \supseteq \underline{R+S}X \cup \underline{R+S}Y$  (3.9)

$\overline{R+S}(X \cap Y) \subseteq \overline{R+S}X \cap \overline{R+S}Y$  (3.10)

3.2 PROPERTIES OF PESSIMISTIC MULTIGRANULAR ROUGH SETS

The following properties of the pessimistic multigranular rough sets, which parallel the properties above, were established in [5].

(R*S)(X) X (R *S)(X)

(3.11)

(i) R S ( X )

i

(R*S)() = = (R*S)(), (R *S)(U) = U = (R*S)(U)

(3.12)

(R*S)(~ X) =~ (R *S)(X)

(3.13)

(R*S)(X) = RX SX

(3.14)

(R*S)(X) = RXUSX

(3.15)

(R*S)(X) = (S*R)(X), (R*S)(X) = (S*R)(X)

(3.16)

(R*S)(X Y) (R*S)X (R*S)Y

(3.17)

(R*S)(X Y) (R*S)X (R*S)Y

(3.18)

(R*S)(X Y) (R*S)X (R*S)Y

(3.19)

(R*S)(X Y) (R*S)X (R*S)Y

(3.20)

R S Xj

U.

j I C

i I

(ii) R * S( X j ) R * SX j U.

jI

jIC

PROOF: R + S( X j )

jI

x Usuch that [x]R X j or[x]S X j .

jI

jI

Thus [x]R ( X j ) = or [x]S ( X j ) = .

jIC

jIC

C

C

So, [x]R ( X j ) or [x]S ( X j ) .

jIC

jIC

C

Hence, x R + S( X j ) .

C

jI

IV.THEOREMS

ON

APPROXIMATIONS

OF

CLASSIFICATIONS IN MULTIGRANULATIONS

C C

This implies that x ( R + S ( X j ) )

j I C

.

The notation used in this section is denoted as

N n {1, 2, 3,....n } .

So, x R + S( X j ).

jI C

For any I N n , I C denotes the complement

of I in N n .

This proves that R + S (

THEOREM 4.1: For any I N n

The proof of (ii) is similar.

R S ( X i ) = U, if and only if

iI

R S( Xi ) U

iI

if and only if

Xj )=

jI C

R S ( U \ Xi ) =

iI

~ R S ( Xi ) =

iI

R S ( Xi ) = U

iI

The proof for pessimistic case is similar.

ISSN: 2231-5381

ON

THEOREMS

OF

APPROXIMATIONS OF CLASSIFICATIONS IN

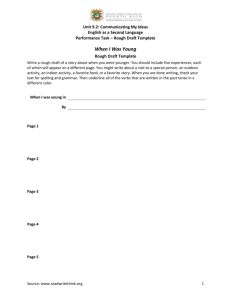

Facult

y

Name

Sam

PROOF:

THEOREM 4.2: For any I N n

VALIDATION

The following faculty details database of an university

with assumed data is used to validate the theorems of

approximations of classifications in multigranulation

rough sets.

R S ( X j ) .

jI C

RS (

V.

X j) U.

MULTIGRANULATIONS ROUGH SET THEORY

Xj )=.

jI C

RS(

j I C

Ram

Div

isio

n

NW

Grade

AP

Highes

t

Degree

M.C.A

Pr

Ph.D

APJ

M.sc.,

IS

Shyam SE

Peter

AI

AS P

Ph.D

Roger

ES

P

Ph.D

Native

State

Tamil

Nadu

Andhra

Pradesh

Tamil

Nadu

Tamil

Nadu

Tamil

Nadu

,

http://www.ijettjournal.org

Page 127

International Journal of Engineering Trends and Technology (IJETT) – Volume 6 Number 3- Dec 2013

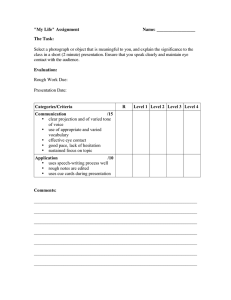

AI

APJ

M.Sc.,

Mishra ES

APJ

M.Sc.,

Albert

Andhra

Pradesh

Tamil

Nadu

Hari

IS

SP

Ph.D

Orissa

John

SE

AP

M.C.A

Smith

NW

ASP

Ph.D

West

Bengal

Orissa

Linz

AI

SP

Ph.D

Orissa

Keny

SE

P

Ph.D

Karnataka

ASP

Ph.D

APJ

M.Sc.,

Tamil

Nadu

Karnataka

Willia ES

ms

Martin IS

APJ

M.Sc.,

Karnataka

Lakma SE

n

Sita

AI

ASP

Ph.D

AP

M.Tech

West

Bengal

Karnataka

Fatima ES

AP

M.Tech

Muker

jee

Preeth

a

IS

SP

Ph.D

SE

SP

Ph.D

Jacob

NW

West

Bengal

West

Bengal

Karnataka

The database stated above is used for the validation. Here NW indicates Networks, SE indicates Software Engineering, AI indicates Artificial Intelligence, ES indicates Embedded Systems and IS indicates Information Systems. Similarly, AP indicates Assistant Professor, APJ indicates Assistant Professor (Junior), ASP indicates Associate Professor, SP indicates Senior Professor and Pr indicates Professor.

The universe and the equivalence relations are defined below.

U = {shyam, albert, mishra, martin, jacob, sam, john, sita, fatima, ram, peter, roger, hari, smith, keny, linz, williams, lakman, mukherjee, preetha}

U / Highest Degree = {{shyam, albert, mishra, martin, jacob}, {sam, john}, {sita, fatima}, {ram, peter, roger, hari, smith, keny, linz, williams, lakman, mukherjee, preetha}}

U / Native State = {{sam, shyam, peter, roger, mishra, williams}, {ram, albert}, {hari, smith, linz}, {john, lakman, fatima, mukherjee}, {keny, martin, jacob, sita, preetha}}

U / Division = {{sam, smith, jacob}, {shyam, john, keny, lakman, preetha}, {peter, albert, linz, sita}, {roger, mishra, williams, fatima}, {ram, hari, martin, mukherjee}}

Let us define a classification F, namely Grade, from the faculty details table as given below.

F = U / Grade = {{shyam, albert, mishra, martin, jacob}, {sam, john, sita, fatima}, {peter, smith, williams, lakman}, {linz, mukherjee, preetha, hari}, {ram, roger, keny}}

$F = \{X_1, X_2, X_3, X_4, X_5\}$

$N_n = \{1, 2, 3, 4, 5\}$

For validating Theorem 4.1, let $I \subset N_n$ with $I = \{1, 2, 3, 4\}$ and $I^C = \{5\}$. Let U/Grade be the classification named F, U/R be U / Native State and U/S be U / Division.
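The quotient sets listed above are the groups of faculty members that share a value of one attribute. The following is a minimal Python sketch of that grouping, not the author's implementation. It assumes the first five table rows as read from the faculty table (names lowercased), and the names `ROWS`, `COLUMNS` and `partition_by` are hypothetical.

```python
from collections import defaultdict

# Illustrative subset: the first five rows of the faculty table, as read
# from the table above (an assumption of this sketch, names lowercased).
ROWS = [
    # name,   division, grade, highest degree, native state
    ("sam",   "NW", "AP",  "M.C.A", "Tamil Nadu"),
    ("ram",   "IS", "Pr",  "Ph.D",  "Andhra Pradesh"),
    ("shyam", "SE", "APJ", "M.Sc",  "Tamil Nadu"),
    ("peter", "AI", "ASP", "Ph.D",  "Tamil Nadu"),
    ("roger", "ES", "Pr",  "Ph.D",  "Tamil Nadu"),
]

# Column index of each attribute inside a row tuple.
COLUMNS = {"division": 1, "grade": 2, "degree": 3, "state": 4}


def partition_by(rows, attribute):
    """Return U/attribute: names grouped by equal values of one attribute."""
    col = COLUMNS[attribute]
    classes = defaultdict(set)
    for row in rows:
        classes[row[col]].add(row[0])
    return [frozenset(names) for names in classes.values()]


degree_classes = partition_by(ROWS, "degree")
grade_classes = partition_by(ROWS, "grade")

if __name__ == "__main__":
    for block in degree_classes:
        print(sorted(block))
```

Each attribute induces an equivalence relation (two members are related when they share the attribute value), so the blocks returned are pairwise disjoint and cover the rows supplied.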


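5.1 VALIDATION OF THEOREM 4.1

For any $I \subset N_n$, $\overline{R+S}(\bigcup_{i \in I} X_i) = U$ if and only if $\underline{R+S}(\bigcup_{j \in I^C} X_j) = \emptyset$.

OPTIMISTIC CASE

Take

$\overline{R+S}(\bigcup_{i \in I} X_i) = \overline{R+S}(X_1 \cup X_2 \cup X_3 \cup X_4)$

$= \sim(\underline{R+S}(\sim(X_1 \cup X_2 \cup X_3 \cup X_4)))$

$= \sim(\underline{R+S}(X_5)) = \sim\{x \mid [x]_R \subseteq X_5 \text{ or } [x]_S \subseteq X_5\}$

Here the fifth class $X_5$ is {ram, roger, keny}. No class of U / Native State or of U / Division is contained in $X_5$, so

$= \sim(\emptyset \cup \emptyset) = U$

$\underline{R+S}(\bigcup_{j \in I^C} X_j) = \underline{R+S}(X_5) = \{x \mid [x]_R \subseteq X_5 \text{ or } [x]_S \subseteq X_5\} = \emptyset \cup \emptyset = \emptyset$

So $\overline{R+S}(\bigcup_{i \in I} X_i) = U$ if and only if $\underline{R+S}(\bigcup_{j \in I^C} X_j) = \emptyset$.

Hence validated.

PESSIMISTIC CASE

To prove: $\overline{R*S}(\bigcup_{i \in I} X_i) = U$ if and only if $\underline{R*S}(\bigcup_{j \in I^C} X_j) = \emptyset$.

Take

$\overline{R*S}(\bigcup_{i \in I} X_i) = \overline{R*S}(X_1 \cup X_2 \cup X_3 \cup X_4)$

$= \sim(\underline{R*S}(\sim(X_1 \cup X_2 \cup X_3 \cup X_4)))$

$= \sim(\underline{R*S}(X_5)) = \sim\{x \mid [x]_R \subseteq X_5 \text{ and } [x]_S \subseteq X_5\}$

Here the fifth class $X_5$ is {ram, roger, keny}.

$= \sim(\emptyset \cap \emptyset) = U$

$\underline{R*S}(\bigcup_{j \in I^C} X_j) = \underline{R*S}(X_5) = \{x \mid [x]_R \subseteq X_5 \text{ and } [x]_S \subseteq X_5\} = \emptyset \cap \emptyset = \emptyset$

So $\overline{R*S}(\bigcup_{i \in I} X_i) = U$ if and only if $\underline{R*S}(\bigcup_{j \in I^C} X_j) = \emptyset$.

Hence validated.

5.2 VALIDATION OF THEOREM 4.2

For any $I \subset N_n$,

(i) $\underline{R+S}(\bigcup_{i \in I} X_i) \neq \emptyset \Rightarrow \bigcup_{j \in I^C} \overline{R+S}X_j \neq U$.

(ii) $\underline{R*S}(\bigcup_{i \in I} X_i) \neq \emptyset \Rightarrow \bigcup_{j \in I^C} \overline{R*S}X_j \neq U$.

$F = \{X_1, X_2, X_3, X_4, X_5\}$

$N_n = \{1, 2, 3, 4, 5\}$

Let $I \subset N_n$ with $I = \{1, 2\}$ and $I^C = \{3, 4, 5\}$. For validating Theorem 4.2, let U/Grade be the classification named F, U/R be U / Highest Degree and U/S be U / Native State.

OPTIMISTIC CASE

$\underline{R+S}(\bigcup_{i \in I} X_i) = \underline{R+S}(X_1 \cup X_2) = \{x \mid [x]_R \subseteq X_1 \cup X_2 \text{ or } [x]_S \subseteq X_1 \cup X_2\}$

The Highest Degree classes {shyam, albert, mishra, martin, jacob}, {sam, john} and {sita, fatima} are each contained in $X_1 \cup X_2$, while no Native State class is, so

$= \{shyam, albert, mishra, martin, jacob, sam, john, sita, fatima\} \cup \emptyset$

$= \{shyam, albert, mishra, martin, jacob, sam, john, sita, fatima\} \neq \emptyset$

$\bigcup_{j \in I^C} \overline{R+S}X_j \subseteq \overline{R+S}(X_3 \cup X_4 \cup X_5)$

$= \sim\underline{R+S}(\sim(X_3 \cup X_4 \cup X_5))$

$= \sim\underline{R+S}(X_1 \cup X_2)$

$= \sim(\{shyam, albert, mishra, martin, jacob, sam, john, sita, fatima\}) \neq U$

So $\underline{R+S}(\bigcup_{i \in I} X_i) \neq \emptyset$ and $\bigcup_{j \in I^C} \overline{R+S}X_j \neq U$.

Hence validated.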
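The hand computation for Theorem 4.1 can also be checked mechanically for every choice of $I$, not only $I = \{1, 2, 3, 4\}$. The sketch below is illustrative rather than the author's procedure. It assumes the Native State classes follow the faculty table (Ram and Albert from Andhra Pradesh, Peter from Tamil Nadu), and all function and variable names are hypothetical.

```python
from itertools import combinations


def blocks(*groups):
    """Build a partition from space-separated groups of names."""
    return [frozenset(g.split()) for g in groups]


# Partitions transcribed from Section V (names lowercased; assumed data).
NATIVE_STATE = blocks(
    "sam shyam peter roger mishra williams",
    "ram albert",
    "hari smith linz",
    "john lakman fatima mukherjee",
    "keny martin jacob sita preetha",
)
DIVISION = blocks(
    "sam smith jacob",
    "shyam john keny lakman preetha",
    "peter albert linz sita",
    "roger mishra williams fatima",
    "ram hari martin mukherjee",
)
GRADE = blocks(  # the classification F = {X1, ..., X5}
    "shyam albert mishra martin jacob",
    "sam john sita fatima",
    "peter smith williams lakman",
    "linz mukherjee preetha hari",
    "ram roger keny",
)
U = frozenset().union(*GRADE)


def lower(partition, x):
    """Single-granulation lower approximation: union of classes inside x."""
    return frozenset().union(*[b for b in partition if b <= x])


def opt_lower(r, s, x):
    """Optimistic lower approximation: [x]_R or [x]_S is contained in X."""
    return lower(r, x) | lower(s, x)


def pes_lower(r, s, x):
    """Pessimistic lower approximation: both [x]_R and [x]_S are in X."""
    return lower(r, x) & lower(s, x)


def upper(low, r, s, x):
    """Upper approximation by duality: complement of lower(complement)."""
    return U - low(r, s, U - x)


def theorem_4_1_holds(low, r, s, classes):
    """Check upper(union over I) == U  iff  lower(union over I^C) is empty."""
    n = len(classes)
    for size in range(1, n):
        for idx in combinations(range(n), size):
            inside = frozenset().union(*[classes[i] for i in idx])
            outside = U - inside  # union of the X_j with j in I^C
            left = upper(low, r, s, inside) == U
            right = low(r, s, outside) == frozenset()
            if left != right:
                return False
    return True


if __name__ == "__main__":
    print(theorem_4_1_holds(opt_lower, NATIVE_STATE, DIVISION, GRADE))
    print(theorem_4_1_holds(pes_lower, NATIVE_STATE, DIVISION, GRADE))
```

Because the upper approximation is computed through the duality of Definitions 2.1 and 2.2, the check exercises exactly the chain of equivalences used in the proof of Theorem 4.1.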


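PESSIMISTIC CASE

To prove: $\underline{R*S}(\bigcup_{i \in I} X_i) \neq \emptyset \Rightarrow \bigcup_{j \in I^C} \overline{R*S}X_j \neq U$.

Take $I = \{3, 4, 5\}$ and $I^C = \{1, 2\}$.

$\underline{R*S}(\bigcup_{i \in I} X_i) = \underline{R*S}(X_3 \cup X_4 \cup X_5)$

$= \{x \mid [x]_R \subseteq X_3 \cup X_4 \cup X_5 \text{ and } [x]_S \subseteq X_3 \cup X_4 \cup X_5\}$

$= \{ram, peter, roger, hari, smith, linz, keny, williams, lakman, mukherjee, preetha\} \cap \{hari, smith, linz\}$

$= \{hari, smith, linz\} \neq \emptyset$

$\bigcup_{j \in I^C} \overline{R*S}X_j = \overline{R*S}(X_1 \cup X_2)$

$= \sim\underline{R*S}(\sim(X_1 \cup X_2))$

$= \sim\underline{R*S}(X_3 \cup X_4 \cup X_5) = \sim(\{hari, smith, linz\}) \neq U$

So $\underline{R*S}(\bigcup_{i \in I} X_i) \neq \emptyset$ and $\bigcup_{j \in I^C} \overline{R*S}X_j \neq U$.

Hence validated.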
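The implication of Theorem 4.2 can be checked in the same mechanical way, with U/R taken as Highest Degree and U/S as Native State. This is a hedged sketch under the same data assumptions as the listings in Section V (in particular the Andhra Pradesh class is taken to be {ram, albert}); the helper names are hypothetical and the code is not the author's procedure.

```python
from itertools import combinations


def blocks(*groups):
    """Build a partition from space-separated groups of names."""
    return [frozenset(g.split()) for g in groups]


# Partitions transcribed from Section V (names lowercased; assumed data).
HIGHEST_DEGREE = blocks(
    "shyam albert mishra martin jacob",
    "sam john",
    "sita fatima",
    "ram peter roger hari smith keny linz williams lakman mukherjee preetha",
)
NATIVE_STATE = blocks(
    "sam shyam peter roger mishra williams",
    "ram albert",
    "hari smith linz",
    "john lakman fatima mukherjee",
    "keny martin jacob sita preetha",
)
GRADE = blocks(  # the classification F = {X1, ..., X5}
    "shyam albert mishra martin jacob",
    "sam john sita fatima",
    "peter smith williams lakman",
    "linz mukherjee preetha hari",
    "ram roger keny",
)
U = frozenset().union(*GRADE)


def lower(partition, x):
    """Single-granulation lower approximation: union of classes inside x."""
    return frozenset().union(*[b for b in partition if b <= x])


def opt_lower(r, s, x):
    """Optimistic lower approximation (Definition 2.1)."""
    return lower(r, x) | lower(s, x)


def pes_lower(r, s, x):
    """Pessimistic lower approximation (Definition 2.2)."""
    return lower(r, x) & lower(s, x)


def upper(low, r, s, x):
    """Upper approximation by duality: complement of lower(complement)."""
    return U - low(r, s, U - x)


def theorem_4_2_holds(low, r, s, classes):
    """Check: lower(union over I) nonempty implies union of uppers != U."""
    n = len(classes)
    for size in range(1, n):
        for idx in combinations(range(n), size):
            rest = [j for j in range(n) if j not in idx]
            inside = frozenset().union(*[classes[i] for i in idx])
            uppers = frozenset().union(
                *[upper(low, r, s, classes[j]) for j in rest]
            )
            if low(r, s, inside) and uppers == U:
                return False
    return True


# The pessimistic case worked above: I = {3, 4, 5}.
x345 = GRADE[2] | GRADE[3] | GRADE[4]
pes_result = pes_lower(HIGHEST_DEGREE, NATIVE_STATE, x345)

if __name__ == "__main__":
    print(sorted(pes_result))
    print(theorem_4_2_holds(opt_lower, HIGHEST_DEGREE, NATIVE_STATE, GRADE))
    print(theorem_4_2_holds(pes_lower, HIGHEST_DEGREE, NATIVE_STATE, GRADE))
```

The check takes the union of the individual upper approximations $\overline{R+S}X_j$ rather than the upper approximation of the union, which is the form in which the theorem is stated.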

V. CONCLUSION

In this paper the theorems on approximations of classifications were validated with the support of a database, for both optimistic and pessimistic multigranular rough sets. The validation shows how each step of the theorems plays out on the database used. Such a practical implementation with a database makes the analytical work carried out in the previous paper [11] easier to understand.

VI. REFERENCES

[1] Grzymala Busse, J.: Knowledge acquisition under uncertainty - a rough set approach, Journal of Intelligent and Robotics Systems, 1, (1988), pp. 3-16.

[2] Pawlak, Z.: Rough Classifications, International Journal of Man Machine Studies, 20, (1983), pp. 469-483.

[3] Pawlak, Z.: Theoretical aspects of reasoning about data, Kluwer Academic Publishers (London), (1991).

[4] Qian, Y.H. and Liang, J.Y.: Rough set method based on Multi-granulations, Proceedings of the 5th IEEE Conference on Cognitive Informatics, vol.1, (2006), pp. 297-304.

[5] Qian, Y.H., Liang, J.Y. and Dang, C.Y.: Pessimistic rough decision, in: Proceedings of RST 2010, Zhoushan, China, (2010), pp. 440-449.

[6] Raghavan, R. and Tripathy, B.K.: On Some Topological Properties of Multigranular Rough Sets, Journal of Advances in Applied Science Research, Volume 2(3), 2011, pp. 536-543.

[7] Tripathy, B.K.: On Approximation of classifications, rough equalities and rough equivalences, Studies in Computational Intelligence, vol.174, Rough Set Theory: A True Landmark in Data Analysis, Springer Verlag, (2009), pp. 85-136.

[8] Tripathy, B.K., Ojha, J., Mohanty, D. and Prakash Kumar, Ch. M.S.: On rough definability and types of approximation of classifications, vol.35, no.3, (2010), pp. 197-215.

[9] Tripathy, B.K. and Mitra, A.: Topological properties of rough sets and their applications, International Journal of Granular Computing, Rough Sets and Intelligent Systems (IJGCRSIS), (Switzerland), vol.1, no.4, (2010), pp. 355-369.

[10] Tripathy, B.K. and Nagaraju, M.: On Some Topological Properties of Pessimistic Multigranular Rough Sets, International Journal of Intelligent Systems and Applications, Vol.4, No.8, (2012), pp. 10-17.

[11] Tripathy, B.K. and Raghavan, R.: Some Algebraic properties of Multigranulations and an Analysis of Multigranular Approximations of Classifications, International Journal of Information Technology and Computer Science, Volume 7, 2013, pp. 63-70.

VII. BIOGRAPHY

R. Raghavan is an Assistant Professor (Senior) in the School of Information Technology and Engineering (SITE), VIT University at Vellore in India. He obtained his master's degree in computer applications from the University of Madras. He completed his M.S. (By Research) in information technology at the School of Information Technology and Engineering, VIT University. He is a life member of CSI. His current research interests include Rough Sets and Systems, Knowledge Engineering, Granular Computing, Intelligent Systems and Image Processing.