Document 12622444

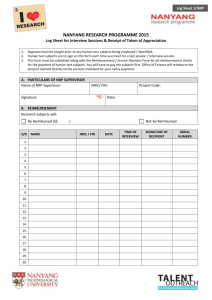

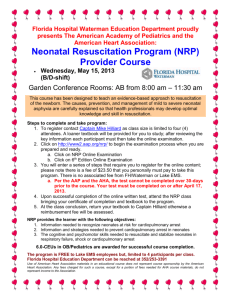
