
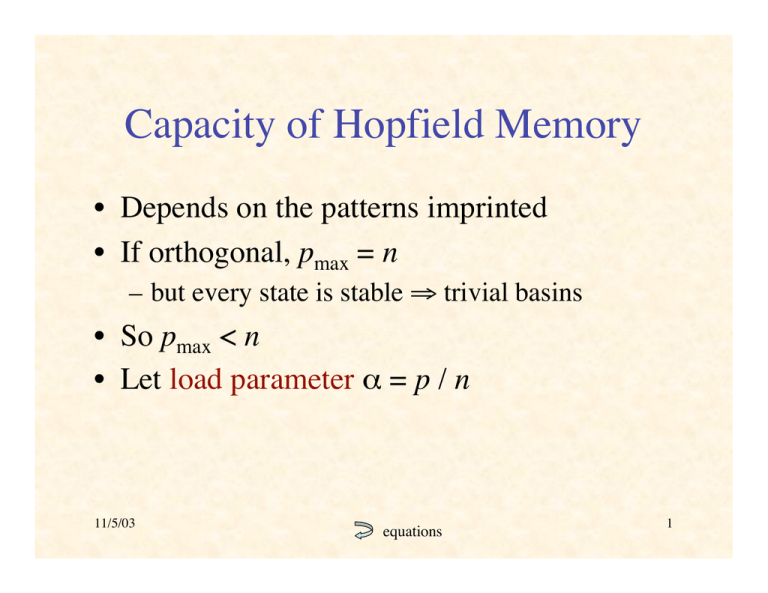

Capacity of Hopfield Memory

• Depends on the patterns imprinted

• If orthogonal, $p_{\max} = n$

– but every state is stable ⇒ trivial basins

• So $p_{\max} < n$

• Let load parameter $\alpha = p / n$

11/5/03


Single Bit Stability Analysis

• For simplicity, suppose the $x^k$ are random

• Then the $x^k \cdot x^m$ are sums of $n$ random ±1

– binomial distribution ≈ Gaussian

– in range $-n, \ldots, +n$

– with mean $\mu = 0$

– and variance $\sigma^2 = n$

• Probability sum $> t$:

$$\frac{1}{2}\left[1 - \operatorname{erf}\left(\frac{t}{\sqrt{2n}}\right)\right]$$

[See “Review of Gaussian (Normal) Distributions” on course website]
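As a rough check on the Gaussian approximation, the following Python sketch compares the exact binomial tail of a sum of n random ±1 with the erf expression ½[1 − erf(t/√(2n))]. The function names `exact_tail` and `gaussian_tail` are illustrative and not from the course materials, and no continuity correction is applied, so a small discrepancy is expected.

```python
import math


def exact_tail(n, t):
    """Exact Pr{sum > t} for a sum of n independent, equally likely +1/-1 values."""
    # If `heads` of the n values are +1, the sum equals 2*heads - n.
    favourable = sum(
        math.comb(n, heads) for heads in range(n + 1) if 2 * heads - n > t
    )
    return favourable / 2 ** n


def gaussian_tail(n, t):
    """Gaussian approximation with mean 0 and variance n."""
    return 0.5 * (1.0 - math.erf(t / math.sqrt(2.0 * n)))


if __name__ == "__main__":
    n = 100
    for t in (0, 10, 20, 30):
        print(t, exact_tail(n, t), gaussian_tail(n, t))
```

The two columns should agree more closely as n grows, which is the regime the slides assume.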


Approximation of Probability

Let crosstalk

$$C_i^m = \frac{1}{n} \sum_{k \neq m} x_i^k \left( x^k \cdot x^m \right)$$

We want $\Pr\{C_i^m > 1\} = \Pr\{n C_i^m > n\}$

Note:

$$n C_i^m = \sum_{\substack{k=1 \\ k \neq m}}^{p} \sum_{j=1}^{n} x_i^k x_j^k x_j^m$$

A sum of $n(p-1) \approx np$ random ±1

Variance $\sigma^2 = np$
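The variance estimate can be checked by simulation. The sketch below draws random ±1 patterns and accumulates the double sum over k ≠ m and j for one fixed bit. It makes one assumption that is not on the slide: the j = i term is skipped (no self-coupling), because that term equals $x_i^m$ for every k and is therefore not an independent random ±1. With that term dropped the sum has (n − 1)(p − 1) ≈ np terms. The function names and the choice i = 0, m = 0 are illustrative.

```python
import random


def crosstalk_sum(n, p, rng):
    """Return the sum over k != m and j != i of x_i^k x_j^k x_j^m, for i = 0, m = 0."""
    # p random patterns, each a list of n values drawn from {-1, +1}
    x = [[rng.choice((-1, 1)) for _ in range(n)] for _ in range(p)]
    i, m = 0, 0
    total = 0
    for k in range(p):
        if k == m:
            continue
        for j in range(n):
            if j == i:  # assumption: skip the self-coupling term
                continue
            total += x[k][i] * x[k][j] * x[m][j]
    return total


def empirical_variance(n, p, trials, seed=0):
    """Population variance of the crosstalk sum over independent pattern sets."""
    rng = random.Random(seed)
    samples = [crosstalk_sum(n, p, rng) for _ in range(trials)]
    mean = sum(samples) / trials
    return sum((s - mean) ** 2 for s in samples) / trials


if __name__ == "__main__":
    n, p = 50, 10
    print(empirical_variance(n, p, trials=1000), (n - 1) * (p - 1), n * p)
```

For p much smaller than n the three printed numbers should be of similar size, consistent with the approximation $\sigma^2 \approx np$.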


Probability of Bit Instability

$$\Pr\{n C_i^m > n\} = \frac{1}{2}\left[1 - \operatorname{erf}\left(\frac{n}{\sqrt{2np}}\right)\right] = \frac{1}{2}\left[1 - \operatorname{erf}\left(\sqrt{\frac{n}{2p}}\right)\right]$$

(fig. from Hertz & al. Intr. Theory Neur. Comp.)

Tabulated Probability of Single-Bit Instability

| $P_{\text{error}}$ | $\alpha$ |
|---|---|
| 0.1% | 0.105 |
| 0.36% | 0.138 |
| 1% | 0.185 |
| 5% | 0.37 |
| 10% | 0.61 |

(table from Hertz & al. Intr. Theory Neur. Comp.)
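The tabulated values can be reproduced from the single-bit instability formula by substituting the load parameter α = p/n, which turns √(n/2p) into √(1/(2α)). The following Python sketch does this; the function name `p_error` is illustrative, and the comparison values are the ones listed in the table.

```python
import math


def p_error(alpha):
    """Single-bit instability probability 0.5 * [1 - erf(sqrt(1 / (2 * alpha)))]."""
    return 0.5 * (1.0 - math.erf(math.sqrt(1.0 / (2.0 * alpha))))


if __name__ == "__main__":
    # (load parameter, tabulated probability) pairs taken from the table
    table = [(0.105, 0.001), (0.138, 0.0036), (0.185, 0.01), (0.37, 0.05), (0.61, 0.10)]
    for alpha, tabulated in table:
        print(f"alpha = {alpha:5.3f}  formula = {p_error(alpha):.4f}  table = {tabulated}")
```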


Spurious Attractors

• Mixture states:

– sums or differences of odd numbers of retrieval states

– number increases combinatorially with p

– shallower, smaller basins

– basins of mixtures swamp basins of retrieval states ⇒ overload

– useful as combinatorial generalizations?

– self-coupling generates spurious attractors

• Spin-glass states:

– not correlated with any finite number of imprinted patterns

– occur beyond overload because weights effectively random


Basins of Mixture States

(figure: states $x^{k_1}$, $x^{k_2}$, $x^{k_3}$ and $x^{\text{mix}}$)

$$x_i^{\text{mix}} = \operatorname{sgn}\left( x_i^{k_1} + x_i^{k_2} + x_i^{k_3} \right)$$
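A three-pattern mixture state is a bitwise majority vote over its component patterns. The Python sketch below builds one from random ±1 patterns and measures its overlap with each component. The helper names `mixture_state` and `overlap` are illustrative. For independent random patterns the mixture agrees with a given component on about three quarters of the bits, so each overlap should be close to 0.5.

```python
import random


def sgn(v):
    """Sign function; a sum of three +1/-1 values is odd, so it is never zero."""
    return 1 if v > 0 else -1


def mixture_state(a, b, c):
    """Bitwise majority of three +1/-1 patterns: sgn(a_i + b_i + c_i)."""
    return [sgn(ai + bi + ci) for ai, bi, ci in zip(a, b, c)]


def overlap(u, v):
    """Normalized dot product (u . v) / n, between -1 and +1."""
    return sum(ui * vi for ui, vi in zip(u, v)) / len(u)


if __name__ == "__main__":
    rng = random.Random(0)
    n = 2000
    patterns = [[rng.choice((-1, 1)) for _ in range(n)] for _ in range(3)]
    mix = mixture_state(*patterns)
    print([overlap(mix, x) for x in patterns])
```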


Fraction of Unstable Imprints

(n = 100)

(fig from Bar-Yam)

Number of Stable Imprints

(n = 100)

(fig from Bar-Yam)

Number of Imprints with Basins of Indicated Size

(n = 100)

(fig from Bar-Yam)

Summary of Capacity Results

• Absolute limit: $p_{\max} < \alpha_c n = 0.138\,n$

• If a small number of errors in each pattern permitted: $p_{\max} \propto n$

• If all or most patterns must be recalled perfectly: $p_{\max} \propto n / \log n$

• Recall: all this analysis is based on random patterns

• Unrealistic, but sometimes can be arranged


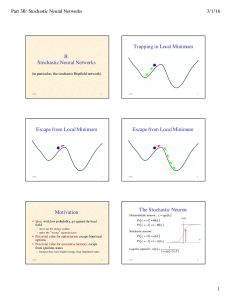

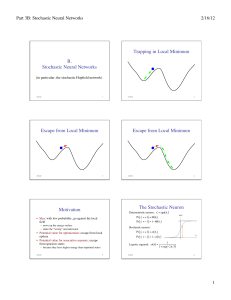

Stochastic Neural Networks

(in particular, the stochastic Hopfield network)


Trapping in Local Minimum


Escape from Local Minimum


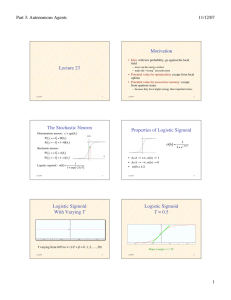

Motivation

• Idea: with low probability, go against the local field

– move up the energy surface

– make the “wrong” microdecision

• Potential value for optimization: escape from local optima

• Potential value for associative memory: escape from spurious states

– because they have higher energy than imprinted states


The Stochastic Neuron

Deterministic neuron: $s'_i = \operatorname{sgn}(h_i)$

$$\Pr\{s'_i = +1\} = \Theta(h_i)$$

$$\Pr\{s'_i = -1\} = 1 - \Theta(h_i)$$

Stochastic neuron:

$$\Pr\{s'_i = +1\} = \sigma(h_i)$$

$$\Pr\{s'_i = -1\} = 1 - \sigma(h_i)$$

Logistic sigmoid:

$$\sigma(h) = \frac{1}{1 + \exp(-2h/T)}$$

(figure: plot of $\sigma(h)$ against $h$)
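The stochastic update rule can be sketched in a few lines of Python. The names `sigma` and `stochastic_update` are illustrative. The sigmoid is evaluated through the identity 1/(1 + exp(−2h/T)) = ½(1 + tanh(h/T)), an implementation choice made here so that very small T does not overflow `exp`.

```python
import math
import random


def sigma(h, T):
    """Logistic sigmoid 1 / (1 + exp(-2h/T)), computed via the equivalent tanh form."""
    return 0.5 * (1.0 + math.tanh(h / T))


def stochastic_update(h, T, rng):
    """Return the new state: +1 with probability sigma(h), otherwise -1."""
    return 1 if rng.random() < sigma(h, T) else -1


if __name__ == "__main__":
    rng = random.Random(0)
    h = 0.5
    for T in (0.01, 0.1, 1.0, 10.0):
        samples = [stochastic_update(h, T, rng) for _ in range(10000)]
        frac_up = samples.count(1) / len(samples)
        print(f"T = {T:5.2f}  sigma = {sigma(h, T):.3f}  observed fraction +1 = {frac_up:.3f}")
```

At low T the neuron almost always follows the sign of the local field; at high T it approaches a fair coin flip.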


Properties of Logistic Sigmoid

$$\sigma(h) = \frac{1}{1 + e^{-2h/T}}$$

• As $h \to +\infty$, $\sigma(h) \to 1$

• As $h \to -\infty$, $\sigma(h) \to 0$

• $\sigma(0) = 1/2$


Logistic Sigmoid With Varying T

(figures: logistic sigmoid plotted for T = 0.5, 0.01, 0.1, 1, 10, 100)

Slope at origin = 1 / (2T)
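The slope at the origin follows from differentiating the logistic sigmoid. A short derivation, using only the definition of $\sigma(h)$ given on the slides, is sketched here together with the two temperature limits that the plots illustrate.

```latex
\begin{align*}
\sigma(h) &= \frac{1}{1 + e^{-2h/T}}, \\
\frac{d\sigma}{dh} &= \frac{2}{T}\,\frac{e^{-2h/T}}{\left(1 + e^{-2h/T}\right)^{2}}
  = \frac{2}{T}\,\sigma(h)\,\bigl[1 - \sigma(h)\bigr], \\
\left.\frac{d\sigma}{dh}\right|_{h=0} &= \frac{2}{T}\cdot\frac{1}{2}\cdot\frac{1}{2} = \frac{1}{2T}, \\
\lim_{T \to 0^{+}} \sigma(h) &= \Theta(h) \quad (h \neq 0), \qquad
\lim_{T \to \infty} \sigma(h) = \frac{1}{2}.
\end{align*}
```

So small T recovers the deterministic neuron, while large T makes the update nearly independent of the local field.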