Reading Lecture 22 Definition of Covariance Imprinting Multiple Patterns


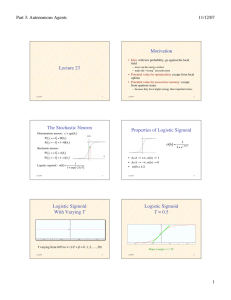

Part 3: Autonomous Agents

11/8/07

Reading

Lecture 22

• Flake, ch. 20 (“Genetics and Evolution”)


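Imprinting Multiple Patterns

• Let $\mathbf{x}^1, \mathbf{x}^2, \ldots, \mathbf{x}^p$ be patterns to be imprinted

• Define the sum-of-outer-products matrix:

$$W_{ij} = \frac{1}{n} \sum_{k=1}^{p} x_i^k x_j^k$$

$$\mathbf{W} = \frac{1}{n} \sum_{k=1}^{p} \mathbf{x}^k (\mathbf{x}^k)^{\mathsf{T}}$$

Definition of Covariance

Consider samples $(x_1, y_1), (x_2, y_2), \ldots, (x_N, y_N)$

Let $\bar{x} = \langle x_k \rangle$ and $\bar{y} = \langle y_k \rangle$

Covariance of x and y values:

$$
\begin{aligned}
C_{xy} &= \langle (x_k - \bar{x})(y_k - \bar{y}) \rangle \\
&= \langle x_k y_k - \bar{x}\, y_k - x_k\, \bar{y} + \bar{x}\,\bar{y} \rangle \\
&= \langle x_k y_k \rangle - \bar{x} \langle y_k \rangle - \langle x_k \rangle \bar{y} + \bar{x}\,\bar{y} \\
&= \langle x_k y_k \rangle - \bar{x}\,\bar{y} - \bar{x}\,\bar{y} + \bar{x}\,\bar{y}
\end{aligned}
$$

$$C_{xy} = \langle x_k y_k \rangle - \bar{x}\,\bar{y}$$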
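The two definitions above can be checked numerically. The following is a minimal sketch in plain Python; the function names (`covariance`, `covariance_shortcut`, `imprint`) and the sample data are illustrative assumptions, not part of the lecture.

```python
def covariance(xs, ys):
    """C_xy as the mean product of deviations from the means."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    return sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / n


def covariance_shortcut(xs, ys):
    """C_xy as <x_k y_k> - x_bar * y_bar (the simplified form)."""
    n = len(xs)
    mean_product = sum(x * y for x, y in zip(xs, ys)) / n
    return mean_product - (sum(xs) / n) * (sum(ys) / n)


def imprint(patterns):
    """Sum-of-outer-products weights: W_ij = (1/n) sum_k x_i^k x_j^k."""
    n = len(patterns[0])
    return [[sum(x[i] * x[j] for x in patterns) / n for j in range(n)]
            for i in range(n)]


# Hypothetical sample data, chosen only for illustration.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 1.0, 4.0, 3.0]
print(covariance(xs, ys), covariance_shortcut(xs, ys))

# Two bipolar patterns of length n = 4.
W = imprint([[1, -1, 1, -1], [1, 1, -1, -1]])
for row in W:
    print(row)
```

Both covariance forms should agree up to rounding, and `W` is symmetric by construction.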

Weights & the Covariance Matrix

Sample pattern vectors: $\mathbf{x}^1, \mathbf{x}^2, \ldots, \mathbf{x}^p$

Covariance of ith and jth components:

$$C_{ij} = \langle x_i^k x_j^k \rangle - \bar{x}_i\, \bar{x}_j$$

If $\forall i: \bar{x}_i = 0$ (±1 equally likely in all positions):

$$C_{ij} = \langle x_i^k x_j^k \rangle = \frac{1}{p} \sum_{k=1}^{p} x_i^k x_j^k$$

$$\therefore\ \mathbf{W} = \frac{p}{n}\, \mathbf{C}$$

Characteristics of Hopfield Memory

• Distributed (“holographic”)

– every pattern is stored in every location (weight)

• Robust

– correct retrieval in spite of noise or error in patterns

– correct operation in spite of considerable weight damage or noise
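As a quick check of the relation W = (p/n) C, the sketch below builds both matrices for a small hand-picked pattern set whose components all average to zero. The pattern set and function names are assumptions made for illustration.

```python
def outer_product_weights(patterns):
    """W_ij = (1/n) sum_k x_i^k x_j^k."""
    n = len(patterns[0])
    return [[sum(x[i] * x[j] for x in patterns) / n for j in range(n)]
            for i in range(n)]


def component_covariance(patterns):
    """C_ij = <x_i^k x_j^k> - mean_i * mean_j, averaging over the p patterns."""
    p = len(patterns)
    n = len(patterns[0])
    means = [sum(x[i] for x in patterns) / p for i in range(n)]
    return [[sum(x[i] * x[j] for x in patterns) / p - means[i] * means[j]
             for j in range(n)]
            for i in range(n)]


# Hypothetical patterns (p = 4, n = 6); every component has mean zero.
patterns = [
    [1, 1, 1, 1, 1, 1],
    [-1, -1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1],
    [-1, 1, -1, 1, -1, 1],
]
p, n = len(patterns), len(patterns[0])
W = outer_product_weights(patterns)
C = component_covariance(patterns)

# Largest elementwise difference between W and (p/n) C.
max_gap = max(abs(W[i][j] - (p / n) * C[i][j])
              for i in range(n) for j in range(n))
print(max_gap)
```

If the component means were not zero, the extra term in C would break the proportionality, which is why the slide states the zero-mean condition.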


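Stability of Imprinted Memories

• Suppose the state is one of the imprinted patterns $\mathbf{x}^m$

• Then:

$$
\begin{aligned}
\mathbf{h} = \mathbf{W}\mathbf{x}^m &= \Bigl[ \frac{1}{n} \sum_{k} \mathbf{x}^k (\mathbf{x}^k)^{\mathsf{T}} \Bigr] \mathbf{x}^m \\
&= \frac{1}{n} \sum_{k} \mathbf{x}^k (\mathbf{x}^k)^{\mathsf{T}} \mathbf{x}^m \\
&= \frac{1}{n}\, \mathbf{x}^m (\mathbf{x}^m)^{\mathsf{T}} \mathbf{x}^m + \frac{1}{n} \sum_{k \ne m} \mathbf{x}^k (\mathbf{x}^k)^{\mathsf{T}} \mathbf{x}^m \\
&= \mathbf{x}^m + \frac{1}{n} \sum_{k \ne m} (\mathbf{x}^k \cdot \mathbf{x}^m)\, \mathbf{x}^k
\end{aligned}
$$

Interpretation of Inner Products

• $\mathbf{x}^k \cdot \mathbf{x}^m = n$ if they are identical

– highly correlated

• $\mathbf{x}^k \cdot \mathbf{x}^m = -n$ if they are complementary

– highly correlated (reversed)

• $\mathbf{x}^k \cdot \mathbf{x}^m = 0$ if they are orthogonal

– largely uncorrelated

• $\mathbf{x}^k \cdot \mathbf{x}^m$ measures the crosstalk between patterns k and m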
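The decomposition of the local field into the sought pattern plus crosstalk can be verified on a small example. This is a minimal sketch; the two patterns and the helper names are assumptions chosen so the arithmetic is easy to follow by hand.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def sgn(v):
    return 1 if v >= 0 else -1


def local_field(patterns, state):
    """h = W x, computed as (1/n) sum_k x^k (x^k . x) without forming W."""
    n = len(state)
    h = [0.0] * n
    for x in patterns:
        overlap = dot(x, state)
        for i in range(n):
            h[i] += x[i] * overlap / n
    return h


def crosstalk(patterns, m):
    """(1/n) sum over k != m of (x^k . x^m) x^k."""
    n = len(patterns[m])
    c = [0.0] * n
    for k, x in enumerate(patterns):
        if k == m:
            continue
        overlap = dot(x, patterns[m])
        for i in range(n):
            c[i] += x[i] * overlap / n
    return c


# Hypothetical patterns (n = 8) with inner product 2, so crosstalk is small.
patterns = [
    [1, 1, 1, 1, 1, 1, 1, 1],
    [1, 1, 1, 1, 1, -1, -1, -1],
]
m = 0
h = local_field(patterns, patterns[m])
c = crosstalk(patterns, m)
stable = [sgn(v) for v in h] == patterns[m]
print(h, c, stable)
```

Here every component of the crosstalk has magnitude 0.25, so the sign of each field component is set by the imprinted pattern and the state is stable.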

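Cosines and Inner Products

$$\mathbf{u} \cdot \mathbf{v} = \|\mathbf{u}\|\, \|\mathbf{v}\| \cos\theta_{uv}$$

[Figure: vectors u and v separated by the angle $\theta_{uv}$]

If u is bipolar, then $\|\mathbf{u}\|^2 = \mathbf{u} \cdot \mathbf{u} = n$

Hence, for bipolar u and v, $\mathbf{u} \cdot \mathbf{v} = \sqrt{n}\, \sqrt{n}\, \cos\theta_{uv} = n \cos\theta_{uv}$

Conditions for Stability

Substituting $\mathbf{x}^k \cdot \mathbf{x}^m = n \cos\theta_{km}$ into $\mathbf{h} = \mathbf{x}^m + \frac{1}{n} \sum_{k \ne m} (\mathbf{x}^k \cdot \mathbf{x}^m)\, \mathbf{x}^k$ cancels the factor $1/n$.

Stability of entire pattern:

$$\mathbf{x}^m = \operatorname{sgn}\Bigl( \mathbf{x}^m + \sum_{k \ne m} \mathbf{x}^k \cos\theta_{km} \Bigr)$$

Stability of a single bit:

$$x_i^m = \operatorname{sgn}\Bigl( x_i^m + \sum_{k \ne m} x_i^k \cos\theta_{km} \Bigr)$$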

Sufficient Conditions for Instability (Case 1)

Suppose $x_i^m = -1$. Then unstable if:

$$(-1) + \sum_{k \ne m} x_i^k \cos\theta_{km} > 0$$

$$\sum_{k \ne m} x_i^k \cos\theta_{km} > 1$$

Sufficient Conditions for Instability (Case 2)

Suppose $x_i^m = +1$. Then unstable if:

$$(+1) + \sum_{k \ne m} x_i^k \cos\theta_{km} < 0$$

$$\sum_{k \ne m} x_i^k \cos\theta_{km} < -1$$
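The two instability cases can be applied bit by bit. The sketch below uses the bipolar identity u · v = n cos θ, so the crosstalk at bit i is the sum over k ≠ m of x_i^k cos θ_km. The three patterns are hypothetical and deliberately correlated so that one bit fails; the function names are assumptions.

```python
def cosine(u, v):
    """cos(theta_uv) for bipolar vectors, using u . v = n cos(theta_uv)."""
    return sum(a * b for a, b in zip(u, v)) / len(u)


def unstable_bits(patterns, m):
    """Indices i at which imprinted pattern m violates single-bit stability."""
    target = patterns[m]
    bad = []
    for i, bit in enumerate(target):
        c = sum(x[i] * cosine(x, target)
                for k, x in enumerate(patterns) if k != m)
        # Case 1: bit is -1 and crosstalk exceeds +1.
        # Case 2: bit is +1 and crosstalk is below -1.
        if (bit == -1 and c > 1) or (bit == 1 and c < -1):
            bad.append(i)
    return bad


# Hypothetical, strongly correlated patterns (n = 8).
patterns = [
    [1, 1, 1, 1, 1, 1, 1, -1],   # the sought pattern x^m
    [1, 1, 1, 1, 1, 1, 1, 1],    # cos(theta) = 0.75 with x^m
    [1, 1, 1, 1, 1, 1, -1, 1],   # cos(theta) = 0.5 with x^m
]
print(unstable_bits(patterns, 0))
```

At the last bit the crosstalk is 0.75 + 0.5 = 1.25, which exceeds 1 while the imprinted bit is −1, so Case 1 applies there.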

Sufficient Conditions for

Stability

1

n

$x

k

i

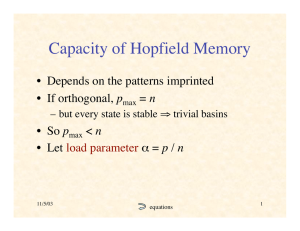

Capacity of Hopfield Memory

• Depends on the patterns imprinted

• If orthogonal, pmax = n

cos " km % 1

– but every state is stable ⇒ trivial basins

k#m

• So pmax < n

• Let load parameter α = p / n

The crosstalk with the sought pattern must be

sufficiently

small

!

11/8/07

13

11/8/07

Single Bit Stability Analysis

• For simplicity, suppose $\mathbf{x}^k$ are random

• Then $\mathbf{x}^k \cdot \mathbf{x}^m$ are sums of n random ±1

– binomial distribution ≈ Gaussian

– in range –n, …, +n

– with mean µ = 0

– and variance σ² = n

• Probability sum > t:

$$\frac{1}{2} \Bigl[ 1 - \operatorname{erf}\Bigl( \frac{t}{\sqrt{2n}} \Bigr) \Bigr]$$

[See “Review of Gaussian (Normal) Distributions” on course website]

Approximation of Probability

Let crosstalk $C_i^m = \frac{1}{n} \sum_{k \ne m} x_i^k (\mathbf{x}^k \cdot \mathbf{x}^m)$

We want $\Pr\{C_i^m > 1\} = \Pr\{n C_i^m > n\}$

Note:

$$n C_i^m = \sum_{\substack{k=1 \\ k \ne m}}^{p} \sum_{j=1}^{n} x_i^k x_j^k x_j^m$$

A sum of $n(p-1) \approx np$ random ±1s

Variance σ² = np

Probability of Bit Instability

$$\Pr\{n C_i^m > n\} = \frac{1}{2} \Bigl[ 1 - \operatorname{erf}\Bigl( \frac{n}{\sqrt{2np}} \Bigr) \Bigr] = \frac{1}{2} \Bigl[ 1 - \operatorname{erf}\Bigl( \sqrt{\frac{n}{2p}} \Bigr) \Bigr]$$

[Figure: probability of bit instability] (fig. from Hertz & al. Intr. Theory Neur. Comp.)

Tabulated Probability of Single-Bit Instability

| P_error | α |
|---|---|
| 0.1% | 0.105 |
| 0.36% | 0.138 |
| 1% | 0.185 |
| 5% | 0.37 |
| 10% | 0.61 |

(table from Hertz & al. Intr. Theory Neur. Comp.)
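The tabulated values follow directly from the bit-instability formula once it is written in terms of the load parameter, since n / 2p = 1 / (2α). A minimal sketch using only the standard library follows; the function name `p_error` is an assumption.

```python
import math


def p_error(alpha):
    """Pr{bit unstable} = 1/2 [1 - erf(sqrt(n / 2p))], with alpha = p / n."""
    return 0.5 * (1.0 - math.erf(math.sqrt(1.0 / (2.0 * alpha))))


# Load parameters taken from the table of single-bit instability.
for alpha in (0.105, 0.138, 0.185, 0.37, 0.61):
    print(f"alpha = {alpha:<5}  P_error = {100.0 * p_error(alpha):.2f}%")
```

The printed percentages should land close to the table entries; small differences come from rounding in the table.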

Spurious Attractors

• Mixture states:

– sums or differences of odd numbers of retrieval states

– number increases combinatorially with p

– shallower, smaller basins

– basins of mixtures swamp basins of retrieval states ⇒ overload

– useful as combinatorial generalizations?

– self-coupling generates spurious attractors

• Spin-glass states:

– not correlated with any finite number of imprinted patterns

– occur beyond overload because weights effectively random

Basins of Mixture States

[Figure: retrieval states $\mathbf{x}^{k_1}$, $\mathbf{x}^{k_2}$, $\mathbf{x}^{k_3}$ and the mixture state $\mathbf{x}^{\mathrm{mix}}$]

$$x_i^{\mathrm{mix}} = \operatorname{sgn}\bigl( x_i^{k_1} + x_i^{k_2} + x_i^{k_3} \bigr)$$

Fraction of Unstable Imprints (n = 100)

[Figure] (fig from Bar-Yam)

Number of Stable Imprints (n = 100)

[Figure] (fig from Bar-Yam)

Number of Imprints with Basins of Indicated Size (n = 100)

[Figure] (fig from Bar-Yam)
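A three-pattern mixture state can be built directly from the majority rule x_i^mix = sgn(x_i^k1 + x_i^k2 + x_i^k3). The sketch below uses three small hypothetical patterns and checks whether the mixture is a fixed point of a network imprinted with them; the helper names are assumptions.

```python
def sgn(v):
    return 1 if v >= 0 else -1


def overlap(u, v):
    return sum(a * b for a, b in zip(u, v))


def mixture(x1, x2, x3):
    """Bitwise majority vote; a sum of three +/-1 values is never zero."""
    return [sgn(a + b + c) for a, b, c in zip(x1, x2, x3)]


def local_field(patterns, state):
    """h_i = (1/n) sum_k x_i^k (x^k . state), i.e. h = W state."""
    n = len(state)
    return [sum(x[i] * overlap(x, state) for x in patterns) / n
            for i in range(n)]


# Hypothetical mutually orthogonal patterns (n = 4).
patterns = [
    [1, 1, 1, 1],
    [1, 1, -1, -1],
    [1, -1, 1, -1],
]
mix = mixture(*patterns)
overlaps = [overlap(mix, x) for x in patterns]
is_fixed_point = [sgn(h) for h in local_field(patterns, mix)] == mix
print(mix, overlaps, is_fixed_point)
```

In this example the mixture overlaps each of its three parents by n/2, so it is equally close to all of them without being any one of them, and it is itself stable: a spurious attractor.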

Summary of Capacity Results

• Absolute limit: $p_{\max} < \alpha_c n = 0.138\, n$

• If a small number of errors in each pattern permitted: $p_{\max} \propto n$

• If all or most patterns must be recalled perfectly: $p_{\max} \propto n / \log n$

• Recall: all this analysis is based on random patterns

• Unrealistic, but sometimes can be arranged


Stochastic Neural Networks

(in particular, the stochastic Hopfield network)

Motivation

• Idea: with low probability, go against the local field

– move up the energy surface

– make the “wrong” microdecision

• Potential value for optimization: escape from local optima

• Potential value for associative memory: escape from spurious states

– because they have higher energy than imprinted states

Trapping in Local Minimum

[Figure]

Escape from Local Minimum

[Figures: two slides]

The Stochastic Neuron

Deterministic neuron: $s_i' = \operatorname{sgn}(h_i)$

$$\Pr\{s_i' = +1\} = \Theta(h_i)$$

$$\Pr\{s_i' = -1\} = 1 - \Theta(h_i)$$

where Θ is the unit step function.

Stochastic neuron:

$$\Pr\{s_i' = +1\} = \sigma(h_i)$$

$$\Pr\{s_i' = -1\} = 1 - \sigma(h_i)$$

Logistic sigmoid:

$$\sigma(h) = \frac{1}{1 + \exp(-2h/T)}$$

[Figure: σ(h) plotted against h]
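The stochastic neuron rule can be sketched in a few lines: draw a uniform random number and set the state to +1 with probability σ(h). The function names, the overflow guard, and the sample values of h and T are assumptions for illustration.

```python
import math
import random


def sigma(h, T):
    """Logistic sigmoid 1 / (1 + exp(-2h/T)), guarded against overflow."""
    z = -2.0 * h / T
    if z > 700.0:          # exp(z) would overflow; the probability is ~0
        return 0.0
    return 1.0 / (1.0 + math.exp(z))


def stochastic_update(h, T, rng):
    """New state: +1 with probability sigma(h), otherwise -1."""
    return 1 if rng.random() < sigma(h, T) else -1


rng = random.Random(0)     # fixed seed so the run is repeatable
h, T = 0.5, 1.0            # hypothetical local field and pseudo-temperature
samples = [stochastic_update(h, T, rng) for _ in range(10000)]
frac_up = samples.count(1) / len(samples)
print(frac_up, sigma(h, T))
```

The empirical fraction of +1 outcomes should be close to σ(h), and the neuron occasionally goes against the local field, which is the "wrong" microdecision described under Motivation.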

Properties of Logistic Sigmoid

$$\sigma(h) = \frac{1}{1 + e^{-2h/T}}$$

• As h → +∞, σ(h) → 1

• As h → –∞, σ(h) → 0

• σ(0) = 1/2

Logistic Sigmoid With Varying T

[Figure: σ(h) for T varying from 0.05 to ∞ (1/T = β = 0, 1, 2, …, 20)]

Logistic Sigmoid: T = 0.5, T = 0.01, T = 0.1, T = 1, T = 10, T = 100

[Figures: one plot of σ(h) per value of T]

Slope at origin = 1 / (2T)
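The slope at the origin follows from differentiating the sigmoid. A short worked derivation, using only the definition of σ(h) given in these slides:

```latex
\sigma(h) = \frac{1}{1 + e^{-2h/T}}
\quad\Longrightarrow\quad
\sigma'(h) = \frac{(2/T)\, e^{-2h/T}}{\bigl(1 + e^{-2h/T}\bigr)^{2}}
           = \frac{2}{T}\, \sigma(h)\, \bigl[1 - \sigma(h)\bigr],
\qquad
\sigma'(0) = \frac{2}{T} \cdot \frac{1}{2} \cdot \frac{1}{2} = \frac{1}{2T}.
```

So as T → 0 the slope grows without bound and σ approaches a step function (the deterministic neuron), while as T → ∞ the curve flattens toward the constant 1/2.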

Pseudo-Temperature

• Temperature = measure of thermal energy (heat)

• Thermal energy = vibrational energy of molecules

• A source of random motion

• Pseudo-temperature = a measure of nondirected (random) change

• Logistic sigmoid gives same equilibrium probabilities as Boltzmann-Gibbs distribution

Transition Probability

Recall, change in energy (for a flip, $\Delta s_k = -2 s_k$):

$$\Delta E = -\Delta s_k\, h_k = 2 s_k h_k$$

$$\Pr\{s_k' = \pm 1 \mid s_k = \mp 1\} = \sigma(\pm h_k) = \sigma(-s_k h_k)$$

$$\Pr\{s_k \to -s_k\} = \frac{1}{1 + \exp(2 s_k h_k / T)} = \frac{1}{1 + \exp(\Delta E / T)}$$
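The two forms of the flip probability, one via the sigmoid and one via the energy change, can be compared numerically. This is a minimal sketch; the function names and the sample values of s_k, h_k and T are assumptions.

```python
import math


def sigma(h, T):
    """Logistic sigmoid 1 / (1 + exp(-2h/T))."""
    return 1.0 / (1.0 + math.exp(-2.0 * h / T))


def flip_probability(s_k, h_k, T):
    """Pr{s_k -> -s_k} = 1 / (1 + exp(dE / T)), with dE = 2 s_k h_k."""
    delta_e = 2.0 * s_k * h_k
    return 1.0 / (1.0 + math.exp(delta_e / T))


T = 1.0
h_k = 0.5                                   # hypothetical local field
uphill = flip_probability(+1, h_k, T)       # flip against the field, dE > 0
downhill = flip_probability(-1, h_k, T)     # flip along the field, dE < 0
print(uphill, downhill, sigma(-h_k, T), sigma(h_k, T))
```

Energy-raising flips come out with probability below 1/2 and energy-lowering flips above 1/2, and each matches σ(−s_k h_k).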

Stability

• Are stochastic Hopfield nets stable?

• Thermal noise prevents absolute stability

• But with symmetric weights: average values $\langle s_i \rangle$ become time-invariant

Does “Thermal Noise” Improve Memory Performance?

• Experiments by Bar-Yam (pp. 316-20): n = 100, p = 8

• Random initial state

• To allow convergence, after 20 cycles set T = 0

• How often does it converge to an imprinted pattern?
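The experimental protocol listed above (random start, 20 noisy cycles, then T = 0) can be sketched as follows. This is not Bar-Yam's code: the asynchronous random-order update, the quench limit, the choice T = 0.5, the small number of trials, and counting the complement of an imprinted pattern as a success are all assumptions made for illustration.

```python
import math
import random


def sgn(v):
    return 1 if v >= 0 else -1


def sigma(h, T):
    z = -2.0 * h / T
    return 0.0 if z > 700.0 else 1.0 / (1.0 + math.exp(z))


def imprint(patterns):
    n = len(patterns[0])
    return [[sum(x[i] * x[j] for x in patterns) / n for j in range(n)]
            for i in range(n)]


def sweep(W, s, T, rng):
    """One cycle: update every neuron once, in random order, in place."""
    n = len(s)
    for i in rng.sample(range(n), n):
        h = sum(w * sj for w, sj in zip(W[i], s))
        if T == 0:
            s[i] = sgn(h)                      # deterministic update
        else:
            s[i] = 1 if rng.random() < sigma(h, T) else -1


def trial(W, patterns, T, rng, noisy_cycles=20, max_quench=50):
    """Random start, noisy cycles at temperature T, then relax at T = 0."""
    n = len(patterns[0])
    s = [rng.choice((-1, 1)) for _ in range(n)]
    for _ in range(noisy_cycles):
        sweep(W, s, T, rng)
    for _ in range(max_quench):
        before = list(s)
        sweep(W, s, 0, rng)
        if s == before:                        # reached a fixed point
            break
    # Assumption: a pattern or its complement both count as retrieval.
    return any(s == x or s == [-v for v in x] for x in patterns)


rng = random.Random(0)
n, p = 100, 8
patterns = [[rng.choice((-1, 1)) for _ in range(n)] for _ in range(p)]
W = imprint(patterns)
trials = 5                                     # kept small so this runs quickly
fraction = sum(trial(W, patterns, 0.5, rng) for _ in range(trials)) / trials
print(f"fraction converging to an imprinted pattern: {fraction:.2f}")
```

Repeating this over a range of T values, with many more trials per value, gives a curve of the kind the experiment asks about; the result from five trials is only a rough indication.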

Probability of Random State Converging on Imprinted State (n=100, p=8)

[Figures: two slides; horizontal axis T = 1/β] (fig. from Bar-Yam)

Analysis of Stochastic Hopfield Network

• Complete analysis by Daniel J. Amit & colleagues in mid-80s

• See D. J. Amit, Modeling Brain Function: The World of Attractor Neural Networks, Cambridge Univ. Press, 1989.

• The analysis is beyond the scope of this course

Phase Diagram

[Figure: phase diagram with four regions] (fig. from Domany & al. 1991)

(A) imprinted = minima

(B) imprinted, but s.g. = min.

(C) spin-glass states

(D) all states melt

Conceptual Diagrams of Energy Landscape

[Figure] (fig. from Hertz & al. Intr. Theory Neur. Comp.)

Phase Diagram Detail

[Figure] (fig. from Domany & al. 1991)