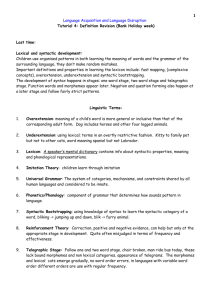

Lexical and syntactic representations in the brain: An