Document 11582112

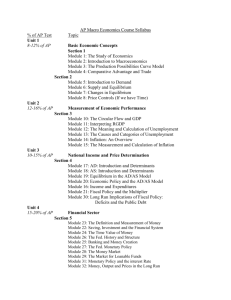

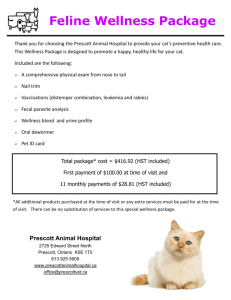

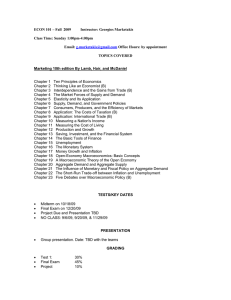
