Testing the limits of quantum mechanics: motivation, state of play, prospects

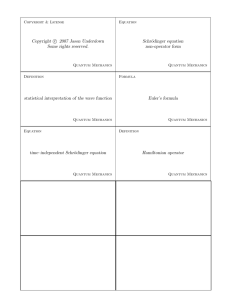
