Chabot College Program Review Report 2015 ‐2016

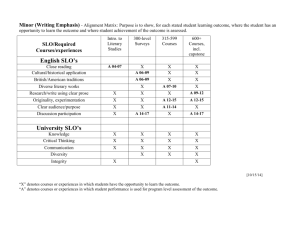

Year 3 of Program Review Cycle

Mathematics

Submitted on Oct. 24, 2014

Contact: Ming Ho

Appendix B2: “Closing the Loop” Course-Level Assessment Reflections

| Item | Response |
| --- | --- |
| Course | ALL |
| Semester assessment data gathered | Spring 2014 |
| Number of sections offered in the semester | 82 sections combined |
| Number of sections assessed | 79 sections assessed |
| Percentage of sections assessed | 96.3% |
| Semester held “Closing the Loop” discussion | Fall 2014 |
| Faculty members involved in “Closing the Loop” discussion | 9 |

Form Instructions:

Complete a separate Appendix B2 form for each Course-Level assessment reported in this Program Review. These courses should be listed in Appendix B1: Student Learning Outcomes Assessment Reporting Schedule.

Part I: CLO Data Reporting. For each CLO, obtain Class Achievement data in aggregate for all sections assessed in eLumen.

Part II: CLO Reflections. Based on student success reported in Part I, reflect on the individual CLO.

Part III: Course Reflection. In reviewing all the CLOs and your findings, reflect on the course as a whole.

PART I: COURSE-LEVEL OUTCOMES – DATA RESULTS

| Course-Level Outcome | Defined Target Scores* (CLO Goal) | Actual Scores** (eLumen data) |
| --- | --- | --- |
| (CLO) 1: (Critical Thinking) Analyze mathematical problems critically using logical methodology. | See SLO Attachments (p8-27) | See SLO Attachments (p8-27) |
| (CLO) 2: (Communication) Communicate mathematical ideas, understand definitions, and interpret concepts. | See SLO Attachments (p8-27) | See SLO Attachments (p8-27) |
| (CLO) 3: (Development of the Whole Person) Increase confidence in understanding mathematical concepts, communicating ideas and thinking analytically. | See SLO Attachments (p8-27) | See SLO Attachments (p8-27) |

If more CLOs are listed for the course, add another row to the table.

* Defined Target Scores: What scores in eLumen from your students would indicate success for this CLO? (Example: 75% of the class scored either 3 or 4)

** Actual scores: What is the actual percent of students that meet defined target based on the eLumen data collected in this assessment cycle?

PART II: COURSE-LEVEL OUTCOME REFLECTIONS

CONSIDER THE COURSE-LEVEL OUTCOMES INDIVIDUALLY (THE NUMBER OF CLOS WILL DIFFER BY COURSE)

A. COURSE-LEVEL OUTCOME (CLO) 1:

1. How do your current scores match with your above target for student success in this course level outcome?

See SLO Attachments (p8-27)

2. Reflection: Based on the data gathered, and considering your teaching experiences and your discussions with other faculty, what reflections and insights do you have?

See SLO Attachments (p8-27)

B. COURSE-LEVEL OUTCOME (CLO) 2:

1. How do your current scores match with your above target for student success in this course level outcome?

See SLO Attachments (p8-27)

2. Reflection: Based on the data gathered, and considering your teaching experiences and your discussions with other faculty, what reflections and insights do you have?

See SLO Attachments (p8-27)

C. COURSE-LEVEL OUTCOME (CLO) 3:

1. How do your current scores match with your above target for student success in this course level outcome?

See SLO Attachments (p8-27)

2. Reflection: Based on the data gathered, and considering your teaching experiences and your discussions with other faculty, what reflections and insights do you have?

See SLO Attachments (p8-27)

PART III: COURSE REFLECTIONS AND FUTURE PLANS

1. What changes were made to your course based on the previous assessment cycle, the prior Closing the Loop reflections and other faculty discussions?

See SLO Attachments (p8-27)

2. Based on the current assessment and reflections, what course-level and programmatic strengths have the assessment reflections revealed? What actions has your discipline determined might be taken as a result of your reflections, discussions, and insights?

See SLO Attachments (p8-27)

3. What is the nature of the planned actions (please check all that apply)?

[X] Curricular

[X] Pedagogical

[ ] Resource based

[ ] Change to CLO or rubric

[ ] Change to assessment methods

[X] Other: See SLO Attachments (p8-27)

Appendix C: Program Learning Outcomes

Considering your feedback, findings, and/or information that has arisen from the course level discussions, please reflect on each of your Program Level Outcomes.

Program: Math AS

PLO #1: (Critical Thinking) Analyze mathematical problems critically using logical methodology.

PLO #2: (Communication) Communicate mathematical ideas, understand definitions, and interpret concepts.

PLO #3: (Development of the Whole Person) Increase confidence in understanding mathematical concepts, communicating ideas and thinking analytically.

What questions or investigations arose as a result of these reflections or discussions?

See SLO Attachments (p8-27)

What program-level strengths have the assessment reflections revealed?

See SLO Attachments (p8-27)

What actions has your discipline determined might be taken to enhance the learning of students completing your program?

See SLO Attachments (p8-27)

Program: Math AA

PLO #1: (Critical Thinking) Analyze mathematical problems critically using logical methodology.

PLO #2: (Communication) Communicate mathematical ideas, understand definitions, and interpret concepts.

PLO #3: (Development of the Whole Person) Increase confidence in understanding mathematical concepts, communicating ideas and thinking analytically.

What questions or investigations arose as a result of these reflections or discussions?

See SLO Attachments (p8-27)

What program-level strengths have the assessment reflections revealed?

See SLO Attachments (p8-27)

What actions has your discipline determined might be taken to enhance the learning of students completing your program?
See SLO Attachments (p8-27)

Student Learning Outcomes for the Math Subdivision

After going through the SLO process in Fall 2011, it was obvious that the process was broken: the data gathered was not meaningful, with its biased accumulation, its limited scope, and nearly empty results. Faculty used different questions to assess the same CLO; the assessments were given at varying times during the semester; no consistent grading guidelines were followed. Even if we discarded the highly regarded and cherished notion of academic freedom and had professors use a unified set of problems and a uniform grading rubric, the scope of the CLOs would still be limiting. Assessing a student’s performance on a few select problems addresses only a small portion of the course’s curriculum, easily less than 20% of the total expected learning outcomes listed in the course outline. It would be analogous to evaluating the effectiveness of a hospital’s triage department by assessing correct usage of a blood pressure cuff.

The Mathematics subdivision met in Spring 2012. We decided to discard the previous method and its data. We needed a different method of assessment. Goals of the new method include:

Broad scope. Mathematics contains fundamental concepts threaded through several courses. We cannot focus on one topic’s instruction found in one course. There needs to be a way to look at this globally.

Easy to administer and evaluate. A major concern about the process we had set up was that the assessment had too much of a negative impact on teaching the course: vital class time was being used for assessment not linked to grades. Also, instructors were spending additional time grading and tabulating results. Anything that returns time to instruction would be vital.

Useful data. The data gathered should paint a snapshot of the whole course, not of a small percentage of the course’s material.

Respect Academic Freedom. This process should not interfere with Academic Freedom, the foundation of the instructor/student relationship. The focus needs to be on the course and not the instructor.

Integration. There needs to be full integration between the established SLOs for math (CLOs, PLOs, and CWLGs) and Curriculum, Program Review, and Budget Requests.

While alternate solutions were being explored, an interim process was established. Although we could not use the meaningless data from the previous CLO cycle, we still felt that we needed to comply with the goal of Student Learning Outcomes. We established a monthly meeting where faculty shared teaching best practices on a variety of topics (e.g., Generalizations, Graphing, Utilizing Technology, Transition from Trig to Pre-calculus, etc.). These conversations proved beneficial and should be part of the new process.

The New Process: PLOs and CLOs.

For each degree (AA and AS), as well as each of our courses, the learning outcomes will be changed to:

1) (Critical Thinking) Analyze mathematical problems critically using logical methodology.

2) (Communication) Communicate mathematical ideas, understand definitions, and interpret concepts.

3) (Development of the Whole Person) Increase confidence in understanding mathematical concepts, communicating ideas and thinking analytically.

While these are clearly meant for the PLO level, they can also be used at the course level using material from the course outline. More than one question will target each of these three CLOs. The reason is that focusing on one topic from one course yields little information on which to build a course of action for improvements. For example, gathering data on whether or not an algebra student can factor a trinomial does not address the root cause of what might be happening. By using multiple questions for each outcome, we have a variety of questions to pull from to assess those CLOs.

These PLOs and CLOs could easily be shared with students. Our communicated statement could be: “Student Learning Outcome: Students will analyze mathematical problems critically using a logical methodology, communicate these ideas, understand definitions, and increase their confidence in interpreting, understanding, and communicating mathematical concepts.” This could be placed on syllabi or on our website.

The Assessment:

Students will complete a twelve-question multiple-choice self-assessment survey. Ten questions will be problems based on the course’s outcomes from the course outline, with at least two questions based on communicating information. Instead of asking students to perform the task and having instructors evaluate the data, the survey offers the student these choices for each problem:

A. I know immediately what to do, and I know I will get this answer 100% right.

B. I think I can get this if I really think about it, and most likely do well on this problem.

C. I could do this problem if I had my notes or textbook; I don’t know how well I will do.

D. If I had aid of a tutor while working on this problem, I could probably do it.

E. I have no idea how to do this problem.

Two other questions will target the confidence of the student:

Question 11.) Based on your performance in the class, what grade do you anticipate receiving?

Question 12.) Your confidence in math:

A. Has improved when you compare it to the beginning of the semester.

B. Is about the same as it was at the beginning of the semester.

C. Has gotten worse since the beginning of the semester.

Shifting from evaluating completed problems to self-assessment offers a different perspective on the learning process. It also allows for uniform assessment across all sections of a course by removing discrepancies between instructors in which questions are used and how they are graded. (A sample survey is in Appendix A on p26.)

Gathering the Data.

A simple mechanism is used to collect the student responses: a common “bubble in” form filled out during class ensures that a significant number of students participate while minimizing the impact on class time. During the assessment process in Spring 2014, the surveys took at most 10 minutes to complete in each section. 79 of the 82 sections completed the survey. Math 33 is only taught in the Fall; it will be assessed in Fall 2014. MTH 122 was administered by e-mail with poor results, so it will be reassessed in Fall 2014.

The data was input using a reader with appropriate software, saving time over grading. Instructors deposited the completed forms in the division office to be scanned. The data shows not only students’ results but also which sections participated, which helps ensure a significant sample size. IR’s group scanned the surveys to provide an Excel spreadsheet with student answers.

For each student, his or her individual median score is determined. That student’s score for each question is compared to his or her individual median. If the question yielded a score less than or equal to the median minus one, then the question is deemed to be a low outlier. By comparing the ten responses in this manner, we are essentially asking the student where his or her weaknesses are. It is the spread of the scores that helps identify the low outliers, not whether the student over- or underestimates his or her performance. (A student who marks every question with the exact same answer has no spread and therefore no low outliers.) (See Appendix B on p28 for sample computation.)

For each question, the percentage of students with that question as a low outlier is determined, and the questions are ranked for each class. This ranking is what is used in the Closing the Loop discussions. The results from Spring 2014 are included in Appendix C on p29.
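The low-outlier computation described above can be sketched in a few lines of Python. This is a minimal illustration, not the spreadsheet procedure actually used: the numeric mapping of the answer choices (A = 5 down to E = 1) is an assumption, since the report states only that a question counts as a low outlier when its score is at most the student's median minus one, and the function names are hypothetical.

```python
from statistics import median

# Assumed mapping (not stated in the report): A is the most confident answer.
SCORE = {"A": 5, "B": 4, "C": 3, "D": 2, "E": 1}


def low_outliers(responses):
    """Flag each question whose score is <= the student's own median minus one."""
    scores = [SCORE[r] for r in responses]
    m = median(scores)
    return [s <= m - 1 for s in scores]


def rank_questions(all_responses):
    """Return (question number, percent of students with a low outlier),
    sorted from the highest percentage to the lowest."""
    n_students = len(all_responses)
    n_questions = len(all_responses[0])
    counts = [0] * n_questions
    for responses in all_responses:
        for i, flagged in enumerate(low_outliers(responses)):
            counts[i] += flagged  # True counts as 1
    percents = [(i + 1, 100.0 * c / n_students) for i, c in enumerate(counts)]
    return sorted(percents, key=lambda p: p[1], reverse=True)


# Two hypothetical students answering questions 1-10.
ranking = rank_questions(["AAAAABBBCD", "CCCCCCCCCC"])
```

Because each student is compared only with his or her own median, a uniformly confident or uniformly unsure student contributes no low outliers; only the relative dips within one student's answers are counted.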
Closing the Loops

After all courses were assessed and the data had been tabulated and ranked, we met twice to identify topics, trends, and behaviors to work on for all our courses. The first meeting (on 9/2/14) focused on Course Level Outcomes, and the second (on 9/24/14) focused on Program Level Outcomes. During the robust discussions, however, it was hard to separate the two levels, as we discovered that CLO and PLO results are intrinsically integrated. The discussion was not limited to the survey alone but included insight from our experiences as math instructors. We analyzed our data using diverse lenses: curriculum, budget, program level, pathway level, course level, etc. The topics and action items identified below will be the subject of our Monthly Meeting of Mathematical Minds (M4) for the next three years. It is our opinion that the results from our survey are indicators of issues and should not be used as the sole source for the discussion.

For our first meeting of M4, which also doubled as our PLO discussion, our focus was on program level outcome results. In addition to coming up with a list of program and curriculum topics, we dove right in to start working on issues that came from our SLO results.

First, we evaluated the disconnect between the students’ perceived performance and their actual performance in the 103, 104, 65, 55, 53, and 37 courses. We compared these program-level course results with the content from the course level and found that students struggled with foundational concepts, the concepts that carry them through to the next course in the sequence. With the majority of our faculty teaching the higher level courses, we do not have enough full-time faculty to fully support the broad spectrum of math classes. We are stretched thin. We need additional full-time faculty to close this gap in spectrum support. We fear that, with our shrinking number of full-time faculty, we could not effectively implement changes resulting from our SLO discussions for all courses, making any discussion surrounding learning outcomes moot.

Another Program Level discussion also concerned the topics from the 103, 104, 65, 55, 53, and 37 courses. Most topics that appeared at the top of the respective lists are foundational in nature, with connections to prerequisites. Our discussion also raised the validity of our placement exams: are they placing the student in the appropriate class? We decided to investigate not only the validity of the exams but also whether other placement exams are available on the market. We will follow up in a future M4 discussion to review the results.

A third discussion from that meeting arose when we noted that Math 53 has a very low success rate. Students feel that they are performing better than they actually are. Yet those students who continue into the subsequent Math 43 class succeed at a much higher rate. We posed a question: is there a problem with Math 53, or is the low success rate a natural result when compared with the success rates for students taking the two-semester 65/55 sequence? We decided to table this discussion for a future meeting, as more data would need to be gathered.

Please note: attempting to solve all these problems in one or two Closing the Loop discussions would be cumbersome and would yield only superficial solutions. The discussions addressing each of the listed topics and issues will be spread out over the next few years so that we can devote an adequate amount of time to each topic. Our next M4 meeting will address using technology as an aid to instruction for our algebra students.

The following are topics/issues that will be discussed at future M4 meetings:

Program Level: Multiple New Full Time Faculty Members; Technology as a Tool for Instruction; Placement Exam Revision.

Curriculum: Math 36/37 into 20 Transitions; Math 20 to prepare for Math 1, 2, 3, 4, 6, and 8; Converting Math 103/104 into Non-Credit; Using results to compare old 65/55 to new 65/55 sequence; Success in 53/43 vs. 65/55/43 compared to 53 vs. 65/55.

Pathway Level (Topics Spanning Across Several Courses): Definition of Functions; Inverse Functions and their Domains and Ranges; Polar Coordinates; Visualizing Topics; Interpretations / Applications.

Course Level (Topics Limited to One or Two Courses): Percents; Variation; Pt-Slope Equations; Taylor Series; Binomial Distribution.

Follow Up Assessments:

When subsequent assessments are done, we can compare the two sets of results. We will know that we were successful if the problem is no longer ranked high among the low outlier percentages. If it remains, then we know that we did not achieve the goal, and further analysis will need to be done. This might include adjusting the actual questions used on the assessment.

Confidence in Math

The goal of the SLO process is to identify gaps and work to close them as a team. The Math subdivision has identified this process as a way of determining areas for improvement. This method provides additional insight not found through traditional direct assessment: a student’s confidence in the material. A lack of confidence in math is one of the fundamental issues we face, and this gives us another method for quantifying it. See Appendices E, F, and G (p36-38) for the results.

Validating the Indirect Method

Some questions have come up regarding the validity of the data, since it uses an indirect method; the data does not come from a direct assessment of student understanding. For Spring 2014, Robert Yest compared his students’ final exam solutions (from his Beginning Algebra, Intermediate Algebra, Pre-Calculus, Calculus I, and Discrete Math courses) to the SLO results for each of the five courses. Since the nature of the final exam questions was intrinsically different from the SLO questionnaire, it would not make sense to compare the numbers quantitatively. Instead, a qualitative ranking was created based on the percentage of students who had some conceptual mistake on the final exam. The two rankings were compared, and in the five courses there is a strong similarity between the corresponding rankings. The results are attached in Appendix D on p34.

We believe that our students understand their gaps in knowledge. Listening to them offers us nearly the same results as if we directly tested them, and yet offers additional information regarding confidence not found in an exam. We do not believe that our Closing the Loop discussions would have been any different had we tested students directly.

In Conclusion

The math division believes that this process best balances, and still meets, the objectives we had set for ourselves when we overhauled our system.

This assessment is very easy to administer. Between 5 and 10 minutes of unsupervised class time was generally needed. Many instructors multitasked by having the students fill out the survey while the instructor was passing back items. No time was spent on grading assessments, as there was no grading. The only post-assessment resource used was our IR department scanning the bubble forms. 79 of the 82 sections completed the survey, a very strong turnout.

The data is robust enough that we can continually mine it for topics for improvement in instruction.
The Post Closing the Loop process is continual; its format is flexible so that we can address identified program, pathway, curriculum, and course-specific issues in depth and in a manner where suggested changes can be rolled out effectively and efficiently. We see the problem using a global view and act on it locally.

The process is deeply integrated with the course outline of record while definitively mapped to the Course Learning Outcomes, Program Learning Outcomes, and finally College Wide Learning Goals.

Finally, we believe that this system balances the multifaceted, sometimes contradictory, demands of the Student Learning Outcomes in a way that puts the focus back on improving instruction. It minimizes the cumbersome and time-consuming administrative aspects of what was being done in prior versions, allowing us to create a venue for sharing ideas and starting conversations. These conversations are ongoing, evolving, thriving, and not static.

Appendix A: Mathematics 55 • Intermediate Algebra Course Assessment Survey

For questions 1–10, you are asked whether you can do the problem or, if not, what level of support you would need. You are not asked to work out the answer. This is an anonymous survey; your instructor will not know your choices. Also, your instructor will not be evaluated on these results. Your honest opinion is vital to the success of this survey.

Use the following scale for each question. Mark your answers on the answer sheet provided.

A. I know immediately what to do, and I know I will get this answer 100% right.

B. I think I can get this if I really think about it, and most likely do well on this problem.

C. I could do this problem if I had my notes or textbook, and I don’t know how well I will do.

D. If I had aid of a tutor while working on this problem, I could probably do it.

E. I have no idea how to do this problem.

1. Solve 3x 2 11x 5 0 using the quadratic formula.

2. Solve the inequality 2x 5 7

3. Determine the inverse of the one-to-one function f x 1 . x3

4. Divide and simplify 2 5i . 4 3i

5. Solve the equation 2x 5 x 10

6. A town’s population is 12,000 and growing at a rate of 6% per year. How long will it take for the town’s population to reach 15,000?

7. Describe in your own words the definition of a function.

8. Sketch the graph of f x 1 2 x

9. Express the domain of f x log3 x 2 in interval notation.

10. Rationalize the denominator for 2x 3 7 18x y 2 .

For questions #11 and #12, answer the question with the appropriate response.

11. Based on your performance in the class, what grade do you anticipate receiving?

12. Your confidence in math:

A. Has improved when you compare it to the beginning of the semester.

B. Is about the same as it was at the beginning of the semester.

C. Has gotten worse since the beginning of the semester.

Appendix B:

Appendix C: Results for Student Learning Outcomes for Math 2014

| Course | Survey Qstn # | Type of Question | # of Low Outliers | Total Students | Percent (**) |
| --- | --- | --- | --- | --- | --- |
| 37 | 10 | Graph a Polar Equation | 53 | 100 | 53.00% |
| 37 | 4 | Finding Areas of Plane Regions | 36 | 100 | 36.00% |
| 37 | 8 | * Domains & Ranges of Inverse Trig Fns | 32 | 100 | 32.00% |
| 37 | 6 | Solving Trigonometric Equations | 24 | 100 | 24.00% |
| 37 | 5 | Graphing Trigonometric Functions | 22 | 100 | 22.00% |
| 37 | 7 | * Finding Trig Function Values w/ Identities | 22 | 100 | 22.00% |
| 37 | 1 | Triangle Congruence Proofs | 18 | 100 | 18.00% |
| 37 | 9 | Law of Sines and Law of Cosines | 12 | 100 | 12.00% |
| 37 | 3 | Solving Triangles Using Right Triangle Thms | 5 | 100 | 5.00% |
| 37 | 2 | Angle Measures Using Basic Theorems | 3 | 100 | 3.00% |
| 20 | 10 | Converting Polar Equations | 35 | 86 | 40.70% |
| 20 | 5 | Polar Graphing | 33 | 86 | 38.37% |
| 20 | 3 | Right Triangle Geometry | 27 | 86 | 31.40% |
| 20 | 7 | * Range of 1-1 Functions | 27 | 86 | 31.40% |
| 20 | 4 | * Translations and Transformations | 26 | 86 | 30.23% |
| 20 | 6 | Polynomial Graphing | 25 | 86 | 29.07% |
| 20 | 1 | Log Equations | 20 | 86 | 23.26% |
| 20 | 8 | Sequences | 14 | 86 | 16.28% |
| 20 | 9 | Series | 11 | 86 | 12.79% |
| 20 | 2 | Polynomial Factoring | 4 | 86 | 4.65% |
| 1 | 1 | * Epsilon Delta | 66 | 87 | 75.86% |
| 1 | 6 | * Mean Value Theorem | 40 | 87 | 45.98% |
| 1 | 10 | Volumes | 31 | 87 | 35.63% |
| 1 | 7 | Concavity | 19 | 87 | 21.84% |
| 1 | 9 | * Riemann Sum | 19 | 87 | 21.84% |
| 1 | 5 | Implicit Differentiation | 16 | 87 | 18.39% |
| 1 | 2 | Continuity | 14 | 87 | 16.09% |
| 1 | 8 | Integral | 3 | 87 | 3.45% |
| 1 | 4 | Computation of Derivative | 2 | 87 | 2.30% |
| 1 | 3 | Definition of Derivative | 1 | 87 | 1.15% |
| 2 | 5 | Taylor Series | 50 | 84 | 59.52% |
| 2 | 2 | * Natural Logarithm (Calculus) Definition | 37 | 84 | 44.05% |
| 2 | 8 | Inverse Trigonometric Derivatives | 25 | 84 | 29.76% |
| 2 | 9 | Interval of Convergence | 22 | 84 | 26.19% |
| 2 | 7 | Polar Area | 20 | 84 | 23.81% |
| 2 | 1 | * Geometric Series Convergence | 11 | 84 | 13.10% |
| 2 | 4 | Trigonometric Integrals | 11 | 84 | 13.10% |
| 2 | 10 | Improper Integral | 10 | 84 | 11.90% |
| 2 | 6 | L'Hopital's Rule | 8 | 84 | 9.52% |
| 2 | 3 | Integration by Parts | 2 | 84 | 2.38% |
| 3 | 9 | Divergence Theorem | 32 | 58 | 55.17% |
| 3 | 10 | * Line Integral Applications | 27 | 58 | 46.55% |
| 3 | 5 | Optimization | 23 | 58 | 39.66% |
| 3 | 4 | * Gradient Properties | 21 | 58 | 36.21% |
| 3 | 8 | Green's Theorem | 12 | 58 | 20.69% |
| 3 | 6 | Volumes | 7 | 58 | 12.07% |
| 3 | 1 | 3D Geometry | 5 | 58 | 8.62% |
| 3 | 7 | Spherical Integration | 5 | 58 | 8.62% |
| 3 | 2 | Tangent Vectors | 3 | 58 | 5.17% |
| 3 | 3 | Partial Derivatives | 3 | 58 | 5.17% |
| 4 | 10 | Laplace Transformations | 16 | 25 | 64.00% |
| 4 | 1 | * Existence and Uniqueness Theorem | 15 | 25 | 60.00% |
| 4 | 4 | Exact DE | 12 | 25 | 48.00% |
| 4 | 6 | * Definition of Fundamental Set | 8 | 25 | 32.00% |
| 4 | 9 | Power Series Solutions | 7 | 25 | 28.00% |
| 4 | 3 | First Order Linear DE | 3 | 25 | 12.00% |
| 4 | 8 | Higher Order Linear Differential Equations | 3 | 25 | 12.00% |
| 4 | 2 | Verifying Solutions | 1 | 25 | 4.00% |
| 4 | 7 | Variation of Parameters | 1 | 25 | 4.00% |
| 4 | 5 | IVP | 0 | 25 | 0.00% |
| 6 | 9 | Orthonormal Bases | 18 | 34 | 52.94% |
| 6 | 5 | Rank and Nullity of a Matrix | 11 | 34 | 32.35% |
| 6 | 8 | Linear Transformations | 11 | 34 | 32.35% |
| 6 | 10 | Eigenvectors and Eigenvalues | 9 | 34 | 26.47% |
| 6 | 7 | * Definition of Vector Spaces | 8 | 34 | 23.53% |
| 6 | 6 | Column Spaces | 5 | 34 | 14.71% |
| 6 | 1 | Gauss-Jordan Elimination Method | 4 | 34 | 11.76% |
| 6 | 4 | * Linear Independence | 3 | 34 | 8.82% |
| 6 | 2 | Inverse Matrices | 1 | 34 | 2.94% |
| 6 | 3 | Determinant | 1 | 34 | 2.94% |
| 8 | 4 | * Countability | 13 | 26 | 50.00% |
| 8 | 6 | Modular Arithmetic | 12 | 26 | 46.15% |
| 8 | 7 | * Proof by Contradiction | 11 | 26 | 42.31% |
| 8 | 3 | Sets | 10 | 26 | 38.46% |
| 8 | 10 | Counting Techniques | 10 | 26 | 38.46% |
| 8 | 9 | Discrete Probability | 5 | 26 | 19.23% |
| 8 | 2 | Symbolic Logic | 4 | 26 | 15.38% |
| 8 | 5 | Euclidean Algorithm | 3 | 26 | 11.54% |
| 8 | 8 | RSA Encryption | 3 | 26 | 11.54% |
| 8 | 1 | Rules of Inference | 0 | 26 | 0.00% |
| 103 | 3 | Graphing Fractions | 26 | 49 | 53.06% |
| 103 | 10 | * Proportions | 26 | 49 | 53.06% |
| 103 | 8 | Simplification of Fractions | 24 | 49 | 48.98% |
| 103 | 7 | Percents | 17 | 49 | 34.69% |
| 103 | 9 | * Contrasting Different Measurements | 10 | 49 | 20.41% |
| 103 | 1 | Words to Decimal Conversion | 8 | 49 | 16.33% |
| 103 | 5 | Decimal Division | 8 | 49 | 16.33% |
| 103 | 6 | Rounding | 6 | 49 | 12.24% |
| 103 | 2 | Adding Fractions | 4 | 49 | 8.16% |
| 103 | 4 | Decimal Subtraction | 3 | 49 | 6.12% |
| 104 | 5 | Application of Roots | 65 | 143 | 45.45% |
| 104 | 8 | Volume | 55 | 143 | 38.46% |
| 104 | 4 | Circular Computations | 47 | 143 | 32.87% |
| 104 | 10 | * Simplifying vs. Evaluating | 38 | 143 | 26.57% |
| 104 | 7 | Percents | 34 | 143 | 23.78% |
| 104 | 9 | * Interpretation of Computations | 30 | 143 | 20.98% |
| 104 | 2 | Evaluating Expressions | 25 | 143 | 17.48% |
| 104 | 3 | Linear Equations | 9 | 143 | 6.29% |
| 104 | 1 | Order of Operations | 8 | 143 | 5.59% |
| 104 | 6 | Square Roots | 7 | 143 | 4.90% |
| 65 | 9 | Percents | 91 | 192 | 47.40% |
| 65 | 5 | Solving System of Equations | 78 | 192 | 40.63% |
| 65 | 6 | Equations of Lines from Points | 55 | 192 | 28.65% |
| 65 | 10 | * Vertical Slopes | 49 | 192 | 25.52% |
| 65 | 4 | Simplifying Rational Expressions | 46 | 192 | 23.96% |
| 65 | 8 | Graphing Lines | 40 | 192 | 20.83% |
| 65 | 7 | * Explain "Canceling" | 35 | 192 | 18.23% |
| 65 | 2 | Algebra of Polynomials | 29 | 192 | 15.10% |
| 65 | 3 | Factor Trinomials | 11 | 192 | 5.73% |
| 65 | 1 | Linear Equations | 7 | 192 | 3.65% |
| 55 | 9 | Logarithmic Functions | 127 | 280 | 45.36% |
| 55 | 6 | Exponential Applications | 119 | 280 | 42.50% |
| 55 | 7 | * Definition of Function | 84 | 280 | 30.00% |
| 55 | 8 | Exponential Graphing | 81 | 280 | 28.93% |
| 55 | 3 | Inverses | 66 | 280 | 23.57% |
| 55 | 10 | Rationalizing Denominators | 61 | 280 | 21.79% |
| 55 | 4 | Complex Numbers | 43 | 280 | 15.36% |
| 55 | 5 | Radical Equations | 39 | 280 | 13.93% |
| 55 | 2 | Absolute Value Inequalities | 11 | 280 | 3.93% |
| 55 | 1 | Quadratic Formula | 4 | 280 | 1.43% |
| 31 | 9 | Exponential Models | 26 | 93 | 27.96% |
| 31 | 5 | * Determining Domains | 25 | 93 | 26.88% |
| 31 | 2 | Binomial Expansion | 23 | 93 | 24.73% |
| 31 | 8 | * Quadratic Modeling | 23 | 93 | 24.73% |
| 31 | 3 | Logarithmic Equations | 22 | 93 | 23.66% |
| 31 | 4 | Graphing of Rational Functions | 22 | 93 | 23.66% |
| 31 | 1 | * Interpret Graphs | 20 | 93 | 21.51% |
| 31 | 6 | Geometric Series | 18 | 93 | 19.35% |
| 31 | 10 | Rational Inequalities | 18 | 93 | 19.35% |
| 31 | 7 | Rational Equations | 6 | 93 | 6.45% |
| 15 | 7 | Exponential Models | 25 | 46 | 54.35% |
| 15 | 8 | Using Derivatives To Sketch A Graph | 18 | 46 | 39.13% |
| 15 | 5 | Related Rates | 16 | 46 | 34.78% |
| 15 | 10 | * Intermediate Value Theorem | 15 | 46 | 32.61% |
| 15 | 4 | Differentiation Rules For Exp And Logs | 11 | 46 | 23.91% |
| 15 | 9 | * Continuity At A Point | 11 | 46 | 23.91% |
| 15 | 1 | Evaluate Limits | 9 | 46 | 19.57% |
| 15 | 6 | Concavity And Inflection Points | 5 | 46 | 10.87% |
| 15 | 2 | Equations Of Tangent Lines | 3 | 46 | 6.52% |
| 15 | 3 | Find Derivatives Using Differentiation Rules | 2 | 46 | 4.35% |
| 16 | 8 | Continuous Random Variable Probabilities | 4 | 6 | 66.67% |
| 16 | 9 | Optimization Problems | 4 | 6 | 66.67% |
| 16 | 10 | Related Rates | 4 | 6 | 66.67% |
| 16 | 7 | Taylor Series Representation | 3 | 6 | 50.00% |
| 16 | 2 | Improper Integral | 2 | 6 | 33.33% |
| 16 | 4 | Double Intervals | 1 | 6 | 16.67% |
| 16 | 6 | Separable Differential Equations | 1 | 6 | 16.67% |
| 16 | 1 | Integration By Parts | 0 | 6 | 0.00% |
| 16 | 3 | Partial Derivatives | 0 | 6 | 0.00% |
| 16 | 5 | Differentiate A Trigonometric Functions | 0 | 6 | 0.00% |
| 54 | 10 | Variation | 33 | 59 | 55.93% |
| 54 | 3 | Applications of System of Equations | 26 | 59 | 44.07% |
| 54 | 5 | Rates | 20 | 59 | 33.90% |
| 54 | 8 | * Choosing an Appropriate Model | 14 | 59 | 23.73% |
| 54 | 6 | Exponential Models | 13 | 59 | 22.03% |
| 54 | 4 | Interpretation of Functional Models | 8 | 59 | 13.56% |
| 54 | 1 | * Interpret Linear Models | 7 | 59 | 11.86% |
| 54 | 2 | Equations for Parallel Lines | 5 | 59 | 8.47% |
| 54 | 7 | Exponential Equations | 5 | 59 | 8.47% |
| 54 | 9 | Quadratic Graphing | 5 | 59 | 8.47% |
| 53 | 10 | Variation | 46 | 97 | 47.42% |
| 53 | 4 | Empirical Rule for Normal Distributions | 38 | 97 | 39.18% |
| 53 | 1 | Geometry and Measurement | 32 | 97 | 32.99% |
| 53 | 3 | * Interpreting the Slope of a Line | 21 | 97 | 21.65% |
| 53 | 5 | Dimensional Analysis | 20 | 97 | 20.62% |
| 53 | 9 | Exponential Models | 19 | 97 | 19.59% |
| 53 | 6 | Linear Models for Real Situations | 18 | 97 | 18.56% |
| 53 | 7 | Function Notation | 12 | 97 | 12.37% |
| 53 | 8 | Scatterplots | 7 | 97 | 7.22% |
| 53 | 2 | Mean and Median | 5 | 97 | 5.15% |
| 43 | 5 | * Linear Regression | 146 | 357 | 40.90% |
| 43 | 7 | Binomial Distribution | 106 | 357 | 29.69% |
| 43 | 10 | Hypothesis Testing | 96 | 357 | 26.89% |
| 43 | 3 | * Interpreting Plots | 94 | 357 | 26.33% |
| 43 | 9 | Confidence Intervals | 91 | 357 | 25.49% |
| 43 | 8 | Normal Distribution | 61 | 357 | 17.09% |
| 43 | 4 | Using Box Plots | 52 | 357 | 14.57% |
| 43 | 1 | * Types of Studies | 45 | 357 | 12.61% |
| 43 | 2 | Finding Measures of Center and Spread | 42 | 357 | 11.76% |
| 43 | 6 | Conditional Probability | 34 | 357 | 9.52% |

Key:

* Questions requiring the student to provide an explanation rather than solve a problem.

** Percent of students having the question as a low outlier, defined as a topic with a student score at least one unit below the student's individual median.

Appendix D: Select Student Self Evaluation vs. Final Exam Performance

Math 1

| Topic | SLO Score |
| --- | --- |
| * Epsilon Delta | 75.86% |
| * Mean Value Theorem | 45.98% |
| Volumes | 35.63% |
| Concavity | 21.84% |
| * Riemann Sum | 21.84% |
| Implicit Differentiation | 18.39% |
| Continuity | 16.09% |
| Integral | 3.45% |
| Computation of Derivative | 2.30% |
| Definition of Derivative | 1.15% |

Final Exam (values in source order; the position of the blank entry is not recoverable): 71%, 82%, 53%, 47%, 29%, 29%, 41%, 24%, 18%

Math 20

| Topic | SLO Score |
| --- | --- |
| Converting Polar Equations | 40.70% |
| Polar Graphing | 38.37% |
| Right Triangle Geometry | 31.40% |
| * Range of 1-1 Functions | 31.40% |
| * Translations and Transformations | 30.23% |
| Polynomial Graphing | 29.07% |
| Log Equations | 23.26% |
| Sequences | 16.28% |
| Series | 12.79% |
| Polynomial Factoring | 4.65% |

Final Exam (values in source order; the positions of the blank entries are not recoverable): 67%, 78%, 33%, 67%, 28%, 33%, 33%, 22%

Math 55

| Topic | SLO Score | Final Exam |
| --- | --- | --- |
| Logarithmic Functions | 45.36% | 60% |
| Exponential Applications | 42.50% | 73% |
| * Definition of Function | 30.00% | 47% |
| Exponential Graphing | 28.93% | 53% |
| Inverses | 23.57% | 40% |
| Rationalizing Denominators | 21.79% | 33% |
| Complex Numbers | 15.36% | 27% |
| Radical Equations | 13.93% | 40% |
| Absolute Value Inequalities | 3.93% | 13% |
| Quadratic Formula | 1.43% | 13% |

Math 65

| Topic | SLO Score |
| --- | --- |
| Percents | 47.40% |
| Solving System of Equations | 40.63% |
| Equations of Lines from Points | 28.65% |
| * Vertical Slopes | 25.52% |
| Simplifying Rational Expressions | 23.96% |
| Graphing Lines | 20.83% |
| * Explain "Canceling" | 18.23% |
| Algebra of Polynomials | 15.10% |
| Factor Trinomials | 5.73% |
| Linear Equations | 3.65% |

Final Exam (values in source order; the positions of the blank entries are not recoverable): 86%, 71%, 64%, 57%, 43%, 14%, 29%, 14%

Math 8

| Topic | SLO Score |
| --- | --- |
| * Countability | 50.00% |
| Modular Arithmetic | 46.15% |
| * Proof by Contradiction | 42.31% |
| Sets | 38.46% |
| Counting Techniques | 38.46% |
| Discrete Probability | 19.23% |
| Symbolic Logic | 15.38% |
| Euclidean Algorithm | 11.54% |
| RSA Encryption | 11.54% |
| Rules of Inference | 0.00% |

Final Exam (values in source order; the position of the blank entry is not recoverable): 65%, 65%, 71%, 47%, 59%, 47%, 24%, 18%, 18%

SLO Scores are from the SLO Results Report and represent all sections of the course. Final Exam percentages are the proportion of students (from Robert Yest's Spring 14 sections only) who had some conceptual mistake/issue with the problem on the Final Exam.

Note: Blank Final Exam percentages mean that no specific question for that topic was asked on the Final Exam.

Appendix E: Student's Self-Assessment Expected Grade

| Course | # Students | A | B | C | D | F |
| --- | --- | --- | --- | --- | --- | --- |
| 37 | 96 | 19.8% | 38.5% | 32.3% | 5.2% | 4.2% |
| 20 | 85 | 27.1% | 27.1% | 32.9% | 9.4% | 3.5% |
| 1 | 87 | 18.4% | 41.4% | 29.9% | 9.2% | 1.1% |
| 2 | 80 | 22.5% | 38.8% | 36.3% | 1.3% | 1.3% |
| 3 | 56 | 37.5% | 33.9% | 26.8% | 1.8% | 0.0% |
| 4 | 24 | 41.7% | 33.3% | 16.7% | 8.3% | 0.0% |
| 6 | 32 | 59.4% | 28.1% | 9.4% | 3.1% | 0.0% |
| 8 | 26 | 15.4% | 57.7% | 23.1% | 3.8% | 0.0% |
| 31 | 91 | 20.9% | 41.8% | 29.7% | 5.5% | 2.2% |
| 15 | 45 | 20.0% | 37.8% | 35.6% | 4.4% | 2.2% |
| 16 | 6 | 33.3% | 33.3% | 16.7% | 0.0% | 16.7% |
| 54 | 59 | 6.8% | 35.6% | 44.1% | 6.8% | 6.8% |
| 53 | 90 | 11.1% | 31.1% | 41.1% | 11.1% | 5.6% |
| 43 | 343 | 31.8% | 37.9% | 25.7% | 2.9% | 1.7% |
| 55 | 275 | 18.5% | 34.2% | 42.2% | 4.0% | 1.1% |
| 65 | 186 | 10.8% | 38.2% | 43.0% | 6.5% | 1.6% |
| 103 | 47 | 19.1% | 42.6% | 31.9% | 0.0% | 6.4% |
| 104 | 137 | 19.0% | 34.3% | 34.3% | 9.5% | 2.9% |

Students were asked what grade they thought they would get.

Appendix F: Student's Self Assessed Success vs. Actual Success

| Course | SLO* | Actual | Difference |
| --- | --- | --- | --- |
| 37 | 74.48% | 62.18% | 12.30% |
| 20 | 70.59% | 67.65% | 2.94% |
| 1 | 74.71% | 74.19% | 0.52% |
| 2 | 79.38% | 86.67% | -7.29% |
| 3 | 84.82% | 84.13% | 0.69% |
| 4 | 83.33% | 80.00% | 3.33% |
| 6 | 92.19% | 94.59% | -2.41% |
| 8 | 84.62% | 92.31% | -7.69% |
| 31 | 77.47% | 75.61% | 1.86% |
| 15 | 75.56% | 72.22% | 3.33% |
| 16 | 75.00% | 66.67% | 8.33% |
| 54 | 64.41% | 57.45% | 6.96% |
| 53 | 62.78% | 47.68% | 15.10% |
| 43 | 82.51% | 81.48% | 1.03% |
| 55 | 73.82% | 64.84% | 8.98% |
| 65 | 70.43% | 54.65% | 15.78% |
| 103 | 77.66% | 60.49% | 17.17% |
| 104 | 70.44% | 65.96% | 4.48% |

* SLO success is measured by the number of “A” and “B” students + 50% of the students who marked a C.

Appendix G: Student's Confidence in Math

| Course | # Students | Improved | Same | Worse |
| --- | --- | --- | --- | --- |
| 37 | 95 | 71.6% | 18.9% | 9.5% |
| 20 | 84 | 46.4% | 41.7% | 11.9% |
| 1 | 87 | 66.7% | 24.1% | 9.2% |
| 2 | 83 | 72.3% | 21.7% | 6.0% |
| 3 | 56 | 69.6% | 23.2% | 7.1% |
| 4 | 25 | 80.0% | 12.0% | 8.0% |
| 6 | 33 | 75.8% | 21.2% | 3.0% |
| 8 | 26 | 88.5% | 7.7% | 3.8% |
| 31 | 93 | 62.4% | 30.1% | 7.5% |
| 15 | 46 | 78.3% | 17.4% | 4.3% |
| 16 | 6 | 33.3% | 50.0% | 16.7% |
| 54 | 59 | 62.7% | 28.8% | 8.5% |
| 53 | 93 | 61.3% | 23.7% | 15.1% |
| 43 | 348 | 74.4% | 18.7% | 6.9% |
| 55 | 275 | 68.7% | 24.7% | 6.5% |
| 65 | 185 | 68.6% | 23.2% | 8.1% |
| 103 | 47 | 72.3% | 27.7% | 0.0% |
| 104 | 134 | 67.9% | 23.9% | 8.2% |

Students were asked whether their confidence in math improved, remained the same, or worsened.
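
The self-assessed success measure footnoted in Appendix F (students expecting an A or B, plus half of those expecting a C) and its Difference column can be reproduced with simple arithmetic. The following is a minimal Python sketch; the function names are hypothetical, and the inputs are the rounded Appendix E percentages, so the result can differ from the reported SLO column in the second decimal place (for Math 37 it gives about 74.45% against the reported 74.48%).

```python
def slo_success(pct_a, pct_b, pct_c):
    """Self-assessed success: A and B students plus 50% of C students."""
    return pct_a + pct_b + 0.5 * pct_c


def success_gap(slo_pct, actual_pct):
    """Difference column: positive when students expect to do better
    than the course's actual success rate."""
    return slo_pct - actual_pct


# Math 37, using the expected-grade percentages from Appendix E.
math37_slo = slo_success(19.8, 38.5, 32.3)

# Math 37, using the SLO and Actual values reported in Appendix F.
math37_gap = success_gap(74.48, 62.18)
```

A positive gap indicates that students as a group anticipated more success than the course actually recorded; a negative gap indicates the reverse.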