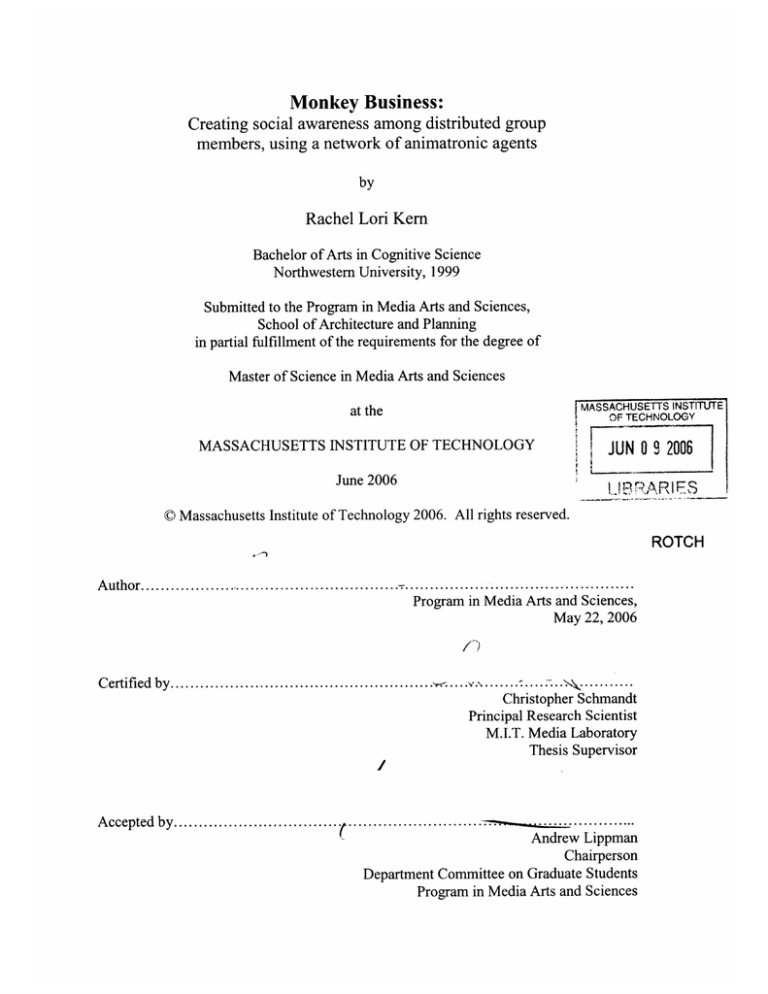

Monkey Business:
