§»1

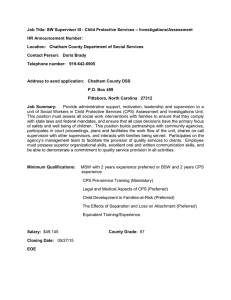

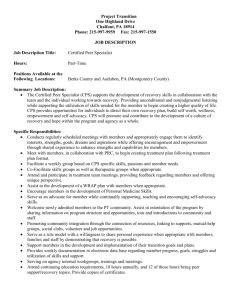

advertisement