Document 11114805

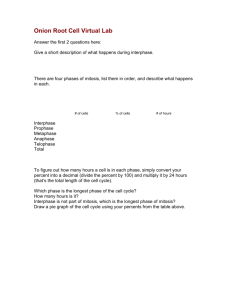

advertisement