HUMAN SUPERVISORY CONTROL

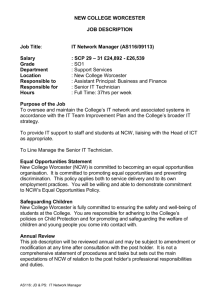
