The Role of New Technology in Transportation by

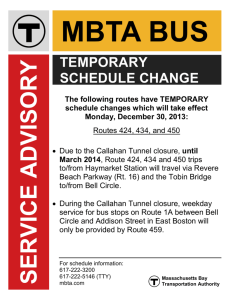
