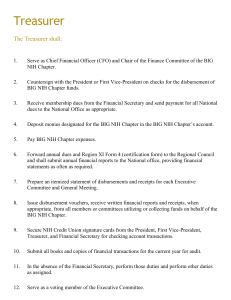

NBER WORKING PAPER SERIES

Brian Jacob
Lars Lefgren
