3D Reconstruction of Cuboid-Shaped Objects

from Labeled Images

by

rT 2 9 2013

Erika Lee

Submitted to the Department of Electrical Engineering and Computer

Science

in partial fulfillment of the requirements for the degree of

Master of Engineering in Electrical Engineering and Computer Science

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

September 2013

@ Massachusetts Institute of Technology 2013. All rights reserved.

A u tho r ..............................................................

Department of Electrical Engineering and Computer Science

August 9, 2013

Certified by

.

.............

Antonio Torralba

Associate Professor

Thesis Supervisor

Accepted by .......

............

.. ..................

......

Prof. Dennis M. Freeman

Chairman, Masters of Engineering Thesis Committee

2

3D Reconstruction of Cuboid-Shaped Objects from Labeled

Images

by

Erika Lee

Submitted to the Department of Electrical Engineering and Computer Science

on August 9, 2013, in partial fulfillment of the

requirements for the degree of

Master of Engineering in Electrical Engineering and Computer Science

Abstract

In this thesis, my goal is to determine a rectangular 3D cuboid that outlines the

boundaries of a cuboid-shaped object shown in an image. The position of the corners

of each cuboid are manually labeled, or annotated, in a 2D color image. Given the

color image, the labels, and a 2D depth image of the same scene, an algorithm extrapolates a cuboid's 3D position, orientation, and size characteristics by minimizing

two quantities: the deviation of each estimated corner's projected position from its

annotated position in 2D space and the distance from each estimated surface to the

observed points associated with that surface in 3D space. I found that this approach

successfully estimated the 3D boundaries of a cuboid object for 72.6% of the cuboids

in a data set of 1,089 manually-labeled cuboids in images taken from the SUN3D

database [12].

Thesis Supervisor: Antonio Torralba

Title: Associate Professor

3

4

Acknowledgments

Professor Antonio Torralba for supervising my research at CSAIL during my MEng

year and giving me the opportunity to explore this topic in computer vision with his

group.

Jianxiong Xiao for his support and indispensable advice throughout the year.

5

6

Contents

1

Introduction

11

1.1

Motivation for Reconstruction . . . . . . . . . . . . . . . . . . . . . .

11

1.2

Description of a Reconstructed Cuboid . . . . . . . . . . . . . . . . .

12

1.3

Description of Labeling . . . . . . . . . . . . . . . . . . . . . . . . . .

13

1.4

Related Works. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

13

2 Background

3

15

2.1

Projecting a Cuboid

. . . . . . . . . . . . . . . . . . . . . . . . . . .

15

2.2

Going from 2D to 3D . . . . . . . . . . . . . . . . . . . . . . . . . . .

17

2.2.1

The Cuboid . . . . . . . . . . . . . . . . . . . . . . . . . . . .

17

2.2.2

The Projection Equation . . . . . . . . . . . . . . . . . . . . .

18

2.2.3

Rotation, Translation, and Scale . . . . . . . . . . . . . . . . .

19

2.2.4

Intrinsic Camera Parameters . . . . . . . . . . . . . . . . . . .

19

System Overview

21

3.1

Capturing Color and Depth

. . . . . . . . . . . . . . . . . . . . . . .

21

3.2

Labeling a Cuboid

. . . . . . . . . . . . . . . . . . . . . . . . . . . .

22

3.3

Bundle Adjustment . . . . . . . . . . . . . . . . . . . . . . . . . . . .

23

3.4

Initializing the Parameters . . . . . . . . . . . . . . . . . . . . . . . .

24

3.4.1

Fitting Planes with RANSAC

. . . . . . . . . . . . . . . . . .

24

3.4.2

Making the Planes Orthogonal . . . . . . . . . . . . . . . . . .

25

3.4.3

Solving for Corner Positions . . . . . . . . . . . . . . . . . . .

25

3.4.4

Estimating Initial Cuboid Parameters . . . . . . . . . . . . . .

27

7

4 Results

29

4.1

Data Set and Results . . . . . . . . . . . . . . . . . . . . . . . . . . .

29

4.2

Edge Cases

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

29

4.2.1

Cuboids with Only One Visible Face . . . . . . . . . . . . . .

29

4.2.2

Cuboids Missing a Face

. . . . . . . . . . . . . . . . . . . . .

31

4.2.3

Cuboid Deformation . . . . . . . . . . . . . . . . . . . . . . .

31

4.2.4

Reflective or Clear Surfaces

. . . . . . . . . . . . . . . . . . .

33

4.2.5

Occlusion by Other Objects . . . . . . . . . . . . . . . . . . .

35

4.2.6

Labeling Outside of the Image Boundary . . . . . . . . . . . .

35

4.2.7

Objects Outside of the Depth Sensor's Distance of Use . . . .

37

4.2.8

Inaccuracies in Annotations . . . . . . . . . . . . . . . . . . .

37

5 Conclusion

39

5.1

Contribution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

39

5.2

Suggestions for Future Work . . . . . . . . . . . . . . . . . . . . . . .

39

8

List of Figures

1-1

3D Reconstruction

2-1

. . .

... ... ... ... .... ... ..

12

Perspective Projection

... ... ... ... ... .... ..

16

2-2

Intrinsic Parameters

... ... ... ... .... ... ..

17

2-3

A Unit Cube

... ... ... ... ... .... ..

18

3-1

Color and Depth Images

... ... ... ... .... ... ..

22

3-2

The SUN Labeling Tool

... ... ... ... .... ... ..

23

4-1

Reconstruction Results.....

... ... ... .... ... ... ..

30

4-2

Edge Case: Cuboid with One Vis ible Face . . . . . . . . . . . . . . .

31

4-3

Edge Case: Cuboid Missing a Fa ,e

4-4

Edge Case: Cuboid Deformation . . . . . . . . . . . . . . . . . . . . .

33

4-5

Edge Case: Black Reflective Surf,ace . . . . . . . . . . . . . . . . . . .

34

4-6

Edge Case: Occlusion . . . . . . . . . . . . . . . . . . . . . . . . . . .

35

4-7

Edge Case: Outside of Image Bo uindaries . . . . . . . . . . . . . . . .

36

4-8

Edge Case: Outside of Sensor's Distance of U se . . . . . . . . . . . .

37

4-9

Edge Case: Annotation Error

38

. . . . . .

.32

. . . . . . . . . . . . . . . . . . . . . .

9

10

Chapter 1

Introduction

1.1

Motivation for Reconstruction

Human vision captures information in series of 2D pictures; at any point in time, we

see a 2D image of the scene in font of us. Yet, we have no trouble understanding how

to interact with a 3D world. For example, suppose there is a cup of coffee sitting at

eye level. Even though you can only see one side of the cup, your brain is somehow

able to fill in the rest of it; you can tell how big that cup is, how that cup is shaped,

how far away it is, etc. even though you can only see one side of that cup. For

most people, this scenario is a natural process that requires relatively little conscious

thought.

Similarly, computers see using cameras. In a picture, each pixel's color value

contributes to a creating a cohesive image. However, without further processing, that

is all that the pixels are -a

series of color values. Computers do not automatically

have an semantic understanding of what items are in a scene, how much space those

items take up, or how the item looks from the other side. There is an gap between

mere color values and semantic meaning that needs to be filled before computers are

able to conduct the same perception tasks that our brains are naturally wired to

do. The concept of 3D reconstruction is an attempt at taking a step towards filling

that gap by building a 3D model which represents the shape and geometry of objects

visible in a 2D picture.

11

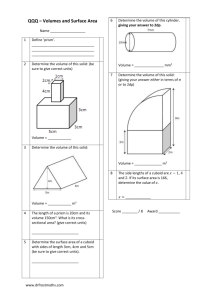

Figure 1-1: A table reconstructed from a 2D image, seen from different angles.

1.2

Description of a Reconstructed Cuboid

Cuboids are a category of 3D shape encompassing geometries composed of six faces

connected at right angles, including cubes and rectangular prisms. A unique cuboid

can be constrained by three properties: size, position, and orientation. The goal of

this thesis is to estimate these these properties to a reasonable approximation of what

they are in reality for a given object. In a successful estimate, the estimated cuboid

looks like a bounding box of the targeted cuboid-shaped object.

Numerous artificial items are cuboid-shaped, such as boxes, books, cabinets, etc.

Being able to identify the 3D properties of cuboid-shaped objects can potentially

be helpful in areas such as object recognition and scene understanding. This thesis

discusses an approach to find a cuboid-shaped object's position, orientation, and size

from a 2D color image, a 2D depth image, and some labels.

12

1.3

Description of Labeling

Locating and recognizing objects in an image is a large component in scene understanding, and it poses a significant question in the field of computer vision. Image

labeling is one way to accomplish this task. Labels are manually added to an image to

indicate where an object exists. They are intended to provide some information about

the content of the image and by extension some indication how the image should be

processed.

There are several ways in which these labels can be recorded; a popular approach

is asking a user click points and enclose a polygon that outlines the object of interest

[8, 10]. For the purposes of this thesis, the user labels a cuboid-shaped object by

specifying where the corners of that object appear in the image, thereby constraining

the boundaries of the object.

Because labeling is done manually, it is a labor-intensive process.

However it

remains an valid approach because manual labeling offers a higher rate of accuracy

than automatic algorithms. People do a much better job of recognizing objects and

segmenting which parts of an image correlates to what object than current automatic

algorithms that attempt to do general object recognition. In addition, user annotations can ultimately provide training examples for studies in related fields that use

machine learning. These manually-labeled instances could perhaps shed some insight

on the processes that our visual system undergoes which allows us to segment the

objects in these images so naturally.

1.4

Related Works

There have been a number of works published on cuboid fitting. One approach includes training a system on the color gradients of several variations of cuboid corners.

The gradients are matched to an image to determine the position of the corner in the

image, and therefore the location of the cuboid [13]. Other works have relied on the

geometry of the scene to estimate cuboid location, by using perspective and doing

13

edge detection to find potential fits for the cuboid and the edges and corners of room

[4, 7].

Whereas the works described above relies primarily on processing a color image,

the approach discussed in this thesis relies primarily on processing the depth map and

user-provided labels to segment the objects of interest. There have been approaches

that attempt to extrapolate the depth from the color image by guessing the orientation

of every visible surface in the room [7]. Another common approach to find depth

information in general is to use multiple cameras to capture the same scene at slightly

different viewpoints. By contrast, the approach in this thesis uses a single camera

that produces two different images from the same viewpoint.

Two labeling frameworks of note are LabelMe

[8, 10] and SUN

[12], both of

which are image labeling tools developed at the Vision group at CSAIL. Both are

publicly accessible, and use an interface that asks the user to click on a color image

to label its contents. LabelMe3D, an extension of LabelMe, explores extrapolating

3D information from labeled 2D polygons, but does support labeling cuboids at this

point in time [9].

14

Chapter 2

Background

This section highlights the geometry and mathematical concepts relevant to the topic

of this thesis. The ideas are well established and discussed at length in reference texts

such as [3]. Therefore concepts that are considered common knowledge in the field

(such as perspective projection, homogeneous coordinates, intrinsic camera parameters, etc.) are left without further citation in this section.

2.1

Projecting a Cuboid

In order to estimate the 3D characteristics of a cuboid-shaped object from a picture,

it is useful to examine how a 2D image correlates to its 3D counterpart. A camera

projects one viewpoint of a 3D world onto a plane in a manner described as perspective

projection. The color of each pixel on the resulting image plane is determined by the

visible object in the 3D world that intersects with the ray that passes through the

camera origin and that pixel on the image plane.

Focal length and principle point are the intrinsic parameters of a camera; they

describe the measurements of perspective projection. The focal length values

(f.

and fy) determine the distance between the camera lens and the image plane. The

principle point (px, py) refers to the point of intersection of the perpendicular line

from the image plane to the camera origin. The principle point is typically very close

to the center of the image.

15

object

camera

projected

image

Figure 2-1: In perspective projection, each pixel of an image is determined by the

intersection of the 3D scene and the ray through the camera origin and that pixel.

Assuming that there is a way to derive the distance from the camera to an object

from the depth image, it is possible to estimate the 3D coordinates of the points in a

scene using similar triangles. The following equations express these relationships:

Z = depth-map(x, y)

Px) Z

fX

X -(x

Y _ (x-PY).Z

fy

(2.1)

(2.2)

(2.3)

where (x, y) refer to a point in image coordinates and (X, Y, Z) refer to the corresponding point in real world coordinates.

16

side view

I

fYI jpy

,,tffw

camera

origin

Z

image

plane

Figure 2-2: A camera's intrinsic parameters are defined by its focal length and principle point.

2.2

2.2.1

Going from 2D to 3D

The Cuboid

Generally, a cuboid can be represented by the positions of its 8 corners. To maintain

consistency, each corner is assigned a number from 1 through 8, and each visible face

is assigned a number from 1 through 3. The corners are numbered such that for every

cuboid, each face has the same set of 4 corners, as shown in Figure 2-3.

The corner positions of the unit cube centered at (0, 0, 0) can be represented as

follows:

X3D

=

-1

-1

-1

1 -1

1

1

1

1

1

-1

1 -1

-1

1

-1

1

-1

1

1 -1

1

-1

-1

1

1

1

1

1

1

1

1

(2.4)

Each column of the first three rows correspond to the X, Y, and Z positions of

17

y

31

5

1

3

4

3

z

Figure 2-3: A unit cube centered at (0,0,0). Each of its 8 corners and 3 visible faces

are numbered in this fashion. Corner 8 is not visible in this representation.

corners from 1 through 8.

2.2.2

The Projection Equation

Each annotated corner with position (x, y) in the 2D image can be mapped to its 3D

world coordinates (X, Y, Z). The projection of 3D coordinates onto a 2D plane can

be written as:

X2D =

where

X2D

PX

3D

(2.5)

takes the form:

X2D

~

X1

X2

X3

X4

X5

X6

X7

X8

Yi

Y2

Y3

Y4

Y5

Y6

Y7

Y8

L1

1

1

1

1

1

1

P, the projection matrix, can be further broken down into:

18

(2.6)

P= K x [Rlt] x S

2.2.3

(2.7)

Rotation, Translation, and Scale

[Rlt] is a 4x4 matrix that combines a regular 3x3 rotation matrix, R, and a vector,

t, representing the center of the cuboid after translation. The matrix takes the form:

R

[Rjt] =

t

(2.8)

with the bottom row being all zeros except for the last element.

The R matrix can be translated into angle-axis notation, which is a vector of three

elements that represents the axis of rotation. The magnitude of rotation is encoded by

the magnitude of the vector. This reduces the number of free parameters for rotation

from nine to three.

Since the t component of the matrix consists of the X, Y, Z values of the center

of the cuboid, the number of free parameters for translation is three.

S is a standard scaling matrix of the form:

W

S_ S=0

0

0

0

0

h 0 0

0 d 0

0

0 0

.9

(2.9)

1

where w is the width, h is the height, and d is the depth of the cuboid. Thus,

scale also has three free parameters, for a total of nine free parameters to optimize

for.

2.2.4

Intrinsic Camera Parameters

K is a matrix representing the intrinsic parameters of the camera of the form:

19

fx0 P.

K=

0

fy p

0

0

(2.10)

1

Multiplying a set of 3D corners by K gives the corners' projected locations by the

relations discussed in section 2.1. The corner positions are converted from homogeneous coordinates back to Cartesian coordinates before being multiplied by the K

matrix.

20

Chapter 3

System Overview

The tasks involved in completing this thesis includes capturing videos of different

scenes, labeling cuboid-shaped objects in extracted frames, and solving for the cuboid

for each labeled object.

3.1

Capturing Color and Depth

The SUN database consists of videos of various rooms and indoor spaces. The images

in this thesis are randomly sampled frames from the videos in SUN. Data is captured

with the Asus Xtion camera, which contains a regular RGB camera and a depth

sensor. These images, along with the labels provided by the user, serve as the input

for the algorithm. The depth map is much like the regular image, except each pixel

corresponds to a value that can be used to derive the how far that point is away from

from the camera instead of a color value.

Ideally, the depth sensor would give an accurate depth reading for all pixels;

however, the sensor fails in a number of situations. The sensor gives no readings

near the top, bottom, and right image boundaries of the image. In addition, certain

surfaces leave large gaps in the depth map, and there also tends to be small gaps

around the boundaries of objects. Accurate readings for those pixels are unavailable.

The implications of the depth sensor's limitations are discussed in more depth in the

results section 4.2.

21

Figure 3-1: An RGB image and a depth image captured by the Asus Xtion camera.

There are gaps in the depth map for some of the items in the far background, around

the back outline of the table, and around the top, right, and bottom borders of the

image.

The significance of introducing a depth sensor for this project is that it provides

an absolute depth reading, which can be used to determine absolute scaling.

In

perspective projection, a large object far away from the camera and smaller object

close to the camera could look exactly the same in 2D projection if viewed from the

right angle. Thus, absolute scaling is difficult to determine. However, a depth sensor

removes that ambiguity since the exact depth of objects in a scene becomes known.

3.2

Labeling a Cuboid

Labels refer to the manual annotations to an image that provide information about the

image's content, and specifically in this thesis, which parts of the image correspond to

a cuboid-shaped object. The labels play a pivotal role in discerning the orientation,

position, and size of the cuboid. To label a cuboid with the SUN labeling tool, the user

chooses the viewpoint that best matches that of the object in the image, then clicks

on the corners of the cuboid in the color image. For a cuboid, the corners define face

boundaries; the pixels enclosed by the corners of a face are also the pixels associated

with the face of the cuboid.

The labeling provides some insight of the cuboid's

geometry as well, since all the visible faces of a cuboid from a given viewpoint must

22

~3DC

Figure 3-2: The SUN labeling tool interface allows users to label cuboids on a color

image [12].

be adjacent.

From any perspective there are 1, 2, or 3 visible faces of a cuboid. The number

of visible corners are 4, 6, and 7, depending on the number of faces which are visible.

For a cuboid with 1 visible face, the problem is not constrained enough to solve for a

unique cuboid. Thus, this thesis explores the cases with 2 or 3 visible faces.

The primary annotation file format this system uses is compatible with the SUN

annotation tool [12]. However, the system is also compatible with an .xml file format

similar in structure to LabelMe's annotation file [8, 10].

3.3

Bundle Adjustment

Bundle adjustment is a standard technique used to estimate the values of a cuboid's

parameters [3, 11].

This thesis's approach minimizes two measurements: 1.

the

2D distance between a labeled corner position and its location after projecting the

estimated 3D point onto the image and 2. the 3D distance between each observed

point from the estimated plane.

This value to be minimized can be expressed by the following:

23

(X 2 D- P x X3 D) + A

cECorners

(distance(p,m))

(3.1)

pEPlanes mEPointCloud

where Corners is the annotated corners of the cuboid, A is some weight, Planes is

the set of estimated planes of the cuboid, PointCloud is a set of points associated

with plane p, m is a point in the point cloud associated with p, and distance(p,m)

computes the perpendicular distance between a plane p and point m.

The library used to solve for the parameter values to minimize error for this thesis

is ceres-solver, which was developed at Google

[1]. The solver iteratively tries to

solve for the best parameter values for orientation, position, and size for a cuboid to

minimize the expression above.

3.4

Initializing the Parameters

As described in section 2.2.3, a cuboid object's orientation, position, and size can

be encoded as rotation, translation, and scale matrices. Given the constraints in

the section above 3.3, a solver should yield values for the free parameters. Because

the quality of the results can be improved with good initialization, determining a

reasonable set of initial parameters is reasonably important.

3.4.1

Fitting Planes with RANSAC

The first step to making an initial guess of the cuboid parameters is to extract the

point cloud associated with each face. This is accomplished by choosing all of the 2D

points enclosed by the corners of a face and converting them to 3D using equations

(2.1), (2.2), (2.3).

for each visible face

Then, the RANSAC algorithm is used to find the best-fit plane

[2].

This process involves taking a random sample of three 3D

points from a face and seeing how many other points in the point cloud falls onto the

plane defined by these three points. After some number of iterations, the plane that

yields the most matches is selected as the best-fit plane. It is possible to evaluate

24

how confidently each plane fits by taking the number of points in the point cloud that

fall in the plane. This metric of confidence favors faces of higher surface area, which

usually does correspond correctly to a more confident fit.

Although RANSAC does not guarantee the correct answer, if more than 50% of

a plane's points lie within the correct plane, n iterations of RANSAC will yield a

successful result with a probability of at least 1 - (.5)n, which comes out to be greater

than 0.99999 for n = 20.

3.4.2

Making the Planes Orthogonal

After determining the best-fit plane for each visible face of the cuboid, the planes are

adjusted so that each face is orthogonal to each other, if the planes are not already.

The plane with the highest confidence score is left untouched. The second plane's

normal is determined by projecting two points along the second most confident plane's

normal onto the first plane. If the second plane is just slightly misaligned, this should

only slightly adjust the plane's orientation while producing a normal that lies in the

first plane. Thus, the second plane will be orthogonal to the first plane. The third

plane's normal is determined by taking the cross product of the normals of the first

two planes. The third plane's normal will be orthogonal to the normals for the first

two planes.

Thus, all three planes will be orthogonal to each other. Even if the

original object had only two visible faces, this third plane can be derived once the

first two planes are fixed.

3.4.3

Solving for Corner Positions

The algorithm uses two techniques to solve for the 3D positions of the corners. The

first gets the 3D position of each corner from equations (2.1),

(2.2),

(2.3), then

adjusts each corner by projecting it onto the intersection of all of the fitted planes

that the corner sits on. If the corner only sits on one of those faces, the corner is

simply projected onto that plane instead of an intersection of multiple planes. This

technique shifts the position of a 3D point so that it lies exactly on the orthogonal

25

planes; however, it fails when the depth map reading is not available for that corner's

2D position, which can occur when the depth sensor simply fails for that pixel or

because the labeled corner is outside of the captured image.

The second technique uses perspective projection and projects the labeled 2D

point on the image plane, along the ray drawn from the camera origin, onto one of

the fitted, orthogonal planes that the corner lies on. If the corner lies on more than

one plane, the algorithm chooses the plane that is most orthogonal to the ray drawn

from the camera center through the point in the image plane. The most orthogonal

plane, determined by calculating the cross product of the ray and the plane's normal,

is chosen because this technique tends to fail if the ray projects onto a plane parallel

to the ray. A labeling error of a few pixels can make a very large difference in the

final 3D position of the point if the ray and plane are close to parallel.

In both techniques, the point could be adjusted to a position that does not correspond to its original observed value since the fitted orthogonal planes have been

adjusted after the fitting.

Because the two techniques fail in different circumstances, a combination of both

yields better results than just one technique by itself. This thesis combines the two

techniques to make two different approaches. First, the system makes a pass through

all the corners with the first technique, then makes a second pass with the second

technique on the points that failed the first pass. The system saves the results, clears

all the corners, then makes a pass through all the corners again with the second

technique first before making a pass with the first technique on the points that failed

the first pass the second time around. Trying both sequences and picking the better

set of corners tends to yield more accurate results than just trying one option or

the other. Thus, the algorithm yields two sets of corners. A corner will fail both

techniques when there is no depth reading and only relatively parallel planes for the

corner to project onto, or if the corner is not visible from the image plane (which is

always true for corner 8, and true for corner 5 for cuboids with only two visible faces).

If a cuboid is fully constrained, the position of the remaining corners can be

solved by some additive combination of the known corners. The known corners need

26

to establish the properties of width, height, and depth. This occurs when five or

more corners are already known, or when four corners are known as long as the four

corners are not all along the same face. If one set of corners is under-constrained, the

set is discarded. If both approaches result in an under-constrained set of corners, the

algorithm fails.

Because this approach can return multiple sets of corners, each set is assigned

a score that approximates how good the guess is. The set with the higher score is

chosen to extract orientation, position, and size parameters. The score measures the

integrity of the cuboid by two metrics. The first is the fraction of points in each point

cloud that fall within the boundary defined by the corners of that face. The second is

how similar in length each of the edges are for each dimension of width, height, and

depth. A cuboid with significantly uneven edge lengths along the same dimension is

unlikely to be a good estimate.

3.4.4

Estimating Initial Cuboid Parameters

The final step is to convert the set of corners to a true cuboid, represented by the

parameters as described in 2.2.3. The scale of the cuboid is approximated by the

average length of the edges of the resulting set of corners. The translation of the

cuboid is approximated by taking the average position values of each of the corners.

These values can easily be translated to matrix form.

The orientation, represented by the rotation matrix, is solved for using the following equation [5]:

R=M x M 7

(3.2)

where the goal coordinate system of the cuboid is:

M=

0

0 1

0

1

-1

27

0

0 0

(3.3)

as represented by figure 2-3. The rows represents the normals for faces 1, 2, and 3.

The initial coordinate system, M, is also a 3x3 matrix, where each row represents

the normals of the fitted orthogonal planes 1, 2, and 3:

X 1 Y1

Zi

Mr= X 2 Y2 z 2

X3 Y3 Z3

The resulting rotation matrix can then be converted to angle-axis format.

28

(3.4)

Chapter 4

Results

4.1

Data Set and Results

To construct a data set of images, I randomly sampled 7,130 images from the SUN3D

database. Of these images, there were a total of 675 images containing 1,089 two

or three face cuboids. The experiment yielded a success rate of 72.6%, with 791

successful and 298 failed reconstructions. On average, each cuboid took about 3.2

seconds to process. This is fast enough perhaps for a visualization tool for a labeling

database, but not quite fast enough for more time-sensitive applications.

4.2

Edge Cases

This section discusses various edge cases that arose. All of these cases except for the

first were included in the results.

4.2.1

Cuboids with Only One Visible Face

Cuboid-shaped objects that have only one visible face in an image are not constrained

enough for this algorithm to solve for its 3D reconstruction. These cases were discounted from the results.

29

Figure 4-1: Example of a reconstructed scene with three cuboid-shaped objects.

30

Figure 4-2: Example of a image with a cuboid-shaped object with only one visible

face (labeled in red).

4.2.2

Cuboids Missing a Face

Certain objects are cuboid-shaped, but may be "open" or missing a part of a face or a

whole face. For example, a box without a cover has five solid faces, but its open face

may be visible to the camera. This causes the algorithm to fail in some cases, since

the point cloud for that face no longer resembles a plane, thus causing the algorithm

fit the wrong plane to the point cloud. It is arguable whether objects in this category

should be considered a cuboid since they do not actually have six faces.

4.2.3

Cuboid Deformation

Few objects in reality are actually perfect cuboids. Most are at least slightly deformed.

Although the deformation is not usually an issue, an item that deviates too much in

31

I

Figure 4-3: Example of a reconstructed object with a missing face. This particular

object was a successful example.

32

Figure 4-4: Example of a reconstructed object that deviates somewhat from a cuboid.

The algorithm still attempts to fit a cuboid to the object.

shape from an actual cuboid can cause the algorithm to fail.

4.2.4

Reflective or Clear Surfaces

The depth sensor fails to return accurate readings for certain surfaces. For example,

it ignores clear surfaces and gives incomplete readings for reflective black or metallic

surfaces. In mirrors, it returns the distance of the object shown in the mirrored

image. These cases can cause the algorithm to fail, although some objects with

reflective surfaces were still successful if enough of the depth reading was usable for

reconstruction.

33

Figure 4-5: Example of a reconstructed object with a black reflective surface that

returns poor depth reading. A large chunk of the pixels on the object are blacked out

in the depth image.

34

Figure 4-6: Example of a reconstructed object that is partially occluded. The points

associated with the occluding objects causes the estimated cuboid to be shifted upward from its actual position.

4.2.5

Occlusion by Other Objects

Objects in a scene are often at least partially occluded. Not only does occlusion make

accurate labeling more difficult, but the points associated with the occluding object

can also skew the estimated translation parameters.

4.2.6

Labeling Outside of the Image Boundary

Some objects are partially cut off at the edge of the image. Both the labeling tool

and the reconstruction algorithm handles this as long as at least two faces of the

object have enough points in the image to fit a plane to. The object is successfully

reconstructed in most cases.

35

Figure 4-7: Example of a object that has some of its visible faces cut off from the

image. Enough of the object is preserved for reconstruction.

36

Figure 4-8: Example of a labeled object that cannot be reconstructed because no

depth reading is available. The depth map is completely black for parts of the picture

that are too far away from the depth sensor.

4.2.7

Objects Outside of the Depth Sensor's Distance of Use

According to the Asus Xtion's specification, its depth sensor reads between 0.8 to 3.5

meters. In a few cases, the cuboid is out of the range of the depth sensor. This tends

to occur in large spaces such as lecture halls. Although the RGB camera still captures

the image of the cuboid, the algorithm fails because there is no depth reading for the

object.

4.2.8

Inaccuracies in Annotations

For cuboid faces that are very parallel to the camera's viewpoint, mislabeling a corner

by a few pixels can appear correct upon visual inspection, but result in a significant

error during the reconstruction. Human errors in labeling suggest that perhaps the

annotations should not be taken as strict constraints, or perhaps that there could be

some automatic readjustment of labeled corner positions for better results. Another

possibilty is a more fine-grain interface for labeling the images.

37

Figure 4-9: Example of a object that is slightly mislabeled. The boundaries of the

top face is difficult to discern in the color image. Because the angle of the top

face is reasonably parallel to the viewpoint the camera, this mislabeling results in a

reconstructed cuboid that is significantly too large for the object. In this case, the

estimated cuboid juts out beyond the back wall.

38

Chapter 5

Conclusion

5.1

Contribution

This thesis proposes a method for reconstructing a 3D cuboid that estimates the size,

location, and orientation of a cuboid-shaped object. The reconstruction is accomplished using depth images and user-provided labels of color images. I evaluated this

approach on a data set constructed from images taken from the SUN3D database.

This data set and the performance results could serve as a reference for future attempts at cuboid reconstruction. Alternatively, it could also be used as a potential

training set for algorithms that rely on learning to automatically recognize cuboidshaped objects in an image such as [6].

5.2

Suggestions for Future Work

Potential future improvements might include gracefully handling some of the edge

cases, especially occlusion since occlusion occurs very frequently in images. More

than half of the objects labeled in the data set were at least partially occluded.

One approach could be to identify when occlusion occurs and ignore the occluding

points, which might be feasible since occluding points will have a closer depth reading

than the actual cuboid. Another possibility is to integrate occlusion boundaries into

the labeling tool so the algorithm is aware that a part of the image is occluded.

39

LabelMe3D has already begun to explore this option

[91.

Another change could be to include more information in cuboid labels. If the labeling included information about the general orientation of each face, the reconstruction

algorithm could become more constrained and potentially more robust. This change

would improve cases such as the hollow objects that are missing a face since a plane

fit to the wrong general orientation would not be attributed very high confidence.

And finally, a similar approach as the one described in the thesis can be extended

to apply to other commonly occurring geometric shapes such as cylinders. This would

increase the types of objects that could be reconstructed by the algorithm.

40

Bibliography

[1] Sameer Agarwal and Keir Mierle. Ceres Solver. https: //code .google. com/p/

ceres-solver/.

[2]

M. Fischler and R. Bolles. Random sample consensus: A paradigm for model

fitting with applications to image analysis and automated cartography. Communications of the ACM, 24, 1981.

[3] R. I.Hartley and A. Zisserman. Multiple View Geometry in Computer Vision.

Cambridge University Press, ISBN: 0521540518, second edition, 2004.

[4] Varsha Hedau, Derek Hoiem, and David Forsyth. Thinking inside the box: Using

appearance models and context based on room geometry. In Kostas Daniilidis,

Petros Maragos, and Nikos Paragios, editors, Computer Vision ECCV 2010,

volume 6316 of Lecture Notes in Computer Science, pages 224-237. Springer

Berlin Heidelberg, 2010.

[5] B.K.P. Horn. Closed-form solution of absolute orientation using unit quaternions.

Journal of the Optical Society of America A, 4, 1987.

[6] H. Jiang and J. Xiao. A linear approach to matching cuboids in rgbd images.

Proceedings of 26th IEEE Conference on Computer Vision and Pattern Recognition, 2013.

[7] David C. Lee, Abhinav Gupta, Martial Hebert, and Takeo Kanade. Estimating

spatial layout of rooms using volumetric reasoning about objects and surfaces.

Advances in Neural Information Processing Systems, 24, 2010.

[8] B. C. Russell and A. Torralba. LabelMe: A database and web-based tool for

image annotation. InternationalJournal of Computer Vision, 77, 2008.

[9] B. C. Russell and A. Torralba. Building a database of 3D scenes from user

annotations. Computer Vision Pattern Recognition, 2009.

[10] A. Torralba, B. C. Russell, and J. Yuen. LabelMe: Online image annotation and

applications. Proceedings of the IEEE, 98, 2010.

[11] Bill Triggs, Philip Mclauchlan, Richard Hartley, and Andrew Fitzgibbon. Bundle

adjustment A modern synthesis. In Vision Algorithms: Theory and Practice,

LNCS, pages 298-375. Springer Verlag, 1999.

41

[12] J. Xiao, J. Hays, K. A. Ehinger, A. Oliva, and A. Torralba. SUN database:

Large-scale scene recognition from abbey to zoo. Computer Vision and Pattern

Recognition, 2010.

[13] J. Xiao, B. C. Russell, and A. Torralba. Localizing 3D cuboids in single-view

images. Neural Information Processing Systems, 2012.

[14] Jianxiong Xiao. Multiview 3D reconstruction for dummies. https://6.869.

csail.mit .edu/f a12/lectures/lecture3D/SFMedu.pdf, October 2012.

42