WARPAL: Identify and Use Slack Cycles

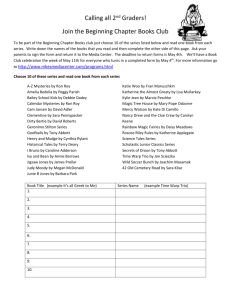

Introduction

With GPUs and CPUs on the same chip, the memory system becomes more contended. GPUs typically have high IPCs, so system performance suffers immensely if they are deprioritized. However, if the GPUs are prioritized for long periods to ensure high performance, the other on-chip cores will starve.

WARPAL

Our Idea: Identify and Use Slack Cycles

The number of non-stalled warps and the IPC are used to identify slack in the system.

[Figure: timelines of Warps 1 to 8 showing Compute and Stall phases, alongside the CPU stall and numbered issue slots.]

[Flowchart, node labels as recovered: GPU core present? (no / yes); sort GPU requests by number of stalled warps; max. stall request > threshold? (yes); CPU request present? (yes); service CPU request using TCM/ATLAS/PAR-BS; service request.]

The threshold is the number of stalled warps above which the GPU will be prioritized. It is computed dynamically based on IPC over the previous epoch.

Architecture

The GPU is composed of 16 cores that carry out FGMT on 32 warps. The CPUs are 3-wide superscalar engines. Individual L1 caches (128 KB) are present along with a shared L2 cache (2 MB).

[Figure: chip layout with GPU-Core 0 through GPU-Core 15 and four SS CPU cores.]

Results

[Figure: weighted speedup and fairness (harmonic speedup) for CPU1 to CPU4 and the GPU across the workloads SSSP_S, SSSP_D, WP_S, WP_D, STMCL_S, STMCL_D, BFS_S, BFS_D, CFD_S, CFD_D, BACKP_S and BACKP_D.]

We developed a mechanism that can be coupled with other memory scheduling algorithms to increase performance, fairness, or both.
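The scheduling decision named on the poster (sort GPU requests by number of stalled warps, compare the largest count against a threshold, and otherwise serve the CPU) can be sketched roughly as below. This is one plausible reading of the flattened flowchart, not the authors' implementation. The names `GpuRequest` and `pick_next`, the string return values, and the fallback of serving the GPU when no CPU request is waiting are all assumptions made for illustration. The threshold is taken as an input because the poster does not give the formula that derives it from the previous epoch's IPC, and the CPU-side policy (TCM, ATLAS or PAR-BS) is left outside the sketch.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class GpuRequest:
    """Hypothetical record for a pending GPU memory request."""
    core_id: int
    stalled_warps: int  # warps stalled on the issuing GPU core


def pick_next(gpu_requests: List[GpuRequest],
              cpu_request_present: bool,
              threshold: int) -> Optional[str]:
    """Choose which side the memory controller serves next.

    Returns "gpu:<core_id>", "cpu", or None when nothing is pending.
    Assumed reading of the poster's flowchart, not a confirmed design.
    """
    # No GPU request pending: the CPU side (TCM/ATLAS/PAR-BS) gets the slot.
    if not gpu_requests:
        return "cpu" if cpu_request_present else None

    # Rank GPU requests so the core with the most stalled warps comes first.
    ranked = sorted(gpu_requests, key=lambda r: r.stalled_warps, reverse=True)
    most_stalled = ranked[0]

    # Too many stalled warps means no slack is left: prioritize the GPU.
    if most_stalled.stalled_warps > threshold:
        return f"gpu:{most_stalled.core_id}"

    # Otherwise the GPU has slack cycles, so a waiting CPU request is served.
    if cpu_request_present:
        return "cpu"

    # Assumption: with no CPU request waiting, the GPU request is served anyway.
    return f"gpu:{most_stalled.core_id}"
```

For example, with a threshold of 4, a GPU core reporting 7 stalled warps would be served ahead of a waiting CPU request, while a core reporting only 3 would yield the slot to the CPU.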