Trading Structure for Randomness in Wireless Opportunistic Routing Szymon Chachulski


Trading Structure for Randomness in Wireless Opportunistic Routing

by

Szymon Chachulski

mgr inz., Warsaw University of Technology (2005)

Submitted to the Department of Electrical Engineering and Computer Science

in partial fulfillment of the requirements for the degree of

Master of Science

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

May 2007

© Massachusetts Institute of Technology 2007. All rights reserved.

Author

Department of Electrical Engineering and Computer Science

May 24, 2007

Certified by

Dina Katabi

Assistant Professor

Thesis Supervisor

Accepted by

Arthur C. Smith

Chairman, Department Committee on Graduate Students


Trading Structure for Randomness in Wireless Opportunistic Routing

by

Szymon Chachulski

Submitted to the Department of Electrical Engineering and Computer Science

on May 24, 2007, in partial fulfillment of the

requirements for the degree of

Master of Science

Abstract

Opportunistic routing is a recent technique that achieves high throughput in the face of

lossy wireless links. The current opportunistic routing protocol, ExOR, ties the MAC with

routing, imposing a strict schedule on routers’ access to the medium. Although the scheduler

delivers opportunistic gains, it eliminates the clean layering abstraction and misses some of

the inherent features of the 802.11 MAC. In particular, it prevents spatial reuse and thus

may underutilize the wireless medium.

This thesis presents MORE, a MAC-independent opportunistic routing protocol. MORE

randomly mixes packets before forwarding them. This randomness ensures that routers that

hear the same transmission do not forward the same packets. Thus, MORE needs no special

scheduler to coordinate routers and can run directly on top of 802.11.

We analyze the theoretical gains provided by opportunistic routing and present the

EOTX routing metric which minimizes the number of opportunistic transmissions to deliver

a packet to its destination.

We implemented MORE in the Click modular router running on off-the-shelf PCs

equipped with 802.11 (WiFi) wireless interfaces. Experimental results from a 20-node wireless testbed show that MORE’s median unicast throughput is 20% higher than ExOR, and

the gains rise to 50% over ExOR when there is a chance of spatial reuse.

Thesis Supervisor: Dina Katabi

Title: Assistant Professor


Acknowledgments

This thesis is the result of joint work done with Michael Jennings, Sachin Katti and Dina Katabi.

I could not express enough thanks to Dina for her extraordinary support during these two years at

MIT.

I am grateful to Mike and Sachin for the effort put into the project. I owe many thanks to

Hariharan Rahul for his much appreciated help with our wireless testbed. I am especially indebted to

Micah Brodsky for his insight on wireless communication and invaluable discussions on opportunistic

routing. I would also like to thank the developers of Roofnet, Click and MadWifi, the great building

blocks of our project.

I wish to thank my fellow lab mates for their great company; Arvind, David, Dan, Jacob, James,

Jen, Krzysztof, Nate, Srikanth, Yang and Yuan. Magda and Michel deserve special credit for their

support.

Finally, my biggest thanks go to Ewa for her patience.


Contents

1 Introduction
    1.1 Motivating Example

2 Background and Related Work
    2.1 Wireless Mesh Networks
        2.1.1 Routing
    2.2 Opportunistic Routing
        2.2.1 ExOR
    2.3 Network Coding

3 MAC-independent Opportunistic Routing and Encoding
    3.1 MORE In a Nutshell
        3.1.1 Source
        3.1.2 Forwarders
        3.1.3 Destination
    3.2 Practical Challenges
        3.2.1 How Many Packets Does a Forwarder Send?
        3.2.2 Stopping Rule
        3.2.3 Fast Network Coding
    3.3 Implementation Details
        3.3.1 Packet Format
        3.3.2 Node State
        3.3.3 Control Flow
        3.3.4 ACK Processing

4 Experimental Evaluation
    4.1 Testbed
        4.1.1 Compared Protocols
        4.1.2 Setup
    4.2 Throughput
        4.2.1 How Do the Three Protocols Compare?
        4.2.2 When Does Opportunistic Routing Win?
        4.2.3 Why Does MORE Have Higher Throughput than ExOR?
    4.3 Multiple Flows
    4.4 Autorate
    4.5 Batch Size
    4.6 MORE’s Overhead

5 Theoretical Bounds
    5.1 Prior Theoretical Results
    5.2 Contribution
    5.3 Minimum-cost Information Flow
        5.3.1 Network Model
        5.3.2 Problem Statement
        5.3.3 Solution
    5.4 EOTX
    5.5 Algorithms
    5.6 How Many Transmissions to Make?
        5.6.1 Flow Method
        5.6.2 EOTX Method
    5.7 EOTX vs. ETX

6 Conclusion


List of Figures

1-1 Motivating Example. The source sends 2 packets. The destination overhears p2 , while R receives both. R needs to forward just one packet but,

without node-coordination, it may forward p2 , which is already known to

the destination. With network coding, however, R does not need to know

which packet the destination misses. R just sends the sum of the 2 packets

p1 + p2 . This coded packet allows the destination to retrieve the packet it

misses independently of its identity. Once the destination receives the whole

transfer (p1 and p2 ), it acks the transfer causing R to stop transmitting.

.

15

2-1 Benefits of Fortunate Receptions. In (a), though the chosen route has

4 hops, node B or C may directly hear some of the source’s transmissions,

allowing these packets to skip a few hops. In (b), each of the source’s transmissions has many independent chances of being received by a node closer to

the destination.

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

19

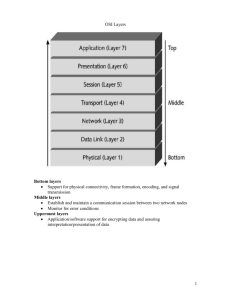

3-1 MORE Header. Grey fields are required while the white fields are optional.

The packet type identifies batch ACKs from data packets.

. . . . . . . . .

33

3-2 MORE’s Architecture. The figure shows a flow chart of our MORE implementation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

34

4-1 One Floor of our Testbed. Nodes’ location on one floor of our 3-floor

testbed. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

38

4-2 Unicast Throughput. Figure shows the CDF of the unicast throughput

achieved with MORE, ExOR, and Srcr. MORE’s median throughput is 22%

higher than ExOR. In comparison to Srcr, MORE achieves a median throughput gain of 95%, while some source-destination pairs show as much as 10-12x. 40

9

4-3 Scatter Plot of Unicast Throughput. Each point represents the throughput of a particular source destination pair. Points above the 45-degree line

indicate improvement with opportunistic routing. The figure shows that opportunistic routing is particularly beneficial to challenged flows.

. . . . . .

41

4-4 Spatial Reuse. The figure shows CDFs of unicast throughput achieved by

MORE, ExOR, and Srcr for flows that traverse 4 hops, where the last hop

can transmit concurrently with the first hop. MORE’s median throughput

is 50% higher than ExOR.

. . . . . . . . . . . . . . . . . . . . . . . . . . .

42

4-5 Multi-flows. The figure plots the per-flow average throughput in scenarios

with multiple flows. Bar show the average of 40 random runs. Lines show

the standard deviation.

. . . . . . . . . . . . . . . . . . . . . . . . . . . . .

43

4-6 Opportunistic Routing Against Srcr with Autorate. The figure compares the throughput of MORE and ExOR running at 11Mb/s against that

of Srcr with autorate. MORE and ExOR preserve their throughput gains

over Srcr. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

44

4-7 Impact of Batch Size. The figure shows the CDF of the throughput taken

over 40 random node pairs. It shows that MORE is less sensitive to the batch

size than ExOR. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

46

5-1 Unbounded Cost Gap. The numbers on the arrows are marginal success

probabilities and it is assumed that the losses are independent. For each

node, its ETX and EOTX are listed. ETX-order will always discard B as

a forwarder, thus leaving only the path through A. The total cost on this

path equals its ETX, which can be driven arbitrarily higher than the EOTX

through B. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

10

66

List of Tables

3.1 Definitions used in the thesis.

4.1 Average computational cost of packet operations in MORE. The numbers for K = 32 and 1500B packets are measured on a low-end Celeron machine clocked at 800MHz with 128KiB cache. Note that the coding cost is highest at the source because it has to code all K packets together. The coding cost at a forwarder depends on the number of innovative packets it has received, and is always bounded by the coding cost at the source.


Chapter 1

Introduction

Wireless mesh networks are increasingly used for providing cheap Internet access everywhere [7, 4, 33]. City-wide WiFi networks, however, need to deal with poor link quality

caused by urban structures and the many interferers including local WLANs. For example, half of the operational links in Roofnet [4] have a loss probability higher than 30%.

Opportunistic routing has recently emerged as a mechanism for obtaining high throughput

even when links are lossy [10]. Traditional routing chooses the nexthop before transmitting

a packet; but, when link quality is poor, the probability the chosen nexthop receives the

packet is low. In contrast, opportunistic routing allows any node that overhears the transmission and is closer to the destination to participate in forwarding the packet. Biswas and

Morris have demonstrated that this more relaxed choice of nexthop significantly increases

the throughput. They proposed the ExOR protocol as a means to achieve these gains [10].

Opportunistic routing, however, introduces a difficult challenge. Multiple nodes may

hear a packet broadcast and unnecessarily forward the same packet. ExOR deals with this

issue by tying the MAC to the routing, imposing a strict scheduler on routers’ access to

the medium. The scheduler goes in rounds. Forwarders transmit in order, and only one

forwarder is allowed to transmit at any given time. The others listen to learn which packets

were overheard by each node. Although the medium access scheduler delivers opportunistic

throughput gains, it does so at the cost of losing some of the desirable features of the current

802.11 MAC. In particular, the scheduler prevents the forwarders from exploiting spatial

reuse, even when multiple packets can be simultaneously received by their corresponding


receivers. Additionally, this highly structured approach to medium access makes the protocol hard to extend to alternate traffic types, particularly multicast, which is becoming

increasingly common with content distribution applications [1] and video broadcast [3, 2].

In contrast to ExOR’s highly structured scheduler, this thesis addresses the above challenge with randomness. We introduce MORE, MAC-independent Opportunistic Routing

& Encoding. MORE randomly mixes packets before forwarding them. This ensures that

routers that hear the same transmissions do not forward spurious packets. Indeed, the

probability that such randomly coded packets are the same is proven to be exponentially

low [16]. As a result, MORE does not need a special scheduler; it runs directly on top of

802.11.

The main contribution of MORE is its ability to deliver the opportunistic routing gains

while maintaining the clean architectural abstraction between the routing and MAC layers.

MORE is MAC-independent, and thus can enjoy the basic features available to today’s

MAC. Specifically, it achieves better unicast throughput by exploiting the spatial reuse

available with 802.11.

We evaluate MORE in a 20-node indoor wireless testbed. Our implementation is in

Linux and uses the Click toolkit [23] and the Roofnet software package [4]. Our results

reveal the following findings.

• In our testbed, MORE’s median unicast throughput is 20% higher than ExOR. For

4-hop flows where the last hop can exploit spatial reuse, MORE’s throughput is 50%

higher than ExOR’s.

• In comparison with traditional routing, the gain in the median throughput of a MORE

flow is 95%, and the maximum throughput gain exceeds 10x.

• Finally, coding is not a deployment hurdle for mesh wireless networks. Our implementation can sustain a throughput of 44 Mb/s on low-end machines with Celeron

800MHz CPU and 128KiB of cache.

1.1 Motivating Example

MORE’s design builds on the theory of network coding [5, 25, 16]. In this section, we use

a toy example to explain the intuition underlying our approach and illustrate the synergy

between opportunistic routing and network coding.


Figure 1-1: Motivating Example. The source sends 2 packets. The destination overhears p2, while R receives both. R needs to forward just one packet but, without node-coordination, it may forward p2, which is already known to the destination. With network coding, however, R does not need to know which packet the destination misses. R just sends the sum of the 2 packets, p1 + p2. This coded packet allows the destination to retrieve the packet it misses independently of its identity. Once the destination receives the whole transfer (p1 and p2), it acks the transfer, causing R to stop transmitting.

Consider the scenario in Fig. 1-1. Traditional routing predetermines the path before

transmission. It sends traffic along the path “src→R→dest”, which has the highest delivery

probability. However, wireless is a broadcast medium. When a node transmits, there is

always a chance that a node closer than the chosen nexthop to the destination overhears

the packet. For example, assume the source sends 2 packets, p1 and p2 . The nexthop, R,

receives both, and the destination happens to overhear p2 . It would be a waste to have

node R forward p2 again to the destination. This observation has been noted in [10] and

used to develop ExOR, an opportunistic routing protocol for mesh wireless networks.

ExOR, however, requires node coordination, which is hard to achieve in a large network.

Consider again the example in the previous paragraph. R should forward only packet

p1 because the second packet has already been received by the destination; but, without

consulting with the destination, R has no way of knowing which packet to transmit. The

problem becomes harder in larger networks, where many nodes hear a transmitted packet.

Opportunistic routing allows these nodes to participate in forwarding the heard packets.

Without coordination, however, multiple nodes may unnecessarily forward the same packets,

creating spurious transmissions. To deal with this issue, ExOR imposes a special scheduler

on top of 802.11. The scheduler goes in rounds and reserves the medium for a single

forwarder at any one time. The rest of the nodes listen to learn the packets overheard

by each node. Because of this strict schedule, nodes farther from the destination must wait for the nodes close to the destination to finish transmitting, even when spatial reuse would have allowed both to transmit at the same time. Hence the scheduler has the side effect of preventing a flow from exploiting spatial reuse.

Network coding offers an elegant solution to the above problem. In our example, the

destination has overheard one of the transmitted packets, p2 , but node R is unaware of this

fortunate reception. With network coding, node R naturally forwards linear combinations

of the received packets. For example, R can send the sum p1 + p2. The destination retrieves the packet it misses, p1, by subtracting the overheard packet p2 from the sum, and then acks the whole transfer. Thus, R need not know which packet the destination has overheard.
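The two-packet example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the "sum" is taken to be a bytewise XOR (addition over GF(2)), which the text does not specify, and the payloads and the helper name `xor_bytes` are hypothetical.

```python
def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equal-length packets (addition over GF(2))."""
    assert len(a) == len(b)
    return bytes(x ^ y for x, y in zip(a, b))


# Hypothetical payloads for the two packets sent by the source.
p1 = b"first packet"
p2 = b"other packet"

# What R transmits: the "sum" p1 + p2 (assumed here to be XOR).
coded = xor_bytes(p1, p2)

# The destination overheard p2, so removing p2 from the sum yields p1.
recovered_at_dest = xor_bytes(coded, p2)

# A destination that had overheard p1 instead would recover p2 from
# the very same coded packet.
recovered_alt = xor_bytes(coded, p1)
```

In both cases R sends the same coded packet, which is why it needs no knowledge of what the destination overheard.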

Indeed, the above works if R sends any random linear combination of the two packets

instead of the sum. Thus, one can generalize the above approach. The source broadcasts

its packets. Routers create random linear combinations of the packets they hear (i.e., $c_1 p_1 + \dots + c_n p_n$, where each $c_i$ is a random coefficient). The destination sends an ack along

the reverse path once it receives the whole transfer. This approach does not require node

coordination and preserves spatial reuse.

The Challenges: To build a practical protocol that delivers the above benefits, we need

to address a few challenges.

(a) How Many Packets to Send? In traditional best path routing, a node keeps sending

a packet until the nexthop receives it or until it gives up. With opportunistic routing

however, there is no particular nexthop; all nodes closer to the destination than the current

transmitter can participate in forwarding the packet. How many transmissions are sufficient

to ensure that at least one node closer to the destination has received the packet?

(b) Stop and Purge? With network coding, routers send linear combinations of the packets.

Once the destination has heard enough such coded packets, it decodes and retrieves the file.

We need to stop the sender as soon as the destination has received the transfer and purge

the related data from the forwarders.

(c) Efficient Coding? Network coding optimizes for better utilization of the wireless medium,

but coding requires the routers to multiply and add the data bytes in the packets. We need

efficient coding and decoding strategies to prevent routers’ CPU from becoming a bottleneck.


Chapter 2

Background and Related Work

In this chapter, we introduce the reader to the basic concepts and related work in wireless

mesh networking, opportunistic routing and network coding.

2.1 Wireless Mesh Networks

The nodes in a wireless mesh network are most often stationary wireless routers equipped

with omnidirectional antennae [13, 4, 9]. These routers can either connect to wired LANs or

include access points for mobile clients. Moreover, the radio frequency band is unlicensed,

so, in order to deploy such a network physically, one only needs to scatter the nodes over

the covered area. All remaining configuration can be done in software. Things get a bit

more complicated when directional antennae and multiple interfaces are used, but in this

work we focus on the simplest deployments. The wireless interfaces are commodity 802.11 hardware, which makes such networks affordable and helps them proliferate.

The price for this simplicity is charged to the resulting link quality. A number of

physical phenomena can cause bit errors and corrupt transmitted frames, even if no collision

occurs [13, 4]. A failed transmission is wasted bandwidth not only for its originator, but

also for all the nodes in the wireless channel vicinity that have deferred their transmissions

in order to avoid collision. Our performance goal is thus to reduce the total number of

transmissions needed to deliver the message across the network.

The loss rates can be prohibitively high even if the destination is just one hop away,

and the problem is much aggravated when a packet is dropped after some number of hops.

In such a case, all past transmissions of this packet could go to waste. The standard solution


to avoid this inefficiency is to add retransmissions in the link layer [17]. Upon successful decoding, the intended receiver transmits back an acknowledgment frame (ACK). The original sender keeps retransmitting the data frame until it receives the ACK. This method effectively masks most losses from higher layers. Furthermore, although nodes other than the intended receiver can often successfully decode the data frame, they usually discard it. So the wireless link typically has a simple point-to-point abstraction.

2.1.1 Routing

Given the point-to-point links, the routing algorithm is responsible for picking the best path through the nodes to the destination. A number of metrics have been proposed to determine

the quality of a path. The most obvious one, borrowed directly from wired networks, is

hop count. Intuitively, paths with fewer hops will require fewer transmissions, which in

turn reduces delay and bandwidth consumption. However, unless precaution is taken, this

metric prefers long hops, which is unfortunate, because link quality generally drops with

length. Therefore, the result could be a reduced throughput.

To address this problem, De Couto et al. propose the ETX metric, defined as the expected number of transmissions necessary to deliver one packet over the path [13]. The ETX of a path is the sum of the ETX of each hop along it. The ETX of a hop, in turn, is inversely proportional to the delivery probability of the wireless link (one minus its loss rate). Thus, this metric accepts more hops if they are less lossy, but minimizes hop count if good links are available. For example, in Fig. 1-1 the ETX of the path src → R → dst equals 2 and is smaller than the ETX of src → dst, which is 1/0.49 = 2.04. Overall, if the loss estimates are accurate for each link, and the losses are independent across links, then ETX yields the expected transmission cost of each packet. In addition, for the sake of accuracy, ETX accounts for the probability that the transmission is successfully decoded, but must be reattempted because the 802.11 ACK is lost.
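The arithmetic of the example can be checked with a short Python sketch. It is a simplification, not the metric as implemented in any routing protocol: it ignores lost ACKs, the function names are hypothetical, and the per-link delivery probabilities (1.0 for each hop through R, 0.49 for the direct link) are assumptions chosen to match the numbers quoted above.

```python
def link_etx(delivery_prob: float) -> float:
    """Expected transmissions over one link, ignoring lost 802.11 ACKs."""
    if not 0.0 < delivery_prob <= 1.0:
        raise ValueError("delivery probability must be in (0, 1]")
    return 1.0 / delivery_prob


def path_etx(delivery_probs) -> float:
    """ETX of a path: the sum of the per-hop ETX values."""
    return sum(link_etx(p) for p in delivery_probs)


# Assumed delivery probabilities, chosen to match the text's example.
two_hop = path_etx([1.0, 1.0])  # src -> R -> dst
direct = path_etx([0.49])       # src -> dst

print(f"two-hop ETX = {two_hop:.2f}, direct ETX = {direct:.2f}")
```

Under these assumptions the two-hop path costs 2 transmissions against roughly 2.04 for the direct link, so ETX prefers the route through R.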

It should be noted that wireless interfaces are often capable of modulating at different

bit-rates, and so transmissions of the same-sized packet could take different amounts of

time. In that case, during one lengthy transmission at a low bit-rate, a number of high

bit-rate ones could be performed. Realizing that, Bicket et al. propose the ETT metric,

an extension to ETX, defined as the expected transmission time. This is the metric that

powers Srcr, the routing protocol used in Roofnet [9]. Other variants and extensions have

also been proposed [14, 31].


(a) Skipping Hops

(b) Multiple Independent Forwarders

Figure 2-1: Benefits of Fortunate Receptions. In (a), though the chosen route has 4

hops, node B or C may directly hear some of the source’s transmissions, allowing these

packets to skip a few hops. In (b), each of the source’s transmissions has many independent

chances of being received by a node closer to the destination.

2.2 Opportunistic Routing

As described in the previous section, a widely employed practice in routing in wireless mesh

networks is to conceal the underlying broadcast medium behind a point-to-point abstraction.

A transmission is not successful unless the designated receiver is able to decode it. Failure

to receive at one node, however, does not preclude a success at another. The traditional

approach ignores any such receptions, which could turn out to be quite fortunate. To illustrate how such receptions could be beneficial, let us consider two simple scenarios taken from [10].

Suppose that the path used for routing is src → A → B → C → dst, as shown in

Figure 2-1-a. When the source transmits a packet, there is a chance that some nodes

further down the route successfully receive the packet. If that is the case, there would be no

point for A to forward the packet again, as it would be redundant effort. Moreover, A could actually fail to decode while other nodes succeed.¹ Rather than discarding the packet at the lucky, though unintended receivers, we can exploit these receptions to skip hops. Thus, a number of transmissions can be spared.

¹ Link quality decreases with distance, so a farther receiver is less likely to succeed than a nearer one; however, a bit error at A does not imply a bit error at other nodes.

In the second scenario, when the source transmits, each of the 100 possible forwarders has an independent probability of successful reception. So, even if each one fails with probability 0.9, at least one succeeds with probability $1 - 0.9^{100} > 0.9999$. If the forwarder is designated ahead of time, the source must therefore perform on average 10 transmissions for each packet. But if any node that successfully receives could be used as a forwarder, this cost drops tenfold.
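The numbers in the second scenario follow from treating each transmission as an independent trial. The sketch below, with hypothetical function names, assumes independent losses and uses the mean of a geometric distribution for the expected number of attempts.

```python
def prob_any_receives(loss_prob: float, n_forwarders: int) -> float:
    """Probability that at least one of n independent receivers succeeds."""
    return 1.0 - loss_prob ** n_forwarders


def expected_transmissions(success_prob: float) -> float:
    """Mean number of attempts until the first success (geometric law)."""
    return 1.0 / success_prob


loss, n = 0.9, 100

# One forwarder designated ahead of time: each attempt succeeds w.p. 0.1.
fixed = expected_transmissions(1.0 - loss)

# Any of the n forwarders may pick up the packet.
any_fwd = expected_transmissions(prob_any_receives(loss, n))

print(f"P(at least one receives) = {prob_any_receives(loss, n):.6f}")
print(f"designated: {fixed:.2f} tx/packet, opportunistic: {any_fwd:.4f} tx/packet")
```

The ratio of the two costs is very close to 10, which matches the tenfold reduction stated above.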

2.2.1 ExOR

To gain from these fortunate receptions, the choice of the next-hop forwarder must be deferred until the outcome of the reception is known. This is the essence of

opportunistic routing as proposed by Biswas and Morris in [10]. The proposed protocol,

ExOR, aims to leverage all such events by enforcing the simple rule: of all the nodes that

were able to successfully decode the transmission, the one that is closest to the destination

should forward it on. Not only does it ensure that the progress of a packet in each transmission is maximized, but it also prevents redundant transmissions due to replication. In

the scenario shown in Fig. 2-1-a, this rule guarantees maximum progress of the packet toward the destination, and in the second scenario from Fig. 2-1-b, it utilizes all candidate

forwarders, in both cases preventing redundant replication.

Although simple to define, the rule is quite difficult to implement in a distributed network, without omniscient observers and centralized coordinators. To determine whether it

should forward the packet it received, a node must at the very least know whether a better

forwarder succeeded in decoding that packet. Reliably exchanging this information after

every reception would likely lead to a collapse due to overhead, not to mention the difficulty of avoiding collisions in such a flood of announcements. Instead, ExOR schedules all nodes

in order of increasing distance to the destination. For example the schedule in Fig. 2-1-a

would be dst>C>B>A>src. In this way, before the turn of a particular node comes, all

nodes closer to the destination get to transmit. This enables propagation of reception status

via piggy-backing on data transmissions. But if packets get lost, so do the announcements.

To alleviate this problem and amortize the overhead of scheduling, ExOR gathers packets

into larger batches.

To better understand why this works, let us consider a snapshot of the schedule. When

C is done forwarding the packets it is responsible for, it is the turn of node B. Each transmission from C included a bitmap in its header that records, for each packet in the batch, whether the packet had been received by C or by any node closer to the destination. If B received at least one of these transmissions, it knows which packets to forward: all those that B received but that were not reported as received by C or a closer node.
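The forwarding decision at B can be illustrated with a small Python sketch. It is not ExOR's actual data structure or header layout: the bitmaps are modeled as lists of booleans, and the function name and the 6-packet batch are hypothetical.

```python
def packets_to_forward(received_by_me, reported_closer):
    """Indices of batch packets this node should forward.

    received_by_me:  bitmap (list of bools) of packets this node holds.
    reported_closer: bitmap copied from the header of a transmission by a
                     node closer to the destination; True means that node,
                     or one even closer, already has the packet.
    """
    return [
        i
        for i, (mine, theirs) in enumerate(zip(received_by_me, reported_closer))
        if mine and not theirs
    ]


# Hypothetical 6-packet batch as seen by node B after C's turn.
b_received = [True, True, False, True, True, False]
c_reported = [True, False, False, True, False, True]

print(packets_to_forward(b_received, c_reported))  # [1, 4]
```

Packets 0 and 3 are skipped because C already reported them, and packets 2 and 5 are skipped because B never received them.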


The scheduling is troublesome. The standard 802.11 MAC is a CSMA/CA protocol [17]

and does not support such a strict schedule, hence, in order to maintain high utilization,

ExOR must rely on fragile timing estimates. Moreover, this scheduling is one-at-a-time

and thus prevents two transmissions that could otherwise succeed at the same time due to

spatial reuse. The nodes are forced to wait until the currently scheduled node has finished, which adversely affects throughput.

2.3 Network Coding

Network coding is a method to improve bandwidth efficiency in networks. The core idea is

that the forwarding nodes should merge data contained in distinct packets in such a way

that allows for recovery at the destination. This is in contrast with the traditional scheme

which treats each packet as a distinct object that must be delivered to the destination

intact. As Ahlswede et al. established in their seminal paper, network coding allows one

to surpass the capacity limitations of the traditional method and achieve the information

flow capacity of the network [5]. Furthermore, as Li et al. showed in [25], it is possible to achieve this capacity using only linear functions when mixing packets together. In such a case, each packet transmitted is some linear combination of other packets. Additionally, Ho et al.

show that the above is true even when the routers pick random coefficients [16]. Researchers

have extended the above results to a variety of areas including content distribution [15],

secrecy [11, 18], distributed storage [19], and reliability in DTN [34].

The classical network coding theory is hardly practical. It often assumes that the packets

flow synchronously through the network, so that nodes can simply combine the incoming

packets as they arrive and apply a single mixing function. In contrast, real-world packet

networks are asynchronous and subject to random losses and delays. MORE belongs to a category of practical (or distributed) network coding schemes, such as those described in [28] and [12]. It addresses this problem through randomness and buffering. The nodes store the

packets in buffers as they receive them and form random linear combinations of them when

transmitting. Then each packet is described by the coefficients of the linear combination

with respect to the raw source packets. This allows decoding by solving a set of linear

equations.

As a final note, we should point out that the proposed scheme is not the only way to

apply network coding to routing in multi-hop wireless networks. For example, Katti et al.

proposed COPE [21], a protocol which mixes together packets of different flows crossing

at a forwarder. This way a single transmission can deliver a number of packets to their

respective next hop nodes, where they can be decoded. In contrast, MORE operates on a

single flow, and does not decode the packets until they reach the destination.

Chapter 3

MAC-independent Opportunistic

Routing and Encoding

In this chapter, we present the design and implementation of MORE, our MAC-independent

opportunistic routing protocol, which can exploit fortunate receptions without cumbersome

coordination.

3.1

MORE In a Nutshell

MORE is a routing protocol for stationary wireless meshes, such as Roofnet [4] and community wireless networks [33, 6]. Nodes in these networks are PCs with ample CPU and

memory.

MORE sits below IP and above the 802.11 MAC. It provides reliable file transfer. It

is particularly suitable for delivering files of medium to large size (i.e., 8 or more packets).

For shorter files or control packets, we use standard best path routing (e.g., Srcr [9]), with

which MORE benignly co-exists.

Table 3.1 defines the terms used in the rest of the thesis.

3.1.1

Source

The source breaks up the file into batches of K packets, where K may vary from one batch

to another. These K uncoded packets are called native packets. When the 802.11 MAC

is ready to send, the source creates a random linear combination of the K native packets

Term | Definition
Native Packet | Uncoded packet
Coded Packet | Random linear combination of native or coded packets
Code Vector of a Coded Packet | The vector of coefficients that describes how to derive the coded packet from the native packets. For a coded packet $p' = \sum_i c_i p_i$, where the $p_i$'s are the native packets, the code vector is $\vec{c} = (c_1, c_2, \ldots, c_K)$.
Innovative Packet | A packet is innovative to a node if it is linearly independent from its previously received packets.
Closer to destination | Node X is closer than node Y to the destination if the best path from X to the destination has a lower ETX metric than that from Y.

Table 3.1: Definitions used in the thesis.

in the current batch and broadcasts the coded packet. In MORE, data packets are always

coded. A coded packet is $p' = \sum_i c_i p_i$, where the $c_i$'s are random coefficients picked by the

node, and the $p_i$'s are native packets from the same batch. We call $\vec{c} = (c_1, \ldots, c_i, \ldots, c_K)$

the packet’s code vector. Thus, the code vector describes how to generate the coded packet

from the native packets.
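As a minimal sketch of the source's coding step, the Python below forms one coded packet from a batch of native packets. The choice of GF(2^8) with the reduction polynomial 0x11B, and the names `gf_mul` and `encode`, are assumptions made for illustration; this section does not specify the finite field that MORE uses.

```python
import random


def gf_mul(a: int, b: int) -> int:
    """Multiply two bytes in GF(2^8), reducing by x^8+x^4+x^3+x+1 (0x11B).
    The field and polynomial are illustrative assumptions."""
    result = 0
    while b:
        if b & 1:
            result ^= a          # addition in GF(2^8) is XOR
        a <<= 1
        if a & 0x100:
            a ^= 0x11B           # reduce back to 8 bits
        b >>= 1
    return result


def encode(natives: list[bytes], rng: random.Random) -> tuple[list[int], bytes]:
    """Return (code_vector, payload) for one random linear combination
    of the K equally sized native packets of a batch."""
    size = len(natives[0])
    code_vector = [rng.randrange(256) for _ in natives]
    payload = bytearray(size)
    for c, packet in zip(code_vector, natives):
        for pos in range(size):
            payload[pos] ^= gf_mul(c, packet[pos])
    return code_vector, bytes(payload)
```

The returned code vector is what the header would carry, so that any receiver knows how the payload was derived from the native packets.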

The sender attaches a MORE header to each data packet. The header reports the

packet’s code vector (which will be used in decoding), the batch ID, the source and destination IP addresses, and the list of nodes that could participate in forwarding the packet

(Fig. 3-1). To compute the forwarder list, we leverage the ETX calculations [13]. Specifically, nodes periodically ping each other and estimate the delivery probability on each link.

They use these probabilities to compute the ETX distance to the destination, which is the

expected number of transmissions to deliver a packet from each node to the destination.

The sender includes in the forwarder list nodes that are closer (in ETX metric) to the

destination than itself, ordered according to their proximity to the destination.
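The forwarder-list construction can be sketched as follows. This is an illustration under simplifying assumptions, not the Roofnet ETX code: each link is charged 1/(1 − loss) expected transmissions in the forward direction only, the destination itself is left out of the returned list, and the names `etx_to_destination` and `forwarder_list` are hypothetical.

```python
import heapq


def etx_to_destination(loss: dict, dest: str) -> dict:
    """Dijkstra from the destination over reversed links.
    loss[(a, b)] is the loss probability of link a -> b; the link cost
    1 / (1 - loss) is an assumed, forward-only simplification of ETX."""
    dist = {dest: 0.0}
    heap = [(0.0, dest)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist[node]:
            continue                      # stale heap entry
        for (a, b), e in loss.items():
            if b == node and e < 1.0:
                candidate = d + 1.0 / (1.0 - e)
                if candidate < dist.get(a, float("inf")):
                    dist[a] = candidate
                    heapq.heappush(heap, (candidate, a))
    return dist


def forwarder_list(loss: dict, src: str, dest: str) -> list:
    """Nodes strictly closer to dest than src, nearest to dest first."""
    etx = etx_to_destination(loss, dest)
    closer = [n for n in etx if n != dest and etx[n] < etx[src]]
    return sorted(closer, key=lambda n: etx[n])
```

For example, with links s→a (loss 0.5), a→d (0.5), s→b (0.5), b→d (0.6) and s→d (0.8), the source's ETX is 4 via a, and both a and b qualify as forwarders.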

The sender keeps transmitting coded packets from the current batch until the batch is

acked by the destination, at which time, the sender proceeds to the next batch.

3.1.2

Forwarders

Nodes listen to all transmissions. When a node hears a packet, it checks whether it is in the

packet’s forwarder list. If so, the node checks whether the packet contains new information,

in which case it is called an innovative packet. Technically speaking, a packet is innovative if

it is linearly independent from the packets the node has previously received from this batch.

Checking for independence can be done using simple linear algebra (Gaussian elimination [22]).

The node ignores non-innovative packets, and stores the innovative packets it receives from

the current batch.

If the node is in the forwarder list, the arrival of this new packet triggers the node

to broadcast a coded packet. To do so the node creates a random linear combination

of the coded packets it has heard from the same batch and broadcasts it. Note that a

linear combination of coded packets is also a linear combination of the corresponding native

packets. In particular, assume that the forwarder has heard coded packets of the form
$p'_j = \sum_i c_{ji} p_i$, where $p_i$ is a native packet. It linearly combines these coded packets to
create more coded packets as follows: $p'' = \sum_j r_j p'_j$, where the $r_j$'s are random numbers.
The resulting coded packet $p''$ can be expressed in terms of the native packets as follows:
$p'' = \sum_j \big( r_j \sum_i c_{ji} p_i \big) = \sum_i \big( \sum_j r_j c_{ji} \big) p_i$; thus, it is a linear combination of the native packets
themselves.
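The identity above can be checked numerically. The sketch below re-codes a set of coded packets by applying the same random coefficients $r_j$ to both the code vectors and the payloads, so the new code vector is $\sum_j r_j c_{ji}$. Arithmetic over GF(2^8) with polynomial 0x11B and the helper names `gf_mul`, `combine` and `recode` are illustrative assumptions.

```python
import random


def gf_mul(a: int, b: int) -> int:
    """Multiply two bytes in GF(2^8) modulo 0x11B (assumed field)."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B
        b >>= 1
    return result


def combine(coeffs: list, rows: list) -> list:
    """Return sum_j coeffs[j] * rows[j], element by element, over GF(2^8)."""
    out = [0] * len(rows[0])
    for r, row in zip(coeffs, rows):
        for pos, x in enumerate(row):
            out[pos] ^= gf_mul(r, x)
    return out


def recode(coded: list, rng: random.Random) -> tuple:
    """coded is a list of (code_vector, payload) pairs heard by a forwarder.
    Returns a fresh (code_vector, payload) pair expressed in native packets."""
    r = [rng.randrange(256) for _ in coded]
    new_vector = combine(r, [vector for vector, _ in coded])
    new_payload = combine(r, [payload for _, payload in coded])
    return new_vector, new_payload
```

Because the forwarder never needs the native packets to compute the new code vector, it can re-code without decoding.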

3.1.3

Destination

For each packet it receives, the destination checks whether the packet is innovative, i.e.,

it is linearly independent from previously received packets. The destination discards non-innovative packets because they do not contain new information. Once the destination

receives K innovative packets, it decodes the whole batch (i.e., it obtains the native packets)

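using simple matrix inversion:

$$\begin{pmatrix} p_1 \\ \vdots \\ p_K \end{pmatrix} = \begin{pmatrix} c_{11} & \cdots & c_{1K} \\ \vdots & \ddots & \vdots \\ c_{K1} & \cdots & c_{KK} \end{pmatrix}^{-1} \begin{pmatrix} p'_1 \\ \vdots \\ p'_K \end{pmatrix},$$

where $p_i$ is a native packet, and $p'_i$ is a coded packet whose code vector is $\vec{c}_i = (c_{i1}, \ldots, c_{iK})$.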

As soon as the destination decodes the batch, it sends an acknowledgment to the source to

allow it to move to the next batch. ACKs are sent using best path routing, which is possible

because MORE uses standard 802.11 and co-exists with shortest path routing. ACKs are

also given priority over data packets at every node.
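One way to realize the decoding step is Gauss-Jordan elimination on the augmented matrix formed by the K code vectors and their payloads, which has the same effect as multiplying by the inverse. The sketch below is illustrative only: it assumes GF(2^8) with polynomial 0x11B, and `gf_mul`, `gf_inv` and `decode` are hypothetical names.

```python
def gf_mul(a: int, b: int) -> int:
    """Multiply two bytes in GF(2^8) modulo 0x11B (assumed field)."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B
        b >>= 1
    return result


def gf_inv(a: int) -> int:
    """Inverse of a nonzero byte, computed as a^254 since a^255 = 1."""
    result = 1
    for _ in range(254):
        result = gf_mul(result, a)
    return result


def decode(vectors: list, payloads: list) -> list:
    """Recover the K native packets from K linearly independent coded packets.
    Raises StopIteration if the code vectors are not independent."""
    K = len(vectors)
    rows = [list(v) + list(p) for v, p in zip(vectors, payloads)]
    for col in range(K):
        pivot = next(r for r in range(col, K) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        inv = gf_inv(rows[col][col])
        rows[col] = [gf_mul(inv, x) for x in rows[col]]      # leading 1
        for r in range(K):
            if r != col and rows[r][col] != 0:
                factor = rows[r][col]
                rows[r] = [x ^ gf_mul(factor, y)
                           for x, y in zip(rows[r], rows[col])]
    return [bytes(row[K:]) for row in rows]
```

Once the left part of every row has become the identity matrix, the right part of row i holds native packet i.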

3.2

Practical Challenges

In §3.1, we described the general design of MORE. For the protocol to be practical, however,

MORE has to address three additional challenges, which we discuss in detail below.

3.2.1

How Many Packets Does a Forwarder Send?

In traditional best path routing, a node keeps transmitting a packet until the nexthop

receives it, or the number of transmissions exceeds a particular threshold, at which time

the node gives up. In opportunistic routing, however, there is no particular nexthop; all

nodes closer to the destination than the current transmitter are potential nexthops and

may participate in forwarding the packet. How many transmissions are sufficient to ensure

that at least one node closer to the destination has received the packet? This is an open

question. Prior work has looked at a simplified and theoretical version of the problem that

assumes smooth traffic rates and infinite wireless capacity [26]. In practice, however, traffic

is bursty and the wireless capacity is far from infinite.

In this section, we provide a heuristic-based practical solution to the above problem.

Our solution has the following desirable characteristics: 1) It has low complexity. 2) It

is distributed. 3) It naturally integrates with 802.11 and preserves spatial reuse. 4) It

is practical– i.e., it makes no assumptions of infinite capacity or traffic smoothness, and

requires only the average loss rate of the links.

Practical Solution

Bandwidth is typically the scarcest resource in a wireless network. Thus, the natural approach to increase wireless throughput is to decrease the number of transmissions necessary

to deliver a packet from the source to the destination [10, 13, 9]. Let the distance from a

node, i, to the destination, d, be the expected number of transmissions to deliver a packet

from i to d along the best path– i.e., node i’s ETX [13]. We propose the following heuristic to route a packet from the source, s, to the destination, d: when a node transmits a

packet, the node closest to the destination in ETX metric among those that receive the

packet should forward it onward. The above heuristic reduces the expected number of

transmissions needed to deliver the packet, and thus improves the overall throughput.

Formally, let N be the number of nodes in the network. For any two nodes, i and j, let

i < j denote that node i is closer to the destination than node j, or said differently, i has a

smaller ETX than j. Let $\epsilon_{ij}$ denote the loss probability in sending a packet from i to j. Let

$z_i$ be the expected number of transmissions that forwarder i must make to route one packet

from the source, s, to the destination, d, when all nodes follow the above routing heuristic.

In the following, we assume that wireless receptions at different nodes are independent, an

assumption that is supported by prior measurements [32, 30].

We focus on delivering one packet from source to destination. Let us calculate the

number of packets that a forwarder j must forward to deliver one packet from source, s

to destination, d. The expected number of packets that j receives from nodes with higher
ETX is $\sum_{i>j} z_i (1 - \epsilon_{ij})$. For each packet j receives, j should forward it only if no node
with lower ETX metric hears the packet. This happens with probability $\prod_{k<j} \epsilon_{ik}$. Thus,
in expectation, the number of packets that j must forward, denoted by $L_j$, is:

$$L_j = \sum_{i>j} \Big( z_i (1 - \epsilon_{ij}) \prod_{k<j} \epsilon_{ik} \Big). \tag{3.1}$$

Note that Ls = 1 because the source generates the packet.

Now, consider the expected number of transmissions a node j must make. j should

transmit each packet until a node with lower ETX has received it. Thus, the number of

transmissions that j makes for each packet it forwards is a geometric random variable with
success probability $(1 - \prod_{k<j} \epsilon_{jk})$. This is the probability that some node with ETX lower
than j receives the packet. Knowing the number of packets that j has to forward from
Eq. (3.1), the expected number of transmissions that j must make is:

$$z_j = \frac{L_j}{1 - \prod_{k<j} \epsilon_{jk}}. \tag{3.2}$$

(a) Low Complexity: The number of transmissions made by each node, the zi ’s, can be

computed via the following algorithm. We can ignore nodes whose ETX to the destination

is greater than that of the source, since they are not useful in forwarding packets for this

flow. Next, we order the nodes by increasing ETX from the destination d and relabel them

according to this ordering, i.e., d = 1 and s = n. We begin at the source by setting Ln = 1,

then compute Eqs. (3.1) and (3.2) from source progressing towards the destination. To

reduce the complexity, we will compute the values incrementally. Consider Lj , as given by

Eq. (3.1). If we computed it in one shot, we would need to compute the product $\prod_{k<j} \epsilon_{ik}$

from scratch for each i > j. The idea is to instead compute and accumulate the contribution

of node i to Lj ’s of all nodes j with lower ETX, so that each time we only need to make a

small update to this product (denoted P in the algorithm).

Algorithm 1 Computing the number of transmissions each node makes to deliver a packet
from source to destination, the z_i’s

for i = n . . . 1 do
    L_i ← 0
L_n ← 1 {at source}
for i = n . . . 2 do
    z_i ← L_i / (1 − ∏_{j<i} ε_{ij})
    P ← 1
    for j = 2 . . . i − 1 do
        {compute the contribution of i to L_j}
        P ← P × ε_{i(j−1)} {here, P is ∏_{k<j} ε_{ik}}
        L_j ← L_j + z_i × P × (1 − ε_{ij})

Alg. 1 requires $O(N^2)$ operations, where N is the number of nodes in the network. This

is because the outer loop is executed at most n times and each of its iterations (the product

for $z_i$ plus the inner loop) requires O(n) operations, where n is bounded by the number of nodes in the network, N.
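Alg. 1 translates almost line for line into Python. The sketch below keeps the 1-based labeling of the text (node 1 is the destination, node n the source) by leaving index 0 unused; the function name and the nested-list layout of `eps` are assumptions made for illustration.

```python
import math


def expected_transmissions(eps: list, n: int) -> tuple:
    """Compute z_i (Eq. 3.2) and L_i (Eq. 3.1) for nodes 1..n.
    eps[i][j] is the loss probability from node i to node j, with nodes
    relabeled by increasing ETX: 1 = destination, n = source.
    Assumes every node i >= 2 can reach at least one node closer to the
    destination, so the denominator below is nonzero."""
    L = [0.0] * (n + 1)
    z = [0.0] * (n + 1)
    L[n] = 1.0                                  # the source generates the packet
    for i in range(n, 1, -1):
        z[i] = L[i] / (1.0 - math.prod(eps[i][j] for j in range(1, i)))
        P = 1.0
        for j in range(2, i):
            P *= eps[i][j - 1]                  # P = prod_{k<j} eps[i][k]
            L[j] += z[i] * P * (1.0 - eps[i][j])
    return z, L
```

As a worked example, take three nodes where the source (3) always reaches the forwarder (2), reaches the destination (1) half the time, and the forwarder never loses a packet to the destination. Then $z_3 = 1$, $L_2 = 0.5$ and $z_2 = 0.5$: the forwarder only needs to relay the half of the packets that the destination missed.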

(b) Distributed Solution: Each node j can periodically measure the loss probabilities

$\epsilon_{ij}$ for each of its neighbors via ping probes. These probabilities are distributed to other

nodes in the network in a manner similar to link state protocols [9]. Each node can then

build the network graph annotated with the link loss probabilities and compute Eq. (3.2)

from the $\epsilon_{ij}$’s using the algorithm above.

(c) Integrated with 802.11: A distributed low-complexity solution to the problem is

not sufficient. The solution tells each node the value of zi , i.e., the number of transmissions

it needs to make for every packet sent by the source. But a forwarder cannot usually tell

when the source has transmitted a new packet. In a large network, many forwarders are

not in the source’s range. Even those forwarders in the range of the source do not perfectly

receive every transmission made by the source and thus cannot tell whether the source has

sent a new packet. Said differently, the above assumes a special scheduler that tells each

node when to transmit.

In practice, a router should be triggered to transmit only when it receives a packet,

and should perform the transmission only when the 802.11 MAC permits. We leverage the

preceding to compute how many transmissions each router needs to make for every packet it

receives. Define the TX credit of a node as the number of transmissions that a node should

make for every packet it receives from a node farther from the destination in the ETX
metric. For each packet sent from source to destination, node i receives $\sum_{j>i} (1 - \epsilon_{ji}) z_j$,
where $z_j$ is the number of transmissions made by node j and $\epsilon_{ji}$ is the loss probability from
j to i, as before. Thus, the TX credit of node i is:

$$\text{TX credit}_i = \frac{z_i}{\sum_{j>i} z_j (1 - \epsilon_{ji})}. \tag{3.3}$$

Thus, in MORE, a forwarder node i keeps a credit counter. When node i receives a

packet from a node upstream, it increments the counter by its TX credit. When the 802.11

MAC allows the node to transmit, the node checks whether the counter is positive. If yes,

the node creates a coded packet, broadcasts it, then decrements the counter by one. If the counter

is not positive, the node does not transmit. The ETX metric order ensures that there are no

loops in crediting, which could otherwise lead to credit explosion.
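The credit mechanism can be sketched in a few lines. `tx_credits` evaluates Eq. (3.3) for the intermediate forwarders, and `CreditCounter` mirrors the increment-on-reception, decrement-on-transmission rule. The names, the 1-based node labeling (1 = destination, n = source) and the decision to skip nodes that expect to receive nothing are illustrative assumptions.

```python
def tx_credits(z: list, eps: list, n: int) -> dict:
    """TX credit of each forwarder i (Eq. 3.3): z_i divided by the expected
    number of packets i hears from nodes farther from the destination.
    The destination (1) and the source (n) are excluded."""
    credits = {}
    for i in range(2, n):
        received = sum(z[j] * (1.0 - eps[j][i]) for j in range(i + 1, n + 1))
        if received > 0:
            credits[i] = z[i] / received
    return credits


class CreditCounter:
    """Per-flow counter a forwarder consults when the MAC allows a transmission."""

    def __init__(self, tx_credit: float):
        self.tx_credit = tx_credit
        self.value = 0.0

    def on_upstream_packet(self) -> None:
        """Called for each packet heard from a node with higher ETX."""
        self.value += self.tx_credit

    def try_transmit(self) -> bool:
        """Transmit only while the counter is positive; each transmission costs 1."""
        if self.value > 0:
            self.value -= 1.0
            return True
        return False
```

With a TX credit of 0.5, a forwarder that is offered one transmission opportunity after each reception sends on every other reception, which matches the intended average of half a transmission per received packet.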

Pruning

MORE’s solution to the above might include forwarders that make very few transmissions

(zi is very small), and thus, have very little contribution to the routing. In a dense network,

we might have a large number of such low contribution forwarders. Since the overhead

of channel contention increases with the number of forwarders, it is useful to prune such

nodes. MORE prunes forwarders that are expected to perform less than 10% of all the
transmissions for the batch (more precisely, it prunes nodes whose $z_i < 0.1 \sum_{j \in N} z_j$).
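The pruning rule amounts to a single threshold test. In the sketch below, `z` maps node identifiers to their expected transmission counts and `prune_forwarders` is a hypothetical name; whether the $z_i$'s are recomputed after pruning is not stated in this section, so the sketch leaves them unchanged.

```python
def prune_forwarders(z: dict, fraction: float = 0.1) -> dict:
    """Keep only nodes whose z_i is at least `fraction` of the total
    number of expected transmissions."""
    total = sum(z.values())
    return {node: zi for node, zi in z.items() if zi >= fraction * total}
```

For instance, with expected transmissions of 1.0, 0.5 and 0.05, the total is 1.55, the threshold is 0.155, and only the last node is pruned.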

3.2.2

Stopping Rule

In MORE, traffic is pumped into the network by the source. The forwarders do not generate

traffic unless they receive new packets. It is important to throttle the source’s transmissions

as soon as the destination has received enough packets to decode the batch. Thus, once

the destination receives the K-th innovative packet, and before fully decoding the batch, it

sends an ACK to the source.

To expedite the delivery of ACKs, they are sent on the shortest path from destination

to source. Furthermore, ACKs are given priority over data packets at all nodes and are

reliably delivered using local retransmission at each hop.

When the sender receives an acknowledgment for the current batch, it stops forwarding

packets from that batch. If the transfer is not complete yet, the sender proceeds to transmit

packets from the next batch.

The forwarders are triggered by the arrival of new packets, and thus stop transmitting

packets from a particular batch once the sender stops doing so. Eventually the batch will

time out and be flushed from memory. Additionally, forwarders that hear the ACK while it

is being transmitted towards the sender immediately stop transmitting packets from that

batch and purge it from their memory. Finally, the arrival of a new batch from the sender

causes a forwarder to flush all buffered packets with batch IDs lower than the active batch.

3.2.3

Fast Network Coding

Network coding, implemented naively, can be expensive. As outlined above, the routers

forward linear combinations of the packets they receive. Combining N packets of size S

bytes requires $NS$ multiplications and additions. Due to the broadcast nature of the wireless

medium, routers could receive many packets from the same batch. If a router codes all these

packets together, the coding cost may be overwhelming, creating a CPU bottleneck.

MORE employs three techniques to produce efficient coding that ensure the routers can

easily support high bit rates.

(a) Code only Innovative Packets: The coding cost scales with the number of packets

coded together. Typically, network coding makes routers forward linear combinations of

the received packets. Coding non-innovative packets, however, is not useful because they

do not add any information content. Hence, when a MORE forwarder receives a new

packet, it checks if the packet is innovative and throws away non-innovative packets. Since

innovative packets are by definition linearly independent, the number of innovative packets

in any batch is bounded by the batch size K. Discarding non-innovative packets bounds

both the number of packets the forwarder buffers from any batch, and the number of

packets combined together to produce a coded packet. Discarding non-innovative packets

is particularly important in wireless because the broadcast nature of the medium makes the

number of received packets much larger than the number of innovative packets.

(b) Operate on Code Vectors: When a new packet is received, checking for innovativeness implies checking whether the received packet is linearly independent of the set of

packets from the same batch already stored at the node. Checking independence of all data

bytes is very expensive. Fortunately, this is unnecessary. The forwarder node simply checks

if the code vectors are linearly independent.

To amortize the cost over all packets, each node keeps code vectors of the packets in

its buffer in row echelon form. Specifically, they are stored in a triangular matrix M

of K rows with some of the rows missing, so that for each stored row, the smallest index of

a non-zero element is distinct. To check if the code vector of the newly received packet

is linearly independent, the non-empty rows are multiplied by appropriate coefficients and

added to it in order so that consecutive elements of the vector become 0. If the vector is

linearly independent, then one element will not be zeroed due to a missing row, and the

modified vector can be added to the matrix in that empty slot, as shown in the algorithm:

Algorithm 2 Checking for linear independence of vector u

for i = 1 . . . K do
    if u[i] ≠ 0 then
        if M[i] exists then
            u ← u − M[i] u[i]
        else
            {admit the modified block into memory}
            M[i] ← u / u[i]
            return True {rank increased}
return False {discard packet}

This process requires only $NK$ multiplications per packet, where N is the number of

non-empty rows and is bounded by K. The decoder at the destination runs a similar

algorithm for each received packet. The rows are kept in reduced row echelon form, so

the first non-zero element of each existing row is 1. Decoding requires $2NS$ multiplications

per packet in order to obtain the identity matrix at the end, where S is the packet size.

The data in the packet itself is not touched; it is just stored in a pool to be used later

when the node needs to forward a linear combination from the batch. Thus, operations

on individual data bytes happen only occasionally at the time of coding or decoding, while

checking for innovativeness, which occurs for every overheard packet, is fairly cheap.
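A Python rendering of Alg. 2 is sketched below. It stores the matrix M as a dictionary from pivot index to normalized row, so a missing row is simply an absent key. GF(2^8) arithmetic with polynomial 0x11B and the names `gf_mul`, `gf_inv` and `check_innovative` are assumptions for illustration; subtraction in this field is XOR.

```python
def gf_mul(a: int, b: int) -> int:
    """Multiply two bytes in GF(2^8) modulo 0x11B (assumed field)."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B
        b >>= 1
    return result


def gf_inv(a: int) -> int:
    """Inverse of a nonzero byte, computed as a^254."""
    result = 1
    for _ in range(254):
        result = gf_mul(result, a)
    return result


def check_innovative(M: dict, u: list) -> bool:
    """Reduce code vector u against the stored rows of M.
    If a non-zero element meets a missing row, store the normalized
    remainder there and return True; otherwise return False."""
    u = list(u)
    for i in range(len(u)):
        if u[i] != 0:
            if i in M:
                factor = u[i]
                u = [x ^ gf_mul(factor, m) for x, m in zip(u, M[i])]
            else:
                inv = gf_inv(u[i])
                M[i] = [gf_mul(inv, x) for x in u]   # leading element becomes 1
                return True
    return False
```

Only the K-element code vector is touched here; the payload bytes stay in the buffer until the node actually codes or decodes.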

(c) Pre-Coding: When the wireless driver is ready to send a packet, the node has to

generate a linear combination of the buffered packets and hand that coded packet to the

wireless card. Linearly combining packets involves multiplying individual bytes in those

packets, which could take hundreds of microseconds. This inserts significant delay before

every transmission, decreasing the overall throughput.

To address this issue, MORE exploits the time when the wireless medium is unavailable

to pre-compute one linear combination, so that a coded packet is ready when the medium

becomes available. If the node receives an innovative packet before the prepared packet

is handed over to the driver, the pre-coded packet is updated by multiplying the newly

arrived packet with a random coefficient and adding it to the pre-coded packet. This

approach achieves two important goals. On one hand, it ensures the transmitted coded

packet contains information from all packets known to the node, including the most recent

arrival. On the other hand, it avoids inserting a delay before each transmission.
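The incremental update of a pre-coded packet can be sketched as follows: a newly arrived innovative packet is scaled by a random coefficient and added to the packet already waiting for the driver, which costs one pass over a single packet instead of re-coding the whole buffer. The class and method names, the GF(2^8) field with polynomial 0x11B, and the choice of a nonzero coefficient are illustrative assumptions.

```python
import random


def gf_mul(a: int, b: int) -> int:
    """Multiply two bytes in GF(2^8) modulo 0x11B (assumed field)."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B
        b >>= 1
    return result


class PreCodedPacket:
    """Running random linear combination kept ready for the next transmission."""

    def __init__(self, batch_size: int, payload_size: int):
        self.vector = [0] * batch_size
        self.payload = bytearray(payload_size)

    def fold_in(self, vector: list, payload: bytes, rng: random.Random) -> None:
        """Add r * (vector, payload) for a fresh random nonzero r."""
        r = rng.randrange(1, 256)
        for k, c in enumerate(vector):
            self.vector[k] ^= gf_mul(r, c)
        for pos, byte in enumerate(payload):
            self.payload[pos] ^= gf_mul(r, byte)
```

The code vector and the payload are updated with the same coefficient, so the stored vector always describes the stored payload in terms of the native packets.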

3.3

Implementation Details

Finally, we put the various pieces together and explain the system details.

3.3.1

Packet Format

MORE inserts a variable length header in each packet, as shown in Fig. 3-1. The header

starts with a few required fields that appear in every MORE packet. The type field distinguishes data packets, which carry coded information, from ACKs, which signal batch

delivery. The header also contains the source and destination IP addresses and the flow

ID. The last required field is the batch ID, which identifies the batch to which the packet

belongs. The above is followed by a few optional fields. The code vector exists only in

data packets and identifies the coefficients that generate the coded packet from the native

packets in the batch. The list of forwarders has variable length and identifies all potential

forwarders ordered according to their proximity to the source. For each forwarder, the

packet also contains its TX credit (see §3.2.1). Except for the code vector, all fields are

initialized by the source and copied to the packets created by the forwarders. In contrast,

the code vector is computed locally by each forwarder based on the random coefficients they
picked for the packet.

Figure 3-1: MORE Header. Grey fields are required while the white fields are optional.
The packet type identifies batch ACKs from data packets.

3.3.2

Node State

Each MORE node maintains state for the flows it forwards. The per-flow state is initialized

by the reception of the first packet from a flow that contains the node ID in the list of

forwarders. The state is timed-out if no packets from the flow arrive for 5 minutes. The

source keeps transmitting packets until the destination acks the last batch of the flow. These

packets will re-initialize the state at the forwarder even if it is timed out prematurely. The

per-flow state includes the following.

• The batch buffer stores the received innovative packets. Note that the number of

innovative packets in a batch is bounded by the batch size K.

• The current batch variable identifies the most recent batch from the flow.

• The forwarder list contains the list of forwarders and their corresponding TX credits,

ordered according to their distance from the destination. The list is copied from one

of the received packets, where it was initialized by the source.

• The credit counter tracks the transmission credit. For each packet arrival from a

node with a higher ETX, the forwarder increments the counter by its corresponding

TX credit, and decrements it by 1 for each transmission. A forwarder transmits only

when the counter is positive.
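The per-flow state listed above maps naturally onto a small record type. The sketch below is one possible layout, not the layout of the actual implementation: the field names, the `last_packet_time` bookkeeping and the helper methods are assumptions, and the text does not say whether the credit counter is reset when a new batch starts, so it is left untouched here.

```python
from dataclasses import dataclass, field

FLOW_TIMEOUT_S = 5 * 60  # state times out after 5 minutes without packets


@dataclass
class FlowState:
    """State a MORE node keeps for one flow it forwards (illustrative)."""
    forwarder_list: list                     # [(node_id, tx_credit), ...] from a header
    current_batch: int = 0                   # most recent batch seen for this flow
    batch_buffer: list = field(default_factory=list)   # innovative packets, at most K
    credit_counter: float = 0.0
    last_packet_time: float = 0.0            # seconds, used for the timeout

    def on_batch_id(self, batch_id: int) -> None:
        """A newer batch flushes buffered packets of older batches."""
        if batch_id > self.current_batch:
            self.current_batch = batch_id
            self.batch_buffer.clear()

    def is_expired(self, now: float) -> bool:
        """True if no packet of this flow has arrived for the timeout period."""
        return now - self.last_packet_time > FLOW_TIMEOUT_S
```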

Figure 3-2: MORE’s Architecture. The figure shows a flow chart of our MORE implementation. Panels: (a) Sender side; (b) Receiver side.

3.3.3

Control Flow

Figure 3-2 shows the architecture of MORE. The control flow responds to packet reception

and transmission opportunity signaled by the 802.11 driver.

On the sending side, the forwarder prepares a pre-coded packet for every backlogged

flow to avoid delay when the MAC is ready for transmission. A flow is backlogged if it

has a positive credit counter. Whenever the MAC signals an opportunity to transmit,

the node selects a backlogged flow by round-robin and pushes its pre-coded packet to the

network interface. As soon as the transmission starts, a new packet is pre-coded for this

flow and stored for future use. If the node is a forwarder, it decrements the flow’s credit

counter.

On the receiving side, when a packet arrives the node checks whether it is a forwarder by

looking for its ID in the forwarder list in the header. If the node is a forwarder, it checks if

the batch ID on the packet is the same as its current batch. If the batch ID in the packet

is higher than the node’s current batch, the node sets current batch to the more recent

batch ID and flushes packets from older batches from its batch buffer. If the packet was

transmitted from upstream, the node also increments its credit counter by its TX credit.

Next, the node performs a linear independence check to determine whether the packet is

innovative. Innovative packets are added to the batch buffer while non-innovative packets

are discarded.

Further processing depends on whether the node is the packet’s final destination or just

a forwarder. If the node is a forwarder, the pre-coded packet from this flow is updated by

adding the recent packet multiplied by a random coefficient. In contrast, if the node is the

destination of the flow, it checks whether it has received a full batch (i.e., K innovative

packets). If so, it queues an ACK for the batch, decodes the native packets and pushes

them to the upper layer.

3.3.4

ACK Processing

ACK packets are routed to the source along the shortest ETX path. ACKs are also prioritized over data packets and transferred reliably. In our implementation, when a transmission

opportunity arises, a flow that has queued ACK is given priority, and the ACK packet is

passed to the device. Unless the transmission succeeds (i.e., is acknowledged by the MAC

of the nexthop) the ACK is queued again. In addition, all nodes that overhear a batch

ACK update their current batch variable and flush packets from the acked batch from

their batch buffer.

Chapter 4

Experimental Evaluation

We use measurements from a 20-node wireless testbed to evaluate MORE, compare it with

both ExOR and traditional best path routing, and estimate its overhead. Our experiments

reveal the following findings.

• MORE achieves 20% better median throughput than ExOR. In comparison with traditional routing, MORE almost doubles the median throughput, and the maximum

throughput gain exceeds 10x.

• MORE’s throughput exceeds ExOR’s mainly because of its ability to exploit spatial

reuse. Focusing on flows that traverse paths with 25% chance of concurrent transmissions, we find that MORE’s throughput is 50% higher than that of ExOR.

• MORE significantly eases the problem of dead spots. In particular, 90% of the flows

achieve a throughput higher than 50 packets/second. In traditional routing the 10th

percentile is only 10 packets/second.

• MORE keeps its throughput gain over traditional routing even when the latter is

allowed automatic rate selection.

• MORE is insensitive to the batch size and maintains large throughput gains with

batch size as low as 8 packets.

• Finally, we estimate MORE’s overhead. MORE stores the current batch from each

flow. Our MORE implementation supports up to 44 Mb/s on low-end machines with

Celeron 800MHz CPU and 128KiB of cache. Thus, MORE’s overhead is reasonable

for the environment it is designed for, namely stationary wireless meshes, such as

Roofnet [4] and community wireless networks [33, 6].

Figure 4-1: One Floor of our Testbed. Nodes’ location on one floor of our 3-floor testbed.

We will make our code public including the finite field coding libraries.

4.1

Testbed

(a) Characteristics: We have a 20-node wireless testbed that spans three floors in our

building connected via open lounges. The nodes of the testbed are distributed in several

offices, passages, and lounges. Fig. 4-1 shows the locations of the nodes on one of the floors.

Paths between nodes are 1–5 hops in length, and the loss rates of links on these paths vary

between 0 and 60%, with an average of 27%.

(b) Hardware: Each node in the testbed is a PC equipped with a NETGEAR WAG311

wireless card attached to an omni-directional antenna. They transmit at a power level of

18 dBm, and operate in the 802.11 ad hoc mode, with RTS/CTS disabled.

(c) Software: Nodes in the testbed run Linux, the Click toolkit [23] and the Roofnet

software package [4]. Our implementation runs as a user space daemon on Linux. It sends

and receives raw 802.11 frames from the wireless device using a libpcap-like interface.

4.1.1

Compared Protocols

We compare the following three protocols.

• MORE as explained in §3.3.

• ExOR [10], the current opportunistic routing protocol. Our ExOR code is provided

by its authors.

• Srcr [9] which is a state-of-the-art best path routing protocol for wireless mesh networks. It uses Dijkstra’s shortest path algorithm where link weights are assigned

based on the ETX metric [13].

4.1.2

Setup

In each experiment, we run Srcr, MORE, and ExOR in sequence between the same

source-destination pairs. Each run transfers a 5 MByte file. We leverage the ETX implementation

provided with the Roofnet Software to measure link delivery probabilities. Before running

an experiment, we run the ETX measurement module for 10 minutes to compute pair-wise

delivery probabilities and the corresponding ETX metric. These measurements are then fed

to all three protocols, Srcr, MORE, and ExOR, and used for route selection.

Unless stated differently, the batch size for both MORE and ExOR is set to K = 32

packets. The packet size for all three protocols is 1500B. The queue size at Srcr routers is

50 packets. In contrast, MORE and ExOR do not use queues; they buffer active batches.

Most experiments are performed over 802.11b with a bit-rate of 5.5Mb/s. In §4.4, we

allow traditional routing (i.e., Srcr) to exploit the autorate feature in the MadWifi driver,

which uses the Onoe bit-rate selection algorithm [8]. Current autorate control optimizes

the bit-rate for the nexthop, making it unsuitable for opportunistic routing, which broadcasts every transmission to many potential nexthops. The problem of autorate control for

opportunistic routing is still open. Thus in our experiments, we compare Srcr with autorate

to opportunistic routing (MORE and ExOR) with a fixed bit-rate of 11 Mb/s.

4.2

Throughput

We would like to examine whether MORE can effectively exploit opportunistic receptions

to improve the throughput and compare it with Srcr and ExOR.

4.2.1

How Do the Three Protocols Compare?

Does MORE improve over ExOR? How do these two opportunistic routing protocols compare with traditional best path routing? To answer these questions, we use these protocols

Figure 4-2: Unicast Throughput. Figure shows the CDF of the unicast throughput
achieved with MORE, ExOR, and Srcr. MORE’s median throughput is 22% higher than
ExOR. In comparison to Srcr, MORE achieves a median throughput gain of 95%, while
some source-destination pairs show as much as 10-12x.
[Plot: x-axis Throughput [pkt/s], y-axis Cumulative Fraction of Flows; curves Srcr, ExOR, MORE.]

to transfer a 5 MByte file between various nodes in our testbed. We repeat the same

experiment for MORE, ExOR, and Srcr as explained in §4.1.2.

Our results show that MORE significantly improves the unicast throughput. In particular, Fig. 4-2 plots the CDF of the throughput taken over 200 randomly selected source-destination pairs in our testbed. The figure shows that both MORE and ExOR significantly

outperform Srcr. Interestingly, however, MORE’s throughput is higher than ExOR’s. In

the median case, MORE has 22% throughput gain over ExOR. Its throughput gain over

Srcr is 95%, but some challenged flows achieve 10-12x higher throughput with MORE than

traditional routing.
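As an illustration of how the statistics quoted above relate to a throughput CDF, the following is a minimal sketch that builds an empirical CDF and computes a median gain from per-flow throughput samples. The function names and the sample values are hypothetical and chosen only for illustration; they are not the testbed measurements.

```python
from statistics import median


def empirical_cdf(samples):
    """Return (value, cumulative fraction) points of the empirical CDF."""
    ordered = sorted(samples)
    n = len(ordered)
    return [(x, (i + 1) / n) for i, x in enumerate(ordered)]


def median_gain(new, baseline):
    """Relative gain of the median of `new` over the median of `baseline`."""
    return median(new) / median(baseline) - 1.0


def fraction_above(samples, threshold):
    """Fraction of flows whose throughput exceeds `threshold`."""
    return sum(1 for x in samples if x > threshold) / len(samples)


# Hypothetical per-flow throughputs in pkt/s (NOT the thesis data).
srcr = [5, 10, 20, 40, 80]
more = [40, 60, 78, 90, 120]

cdf = empirical_cdf(more)          # points one would plot as the MORE curve
gain = median_gain(more, srcr)     # relative median gain of MORE over Srcr
share = fraction_above(more, 50)   # fraction of MORE flows above 50 pkt/s
```

A median gain computed this way compares the medians of the two distributions; it is not the median of the per-flow gains, which is why individual challenged flows can show much larger improvements than the median figure.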

Further, opportunistic routing, and MORE in particular, eases the problem of dead spots. Fig. 4-2 shows that over 90% of MORE flows have a throughput larger than 50 packets a second. ExOR’s 10th percentile is at 35 packets a second. Srcr, on the other hand, suffers from dead spots, with many flows experiencing very low throughput. Specifically, the 10th percentile of Srcr’s throughput is at 10 packets a second.

4.2.2 When Does Opportunistic Routing Win?

We try to identify the scenarios in which protocols like MORE and ExOR are particularly useful, i.e., when should one expect opportunistic routing to bring a large throughput gain?

[Figure 4-3 plots: (a) MORE vs. Srcr, with y-axis MORE Throughput [pkt/s] and x-axis Srcr Throughput [pkt/s]; (b) ExOR vs. Srcr, with y-axis ExOR Throughput [pkt/s] and x-axis Srcr Throughput [pkt/s]. Axis ticks at 1, 10, and 100.]

Figure 4-3: Scatter Plot of Unicast Throughput. Each point represents the throughput of a particular source-destination pair. Points above the 45-degree line indicate improvement with opportunistic routing. The figure shows that opportunistic routing is particularly beneficial to challenged flows.

Fig. 4-3a shows the scatter plot for the throughputs achieved under Srcr and MORE for

the same source-destination pair. Fig. 4-3b gives an analogous plot for ExOR. Points on

the 45-degree line have the same throughput in the two compared schemes.

These figures reveal that opportunistic routing (MORE and ExOR) greatly improves performance for challenged flows, i.e., flows that usually have low throughput. Flows that achieve good throughput under Srcr do not improve further. This is because when links on the best path have very good quality, there is little benefit from exploiting opportunistic receptions. In contrast, a source-destination pair that obtains a low throughput under Srcr does not have any good quality path. Usually, however, many low-quality paths exist between the source and the destination. By using the combined capacity of all these low-quality paths, MORE and ExOR manage to boost the throughput of such flows.

[Figure 4-4 plot: x-axis Throughput [pkt/s], 10 to 70; y-axis Cumulative Fraction of Flows, 0 to 1; curves for Srcr, ExOR, and MORE.]

Figure 4-4: Spatial Reuse. The figure shows CDFs of unicast throughput achieved by MORE, ExOR, and Srcr for flows that traverse 4 hops, where the last hop can transmit concurrently with the first hop. MORE’s median throughput is 50% higher than ExOR’s.
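To illustrate why several low-quality links can together outperform a single path, the following is a minimal one-hop sketch. It assumes that losses at different receivers are independent, which is a simplification, and the delivery probabilities are hypothetical. It is not MORE’s or ExOR’s forwarding algorithm; it only shows how the chance that at least one potential nexthop hears a broadcast grows with the number of listeners.

```python
from math import prod


def any_receiver_prob(delivery_probs):
    """Probability that at least one receiver hears a single broadcast,
    assuming independent losses across receivers (assumption of this sketch)."""
    return 1.0 - prod(1.0 - p for p in delivery_probs)


def expected_transmissions(success_prob):
    """Expected number of transmissions until the first success,
    treating each transmission as an independent trial."""
    return 1.0 / success_prob


# Hypothetical delivery probabilities (NOT measured values).
single_link = 0.3               # best-path routing relies on one nexthop
forwarders = [0.3, 0.25, 0.2]   # opportunistic routing: any of these may receive

p_any = any_receiver_prob(forwarders)
tx_single = expected_transmissions(single_link)
tx_opportunistic = expected_transmissions(p_any)
```

Under these assumptions, the sender needs fewer transmissions on average to make progress when any of several poor links may deliver the packet. When the single link is already very good, the same calculation shows little room for improvement, which matches the observation that flows with good Srcr throughput do not improve further.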

4.2.3 Why Does MORE Have Higher Throughput than ExOR?

Our experiments show that spatial reuse is a main contributor to MORE’s gain over ExOR.

ExOR prevents multiple forwarders from accessing the medium simultaneously [10], and

thus does not exploit spatial reuse. To examine this issue closely, we focus on a few flows

that we know can benefit from spatial reuse. Each flow has a best path of 4 hops, where the

last hop can send concurrently with the first hop without collision. Fig. 4-4 plots the CDF

of throughput of the three protocols for this environment. Focusing on paths with spatial

reuse amplifies the gain MORE has over ExOR. The figure shows that for 4-hop flows with

spatial reuse, MORE on average achieves a 50% higher throughput than ExOR.

It is important to note that spatial reuse may occur even for shorter paths. The capture effect allows multiple transmissions to be correctly received even when the nodes are within the radio range of both senders [32]. In particular, less than 7% of the flows in Fig. 4-2 have a best path of 4 hops or longer. Still, MORE does better than ExOR. This is mainly because of capture. The capture effect, however, is hard to quantify or measure. Thus, we have focused on longer paths to show the impact of spatial reuse.

[Figure 4-5 plot: bar chart with x-axis Number of flows, 1 to 4; y-axis Average Flow Throughput [pkt/s], 0 to 200; bars for Srcr, ExOR, and MORE.]

Figure 4-5: Multi-flows. The figure plots the per-flow average throughput in scenarios with multiple flows. Bars show the average of 40 random runs. Lines show the standard deviation.

4.3 Multiple Flows

One may also ask how MORE performs in the presence of multiple flows. Further, since the

ExOR paper does not show any results for concurrent flows, this question is still open for

ExOR as well. We run 40 multi-flow experiments with a random choice of source-destination

pairs, and repeat each run for the three protocols.

Fig. 4-5 shows the average per-flow throughput as a function of the number of concurrent

flows, for the three protocols. Both MORE and ExOR achieve higher throughput than Srcr.

The throughput gains of opportunistic routing, however, are lower than for a single flow.

This highlights an inherent property of opportunistic routing: it exploits opportunistic receptions to boost the throughput, but it does not increase the capacity of the network. The 802.11 bit rate decides the maximum number of transmissions that can be made in a time unit. As the number of flows in the network increases, each node starts playing two roles: it is a forwarder on the best path for some flow, and a forwarder off the best path for another flow. If the driver polls the node to send a packet, it is better to send a packet from the flow for which the node is on the best path. This is because the links on the best path usually have higher delivery probability. Since the medium is congested and the number of transmissions is bounded, it is better to transmit over the higher quality links.

[Figure 4-6 plot: x-axis Throughput [pkt/s], 0 to 600; y-axis Cumulative Fraction of Flows, 0 to 1; curves for Srcr, ExOR, MORE, and Srcr autorate.]

Figure 4-6: Opportunistic Routing Against Srcr with Autorate. The figure compares the throughput of MORE and ExOR running at 11 Mb/s against that of Srcr with autorate. MORE and ExOR preserve their throughput gains over Srcr.

Also, the gap between MORE and ExOR decreases with multiple flows. Multiple flows

increase congestion inside the network. Although a single ExOR flow may underutilize

the medium because it is unable to exploit spatial reuse, the congestion arising from the

increased number of flows masks this issue. When one ExOR flow becomes unnecessarily

idle, another flow can proceed.

Although the benefits of opportunistic routing decrease in a congested network, it

continues to do better than best path routing. Indeed, it smoothly degenerates to the

behavior of traditional routing.

Finally, this section highlights the differences between inter-flow and intra-flow network

coding. Katti et al. [21] show that the throughput gain of COPE, an inter-flow network

coding protocol, increases with an increased number of flows. However, COPE does not apply

to unidirectional traffic and cannot deal with dead spots. Thus, inter-flow and intra-flow

network coding complement each other. A design that incorporates both MORE and COPE

is a natural next step.


4.4 Autorate

Current 802.11 allows a sender node to change the bit rate automatically, depending on

the quality of the link to the recipient. One may wonder whether such adaptation would

improve the throughput of Srcr and nullify the gains of opportunistic routing. Thus, in this

section, we allow Srcr to exploit the autorate feature in the MadWifi driver [29], which uses

the Onoe bit-rate selection algorithm [8].

Opportunistic routing does not have the concept of a link; it broadcasts every packet to many potential nexthops. Thus, current autorate algorithms are not suitable for opportunistic routing. The problem of autorate control for opportunistic routing is still open. Therefore, in our experiments, we compare Srcr with autorate to opportunistic routing (MORE and ExOR) with a fixed bit-rate of 11 Mb/s.

Fig. 4-6 shows CDFs of the throughputs of the various protocols. The figure shows

that MORE and ExOR preserve their superiority to Srcr, even when the latter is combined

with automatic rate selection. Paths with low throughput in traditional routing once again

show the largest gains. Such paths have low-quality links irrespective of the bit-rate used, so autorate selection does not help these paths.

Interestingly, the figure also shows that autorate does not necessarily perform better

than fixing the bit-rate at the maximum value. This has been noted by prior work [35] and attributed to autorate confusing collision drops with error drops and unnecessarily reducing the bit-rate.

A close examination of the traces indicates that the autorate algorithm often picks the lowest bit-rate in an attempt to reduce packet loss. However, the improvement in quality of the relatively good links is limited, and a large fraction of the losses is due to interference and thus cannot be avoided by reducing the bit-rate. This limited benefit is greatly outweighed by the sacrifice in bandwidth efficiency. In our experiments, the average success rate of all transmissions improves only slightly with autorate, from 66% to 68%. At the same time, on average 23% of all transmissions using autorate are done at the lowest bit-rate, which takes roughly 10 times longer than the highest bit-rate. These transmissions form a throughput bottleneck and consume almost 70% of the shared medium time. As shown in Fig. 4-6, this problem affects about 80% of all flows tested.
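The medium-time figure can be sanity-checked with a back-of-the-envelope calculation. The sketch below uses the two numbers stated in the text (23% of transmissions at the lowest bit-rate, each taking roughly 10 times as long as one at the highest bit-rate). Everything else is an assumption for illustration: the function name, and in particular the average relative airtime of the remaining transmissions, which is not reported here.

```python
def airtime_share(frac_slow, slow_cost, other_cost=1.0):
    """Share of medium time consumed by the slow transmissions.

    frac_slow:  fraction of transmissions sent at the lowest bit-rate
    slow_cost:  airtime of a slow transmission relative to one at the
                highest bit-rate
    other_cost: average relative airtime of all remaining transmissions
                (1.0 means they all use the highest bit-rate; assumption)
    """
    slow_time = frac_slow * slow_cost
    other_time = (1.0 - frac_slow) * other_cost
    return slow_time / (slow_time + other_time)


# Upper bound: every other transmission uses the highest bit-rate.
upper = airtime_share(0.23, 10.0)

# Hypothetical mix: remaining transmissions take 1.3x the minimum airtime
# on average because some use intermediate bit-rates (illustrative value).
mixed = airtime_share(0.23, 10.0, other_cost=1.3)
```

With all other transmissions at the highest bit-rate, the slow transmissions would occupy about three quarters of the medium time. Once some of the remaining transmissions use intermediate bit-rates, the share drops toward the "almost 70%" observed in the traces.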
