Cache Data Compaction via Decoupled Tag Store


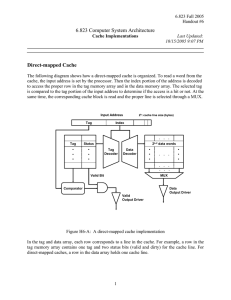

Edward Ma
15/18-740, Computer Architecture
Dec. 13, 2011

Concept and Motivation

Performance, latency and power are tied to memory bandwidth. Caches are expensive: can we reduce their size without significantly compromising performance? Sectored caches offer both reduced cache block granularity and a smaller tag store by tying multiple blocks to one tag entry, which reduces the number of entries. However, they suffer from poor performance due to unused space.

Data Compaction

What if we increase the number of tag entries slightly and remove the sector restrictions? We can "compact" the data stored in the cache by allowing free "sectors" of the cache to be occupied by other blocks (tags). Each block can have a variable size and can grow and shrink as needed.

Big Questions

Just how many tag entries? For the same number of tag entries, more sectors per tag entry is slightly better.

[Figure: Average Miss Rates. Y-axis: miss rate. X-axis: ratio of cache blocks to tag entries (1/16, 1/8, 1/4, 1/2, 3/4, 1). One series per number of sectors (16, 8, 4, 2).]

Where to insert a data block? Concerns: a block can't be inserted just anywhere, because reorder latency can be long. One option is to evict the block closest to the sector "family".

Benchmarks and Results

Savings versus Performance

[Figure: Comparison of Hit Rates vs. Standard Cache. Y-axis: hit ratio. Two panels: Number of Sectors per Block (nsb) = 8 and nsb = 4.]

More Results & Future Work

Replacement Policy Comparison

[Figure: Hit Ratio to Best Policy. Policies compared: LRU, Random, Smallest, Largest, BIP.]

Summary

Marginal performance loss for more savings. The compacted cache design improves hit rate over the sectored cache design and reduces data block granularity, which reduces traffic.

Some more improvements can be made to hit rate. Replacement and insertion policies need further exploration, especially in the data store. Data store reorders need better latency modeling. Techniques to reduce or "hide" reorder latency include offset bits in the tag store or restrictions on the reorder amount... more tradeoffs!
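The data compaction idea can be illustrated with a toy model of a single cache set. This is only a sketch under stated assumptions, not the simulator behind the results: the class name `CompactedSet`, its parameters, and the choice of whole-block LRU eviction are all hypothetical, and the model ignores where sectors physically sit in the data store, so it says nothing about reorder latency.

```python
from collections import OrderedDict


class CompactedSet:
    """Toy model of one cache set with a decoupled tag store.

    Assumptions (not from the poster): whole-block LRU eviction, and no
    modeling of sector placement or reorder latency in the data store.
    """

    def __init__(self, tag_entries, data_sectors, nsb):
        assert tag_entries >= 1 and data_sectors >= nsb >= 1
        self.tag_entries = tag_entries    # tag entries available in this set
        self.data_sectors = data_sectors  # sectors available in the data store
        self.nsb = nsb                    # maximum sectors per block
        # block address -> set of resident sector indices, oldest first
        self.blocks = OrderedDict()

    def used_sectors(self):
        """Number of data-store sectors currently occupied."""
        return sum(len(sectors) for sectors in self.blocks.values())

    def _evict_lru(self, keep):
        """Evict the least recently used block other than `keep`."""
        for addr in self.blocks:
            if addr != keep:
                del self.blocks[addr]
                return
        raise RuntimeError("nothing to evict")

    def access(self, block_addr, sector):
        """Return True on a hit; on a miss, fill the sector and return False."""
        assert 0 <= sector < self.nsb
        resident = self.blocks.get(block_addr)
        if resident is not None:
            self.blocks.move_to_end(block_addr)  # mark as most recently used
            if sector in resident:
                return True
        else:
            # Tag miss: free a tag entry if the tag store is full.
            if len(self.blocks) == self.tag_entries:
                self._evict_lru(keep=block_addr)
            resident = self.blocks[block_addr] = set()
        # Sector miss: the block grows by one sector, taking space
        # from other blocks if the data store is full.
        while self.used_sectors() >= self.data_sectors:
            self._evict_lru(keep=block_addr)
        resident.add(sector)
        return False
```

For example, `CompactedSet(tag_entries=4, data_sectors=8, nsb=4)` can hold four one-sector blocks at once, whereas a sectored set with the same eight data sectors and fixed four-sector blocks would have only two tag entries. Swapping `_evict_lru` for another policy is one way to experiment with the replacement choices the poster compares.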