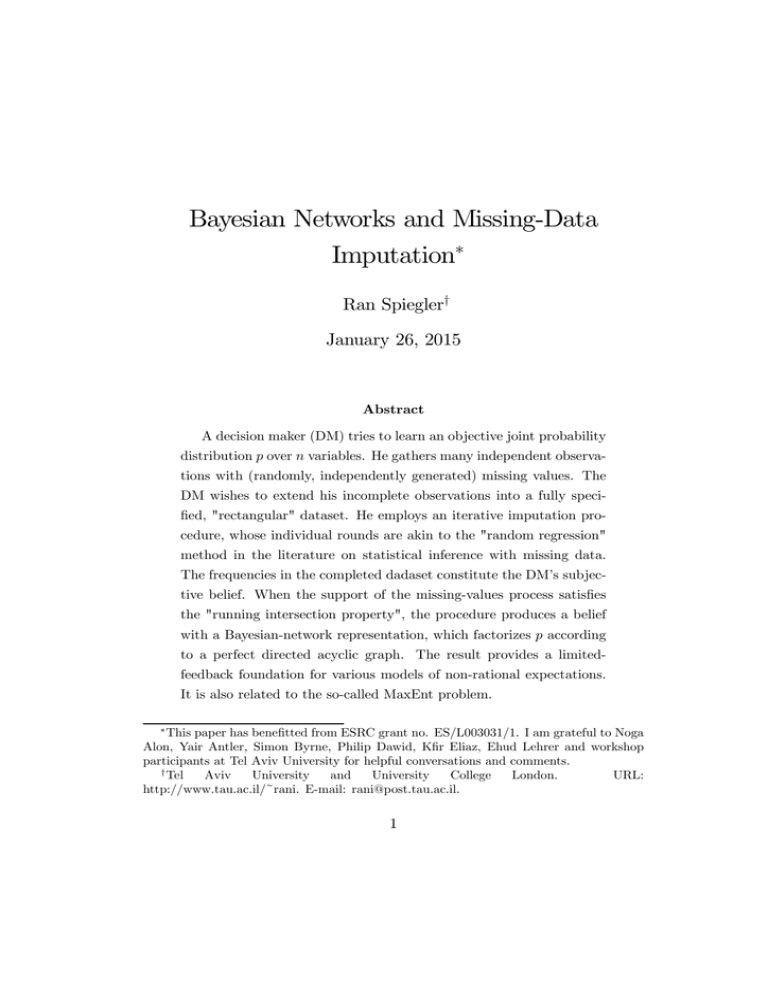

Bayesian Networks and Missing-Data Imputation ∗ Ran Spiegler


Bayesian Networks and Missing-Data Imputation∗

Ran Spiegler†

January 26, 2015

Abstract

A decision maker (DM) tries to learn an objective joint probability distribution over variables. He gathers many independent observations with (randomly, independently generated) missing values. The DM wishes to extend his incomplete observations into a fully specified, "rectangular" dataset. He employs an iterative imputation procedure, whose individual rounds are akin to the "random regression" method in the literature on statistical inference with missing data. The frequencies in the completed dataset constitute the DM’s subjective belief. When the support of the missing-values process satisfies the "running intersection property", the procedure produces a belief with a Bayesian-network representation, which factorizes according to a perfect directed acyclic graph. The result provides a limited-feedback foundation for various models of non-rational expectations. It is also related to the so-called MaxEnt problem.

∗ This paper has benefitted from ESRC grant no. ES/L003031/1. I am grateful to Noga Alon, Yair Antler, Simon Byrne, Philip Dawid, Kfir Eliaz, Ehud Lehrer and workshop participants at Tel Aviv University for helpful conversations and comments.

† Tel Aviv University and University College London. URL: http://www.tau.ac.il/~rani. E-mail: rani@post.tau.ac.il.

1 Introduction

Imagine a fresh business graduate who has just landed a job as a junior analyst in a consulting firm. The analyst is ordered to write a report about a duopolistic industry. Toward this end, he gathers periodic data about three relevant variables: the product price (denoted $x_1$) and the quantities chosen by the two producers (denoted $x_2$ and $x_3$). The price is public information and its periodic realizations are always available. In contrast, quantities are not publicly disclosed and the analyst must rely on private investigation to obtain data about them. Lacking any assistance, he must do the investigation himself, and he is unable to monitor both quantities at the same time. Specifically, at any given period $t$, the analyst observes either $x_2$ or $x_3$.

After collecting the data, the analyst records his observations in a spreadsheet:

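| $t$ | $x_1$ | $x_2$ | $x_3$ |
|---|---|---|---|
| 1 | X | X | − |
| 2 | X | X | − |
| 3 | X | − | X |
| 4 | X | − | X |
| 5 | X | X | − |
| 6 | X | − | X |
| ⋮ | ⋮ | ⋮ | ⋮ |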

where an "X" ("−") sign in a cell indicates that the value is observed (missing). Let us use $x_i^t$ to denote the value of $x_i$ in observation $t$, whenever this value is not missing. Because of the missing values, the analyst’s spreadsheet is "non-rectangular". He seeks a way to "rectangularize" it - i.e., fill the missing cells in a systematic way, in order to have a complete, "rectangular" dataset amenable to rudimentary statistical analysis (plot diagrams, table statistics, regressions) to be included in his report. Ultimately, the frequencies of $(x_1, x_2, x_3)$ in the rectangularized spreadsheet will serve as the analyst’s practical estimate of the joint distribution over prices and quantities in the industry.

The situation described in this example is quite common. In many real-life situations, we try to learn the statistical regularities in our environment through exposure to datasets with missing values. Of course, there are professional statistical techniques for imputing missing values (see Little and Rubin (2002)). And one may go further and question the normative justification for any attempt to extend incomplete datasets to a fully specified probability distribution (Gilboa (2014)).

However, my approach in this paper is not normative, but descriptive.

How would an ordinary decision maker, like our analyst, who is not a professional statistician but nevertheless wants to perform some kind of data

analysis, approach such a situation? Which intuitive methods would he apply to his limited dataset in order to make it an object of rudimentary data

analysis? Specifically, how would he impute missing values? To the extent

that the output of his method is a probabilistic belief that exhibits a systematic bias relative to the true underlying distribution, the method can be

viewed as an explanation for the bias. In this sense, what I am interested in

can be referred to as "behavioral imputation".

Returning to the example, the following is a plausible principle our analyst might employ to fill the missing cells in his spreadsheet. When the value of $x_i$ ($i = 2, 3$) is missing in some observation $t$, he can use the observed data about the joint distribution of $x_i$ and $x_1$, coupled with the known realization $x_1^t$, to impute a value for $x_i^t$. In particular, he may draw the value of $x_i^t$ from the empirical distribution over $x_i$ conditional on $x_1^t$. In fact, this procedure is a non-parametric version of the so-called "stochastic regression" imputation technique (Little and Rubin (2002, Ch. 4)).

What is the distribution over $x = (x_1, x_2, x_3)$ in the rectangularized spreadsheet induced by this imputation procedure? Suppose that in every period, the profile $x$ is independently drawn from some objective joint distribution $p$. Assume further that the spreadsheet is long: the analyst has managed to gather infinitely many observations of $(x_1, x_2)$ and $(x_1, x_3)$. Thus, through repeated observation, he has effectively learned the marginal distributions $p(x_1, x_2)$ and $p(x_1, x_3)$. Therefore, in the part of the spreadsheet where the values of $x_3$ were originally missing, the frequency of $x$ is $q_1(x) = p(x_1, x_2)\,p(x_3 \mid x_1)$. And in the part of the spreadsheet where the values of $x_2$ were originally missing, the frequency of $x$ is $q_2(x) = p(x_1, x_3)\,p(x_2 \mid x_1)$.

The overall frequency of $x$ in the final spreadsheet is thus a weighted average of $q_1$ and $q_2$, where the weights match the relative size of the two parts in the original spreadsheet. However, observe that by the basic rules of conditional probability,

$$p(x_1, x_2)\,p(x_3 \mid x_1) = p(x_1, x_3)\,p(x_2 \mid x_1) = p(x_1)\,p(x_2 \mid x_1)\,p(x_3 \mid x_1) \tag{1}$$

Thus, both parts in the analyst’s rectangularized spreadsheet exhibit the same frequencies of any given $x$. As the R.H.S. of (1) makes explicit, these frequencies regard the firms’ quantities as statistically independent conditional on the price. This conditional independence property is a direct consequence of the analyst’s method of imputation; it need not be satisfied by the objective distribution $p$ itself. Thus, the analyst’s constructed statistical description distorts the true underlying distribution.
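The identity in expression (1) can be checked numerically. The following is a minimal sketch, not part of the paper: it assumes binary variables and a randomly generated, strictly positive objective distribution, and the helper names `random_joint` and `marginal` are hypothetical. It computes the frequencies in the two imputed parts of the spreadsheet in the infinite-sample limit and compares them with each other and with the objective distribution.

```python
import itertools
import random

def random_joint(n_vars, seed=0):
    """Random strictly positive joint distribution over n_vars binary variables."""
    rng = random.Random(seed)
    states = list(itertools.product((0, 1), repeat=n_vars))
    weights = [rng.random() + 0.1 for _ in states]
    total = sum(weights)
    return {s: w / total for s, w in zip(states, weights)}

def marginal(p, idx):
    """Marginal of p on the coordinates listed in idx (a tuple of positions)."""
    out = {}
    for state, prob in p.items():
        key = tuple(state[i] for i in idx)
        out[key] = out.get(key, 0.0) + prob
    return out

# Coordinates 0, 1, 2 stand for x_1 (price), x_2 and x_3 (quantities).
p = random_joint(3)
p1 = marginal(p, (0,))
p12 = marginal(p, (0, 1))
p13 = marginal(p, (0, 2))

# Rows where x_3 was missing: p(x1, x2) * p(x3 | x1).
q1 = {s: p12[s[0], s[1]] * p13[s[0], s[2]] / p1[(s[0],)] for s in p}
# Rows where x_2 was missing: p(x1, x3) * p(x2 | x1).
q2 = {s: p13[s[0], s[2]] * p12[s[0], s[1]] / p1[(s[0],)] for s in p}

max_gap_parts = max(abs(q1[s] - q2[s]) for s in p)   # the two parts agree
max_gap_truth = max(abs(q1[s] - p[s]) for s in p)    # distance from the objective p
print(max_gap_parts, max_gap_truth)
```

The first printed number is the largest discrepancy between the two imputed parts; the second is the largest discrepancy between the imputed belief and the objective distribution, which is generally nonzero unless the quantities happen to be independent conditional on the price.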

Expression (1) is an instance of a "Bayesian-network representation". In general, suppose that $p$ is a joint probability distribution over a collection of random variables $x = (x_1, \ldots, x_n)$, and let $R$ be an asymmetric, acyclic (but not necessarily transitive) binary relation over the set of variable labels $N = \{1, \ldots, n\}$. Define, for every $x$,

$$p_R(x_1, \ldots, x_n) = \prod_{i=1}^{n} p(x_i \mid x_{R(i)}) \tag{2}$$

where $R(i) = \{j \mid jRi\}$. Thus, $p_R$ "factorizes $p$ according to $R$". It is convenient to represent $R$ as a directed acyclic graph (DAG) over the set of nodes $N$, such that $jRi$ corresponds to the directed edge $j \to i$, and $R(i)$ is the set of "direct parents" of the node $i$. I will therefore refer to (2) as a "Bayesian-network representation" or as a "DAG representation" interchangeably. The R.H.S. of (1) is an instance of a DAG representation, where the DAG is $2 \leftarrow 1 \to 3$.
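As an illustration of the factorization formula (2), the sketch below computes the product of conditional probabilities for a DAG given as a map from each node to its set of direct parents. It is a hedged example rather than the paper's own code: variables are assumed binary, labels are 0-based, and the names `random_joint`, `marginal` and `factorize` are hypothetical.

```python
import itertools
import random

def random_joint(n_vars, seed=0):
    """Random strictly positive joint distribution over n_vars binary variables."""
    rng = random.Random(seed)
    states = list(itertools.product((0, 1), repeat=n_vars))
    weights = [rng.random() + 0.1 for _ in states]
    total = sum(weights)
    return {s: w / total for s, w in zip(states, weights)}

def marginal(p, idx):
    """Marginal of p on the coordinates listed in idx (a tuple of positions)."""
    out = {}
    for state, prob in p.items():
        key = tuple(state[i] for i in idx)
        out[key] = out.get(key, 0.0) + prob
    return out

def factorize(p, parents):
    """Product over nodes i of p(x_i | x_{R(i)}), where parents[i] plays the role of R(i)."""
    out = {}
    for state in p:
        value = 1.0
        for i, pa in parents.items():
            pa = tuple(sorted(pa))
            joint = marginal(p, pa + (i,))     # p(x_{R(i)}, x_i)
            denom = marginal(p, pa)            # p(x_{R(i)}); equals {(): 1} for a root
            value *= (joint[tuple(state[j] for j in pa + (i,))]
                      / denom[tuple(state[j] for j in pa)])
        out[state] = value
    return out

p = random_joint(3)
fork = {0: (), 1: (0,), 2: (0,)}        # the DAG 2 <- 1 -> 3 in 0-based labels
chain = {0: (), 1: (0,), 2: (0, 1)}     # a complete DAG: the textbook chain rule

p_fork = factorize(p, fork)
p_chain = factorize(p, chain)
print(max(abs(p_chain[s] - p[s]) for s in p))   # chain rule reproduces p
print(max(abs(p_fork[s] - p[s]) for s in p))    # the fork generally does not
```

The complete DAG recovers the objective distribution up to rounding, whereas the fork imposes conditional independence of the second and third variables given the first.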

Bayesian networks - and more generally, probabilistic graphical models - have many uses in statistics and Artificial Intelligence: they help in analyzing systematic implications of conditional-independence assumptions, in devising efficient algorithms for computing Bayesian updating, and in systematizing reasoning about causal relations that underlie statistical regularities. For prominent textbooks, see Cowell et al. (1999) and Pearl (2000).

Closer to home, Bayesian-network representations turn out to be relevant

for economic models of non-rational expectations. In recent years, economic

theorists have become increasingly interested in decision making under systematically biased beliefs. In particular, game theorists developed solution

concepts that extend the steady-state interpretation of conventional Nash

equilibrium, while relaxing its rational-expectations property in favor of the

assumption that players’ subjective beliefs systematically distort the objective steady-state distribution (e.g., Osborne and Rubinstein (1998), Eyster

and Rabin (2005), Jehiel (2005), Jehiel and Koessler (2008), Esponda (2008),

Esponda and Pouzo (2014a)).1

In a companion paper (Spiegler (2014)), I demonstrate that many of these models of subjective belief distortion (as well as other belief biases that had not been formalized before) can be expressed in terms of a Bayesian-network representation. The individual agent is characterized by a "subjective DAG" $R$, and his subjective belief factorizes the objective equilibrium distribution according to $R$. The "true model" is defined by the set of objective distributions that are consistent with the "true DAG" $R^*$. We capture the agent’s systematic bias by performing a basic operation on $R^*$ (removing, inverting or reorienting links) to obtain $R$. Thus, the Bayesian-network representation of subjective beliefs can be viewed as a way to organize part of the literature on equilibrium models with non-rational expectations.

1 Piccione and Rubinstein (2003) and Eyster and Piccione (2013) developed ideas of a similar nature in a competitive-equilibrium framework. Macroeconomic theorists examined models in which agents learn misspecified models and thus come to hold biased beliefs (Sargent (2001), Evans and Honkapohja (2001), Woodford (2013)).

An argument that is often invoked in justification of equilibrium concepts

with non-rational expectations, is that agents form their beliefs by naively

extrapolating from limited learning feedback. Sometimes, as in Osborne and

Rubinstein (1998), this idea is built formally into the solution concept. More

often, though, it is informal. The observation that in our example, the subjective belief that emerges from the analyst’s imputation procedure has a

Bayesian-network representation is thus of interest, because it provides a

"limited feedback foundation" for a useful representation of non-rational expectations. The main question in this paper is the following: If we extend the

analyst’s imputation procedure to general datasets with (randomly generated)

missing values, under what conditions will the subjective belief that emerges

from the procedure have a Bayesian-network representation?

To address this question, I construct a model in which a decision maker (DM) has access to a dataset consisting of infinitely many observations, independently drawn from an objective distribution $p$ over $x = (x_1, \ldots, x_n)$. For each observation, the set of variables whose values are observed is some $S \subset \{1, \ldots, n\}$, generated by an independent random process with support $\mathcal{S}$. In the "analyst" example, $\mathcal{S} = \{\{1, 2\}, \{1, 3\}\}$. I assume that $\mathcal{S}$ is a cover of the set of variables $\{1, \ldots, n\}$, and (for convenience) that no member of $\mathcal{S}$ contains another. Because there are infinitely many observations, the DM effectively learns the marginal of $p$ over $x_S$, denoted $p_S$, for every $S \in \mathcal{S}$.

The DM’s objective is to extend his dataset to a fully specified probability distribution over $(x_1, \ldots, x_n)$. He performs an iterative version of the imputation method described in the example. His starting point is some $S^0 \in \mathcal{S}$. In the first round of the procedure, he searches for another $S^1 \in \mathcal{S}$ having the largest intersection with $S^0$ (with arbitrary tie-breaking). The intuition for this "maximal overlap" criterion is that the DM tries to make the most of observed correlations among variables. He then performs the same imputation method as in the example, replacing the known marginal distributions $p(x_{S^0})$ and $p(x_{S^1})$ with a single marginal distribution $q^1 \in \Delta(X_{B^1})$, where $B^1 = S^0 \cup S^1$.

Thus, at the end of the procedure’s first round, the DM has produced a new dataset, which rectangularizes the part of the original dataset in which the observed sets of variables were $S^0$ and $S^1$. In the next round, the DM applies the same imputation method, taking $B^1$ as his new starting point, and so forth. The procedure terminates after $m - 1$ rounds, where $m$ is the number of sets in $\mathcal{S}$, producing a complete, rectangular dataset. The frequencies of $(x_1, \ldots, x_n)$ in this dataset serve as the DM’s final subjective belief.

The main result in the paper establishes a necessary and sufficient condition on $\mathcal{S}$ for the belief produced by the iterative imputation procedure to have a Bayesian-network representation for all objective distributions $p$. The condition is known in the literature as the "running intersection property" (e.g., Cowell et al. (1999, p. 55)), and I demonstrate that it is satisfied by a variety of natural missing-values processes. When the condition holds, the DAG $R$ in the Bayesian-network representation is perfect: for every $j, k \in R(i)$, either $jRk$ or $kRj$. Conversely, every Bayesian-network representation that involves a perfect DAG $R$ can be justified as the output of the iterative imputation procedure, applied to any missing-values process whose support is the set of maximal cliques in $R$.

The results in this paper thus provide a missing-data foundation for Bayesian-network representations that involve perfect DAGs. Section 3 provides a few economic illustrations of how this foundation works. Section 4 shows how this foundation is related to the so-called MaxEnt problem. I refer the reader to Spiegler (2014) for many other economic examples, in which the DM’s subjective belief has a Bayesian-network representation that involves a perfect DAG. The results in this paper thus tell a story about the possible origins of such beliefs.

2 The Model

Let $X = X_1 \times \cdots \times X_n$ be a finite set of states, where $n \geq 2$. I refer to $x_i$ as a variable. Let $N = \{1, \ldots, n\}$ be the set of variable labels. Let $p \in \Delta(X)$ be an objective probability distribution over $X$. For every $S \subseteq N$, denote $x_S = (x_i)_{i \in S}$ and $X_S = \times_{i \in S} X_i$, and let $p_S$ denote the marginal of $p$ over $X_S$. Consider a decision maker (DM henceforth) who obtains an infinite sequence of independent draws from $p$. However, for each observation, he only gets to see the realized values of a subset of variables $S \subset N$, where $S$ is independently drawn from a probability distribution $Q \in \Delta(2^N)$, referred to as the missing-values process. The pair $(p, Q)$ constitutes the DM’s dataset. The support of $Q$ is denoted $\mathcal{S}$. Let $|\mathcal{S}| = m \geq 2$, and assume that $\mathcal{S}$ is a cover of $N$. In addition, assume (purely for convenience) that there exist no $S, S' \in \mathcal{S}$ such that $S \subset S'$. Thanks to the DM’s infinite sample, he gets to learn $p_S$ for every $S \in \mathcal{S}$.

We could define a dataset more explicitly, as an infinite sequence $(x^t, S^t)$, where $x^t$ is a random draw from $p$; $S^t$ is an independent random draw from $Q$; and the value of $x_i^t$ is missing if and only if $i \notin S^t$. However, for our purposes, identifying the dataset with $(p, Q)$ is w.l.o.g. and more convenient to work with. The more elaborate definition would be appropriate for extensions of the model, e.g. when the DM’s sample is finite.

2.1 An Iterative Imputation Procedure

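The DM’s task is to extend his dataset into a fully specified subjective probability distribution over $X$. He performs this task using an "iterative imputation procedure", which consists of $m - 1$ rounds. The initial condition of round $k = 1, \ldots, m - 1$ is a pair $(B^{k-1}, q^{k-1})$, where $B^{k-1} \subseteq N$ and $q^{k-1} \in \Delta(X_{B^{k-1}})$. In particular, $B^0 = S^0$ is an arbitrary member of $\mathcal{S}$, and $q^0 = p_{S^0}$. Round $k$ consists of two steps:

Step 1: Select $S^k \in \arg\max_{S \in \mathcal{S} - \{S^0, \ldots, S^{k-1}\}} |S \cap B^{k-1}|$. Let $B^k = B^{k-1} \cup S^k$.

Step 2: Define two auxiliary distributions over $X_{B^k}$:

$$q_1^k(x_{B^k}) \equiv q^{k-1}(x_{B^{k-1}}) \cdot p(x_{S^k - B^{k-1}} \mid x_{S^k \cap B^{k-1}})$$

$$q_2^k(x_{B^k}) \equiv p(x_{S^k}) \cdot q^{k-1}(x_{B^{k-1} - S^k} \mid x_{S^k \cap B^{k-1}})$$

and then define $q^k \in \Delta(X_{B^k})$ as follows:

$$q^k \equiv \frac{\sum_{j=0}^{k-1} Q(S^j)}{\sum_{j=0}^{k} Q(S^j)}\, q_1^k + \frac{Q(S^k)}{\sum_{j=0}^{k} Q(S^j)}\, q_2^k$$

If $k = m - 1$, the procedure is terminated and $q^{m-1} \in \Delta(X)$ is the DM’s final belief. If $k < m - 1$, switch to round $k + 1$.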

This procedure describes a process by which the DM gradually completes his dataset, iterating the method for imputing missing values described in the Introduction. By the end of round $k - 1$, the DM has "rectangularized" the part of the original dataset that consisted of observations of $x_S$, $S \in \{S^0, S^1, \ldots, S^{k-1}\}$. He has thus transformed the (infinite sets of) observations of the variable sets $S^0, S^1, \ldots, S^{k-1}$ into an infinite set of "observations" that induce a fully specified joint distribution over $x_{B^{k-1}}$. In Step 1 of round $k$, the DM looks for a new set of variables $S^k \in \mathcal{S}$ having maximal overlap with $B^{k-1}$. The rationale for this "maximal overlap" criterion is that the DM tries to extrapolate as little as possible and make the most of observed correlations.

In Step 2 of round $k$, the DM extends the "rectangularization" of the dataset from the variable set $B^{k-1}$ to the variable set $B^k = B^{k-1} \cup S^k$, using the same method as in the introductory example. It will be useful to describe it in terms of the "spreadsheet" image. First, the DM exploits the observed distribution over $x_{S^k}$ - when the value of $x_{S^k - B^{k-1}}$ is missing in some row of the spreadsheet, he imputes a random draw from $p(x_{S^k - B^{k-1}} \mid x_{S^k \cap B^{k-1}})$. The resulting frequency of $x_{B^k}$ in this part of the spreadsheet is $q_1^k(x_{B^k}) \equiv q^{k-1}(x_{B^{k-1}}) \cdot p(x_{S^k - B^{k-1}} \mid x_{S^k \cap B^{k-1}})$. Second, the DM exploits the constructed distribution $q^{k-1}$ over $x_{B^{k-1}}$ - when the value of $x_{B^{k-1} - S^k}$ is missing in some row of the spreadsheet, he imputes a random draw from $q^{k-1}(x_{B^{k-1} - S^k} \mid x_{S^k \cap B^{k-1}})$. The resulting frequency of $x_{B^k}$ in this part of the spreadsheet is $q_2^k(x_{B^k}) \equiv p(x_{S^k}) \cdot q^{k-1}(x_{B^{k-1} - S^k} \mid x_{S^k \cap B^{k-1}})$. The DM produces the distribution $q^k$ over $x_{B^k}$ as a weighted average of $q_1^k$ and $q_2^k$, according to the relative number of "observations" in each of the two parts of the dataset.

The DM terminates the procedure when he has "rectangularized" the entire dataset - namely, all missing values have been imputed.
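The two steps of each round can be sketched in code. The following is an illustrative implementation under stated assumptions, not the paper's own: variables are binary, labels are 0-based, the objective distribution is randomly generated and strictly positive, ties in Step 1 are broken by dictionary order, and the names `random_joint`, `marginal` and `impute` are hypothetical. The missing-values process is passed as a dictionary mapping each observed set in the support to its probability.

```python
import itertools
import random

def random_joint(n_vars, seed=0):
    """Random strictly positive joint distribution over n_vars binary variables."""
    rng = random.Random(seed)
    states = list(itertools.product((0, 1), repeat=n_vars))
    weights = [rng.random() + 0.1 for _ in states]
    total = sum(weights)
    return {s: w / total for s, w in zip(states, weights)}

def marginal(dist, variables, keep):
    """Marginal of dist (keyed by values of `variables`) on the sub-tuple `keep`."""
    pos = [variables.index(v) for v in keep]
    out = {}
    for state, prob in dist.items():
        key = tuple(state[i] for i in pos)
        out[key] = out.get(key, 0.0) + prob
    return out

def impute(p, Q, start):
    """Run the rounds of the procedure; Q maps frozenset -> probability."""
    all_vars = tuple(range(len(next(iter(p)))))
    B = tuple(sorted(start))
    q = marginal(p, all_vars, B)              # initial belief: the marginal on the start set
    used, mass = [start], Q[start]
    while len(used) < len(Q):
        # Step 1: unused set with maximal overlap with the variables covered so far.
        S = max((s for s in Q if s not in used), key=lambda s: len(s & set(B)))
        S_t = tuple(sorted(S))
        common = tuple(v for v in B if v in S)
        new_B = tuple(sorted(set(B) | S))
        p_S = marginal(p, all_vars, S_t)
        p_C = marginal(p, all_vars, common)
        q_C = marginal(q, B, common)
        w1, w2 = mass / (mass + Q[S]), Q[S] / (mass + Q[S])
        # Step 2: the two auxiliary distributions and their weighted average.
        new_q = {}
        for state in itertools.product((0, 1), repeat=len(new_B)):
            val = dict(zip(new_B, state))
            b = tuple(val[v] for v in B)
            s = tuple(val[v] for v in S_t)
            c = tuple(val[v] for v in common)
            q1 = q[b] * p_S[s] / p_C[c]       # old belief times p(new vars | overlap)
            q2 = p_S[s] * q[b] / q_C[c]       # p(x_S) times old belief(rest | overlap)
            new_q[state] = w1 * q1 + w2 * q2
        B, q = new_B, new_q
        used.append(S)
        mass += Q[S]
    return B, q

# Support {1,3}, {1,2}, {2,4} in the paper's labels, written 0-based; weights are assumed.
p = random_joint(4)
Q = {frozenset({0, 2}): 0.5, frozenset({0, 1}): 0.3, frozenset({1, 3}): 0.2}
B, q = impute(p, Q, frozenset({0, 2}))
print(B, sum(q.values()))
```

With this support, the returned belief can be compared against the product $p(x_1)p(x_2 \mid x_1)p(x_3 \mid x_1)p(x_4 \mid x_2)$, which is the factorization derived for the same support in Example 2.2.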

Example 2.1. Consider a dataset in which $\mathcal{S}$ is a partition of $N$. Then, in any round $k$, $B^{k-1} \cap S = \emptyset$ for every $S \notin \{S^0, S^1, \ldots, S^{k-1}\}$. Therefore, $S^k$ can be selected arbitrarily. The auxiliary distributions $q_1^k$ and $q_2^k$, calculated in Step 2, are both equal to $q^{k-1}(x_{B^{k-1}})\,p(x_{S^k})$. It follows that in any round $k$, $q^k(x_{B^k}) = p(x_{S^0})\,p(x_{S^1}) \cdots p(x_{S^k})$. In the final round $m - 1$, we obtain

$$q^{m-1}(x) = \prod_{S \in \mathcal{S}} p(x_S)$$

We see that the belief that emerges from the DM’s procedure is a product of marginal objective distributions.

Example 2.2. Let $N = \{1, 2, 3, 4\}$ and assume that the support of $Q$ is $\mathcal{S} = \{\{1, 3\}, \{1, 2\}, \{2, 4\}\}$. This means that the DM has effectively learned the joint distributions $p(x_1, x_3)$, $p(x_1, x_2)$ and $p(x_2, x_4)$. For an economic story behind this specification, imagine that the DM learns the behavior of two players in a game with incomplete information, where $x_1$ and $x_2$ represent the players’ signals, and $x_3$ and $x_4$ represent their actions. The DM’s dataset enables him to learn the joint distribution of the players’ signals, as well as the joint signal-action distribution for each player.

Let us implement the iterative imputation procedure. In round 1, select the initial condition to be $B^0 = \{1, 3\}$. By the maximal-overlap criterion of Step 1, the only legitimate continuation is $S^1 = \{1, 2\}$, such that $B^1 = \{1, 2, 3\}$. Imputing the missing values of $x_3$ and $x_2$ is done exactly as in the motivating example of the Introduction. By the end of the first round, the DM has "rectangularized" the part of his dataset in which he originally observed $(x_1, x_2)$ or $(x_1, x_3)$. He has replaced these observations with "manufactured observations" of the triple $(x_1, x_2, x_3)$, and the joint distribution over these variables in the manufactured dataset can be written as

$$q^1(x_1, x_2, x_3) = p(x_3)\,p(x_1 \mid x_3)\,p(x_2 \mid x_1)$$

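In the second and final round, $S^2 = \{2, 4\}$, such that $B^2 = N$, and

$$\begin{aligned}
q_2^2(x) &= p(x_2, x_4) \cdot q^1(x_1, x_3 \mid x_2) = p(x_2, x_4)\,\frac{q^1(x_1, x_2, x_3)}{q^1(x_2)} \\
&= p(x_2, x_4)\,\frac{p(x_3)\,p(x_1 \mid x_3)\,p(x_2 \mid x_1)}{\sum_{x_1'} \sum_{x_3'} p(x_3')\,p(x_1' \mid x_3')\,p(x_2 \mid x_1')} \\
&= p(x_2, x_4)\,\frac{p(x_2)\,p(x_1 \mid x_2)\,p(x_3 \mid x_1)}{p(x_2) \sum_{x_1'} p(x_1' \mid x_2) \sum_{x_3'} p(x_3' \mid x_1')} \\
&= p(x_2, x_4)\,p(x_1 \mid x_2)\,p(x_3 \mid x_1) \\
&= p(x_3)\,p(x_1 \mid x_3)\,p(x_2 \mid x_1)\,p(x_4 \mid x_2) \\
&= q^1(x_1, x_2, x_3) \cdot p(x_4 \mid x_2) = q_1^2(x)
\end{aligned}$$

Thus, $q_1^2 = q_2^2 = q^2$, which can be written as

$$q^2(x) = p(x_1)\,p(x_2 \mid x_1)\,p(x_3 \mid x_1)\,p(x_4 \mid x_2) \tag{3}$$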

In the context of the economic story described earlier, this expression for the DM’s subjective belief has a simple interpretation: he ends up believing that each player’s mixture over his actions is measurable w.r.t. his signal - i.e., the player’s action is independent of his opponent’s signal and action, conditional on the player’s own signal.

Comment: The role of the "maximal overlap" criterion

Suppose that in Example 2.2, the DM ignored the "maximal overlap" criterion and picked $S^1 = \{2, 4\}$ in the first round. The output of this round would be $B^1 = N$ and $q^1(x) = p(x_1, x_3)\,p(x_2, x_4)$. In the second round, we would have $q_1^2 = q^1$, and $q_2^2(x) = p(x_1, x_2)\,q^1(x_3, x_4 \mid x_1, x_2)$. As a result, the final output of the procedure would be the belief

$$\begin{aligned}
q^2(x) &= \big(Q(\{1, 3\}) + Q(\{2, 4\})\big)\,q^1(x) + Q(\{1, 2\})\,q_2^2(x) \\
&= q^1(x_3, x_4 \mid x_1, x_2)\,\big[Q(\{1, 2\})\,p(x_1, x_2) + \big(Q(\{1, 3\}) + Q(\{2, 4\})\big)\,q^1(x_1, x_2)\big]
\end{aligned}$$

Consider an objective distribution $p$ under which $x_3$ and $x_4$ are both independently distributed, whereas the variables $x_1$ and $x_2$ are mutually correlated. Then, $q^1(x) = p(x_1)\,p(x_2)\,p(x_3)\,p(x_4)$. Therefore,

$$q^2(x) = p(x_3)\,p(x_4)\,\big[Q(\{1, 2\})\,p(x_1, x_2) + \big(Q(\{1, 3\}) + Q(\{2, 4\})\big)\,p(x_1)\,p(x_2)\big] \tag{4}$$

This expression underestimates the objective correlation between $x_1$ and $x_2$. By comparison, under the same assumptions on $p$, expression (3) would reduce to

$$p(x_3)\,p(x_4)\,p(x_1, x_2) \tag{5}$$

which fully accounts for the objective correlation between $x_1$ and $x_2$. This example demonstrates that the maximal-overlap criterion matters for the evolution of the iterative imputation procedure.
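To see the size of the underestimation in a concrete case, the sketch below compares the covariance between the first two variables under expressions (4) and (5). The numbers are assumptions chosen for illustration only: the two variables are perfectly correlated fair coins, and the set {1, 2} is observed with probability 2/5. Exact fractions are used so that the comparison is free of rounding.

```python
from fractions import Fraction

# Assumed objective distribution over (x1, x2): perfectly correlated fair coins.
p12 = {(0, 0): Fraction(1, 2), (1, 1): Fraction(1, 2),
       (0, 1): Fraction(0), (1, 0): Fraction(0)}
Q12 = Fraction(2, 5)   # assumed probability that the observed set is {1, 2}

def marg(dist, i):
    """Marginal distribution of coordinate i."""
    out = {0: Fraction(0), 1: Fraction(0)}
    for state, prob in dist.items():
        out[state[i]] += prob
    return out

def covariance(dist):
    """Covariance of the two binary coordinates."""
    m0, m1 = marg(dist, 0), marg(dist, 1)
    e_prod = sum(prob * s[0] * s[1] for s, prob in dist.items())
    return e_prod - m0[1] * m1[1]

p1, p2 = marg(p12, 0), marg(p12, 1)

# Marginal on (x1, x2) implied by expression (4): a mixture of p and the product of marginals.
belief4 = {s: Q12 * p12[s] + (1 - Q12) * p1[s[0]] * p2[s[1]] for s in p12}
# Marginal on (x1, x2) implied by expression (5): the objective marginal itself.
belief5 = dict(p12)

print(covariance(belief5), covariance(belief4))   # prints: 1/4 1/10
```

In this example the belief in (4) retains only the fraction of the objective covariance that corresponds to the weight on direct observations of the pair, which is why skipping the maximal-overlap criterion is costly.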

2.2 Bayesian Networks

The final belief that emerges from the DM’s procedure in each of the examples

factorizes into a product of conditional probabilities. Both are instances of

a "Bayesian-network representation". I now provide a concise exposition of a

few basic concepts in the literature on Bayesian networks. The exposition is

standard - see Cowell et al. (1999) - occasionally using different terminology

that is more familiar to economists.

Let $R$ be an acyclic asymmetric binary relation over $N$. For every $i \in N$, let $R(i) = \{j \in N \mid jRi\}$. The pair $(N, R)$ can be viewed as a directed acyclic graph (DAG), where $N$ is the set of nodes, and $jRi$ means that there is a link from $j$ to $i$. I will therefore refer to elements in $N$ as "variable labels" or "nodes" interchangeably. Slightly abusing terminology, I will refer to $R$ as a DAG and I will use the notations $jRi$ and $j \to i$ interchangeably. The skeleton of $R$, denoted $\tilde{R}$, is its non-directed version - that is, $i\tilde{R}j$ if $iRj$ or $jRi$. A subset of nodes $C \subseteq N$ is a clique in $R$ if $i\tilde{R}j$ for every $i, j \in C$. A clique is maximal if it is not a strict subset of another clique. A clique $C$ is ancestral if $R(i) \subset C$ for every $i \in C$.

Fix a DAG $R$. For every objective distribution $p \in \Delta(X)$, define

$$p_R(x) \equiv \prod_{i \in N} p(x_i \mid x_{R(i)}) \tag{6}$$

The distribution $p_R$ is said to factorize $p$ according to $R$. In Example 2.1, the final belief factorizes $p$ according to a DAG that consists of disjoint cliques. In Example 2.2, the final belief factorizes $p$ according to the DAG $3 \leftarrow 1 \to 2 \to 4$. The DAG $R$ and the set of distributions that can be factorized by $R$ constitute a Bayesian network.

Different DAGs can be equivalent in terms of the distributions they factorize. For example, the DAGs $1 \to 2$ and $2 \to 1$ are equivalent, since $p(x_1)\,p(x_2 \mid x_1) \equiv p(x_2)\,p(x_1 \mid x_2)$.

Definition 1 (Equivalent DAGs) Two DAGs $R$ and $R'$ are equivalent if $p_R(x) \equiv p_{R'}(x)$ for every $p \in \Delta(X)$.

For instance, all linear orderings (i.e., transitive and anti-symmetric binary relations) are equivalent: in this case, the factorization formula (6)

reduces to the textbook chain rule, where the enumeration of the variables

is known to be irrelevant.

Verma and Pearl (1991) provided a complete characterization of equivalent DAGs, which will be useful in the sequel. Define the $v$-structure of a DAG $R$ to be the set of all triples of nodes $i, j, k$ such that $iRk$, $jRk$, and neither $iRj$ nor $jRi$.

Proposition 1 (Verma and Pearl (1991)) Two DAGs $R$ and $R'$ are equivalent if and only if they have the same skeleton and the same $v$-structure.

To illustrate this result, the DAGs $1 \to 3 \leftarrow 2$ and $1 \to 3 \to 2$ have identical skeletons but different $v$-structures. Therefore, these DAGs are not equivalent: there exist distributions that can be factorized by one DAG but not by the other. In contrast, the DAGs $1 \to 3 \to 2$ and $1 \leftarrow 3 \leftarrow 2$ are equivalent because they have the same skeleton and the same (vacuous) $v$-structure.
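The equivalence test of Proposition 1 is easy to mechanize. The sketch below is illustrative only: a DAG is assumed to be given as a dictionary mapping each node to its set of direct parents, the function names are hypothetical, and each triple of the v-structure is recorded once, with the two unlinked parents listed in increasing order.

```python
import itertools

def skeleton(parents):
    """Undirected edges of a DAG given as {node: set of direct parents}."""
    return {frozenset((j, i)) for i, pa in parents.items() for j in pa}

def v_structure(parents):
    """Triples (i, j, k) with i -> k <- j and no link between i and j."""
    skel = skeleton(parents)
    out = set()
    for k, pa in parents.items():
        for i, j in itertools.combinations(sorted(pa), 2):
            if frozenset((i, j)) not in skel:
                out.add((i, j, k))
    return out

def equivalent(a, b):
    """Same skeleton and same v-structure."""
    return skeleton(a) == skeleton(b) and v_structure(a) == v_structure(b)

collider = {1: set(), 2: set(), 3: {1, 2}}   # 1 -> 3 <- 2
chain = {1: set(), 3: {1}, 2: {3}}           # 1 -> 3 -> 2
reversed_chain = {2: set(), 3: {2}, 1: {3}}  # 1 <- 3 <- 2

print(equivalent(collider, chain))           # False
print(equivalent(chain, reversed_chain))     # True
```

The three DAGs are the ones used in the illustration above: the collider and the chain share a skeleton but differ in their v-structures, while the chain and its reversal share both.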

A certain class of DAGs will play a special role in the sequel.

Definition 2 (Perfect DAGs) A DAG $R$ is perfect if $R(i)$ is a clique for every $i \in N$.

Thus, a DAG is perfect if and only if it has a vacuous $v$-structure: for every triple of variables $i, j, k$ for which $iRk$ and $jRk$, it is the case that $i\tilde{R}j$. For instance, the DAG $3 \leftarrow 1 \to 2 \to 4$ that factorizes the final belief in Example 2.2 is perfect. Proposition 1 thus implies the following result.

Remark 1 Two perfect DAGs are equivalent if and only if they have the

same set of maximal cliques. In particular, we can set any one of these

cliques to be ancestral w.l.o.g.
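Definition 2 can also be checked mechanically. The following is a small sketch under the same assumed representation as before (a dictionary from each node to its set of direct parents); the function name `is_perfect` is hypothetical. It tests whether every pair of parents of a common child is itself linked.

```python
import itertools

def is_perfect(parents):
    """True if, for every node, its set of direct parents is a clique."""
    for pa in parents.values():
        for j, k in itertools.combinations(sorted(pa), 2):
            if j not in parents[k] and k not in parents[j]:
                return False   # two parents of a common child are not linked
    return True

example_2_2 = {1: set(), 2: {1}, 3: {1}, 4: {2}}   # 3 <- 1 -> 2 -> 4
collider = {1: set(), 2: set(), 3: {1, 2}}         # 1 -> 3 <- 2
complete = {1: set(), 2: {1}, 3: {1, 2}}           # a linear ordering

print(is_perfect(example_2_2), is_perfect(collider), is_perfect(complete))
```

The DAG from Example 2.2 and the complete DAG pass the test, while the collider fails it because its two parents are not linked.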

In what follows, I refer to $p_R$ as a DAG representation. When $R$ is perfect, I refer to $p_R$ as a perfect-DAG representation.

The DAG representation $p_R$ generally distorts the objective distribution $p$. However, certain marginal distributions are not distorted. The following proposition, which will be useful in the next section, characterizes these cases.2

Proposition 2 Let $R$ be a DAG and let $C \subseteq N$. Then, $p_R(x_C) \equiv p(x_C)$ for every $p$ if and only if $C$ is an ancestral clique in some DAG in the equivalence class of $R$.

Thus, the marginal distribution over $x_C$ induced by $p_R$ never distorts the true marginal if $C$ is an ancestral clique in $R$, or in some DAG that is equivalent to $R$. The intuition for this result can be conveyed through the causal interpretations of DAGs, and by considering a set $C$ that consists of a single node $i$. When $i$ is an ancestral node, it is a "primary cause". The belief distortions that arise from a misspecified DAG concern variables that are either independent of $x_i$ or (possibly indirect) effects of $x_i$. Therefore, these distortions are irrelevant for calculating the marginal distribution of $x_i$. In contrast, suppose that $i$ cannot be represented as an ancestral node in any DAG that is equivalent to $R$. Then, it can be shown that there must be two other variables that function as (possibly indirect) causes of $x_i$, and the DAG deems some of these variables independent of one another. This failure to account for the full dependencies among $x_i$ and its multiple causes can lead to distorting the marginal distribution over $x_i$.

2 For other properties of Bayesian-network representations, see Dawid and Lauritzen (1993).

3 The Main Results

In this section, I show that a perfect-DAG representation can always be

justified as the final output of the iterative imputation procedure. In contrast,

an imperfect-DAG representation lacks this foundation. The analysis will rely

on the following twin definitions.

Definition 3 A sequence of sets $S_1, \ldots, S_m$ satisfies the running intersection property (RIP) if for every $k = 2, \ldots, m$, $S_k \cap (\cup_{j<k} S_j) \subseteq S_i$ for some $i < k$. The collection $\mathcal{S}$ satisfies RIP* if its elements can be ordered in a sequence that satisfies RIP.

Thus, a sequence of sets satisfies RIP if the intersection between any set along the sequence and the union of its predecessors is weakly contained in one of these predecessors. We will be interested in whether $\mathcal{S}$ (the support of the missing-values process $Q$) satisfies RIP*. The property holds trivially when the sequence consists of two sets. The supports of $Q$ in Examples 2.1 and 2.2 satisfy RIP* - vacuously in the former case, and by the sequence $\{1, 3\}, \{1, 2\}, \{2, 4\}$ in the latter. In contrast, the collection $\mathcal{S} = \{\{1, 3\}, \{1, 2\}, \{2, 3\}\}$ violates RIP*.
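For small collections, the running intersection property can be verified by brute force. The sketch below is illustrative and assumes that sets are given as Python sets of integer labels; the function names are hypothetical, and the test for RIP* simply tries every ordering, which is only practical for a handful of sets.

```python
import itertools

def satisfies_rip(seq):
    """RIP for a given ordering: each set's overlap with the union of its
    predecessors must be contained in a single predecessor."""
    for k in range(1, len(seq)):
        union = set().union(*seq[:k])
        inter = set(seq[k]) & union
        if not any(inter <= set(prev) for prev in seq[:k]):
            return False
    return True

def satisfies_rip_star(collection):
    """RIP*: some ordering of the collection satisfies RIP."""
    return any(satisfies_rip(list(order))
               for order in itertools.permutations(collection))

example_2_2 = [{1, 3}, {1, 2}, {2, 4}]
triangle = [{1, 3}, {1, 2}, {2, 3}]
partition = [{1, 2}, {3}, {4, 5}]

print(satisfies_rip(example_2_2))        # True
print(satisfies_rip_star(triangle))      # False
print(satisfies_rip_star(partition))     # True
```

The triangle fails under every ordering because the last set always overlaps the union of the first two in both of its elements, and no single predecessor contains both.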

In what kind of missing-values processes should we expect RIP∗ to hold?

The following are two natural cases.

Example 3.1. Consider situations (like the motivating example of the Introduction) in which there is a set of "public variables" that are always observed, as well as "private variables" whose observability is mutually exclusive. Formally, there is a set $P \subset N$ such that $\mathcal{S} = \{P \cup \{i\}\}_{i \in N - P}$. Any ordering of $\mathcal{S}$ satisfies RIP.

Example 3.2. Suppose that $\mathcal{S}$ consists of all "intervals" of length $L - 1$ - that is,

$$\mathcal{S} = \{\{1, \ldots, L\}, \{2, \ldots, L + 1\}, \ldots, \{n - L + 1, \ldots, n\}\}$$

This is a natural assumption when different $x_i$’s indicate values of a single variable at different time periods $i$, and observations of the variable consist of lagged realizations with bounded memory $L - 1$. The above ordering of the sets in $\mathcal{S}$ satisfies RIP, because the intersection of each set with the union of all its predecessors is weakly contained in its immediate predecessor.

RIP is a familiar property in the Bayesian-network literature (see Cowell

et al. (1999, p. 54)), because of its relation to the notion of perfect DAGs.

Remark 2 (Cowell et al. (1999, p. 54)) The set of maximal cliques in

any perfect DAG satisfies RIP*.

We are now ready to state the main results of this paper.

Proposition 3 Suppose that $\mathcal{S}$ satisfies RIP*. Then, the iterative imputation procedure produces a final belief with a perfect-DAG representation, where the set of maximal cliques in the DAG is $\mathcal{S}$.

Thus, when $\mathcal{S}$ satisfies RIP*, $q^{m-1}$ factorizes any objective distribution according to a perfect DAG whose set of maximal cliques is $\mathcal{S}$. Since all perfect DAGs with the same set of maximal cliques are equivalent, the DAG representation of the DM’s final belief is essentially unique. In particular, it is independent of the arbitrary selections that the procedure involves along the way (the initial condition $B^0$, tie breaking when there are multiple ways to respect the "maximal overlap" criterion in Step 1 of some round).

The RIP plays a subtle role in the proof of Proposition 3. Recall that

RIP* states that the sets in S can be ordered in some sequence that will

satisfy RIP. However, the order in which the iterative imputation procedure

considers the sets in S (as the DM "rectangularizes" increasingly large parts

of the dataset) is governed by the maximal-overlap criterion of Step 1 in each

round. A priori, such a sequence need not satisfy RIP. However, a lemma

due to Noga Alon (Alon (2014)) ensures that it does.

Illustrations

Let us illustrate the economic meaning of Proposition 3, by revisiting the two economic examples we provided for datasets that satisfy RIP*. In Example 3.1, the iterative imputation procedure produces a final belief with a perfect-DAG representation, which can be written as follows:

$$q^{m-1}(x) = p(x_P) \cdot \prod_{i \in N - P} p(x_i \mid x_P)$$

Thus, the DM ends up considering all "private variables" to be independently distributed, conditional on the "public variables".

As to Example 3.2, the iterative imputation procedure produces a final belief with a perfect-DAG representation, which can be written as follows:

$$q^{m-1}(x) = p(x_1, \ldots, x_{L-1}) \cdot \prod_{i=L}^{n} p(x_i \mid x_{i-1}, \ldots, x_{i-L+1})$$

If we interpret $x_i$ as the value of some variable at time period $i$, then $q^{m-1}$ reads exactly like a serially correlated process with memory $L - 1$.

The two examples show how natural structures of subjective belief can emerge as extrapolations from equally natural specifications of the DM’s incomplete dataset. Spiegler (2014) contains numerous additional examples of perfect-DAG representations in concrete economic settings. Moreover, some of these examples are abstracted from existing models of non-rational expectations in the literature. Proposition 3 can thus be viewed as a limited-feedback foundation for these models, too.

The next result is a converse to Proposition 3: if $\mathcal{S}$ violates RIP*, the iterative imputation procedure produces a final belief that lacks a DAG representation, in the following sense: there exists no DAG $R$ such that $q^{m-1}$ factorizes all objective distributions according to $R$.

Proposition 4 Suppose that $\mathcal{S}$ violates RIP*. Then, for every DAG $R$ there exists a (positive-measure) set of objective distributions $p$ such that $q^{m-1}(x) \neq p_R(x)$ for some $x$.

Propositions 3 and 4 imply that among all DAG representations, only

those that involve perfect DAGs have a "missing data" foundation, as articulated by the following corollary.

Corollary 1 (i) If $R$ is perfect, then any missing-values process $Q$ whose support consists of the maximal cliques in $R$ has the following property: for every objective distribution $p$, applying the iterative imputation procedure to the dataset $(p, Q)$ will generate the final belief $q^{m-1} = p_R$. (ii) If $R$ is not perfect, then for any missing-values process $Q$, there is an objective distribution $p$ such that applying the iterative imputation procedure to the dataset $(p, Q)$ will generate a final belief $q^{m-1} \neq p_R$.

The first part of the corollary follows directly from Remark 2: the set of maximal cliques of a perfect DAG satisfies RIP*. The second part says that imperfect-DAG representations cannot be justified by the iterative imputation procedure; in other words, they cannot be "inferred" from the data. For example, consider the DAG 1 → 3 ← 2. For any $q$, we can find $p$ such that applying the iterative imputation procedure to the dataset $(p, q)$ will give rise to a final belief $p^{m-1}$ such that $p^{m-1}(x) \neq p(x_1)p(x_2)p(x_3 \mid x_1, x_2)$ for some $x$.

Causal inferences

Pearl (2000) advocated a causal interpretation of DAG representations, and

turned them into a powerful formalism for systematic causal reasoning. According to this view, the DAG represents a fundamental causal structure that underlies the statistical regularities given by $p$, and the directed edge $i \to j$ means that the variable $x_i$ is considered to be a direct cause of variable $x_j$. The conditional-independence properties captured by $R$ are thus a consequence of the underlying causal structure.

In the context of the "analyst" example that opened this paper, imagine

that our analyst is tempted to draw causal inferences from the statistical

relations in his rectangularized spreadsheet. (I say "tempted" because he

should have been wary of imposing causal interpretations on purely statistical

data.) Are firms price takers responding to exogenous price changes? Or is

the product price a consequence of firms’ independent quantity choices, as in

Cournot duopoly? A priori, both theories are plausible, hence causality could

run in both directions. However, the Bayesian-network representation (1)

favors the "price taking" theory: price is a primary cause and quantities are

conditionally independent consequences. This causal theory can be described

by the DAG $R$: 2 ← 1 → 3. It is empirically distinct from the "quantity competition" theory, described by the DAG $R'$: 2 → 1 ← 3, in the sense that $R$ and $R'$ are not equivalent. Thus, the analyst’s willingness to draw

causal inferences from his incomplete dataset has led him to favor a causal

story that regards the publicly observed variable as a primary cause, and to

dismiss a causal story that regards it as a final consequence.

At the same time, the analyst’s causal inferences in this example are not

robust, because $R$ is equivalent to the DAGs 2 → 1 → 3 and 3 → 1 → 2.

Thus, the causal links in the Bayesian-network representation of his final

belief are not pinned down. Corollary 1 implies that this is a general property.

In a perfect DAG, we can select any maximal clique to be ancestral, w.l.o.g.

Therefore, a causal interpretation of the DAG means that every variable can

be viewed as a primary cause. If there is a directed path from $i$ to $j$ in some

perfect DAG, then there is an equivalent DAG in which the path is entirely

inverted. In this sense, the beliefs that the iterative imputation procedure

extrapolates from datasets lack a meaningful causal interpretation.
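The equivalence claims in the analyst example can be checked mechanically with the criterion invoked in the appendix (two DAGs are equivalent when they share the same skeleton and the same v-structures). The following is a minimal Python sketch under that criterion; the function names and the edge encoding `(i, j)` for a link i → j are illustrative assumptions, not notation from the paper.

```python
from itertools import combinations

def skeleton(edges):
    """Undirected version of a set of directed edges (i, j), read as i -> j."""
    return {frozenset(e) for e in edges}

def v_structures(edges):
    """Triples (i, child, j) with i -> child <- j where i and j are not linked."""
    skel = skeleton(edges)
    parents = {}
    for i, j in edges:
        parents.setdefault(j, set()).add(i)
    found = set()
    for child, pa in parents.items():
        for i, j in combinations(sorted(pa), 2):
            if frozenset((i, j)) not in skel:
                found.add((i, child, j))
    return found

def equivalent(edges_a, edges_b):
    """Same skeleton and same v-structures."""
    return (skeleton(edges_a) == skeleton(edges_b)
            and v_structures(edges_a) == v_structures(edges_b))

price_taking = {(1, 2), (1, 3)}   # 2 <- 1 -> 3
chain_a = {(2, 1), (1, 3)}        # 2 -> 1 -> 3
chain_b = {(3, 1), (1, 2)}        # 3 -> 1 -> 2
cournot = {(2, 1), (3, 1)}        # 2 -> 1 <- 3

print(equivalent(price_taking, chain_a))  # True
print(equivalent(price_taking, chain_b))  # True
print(equivalent(price_taking, cournot))  # False
```

The collider 2 → 1 ← 3 is the only one of the four graphs with a v-structure, which is why the "quantity competition" theory is empirically distinct while the direction of the links in the other three graphs is not pinned down.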

An alternative termination criterion

A natural variant on the imputation procedure would terminate it in the earliest round $k$ for which $B^k = N$. In other words, the DM would stop as soon

as his "edited" spreadsheet has an infinite set of rows for which no cell has

a missing value, instead of trying to rectangularize the entire spreadsheet.

When RIP* is satisfied, this variation would not make any difference (in

particular, by the assumption that S does not include sets that contain one

another, the alternative procedure would terminate in exactly $m-1$ rounds).

When RIP* is violated, it is possible that under the alternative termination

criterion, the imputation procedure will culminate in a belief with a DAG

representation. However, the DAG will not be essentially unique, as it will

depend on arbitrary selections made at various stages of the procedure. For

instance, let $N = \{1, 2, 3\}$ and $\mathcal{S} = \{\{1, 2\}, \{1, 3\}, \{2, 3\}\}$. The alternative procedure will terminate after one round, leading to a belief with a perfect-DAG representation $i \leftarrow j \to k$, where $(i, j, k)$ can be any permutation of $(1, 2, 3)$.
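To illustrate why the resulting DAG is not essentially unique, the sketch below computes the one-round belief for different selections of the first two sets, using the round-one formula from the proof of Proposition 3 (the joint distribution over the first set times the conditional distribution of the newly added variable given the overlap). The numerical distribution, the helper names, and the zero-based positions 0, 1, 2 standing for variables 1, 2, 3 are assumptions made for illustration only.

```python
from itertools import product

def marginal(p, idx):
    """Marginal of a joint p (dict: state tuple -> prob) on positions idx."""
    out = {}
    for x, pr in p.items():
        key = tuple(x[i] for i in idx)
        out[key] = out.get(key, 0.0) + pr
    return out

def one_round_belief(p, s0, s1):
    """p(x_{S0}) * p(x_{S1 - S0} | x_{S1 & S0}), written as p(x_S0) p(x_S1) / p(x_overlap)."""
    common = tuple(sorted(set(s0) & set(s1)))
    m0, m1, mc = marginal(p, s0), marginal(p, s1), marginal(p, common)
    belief = {}
    for x in p:
        a = m0[tuple(x[i] for i in s0)]
        b = m1[tuple(x[i] for i in s1)]
        c = mc[tuple(x[i] for i in common)]
        belief[x] = a * b / c
    return belief

# An arbitrary full-support distribution over three binary variables (illustrative).
states = list(product((0, 1), repeat=3))
p = {x: w / 36 for x, w in zip(states, [1, 2, 3, 4, 5, 6, 7, 8])}

hub1 = one_round_belief(p, (0, 1), (0, 2))  # 2 <- 1 -> 3
hub2 = one_round_belief(p, (0, 1), (1, 2))  # 1 <- 2 -> 3
hub3 = one_round_belief(p, (0, 2), (1, 2))  # 1 <- 3 -> 2

gap12 = max(abs(hub1[x] - hub2[x]) for x in states)
gap13 = max(abs(hub1[x] - hub3[x]) for x in states)
print(gap12, gap13)
```

Each belief reproduces the two pairwise marginals it was built from, yet the three beliefs differ from one another, so the final belief depends on which sets happen to be selected.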

4 Relation to the MaxEnt Problem

I have introduced a "behaviorally motivated" procedure for extending a

dataset with missing values into a fully specified probability distribution over $X$, and characterized when this procedure generates a DAG representation.

One could consider other, more "normatively motivated" extension criteria.

One such criterion is maximal entropy. Suppose the DM faces the dataset $(p, q)$ and derives from it the marginal distributions of $p$ over $x_S$, $S \in \mathcal{S}$. The DM’s problem is to find a probability distribution $r \in \Delta(X)$ that maximizes entropy subject to the constraint that $r(x_S) \equiv p(x_S)$ for every $S \in \mathcal{S}$. A

more general version of this problem was originally stated by Jaynes (1957)

and has been studied in the machine learning literature, where it is known

as the MaxEnt problem.

The maximal-entropy criterion generalizes the "principle of insufficient

reason" (recall that unconstrained entropy maximization yields the uniform

distribution). The idea behind it is that the DM wishes to be "maximally

agnostic" about the aspects of the distributions he has not learned, while

being entirely consistent with the aspects he has learned. For instance, suppose that the DM only manages to learn the marginal distributions over all individual variables, i.e., $\mathcal{S} = \{\{1\}, \ldots, \{n\}\}$. Then, the maximal-entropy extension of these marginals is $p(x_1) \cdots p(x_n)$, which is consistent with an empty-DAG representation.
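As a small numerical illustration of the single-variable case, the sketch below builds the product of the one-dimensional marginals of an arbitrary joint distribution and compares entropies. It only shows that the product distribution has the same marginals and a higher entropy than the particular joint it was derived from; it does not prove maximality. The distribution and helper names are illustrative assumptions.

```python
import math
from itertools import product

def marginal(p, idx):
    """Marginal of a joint p (dict: state tuple -> prob) on positions idx."""
    out = {}
    for x, pr in p.items():
        key = tuple(x[i] for i in idx)
        out[key] = out.get(key, 0.0) + pr
    return out

def entropy(p):
    """Shannon entropy (natural log) of a distribution given as a dict."""
    return -sum(v * math.log(v) for v in p.values() if v > 0)

# An arbitrary correlated distribution over three binary variables (illustrative).
states = list(product((0, 1), repeat=3))
p = {x: w / 36 for x, w in zip(states, [1, 2, 3, 4, 5, 6, 7, 8])}

# Product of the single-variable marginals: the empty-DAG factorization.
singles = [marginal(p, (i,)) for i in range(3)]
indep = {x: singles[0][(x[0],)] * singles[1][(x[1],)] * singles[2][(x[2],)]
         for x in states}

print(entropy(p), entropy(indep))
```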

The following is an existing result, reformulated to suit our present purposes.

Proposition 5 (Hajek et al. (1992)) Suppose that $R$ is perfect. Then, $p_R$ is a maximal-entropy extension of the marginals of $p$ over all $x_C$, where $C$ is a maximal clique in $R$.

This result establishes a connection between the iterative imputation procedure and the MaxEnt problem: the former can be viewed as an algorithm

for implementing the latter whenever S satisfies RIP∗ . This is a partial,

quasi-normative justification for the iterative imputation procedure.
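The connection can be illustrated on the perfect DAG 1 → 2 → 3, whose maximal cliques are {1, 2} and {2, 3}. The sketch below uses iterative proportional fitting (IPF) started from the uniform distribution, a standard way of computing the maximum-entropy distribution subject to marginal constraints, and compares the result with the factorization p(x1, x2) p(x3 | x2). IPF is a technique swapped in here for illustration; it is not the paper's imputation procedure, and the distribution and function names are assumptions.

```python
from itertools import product

def marginal(p, idx):
    """Marginal of a joint p (dict: state tuple -> prob) on positions idx."""
    out = {}
    for x, pr in p.items():
        key = tuple(x[i] for i in idx)
        out[key] = out.get(key, 0.0) + pr
    return out

def ipf(target, cliques, sweeps=5):
    """Fit the clique marginals of `target`, starting from the uniform distribution."""
    states = list(target)
    q = {x: 1.0 / len(states) for x in states}
    for _ in range(sweeps):
        for c in cliques:
            tm, qm = marginal(target, c), marginal(q, c)
            q = {x: q[x] * tm[tuple(x[i] for i in c)] / qm[tuple(x[i] for i in c)]
                 for x in states}
    return q

# An arbitrary full-support distribution over three binary variables (illustrative).
states = list(product((0, 1), repeat=3))
p = {x: w / 36 for x, w in zip(states, [1, 2, 3, 4, 5, 6, 7, 8])}

# Factorization according to 1 -> 2 -> 3 (positions 0, 1, 2).
p12, p23, p2 = marginal(p, (0, 1)), marginal(p, (1, 2)), marginal(p, (1,))
p_R = {x: p12[(x[0], x[1])] * p23[(x[1], x[2])] / p2[(x[1],)] for x in states}

fitted = ipf(p, [(0, 1), (1, 2)])
gap = max(abs(fitted[x] - p_R[x]) for x in states)
print(gap)
```

In this example the fitted distribution coincides with the perfect-DAG factorization up to floating-point error, in line with the proposition.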

5 Conclusion

This paper presented an example of a broad research program: establishing

a "limited learning feedback" foundation for representations of "boundedly

rational expectations". The basic idea is that DMs extrapolate their belief

from some feedback they receive about a prevailing probability distribution.

The form of their belief, and the systematic biases it may exhibit in relation to the true distribution, will reflect the structure of their feedback and the method of extrapolation they employ. In this paper, limited

feedback took the form of an infinitely large sample subjected to an independent missing-values process; the extrapolation method was defined by the

iterative imputation procedure; and under some condition on the feedback

(RIP∗ ), the resulting belief had an essentially unique Bayesian-network representation, from which aspects of the underlying limited feedback could be

inferred. The same condition ensured that another extrapolation method,

namely MaxEnt, would be equivalent. As I demonstrate in Spiegler (2014),

the belief distortions inherent in this representation of subjective beliefs have

interesting implications for individual behavior.

This view of our exercise suggests natural directions for extension. First,

it may be interesting to explore generalizations of the Bayesian-network

representation, such as convex combinations of DAG representations, or

graphs with both directed and non-directed links. Second, the link to the

literature on statistical inference with missing data (see Little and Rubin

(2002) for a textbook treatment) can be strengthened. The class of missing-value processes that I have assumed is known in this literature as Missing-Completely-at-Random (MCAR). Step 2 in each round of the imputation procedure is a "non-parametric cousin" of the so-called "stochastic regression" technique. The missing-data aspect can be developed in various ways: the processes $p$ and $q$ may be correlated; the DM’s sample may be finite; and it may consist of passive observational data and active, deliberate "experiments". Finally, alternative methods of extrapolation can be considered.

The link between learning feedback and non-rational expectations was

studied in two recent papers. Esponda and Pouzo (2014a) proposed a general game-theoretic model, in which each player has a "subjective model",

which is a set of stochastic mappings from his action to a primitive set of

payoff-relevant consequences he observes during his learning process. This

learning feedback is limited because in the true model, other "latent" variables may affect the action-consequence mapping. Esponda and Pouzo define

an equilibrium concept, in which each player best-replies to a subjective distribution (of conditional on ), which is the closest in his subjective model

to the true equilibrium distribution. Distance is measured by a weighted

version of Kullback-Leibler divergence. Esponda and Pouzo justify this equilibrium concept as the steady-state of a Bayesian learning process.

Schwartzstein (2014) studied a dynamic model in which a DM tries to

predict a variable $y$ as a function of two variables $x$ and $z$. At every period, he observes the realizations of $x$ and $y$. In contrast, he pays attention to the realization of $z$ only if his belief at the beginning of the period is that $z$ is sufficiently predictive of $y$. When the DM chooses not to observe $z$, he

imputes a constant value. Schwartzstein examines the long-run belief that

emerges from this learning process, and in particular the DM’s failure to

perceive correlations among the three variables.

References

[1] Alon, N. (2014), “Problems and Results in Extremal Combinatorics III,” Tel Aviv University, mimeo.

[2] Cowell, R., P. Dawid, S. Lauritzen and D. Spiegelhalter (1999), Probabilistic Networks and Expert Systems, Springer, London.

[3] Dawid, P. and S. Lauritzen (1993), “Hyper Markov Laws in the Statistical Analysis of Decomposable Graphical Models,” Annals of Statistics, 21, 1272-1317.

[4] Esponda, I. (2008), “Behavioral Equilibrium in Economies with Adverse Selection,” The American Economic Review, 98, 1269-1291.

[5] Esponda, I. and D. Pouzo (2014a), “An Equilibrium Framework for Modeling Bounded Rationality,” mimeo.

[6] Esponda, I. and D. Pouzo (2014b), “Conditional Retrospective Voting in Large Elections,” mimeo.

[7] Evans, G. and S. Honkapohja (2001), Learning and Expectations in Macroeconomics, Princeton University Press, Princeton.

[8] Eyster, E. and M. Piccione (2013), “An Approach to Asset Pricing Under Incomplete and Diverse Perceptions,” Econometrica, 81, 1483-1506.

[9] Eyster, E. and M. Rabin (2005), “Cursed Equilibrium,” Econometrica, 73, 1623-1672.

[10] Gilboa, I. (2014), “Rationality and the Bayesian Paradigm,” mimeo.

[11] Hajek, P., T. Havranek and R. Jirousek (1992), Uncertain Information Processing in Expert Systems, CRC Press.

[12] Jaynes, E. T. (1957), “Information Theory and Statistical Mechanics,” Physical Review, 106, 620-630.

[13] Jehiel, P. (2005), “Analogy-Based Expectation Equilibrium,” Journal of Economic Theory, 123, 81-104.

[14] Jehiel, P. and F. Koessler (2008), “Revisiting Games of Incomplete Information with Analogy-Based Expectations,” Games and Economic Behavior, 62, 533-557.

[15] Little, R. and D. Rubin (2002), Statistical Analysis with Missing Data, Wiley, New Jersey.

[16] Osborne, M. and A. Rubinstein (1998), “Games with Procedurally Rational Players,” American Economic Review, 88, 834-849.

[17] Pearl, J. (2000), Causality: Models, Reasoning and Inference, Cambridge University Press, Cambridge.

[18] Piccione, M. and A. Rubinstein (2003), “Modeling the Economic Interaction of Agents with Diverse Abilities to Recognize Equilibrium Patterns,” Journal of the European Economic Association, 1, 212-223.

[19] Sargent, T. (2001), The Conquest of American Inflation, Princeton University Press, Princeton.

[20] Schwartzstein, J. (2014), “Selective Attention and Learning,” Journal of the European Economic Association, 12, 1423-1452.

[21] Spiegler, R. (2014), “Bayesian Networks and Boundedly Rational Expectations,” mimeo.

[22] Verma, T. and J. Pearl (1991), “Equivalence and Synthesis of Causal Models,” Uncertainty in Artificial Intelligence, 6, 255-268.

[23] Woodford, M. (2013), “Macroeconomic Analysis without the Rational Expectations Hypothesis,” Annual Review of Economics, forthcoming.

Appendix: Proofs

Proposition 2

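For convenience, label the variables in $C$ by $1, \ldots, m$. Let us write down the explicit expression for $p_R(x_C)$:

$$p_R(x_C) = \sum_{x'_{m+1}, \ldots, x'_n} p_R(x_1, \ldots, x_m, x'_{m+1}, \ldots, x'_n) = \sum_{x'_{m+1}, \ldots, x'_n} \prod_{i \in C} p(x_i \mid x_{R(i) \cap C}, x'_{R(i) - C}) \prod_{i \notin C} p(x'_i \mid x_{R(i) \cap C}, x'_{R(i) - C}) \tag{7}$$

(i). Assume $C$ is an ancestral clique in $R$. Then,

$$\prod_{i \in C} p(x_i \mid x_{R(i) \cap C}, x'_{R(i) - C}) = p(x_1) \prod_{i=2}^{m} p(x_i \mid x_1, \ldots, x_{i-1}) = p(x_C)$$

Expression (7) can thus be written as

$$p(x_C) \sum_{x'_{m+1}, \ldots, x'_n} \left( \prod_{i=m+1}^{n} p(x'_i \mid x_{R(i) \cap C}, x'_{R(i) - C}) \right) = p(x_C)$$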

(ii). Let us distinguish between two cases.

Case 1: $C$ is not a clique in $R$. Then, $C$ contains two variables, labeled w.l.o.g. 1 and 2, such that neither $1R2$ nor $2R1$. Consider an objective distribution $p$, for which every $x_i$, $i > 2$, is distributed independently, whereas $x_1$ and $x_2$ are mutually correlated. Then, expression (7) is simplified into

$$\prod_{i=1}^{m} p(x_i) \sum_{x'_{m+1}, \ldots, x'_n} \prod_{i=m+1}^{n} p(x'_i) = \prod_{i=1}^{m} p(x_i)$$

whereas the objective distribution can be written as

$$p(x_C) = p(x_1) p(x_2 \mid x_1) \prod_{i=3}^{m} p(x_i)$$

The two expressions are different because $x_2$ and $x_1$ are not independent.
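A small numerical instance of Case 1 may help. The sketch below takes the DAG 1 → 3 ← 2, in which nodes 1 and 2 are not linked, and an objective distribution in which the first two variables are correlated while the third is independent. It then compares the true marginal over the first two variables with the marginal implied by the DAG factorization. The numbers, the helper names, and the zero-based positions 0, 1, 2 for variables 1, 2, 3 are illustrative assumptions.

```python
from itertools import product

def marginal(p, idx):
    """Marginal of a joint p (dict: state tuple -> prob) on positions idx."""
    out = {}
    for x, pr in p.items():
        key = tuple(x[i] for i in idx)
        out[key] = out.get(key, 0.0) + pr
    return out

def factorize(p, parents):
    """Product over nodes i of p(x_i | x_parents(i)), with parents given by position."""
    belief = {}
    for x in p:
        value = 1.0
        for i, pa in parents.items():
            joint = marginal(p, pa + (i,))[tuple(x[j] for j in pa) + (x[i],)]
            cond = marginal(p, pa)[tuple(x[j] for j in pa)]
            value *= joint / cond
        belief[x] = value
    return belief

# First two variables correlated, third variable independent (illustrative numbers).
p12 = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}
p3 = {0: 0.3, 1: 0.7}
p = {(a, b, c): p12[(a, b)] * p3[c] for a, b, c in product((0, 1), repeat=3)}

collider = {0: (), 1: (), 2: (0, 1)}  # 1 -> 3 <- 2
p_R = factorize(p, collider)

true_value = marginal(p, (0, 1))[(0, 0)]
dag_value = marginal(p_R, (0, 1))[(0, 0)]
print(true_value, dag_value)
```

Under the factorization the two unlinked variables are treated as independent, so the implied probability of the state (0, 0) is the product of the two marginals rather than the true joint probability.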

Case 2: $C$ is a clique which is not ancestral in any DAG in the equivalence class of $R$. Suppose that for every node $i \in C$, $i$ has no "unmarried parents", i.e., if there exist nodes $j, j'$ such that $jRi$ and $j'Ri$, then $jRj'$ or $j'Rj$. In addition, if there is a directed path from some $j \notin C$ to $i$, then $j$ has no unmarried parents either. Transform $R$ into another DAG $R'$ by inverting every link along every such path. The DAGs $R$ and $R'$ share the same skeleton and $v$-structure. By Proposition 1, they are equivalent. By construction, $C$ is an ancestral clique in a DAG that is equivalent to $R$, a contradiction.

It follows that $R$ has the following structure. First, there exist three distinct nodes, denoted w.l.o.g. 1, 2, 3, such that $1, 2 \notin C$, $1R3$, $2R3$, and neither $1R2$ nor $2R1$. Second, there is a directed path from 3 to some node $k \in C$, $k \geq 3$. For convenience, denote the path by $(3, 4, \ldots, k)$, i.e., the immediate predecessor of any $i > 3$ along the path is $i - 1$. It is possible that $k = 3$, in which case the path is degenerate. W.l.o.g., we can assume that $i \notin C$ for every $i \neq k$ along this path (otherwise, we can take $k$ to be the lowest-numbered node that belongs to $C$ along the path).

Consider any which is consistent with a DAG ∗ that has the following

structure: first, 1∗ 2∗ 3 and 1∗ 3; second, for every ∈ {4 }, ∗ () =

{2 }. (Note that the latter property

{ −1}; and ∗ () = ∅ for every ∈

{2 } is independently distributed. Then,

means that every , ∈

() = ∗ () = (1 )(2 | 1 )(3 | 1 2 ) ·

Y

=4

( | −1 ) ·

Y

4

( )

In contrast,

() = (1 )(2 )(3 | 1 2 ) ·

Y

=4

( | −1 ) ·

Y

( )

4

By definition, every = 4 − 1 does not belong to . Denote

(0 ) = (01 )(03 | 01 02 )

Ã−1

Y

=4

!

(0 | 0−1 ) ( | 0−1 )

Therefore,

( ) =

Y

( )

0

∈−{}

( ) =

Y

X

( )

(02 | 01 )(0 )

X

(02 )(0 )

01 0−1

∈−{}

It is easy to see from these expressions that we can find a distribution which

is consistent with ∗ such that ( ) 6= ( ) for some .

Proposition 3

I begin with a lemma due to Noga Alon. A sequence of sets $S^0, S^1, \ldots, S^K$ is expansive if for every $k \geq 1$, $|S^k \cap (\cup_{j<k} S^j)| \geq |S^h \cap (\cup_{j<k} S^j)|$ for all $h > k$.

Lemma (Alon (2014)). Suppose that $\mathcal{S}$ satisfies RIP*. Then, every expansive ordering of $\mathcal{S}$ satisfies RIP.

Suppose that $\mathcal{S}$ satisfies RIP*. Consider the sequence of sets $S^k$ that are introduced in Step 1 of each round $k$. By Step 1 of the procedure, the sequence $S^0, S^1, \ldots, S^{m-1}$ is expansive. By the lemma, it satisfies RIP. My task is to show that for every $p$ and every round $k = 1, \ldots, m-1$, the belief $p^k \in \Delta(X_{B^k})$ has a perfect-DAG representation, where the DAG is defined over $B^k$, and its set of maximal cliques is $\{S^0, S^1, \ldots, S^k\}$. The proof is by induction on $k$.

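Let $k = 1$. Recall that $B^1 = S^0 \cup S^1$. By assumption, $\mathcal{S}$ does not include sets that contain one another. Therefore, $S^1 - S^0$ and $S^0 - S^1$ are non-empty. The auxiliary beliefs $p^1_1$ and $p^1_2$ defined over $B^1$ are given by

$$p^1_1(x_{B^1}) = p(x_{S^0})\, p(x_{S^1 - S^0} \mid x_{S^1 \cap S^0})$$

$$p^1_2(x_{B^1}) = p(x_{S^1})\, p(x_{S^0 - S^1} \mid x_{S^1 \cap S^0})$$

By the basic rules of conditional probability, we have

$$p(x_{S^0})\, p(x_{S^1 - S^0} \mid x_{S^1 \cap S^0}) = p(x_{S^1})\, p(x_{S^0 - S^1} \mid x_{S^1 \cap S^0})$$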

and therefore 1 is consistent with a DAG 1 defined over 1 , where ̃1

/ 1 for every ∈ 0 ∈ 1 . The

if and only if ∈ 0 or ∈ 1 ; and

DAG 1 has two maximal cliques, 0 and 1 .

Consider the initial condition $(B^{k-1}, p^{k-1})$ of any round $k > 1$. The auxiliary beliefs $p^k_1$ and $p^k_2$ over $B^k = B^{k-1} \cup S^k$ are given by

$$p^k_1(x_{B^k}) = p^{k-1}(x_{B^{k-1}})\, p(x_{S^k - B^{k-1}} \mid x_{S^k \cap B^{k-1}}) \tag{8}$$

$$p^k_2(x_{B^k}) = p(x_{S^k})\, p^{k-1}(x_{B^{k-1} - S^k} \mid x_{S^k \cap B^{k-1}})$$

Consider the expression for $p^k_1$. The inductive hypothesis is that $p^{k-1}$ has a perfect-DAG representation, where the DAG $R^{k-1}$ is defined over $B^{k-1}$, and its set of maximal cliques is $\{S^0, S^1, \ldots, S^{k-1}\}$. By RIP, $S^k \cap B^{k-1}$ is weakly contained in one of the sets $S^0, S^1, \ldots, S^{k-1}$. Extend $R^{k-1}$ to a DAG $R^k$ over $B^k$, simply by adding a directed link between every $i, j \in S^k$ (without destroying acyclicity). The DAG $R^k$ is perfect, and its set of maximal cliques is $\{S^0, S^1, \ldots, S^k\}$. Thus, $p^k_1$ is a perfect-DAG representation, where the DAG is $R^k$.

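To complete the proof, I will show that $p^k_2$ coincides with $p^k_1$. Note that $p^k_1$ and $p^k_2$ can be written as

$$p^k_1(x_{B^k}) = p(x_{S^k})\, p^{k-1}(x_{B^{k-1}}) \cdot \frac{1}{p(x_{S^k \cap B^{k-1}})}$$

$$p^k_2(x_{B^k}) = p(x_{S^k})\, p^{k-1}(x_{B^{k-1}}) \cdot \frac{1}{p^{k-1}(x_{S^k \cap B^{k-1}})} \tag{9}$$

Since $p^{k-1}$ is a perfect-DAG representation, where the DAG is $R^{k-1}$, and $S^k \cap B^{k-1}$ is a clique in $R^{k-1}$, Remark 1 implies that w.l.o.g. it is an ancestral clique. Proposition 2 then implies that $p^{k-1}(x_{S^k \cap B^{k-1}}) \equiv p(x_{S^k \cap B^{k-1}})$. Therefore, $p^k_2$ coincides with $p^k_1$.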

Proposition 4

Suppose that $\mathcal{S}$ violates RIP*. Then, any ordering of its sets will violate RIP. Note that this means $m \geq 3$. Recall that the sequence $S^0, S^1$ trivially satisfies RIP. Let $k > 1$ be the earliest round for which $S^k \cap B^{k-1}$ is not weakly contained in any of the sets $S^0, S^1, \ldots, S^{k-1}$. By the proof of Proposition 3, $p^{k-1} \in \Delta(X_{B^{k-1}})$ is a perfect-DAG representation, where the perfect DAG $R^{k-1}$, defined over $B^{k-1}$, is characterized by the set of maximal cliques $\{S^0, S^1, \ldots, S^{k-1}\}$. It follows that $S^k \cap B^{k-1}$ is not a clique in $R^{k-1}$. By Proposition 2, there exist distributions $p$ for which $p^{k-1}(x_{S^k \cap B^{k-1}}) \neq p(x_{S^k \cap B^{k-1}})$. Therefore, by (9), there exists $p$ for which $p^k_1$ and $p^k_2$ do not coincide. I now construct a family of such distributions. Since $S^k \cap B^{k-1}$ is not a clique in $R^{k-1}$, it must contain two nodes, denoted w.l.o.g. 1 and 2, that are not linked by $R^{k-1}$. Let $p$ be an arbitrary objective distribution for which $x_i$ is independently distributed for every $i > 2$, whereas $x_1$ and $x_2$ are mutually correlated. Then,

$$p^{k-1}(x_{B^{k-1}}) = \prod_{i \in B^{k-1}} p(x_i)$$

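It follows that $p^k_1$ and $p^k_2$ can be written as

$$p^k_1(x_{B^k}) = p(x_1) p(x_2) \cdot \prod_{i \in B^k - \{1, 2\}} p(x_i)$$

$$p^k_2(x_{B^k}) = p(x_1) p(x_2 \mid x_1) \cdot \prod_{i \in B^k - \{1, 2\}} p(x_i)$$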

such that

⎛

( ) = ⎝

Y

∈ −{2}

⎞ ÃP

!

(

)

)

(

P≤−1 (2 ) + P

(2 | 1 )

( )⎠ ·

≤ ( )

≤ ( )

Since all the variables $x_i$, $i \in N - \{1, 2\}$, are independently distributed under $p$, the continuation of the iterative imputation procedure will eventually produce a final belief of the form

$$p^{m-1}(x) = \left( \prod_{i \neq 2} p(x_i) \right) \cdot \left[ \alpha\, p(x_2) + (1 - \alpha)\, p(x_2 \mid x_1) \right]$$

where $\alpha \in (0, 1)$. Since $p(x_2 \mid x_1) \neq p(x_2)$ for some $x_1, x_2$, $p^{m-1}$ does not have a DAG representation.
