The Warp Computer: Architecture, Implementation, and Performance

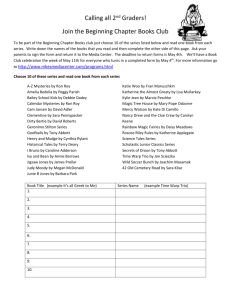
