Zarestky Math 141 Chapter 9 Notes

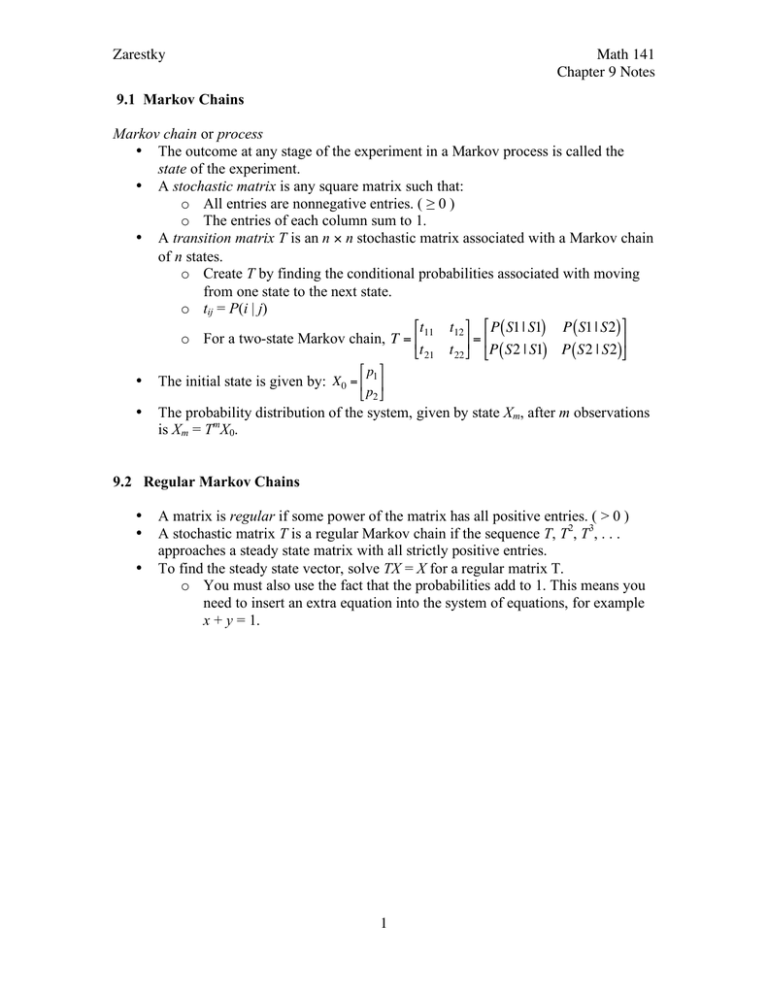

9.1 Markov Chains

• Markov chain (or Markov process): a sequence of experiments in which the probability of each outcome at a given stage depends only on the outcome at the preceding stage.
• The outcome at any stage of the experiment in a Markov process is called the state of the experiment.
• A stochastic matrix is any square matrix such that:
  o All entries are nonnegative ($\ge 0$).
  o The entries of each column sum to 1.
• A transition matrix $T$ is an $n \times n$ stochastic matrix associated with a Markov chain of $n$ states.
  o Create $T$ by finding the conditional probabilities of moving from one state to the next state.
  o $t_{ij} = P(S_i \mid S_j)$, the probability of moving to state $S_i$ given that the system is currently in state $S_j$.
  o For a two-state Markov chain,
    $$T = \begin{pmatrix} t_{11} & t_{12} \\ t_{21} & t_{22} \end{pmatrix} = \begin{pmatrix} P(S_1 \mid S_1) & P(S_1 \mid S_2) \\ P(S_2 \mid S_1) & P(S_2 \mid S_2) \end{pmatrix}.$$
• The initial state is given by $X_0 = \begin{pmatrix} p_1 \\ p_2 \end{pmatrix}$, where $p_1$ and $p_2$ are the probabilities that the system starts in $S_1$ and $S_2$.
• The probability distribution of the system after $m$ observations, given by the state vector $X_m$, is $X_m = T^m X_0$.

9.2 Regular Markov Chains

• A stochastic matrix $T$ is regular if some power of $T$ has all positive entries ($> 0$).
• A stochastic matrix $T$ is a regular Markov chain if the sequence $T, T^2, T^3, \ldots$ approaches a steady-state matrix whose entries are all strictly positive (every column of that limiting matrix equals the steady-state vector).
• To find the steady-state vector of a regular matrix $T$, solve $TX = X$.
  o You must also use the fact that the probabilities add to 1. This means you need to insert an extra equation into the system of equations, for example $x + y = 1$ for a two-state chain with $X = \begin{pmatrix} x \\ y \end{pmatrix}$.
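The Section 9.1 definitions can be checked numerically. The Python sketch below is an illustration only, not part of the original notes: it stores a matrix as a list of rows with exact `Fraction` entries (so $T[i][j] = t_{ij} = P(S_i \mid S_j)$), checks the two stochastic-matrix conditions, and computes $X_m = T^m X_0$. The function names and the two-state matrix are hypothetical, chosen only for the example.

```python
from fractions import Fraction


def is_stochastic(T):
    """Return True if T is square, every entry is >= 0, and every column sums to 1."""
    n = len(T)
    if any(len(row) != n for row in T):
        return False
    if any(entry < 0 for row in T for entry in row):
        return False
    return all(sum(T[i][j] for i in range(n)) == 1 for j in range(n))


def mat_vec(T, x):
    """Return the column vector T x."""
    return [sum(T[i][j] * x[j] for j in range(len(x))) for i in range(len(T))]


def distribution_after(T, x0, m):
    """Return X_m = T^m X_0 by multiplying the state vector by T, m times."""
    x = list(x0)
    for _ in range(m):
        x = mat_vec(T, x)
    return x


# Hypothetical two-state chain; column j lists P(S_1 | S_j) and P(S_2 | S_j).
T = [[Fraction(4, 5), Fraction(3, 10)],
     [Fraction(1, 5), Fraction(7, 10)]]
x0 = [Fraction(1, 2), Fraction(1, 2)]  # X_0: equally likely to start in S_1 or S_2

print(is_stochastic(T))              # True
print(distribution_after(T, x0, 2))  # [Fraction(23, 40), Fraction(17, 40)]
```

Applying $T$ to the vector $m$ times gives the same result as forming $T^m$ first, because matrix multiplication is associative, and it avoids building the full matrix power.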
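The regularity test in Section 9.2 ("some power of $T$ has all positive entries") can be automated. The sketch below is a hypothetical helper, not from the notes. It needs a stopping point, and it assumes Wielandt's bound: if a nonnegative $n \times n$ matrix has any strictly positive power, then its power $(n-1)^2 + 1$ is already strictly positive. So only $T, T^2, \ldots, T^{(n-1)^2+1}$ need to be checked.

```python
from fractions import Fraction


def mat_mul(A, B):
    """Return the product A B of two n x n matrices stored as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def is_regular(T):
    """Return True if some power of the square matrix T has all entries > 0.

    Only T, T^2, ..., T^k with k = (n - 1)**2 + 1 are checked: by Wielandt's
    bound, if a nonnegative n x n matrix has any strictly positive power,
    then its k-th power is already strictly positive.
    """
    n = len(T)
    power = T
    for _ in range((n - 1) ** 2 + 1):
        if all(entry > 0 for row in power for entry in row):
            return True
        power = mat_mul(power, T)
    return False


half = Fraction(1, 2)
print(is_regular([[0, half], [1, half]]))  # True: the square has all positive entries
print(is_regular([[0, 1], [1, 0]]))        # False: powers alternate between two matrices with zeros
```

The first example shows why the definition says "some power": $T$ itself has a zero entry, but $T^2 = \begin{pmatrix} 1/2 & 1/4 \\ 1/2 & 3/4 \end{pmatrix}$ is strictly positive.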
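The steady-state recipe from Section 9.2 (solve $TX = X$ and add the equation that the probabilities sum to 1) can be sketched as a small linear solver. This is an illustrative Python sketch, not the notes' own method. The helper name `steady_state` and the example matrix are hypothetical. It rewrites $TX = X$ as $(T - I)X = 0$. Because each column of $T$ sums to 1, the rows of $T - I$ add up to the zero row, so one of them is redundant. The sketch drops the last row and uses $x_1 + \cdots + x_n = 1$ in its place, then applies Gauss-Jordan elimination with exact fractions.

```python
from fractions import Fraction


def steady_state(T):
    """Solve T X = X together with x_1 + ... + x_n = 1 for a regular stochastic T.

    Entries of T should be Fractions or ints so the arithmetic stays exact.
    """
    n = len(T)
    # Augmented rows [T - I | 0]. Each column of T sums to 1, so the rows of
    # T - I add up to the zero row; the last one is redundant and is dropped.
    A = [[Fraction(T[i][j]) - (1 if i == j else 0) for j in range(n)] + [Fraction(0)]
         for i in range(n - 1)]
    # The extra equation from the notes: the probabilities add to 1.
    A.append([Fraction(1)] * (n + 1))
    # Gauss-Jordan elimination: reduce the left block to the identity.
    for col in range(n):
        pivot = next((r for r in range(col, n) if A[r][col] != 0), None)
        if pivot is None:
            raise ValueError("no unique steady-state vector; is T regular?")
        A[col], A[pivot] = A[pivot], A[col]
        p = A[col][col]
        A[col] = [entry / p for entry in A[col]]
        for r in range(n):
            if r != col and A[r][col] != 0:
                factor = A[r][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][n] for i in range(n)]


# Hypothetical two-state chain (illustrative numbers only).
T = [[Fraction(4, 5), Fraction(3, 10)],
     [Fraction(1, 5), Fraction(7, 10)]]
print(steady_state(T))  # [Fraction(3, 5), Fraction(2, 5)]
```

The hand method gives the same answer for this matrix. The first row of $TX = X$ is $\tfrac45 x + \tfrac{3}{10} y = x$, that is, $-\tfrac15 x + \tfrac{3}{10} y = 0$, so $y = \tfrac23 x$. Substituting into $x + y = 1$ gives $x = \tfrac35$ and $y = \tfrac25$.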