Rewriting History

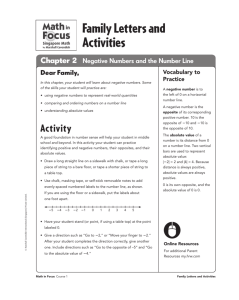
