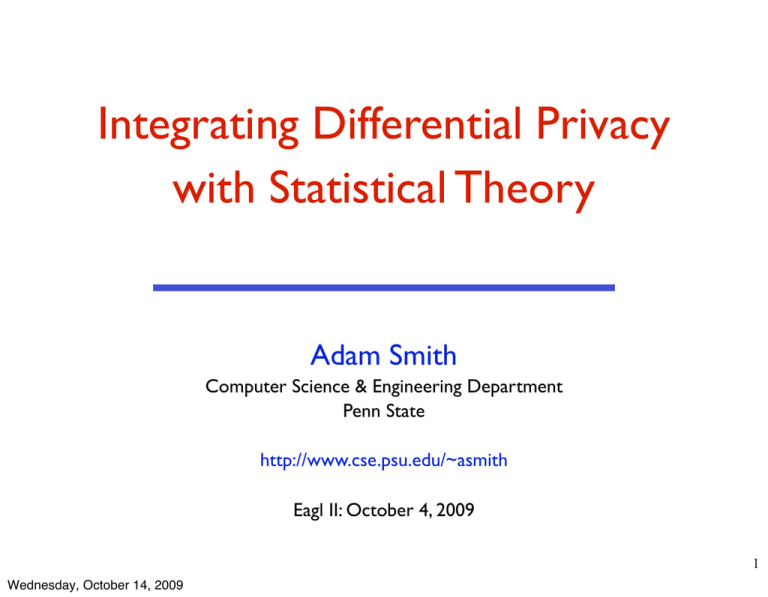

Integrating Differential Privacy with Statistical Theory Adam Smith Computer Science & Engineering Department

advertisement

Integrating Differential Privacy

with Statistical Theory

Adam Smith

Computer Science & Engineering Department

Penn State

http://www.cse.psu.edu/~asmith

Eagl II: October 4, 2009

1

Wednesday, October 14, 2009

Privacy in Statistical Databases

Individuals

x1

x2

..

.

xn

Server/agency

A

local random

coins

(

queries

answers

Users

)

Government,

researchers,

businesses

(or)

Malicious

adversary

Large collections of personal information

• census data

• medical/public health data

Recently:

• social networks

• larger data sets

• recommendation systems

• more types of data

• trace data: search records, etc

• intrusion-detection systems

2

Wednesday, October 14, 2009

Privacy in Statistical Databases

3

Wednesday, October 14, 2009

Privacy in Statistical Databases

• Two conflicting goals

Utility: Users can extract “global” statistics

Confidentiality: Individual information stays hidden

• How can we define these precisely?

Variations on model studied in

• Statistics (“statistical disclosure control”)

• Data mining / database (“privacy-preserving data mining” *)

Recent: theoretical CS

3

Wednesday, October 14, 2009

Differential Privacy

Individuals

x1

x2

..

.

xn

Server/agency

A

local random

coins

(

queries

answers

Users

)

Government,

researchers,

businesses

(or)

Malicious

adversary

• Definition of privacy in statistical databases

Imposes restrictions on algorithm A generating output

• If A satisfies restrictions, then output provides privacy

no matter what user/intruder knows ahead of time

• Question: how useful are algorithms that satisfy differential

privacy?

4

Wednesday, October 14, 2009

Statistics in a Nutshell

probability

model

data

inference / learning

• This talk:

Differentially private alg’s for recovering model

Performance = convergence to “true” model

• Goals:

interesting problems + bridge linguistic gap

5

Wednesday, October 14, 2009

This talk: Valid Statistical Inference

• Construct differentially private algorithms with

same asymptotic error as best non-private algorithm

Parametric: for any* parametric model, there exists a

private, efficient estimator (i.e. minimal variance)

Nonparametric: for any* distribution on [0,1], there is a

private histogram estimator with same convergence rate as

best (non-private) histogram estimator

6

Wednesday, October 14, 2009

This talk: Valid Statistical Inference

• Construct differentially private algorithms with

same asymptotic error as best non-private algorithm

Parametric: for any* parametric model, there exists a

private, efficient estimator (i.e. minimal variance)

Nonparametric: for any* distribution on [0,1], there is a

private histogram estimator with same convergence rate as

best (non-private) histogram estimator

Other recent work along these lines:

•

•

•

•

•

Dwork, Lei 2009

Wasserman, Zhou 2008

Chaudhuri, Monteleoni 2008

McSherry, Williams 2009

...

6

Wednesday, October 14, 2009

Main Idea for both cases

• Add noise to carefully modified estimator

Several ways to design differentially private algorithms

Adding noise is the simplest

• Prove that required noise is less than inherent variability

due to sampling

original

(non-private)

estimate

Wednesday, October 14, 2009

perturbed

(private)

estimate

sampling noise

(confidence interval

)

7

Reminder: differential privacy

• Intuition:

Changes to my data not noticeable by users

Output is “independent” of my data

8

Wednesday, October 14, 2009

Defining Privacy [DiNi,DwNi,BDMN,DMNS]

x1

x2

..

.

xn

A

A(x)

local random

coins

n

=

(x

,

...,

x

)

∈

D

• Data set x

1

n

Domain D can be numbers, categories, tax forms

Think of x as fixed (not random)

• A = randomized procedure run by the agency

A(x) is a random variable distributed over possible outputs

Randomness might come from adding noise, resampling, etc.

9

Wednesday, October 14, 2009

Defining Privacy [DiNi,DwNi,BDMN,DMNS]

x1

x2

..

.

xn

x1

A

local random

coins

A(x)

x!2

..

.

xn

A

A(x’)

local random

coins

x’ is a neighbor of x

if they differ in one data point

10

Wednesday, October 14, 2009

Defining Privacy [DiNi,DwNi,BDMN,DMNS]

x1

x2

..

.

xn

x1

A

local random

coins

A(x)

x!2

..

.

xn

A

A(x’)

local random

coins

x’ is a neighbor of x

if they differ in one data point

Neighboring databases

induce close distributions

on outputs

10

Wednesday, October 14, 2009

Defining Privacy [DiNi,DwNi,BDMN,DMNS]

x1

x2

..

.

xn

x1

A

A(x)

local random

coins

x!2

A

..

.

xn

A(x’)

local random

coins

x’ is a neighbor of x

if they differ in one data point

Definition: A is ε-differentially private if,

for all neighbors x, x’,

for all subsets S of outputs

!

Neighboring databases

induce close distributions

on outputs

Pr(A(x) ∈ S) ≤ e · Pr(A(x ) ∈ S)

!

10

Wednesday, October 14, 2009

Defining Privacy [DiNi,DwNi,BDMN,DMNS]

• This is a condition on the algorithm (process) A

Saying “this output is safe” doesn’t take into account how it

was computed

• Semantics:

no matter what user knows ahead of time,

learn the same things about me

whether or not my data is present

Definition: A is ε-differentially private if,

for all neighbors x, x’,

for all subsets S of outputs

!

Neighboring databases

induce close distributions

on outputs

Pr(A(x) ∈ S) ≤ e · Pr(A(x ) ∈ S)

!

11

Wednesday, October 14, 2009

Example: Perturbing the Average

x1

x2

..

.

xn

A

A(x) = x̄ + noise

local random

coins

xi ∈ {0, 1}

x̄ =

1

n

!

i

xi

12

Wednesday, October 14, 2009

Example: Perturbing the Average

x1

x2

..

.

xn

A

A(x) = x̄ + noise

local random

coins

• Data points are binary responses

xi ∈ {0, 1}

• Server wants to release average x̄ =

1

n

!

i

xi

12

Wednesday, October 14, 2009

Example: Perturbing the Average

x1

x2

..

.

xn

A

local random

coins

A(x) = x̄ + noise

≈ x̄ ±

• Data points are binary responses

1

!n

xi ∈ {0, 1}

• Server wants to release average x̄ =

1

n

!

i

xi

• Claim: Can obtain ε-differential privacy with noise ≈

1

!n

12

Wednesday, October 14, 2009

Example: Perturbing the Average

x1

x2

..

.

xn

A

local random

coins

A(x) = x̄ + noise

≈ x̄ ±

• Data points are binary responses

1

!n

xi ∈ {0, 1}

• Server wants to release average x̄ =

1

n

!

i

xi

• Claim: Can obtain ε-differential privacy with noise ≈

1

!n

• Is this a lot?

If x is a random sample from a large underlying population,

1

then sampling noise ≈ √

n

Statistical error swamps noise required for privacy

12

Wednesday, October 14, 2009

Example: Perturbing the Average

x1

x2

..

.

xn

A

A(x) = x̄ + noise

local random

coins

xi ∈ {0, 1}

x̄ =

1

n

!

i

xi

13

Wednesday, October 14, 2009

Example: Perturbing the Average

x1

x2

..

.

xn

A

local random

coins

A(x) = x̄ + noise

≈ x̄ ±

• Data points are binary responses

1

!n

xi ∈ {0, 1}

• Server wants to release average x̄ =

1

n

!

i

xi

• Claim: Can obtain ε-differential privacy with noise ≈

1

!n

13

Wednesday, October 14, 2009

Example: Perturbing the Average

x1

x2

..

.

xn

A(x) = x̄ + noise

A

local random

coins

≈ x̄ ±

• Data points are binary responses

1

!n

xi ∈ {0, 1}

• Server wants to release average x̄ =

1

n

!

i

xi

• Claim: Can obtain ε-differential privacy with noise ≈

1

!n

Laplace distribution Lap(λ) has density h(y) ∝ e−|y|/λ

Sliding property:

h(y)

h(y+δ)

≤ eδ/λ

h(y)

y

13

Wednesday, October 14, 2009

Example: Perturbing the Average

x1

x2

..

.

xn

A(x) = x̄ + noise

A

local random

coins

≈ x̄ ±

• Data points are binary responses

1

!n

xi ∈ {0, 1}

• Server wants to release average x̄ =

1

n

!

i

xi

• Claim: Can obtain ε-differential privacy with noise ≈

1

!n

Laplace distribution Lap(λ) has density h(y) ∝ e−|y|/λ

Sliding property:

h(y)

h(y+δ)

≤ eδ/λ

h(y + δ)

h(y)

y

13

Wednesday, October 14, 2009

Example: Perturbing the Average

x1

x2

A(x) = x̄ + noise

A

..

.

xn

≈ x̄ ±

local random

coins

• Data points are binary responses

1

!n

xi ∈ {0, 1}

• Server wants to release average x̄ =

1

n

!

i

xi

• Claim: Can obtain ε-differential privacy with noise ≈

1

!n

Laplace distribution Lap(λ) has density h(y) ∝ e−|y|/λ

Sliding property:

h(y)

h(y+δ)

≤ eδ/λ

h(y + δ)

h(y)

A(x) = blue curve, A(x’) = red curve

δ = |x̄ − x̄! | ≤

Wednesday, October 14, 2009

1

n

=⇒

blue curve

≤ e!

red curve

y

13

When Does Noise Not Matter?

1

• Average: A(x) = x̄ + Lap( !n

)

Suppose X1, X2, X3, ...,Xn are i.i.d. random variables

√

D

X̄ is a random variable, and n · (X̄ − µ)−→Normal

A(X) − X̄ P

−→0

StdDev(X̄)

if ! !

√1

n

No “cost” to privacy:

• A(X) is “as good as” X̄ for statistical inference*

X̄

-1.5

-1

-0.5

A(X)

0.8

0

0.5

1

1.5

14

Wednesday, October 14, 2009

When Does Noise Not Matter?

15

Wednesday, October 14, 2009

When Does Noise Not Matter?

• Mean example generalizes to other statistics

• Theorem: For any* exponential family, can release

“approximately sufficient” statistics

Suff. stats T(X) are sums, add noise d for dimension d

!n

P

A(X) − T (X) −→0

StdDev(T (X))

15

Wednesday, October 14, 2009

When Does Noise Not Matter?

• Mean example generalizes to other statistics

• Theorem: For any* exponential family, can release

“approximately sufficient” statistics

Suff. stats T(X) are sums, add noise d for dimension d

!n

P

A(X) − T (X) −→0

StdDev(T (X))

• Asymptotic result: Indicates that useful analysis possible

Requires more sophisticated processing for small n

15

Wednesday, October 14, 2009

When Does Noise Not Matter?

• Mean example generalizes to other statistics

• Theorem: For any* exponential family, can release

“approximately sufficient” statistics

Suff. stats T(X) are sums, add noise d for dimension d

!n

P

A(X) − T (X) −→0

StdDev(T (X))

• Asymptotic result: Indicates that useful analysis possible

Requires more sophisticated processing for small n

• Noise degrades with dimension

More information ⇒ less privacy

Research question: Is this necessary?

15

Wednesday, October 14, 2009

• Histogram Density Estimation

Calibrating noise to sensitivity

• Maximum Likelihood Estimator

Sub-sample and aggregate

16

Wednesday, October 14, 2009

Global Sensitivity [DMNS06]

Individuals

x1

x2

Server/agency

..

.

xn

A

f(x) + noise

User

local random

coins

• Intuition: f(x) can be released accurately when f is insensitive

to individual entries x1 , x2 , . . . , xn

• Global Sensitivity:

GSf =

• Example: GSaverage =

max

!

!f

(x)

−

f

(x

)!1

!

neighbors x,x

1

n

17

Wednesday, October 14, 2009

Global Sensitivity [DMNS06]

Individuals

x1

x2

Server/agency

A

..

.

xn

f(x) + noise

User

local random

coins

• Intuition: f(x) can be released accurately when f is insensitive

to individual entries x1 , x2 , . . . , xn

• Global Sensitivity:

GSf =

• Example: GSaverage =

!

!f

(x)

−

f

(x

)!1

!

neighbors x,x

1

n

Theorem: If A(x) = f (x) + Lap

Wednesday, October 14, 2009

max

!

GSf

!

"

, then A is !-differentially private.

17

Example: Histograms

f (x) = (n1 , n2 , ..., , nd ) where nj = #{i : xi in j-th interval}

Lap(1/!)

0

1/d

1

18

Wednesday, October 14, 2009

Example: Histograms

• Say x1,x2,...,xn in [0,1]

Partition [0,1] into d intervals of equal size

f (x) = (n1 , n2 , ..., , nd ) where nj = #{i : xi in j-th interval}

GSf = 2

Sufficient to add noise Lap(1/!) to each count

• Independent of the dimension

0

1/d

1

18

Wednesday, October 14, 2009

Example: Histograms

• Say x1,x2,...,xn in [0,1] arbitrary domain D

Partition [0,1] into d intervals of equal size into d disjoint “bins”

f (x) = (n1 , n2 , ..., , nd ) where nj = #{i : xi in j-th interval}bin

GSf = 2

Sufficient to add noise Lap(1/!) to each count

• Independent of the dimension

0

1/d

1

18

Wednesday, October 14, 2009

Example: Histograms

• Say x1,x2,...,xn in [0,1]

Partition [0,1] into d intervals of equal size

f (x) = (n1 , n2 , ..., , nd ) where nj = #{i : xi in j-th interval}

GSf = 2

Sufficient to add noise Lap(1/!) to each count

• Independent of the dimension

• For any smooth density h, if Xi i.i.d. ~ h,

noisy histogram converges to h

Expected L2 error O(

1

√

3 n)

1

if n ! 3

!

Same as “best” non-private estimator

0

1/d

1

19

Wednesday, October 14, 2009

Proof

• L2 error:

!

" # 1

IM SE = Eĥ !h − ĥ!22 =

(h − ĥ)2 dx

0

• If bins have fixed width t

1

1

2

!

Theorem [Scott]: IM SE =

+ t R(h ) +

nt

n

√

3

Minimized for t = n

• Additional square error due to noise

Additional error per bin t ×

1

t2 n2 !2

Total additional error:

ĥ(x) =

# pts ± (1/!)

nt

t

1

1/t × 1/(tn2 !2 ) = 2 2 2

!"#$

! "# $

t n !

# bins

error per bin

Total error (by additivity of variance):

1

1

2

+ t + 2 2 2 = (original error) × (1 + o(1))

nt

t n !

Wednesday, October 14, 2009

0

1/d

1

20

Frequency Polygon

• Frequency polygon

linear interpolation through midpoints of histogram

• For any smooth density h, if Xi i.i.d. ~ h,

frequency polygon of noisy histogram converges to h

Expected L2 error O(n

−4/5

) if n !

Same as non-private estimator

1

!5/2

• Similar, more complicated proof

0

Wednesday, October 14, 2009

1/d

1

21

More detail

• This actually shows that for any given bin width, can find

noisy estimator that is close to non-noisy estimator

• Does not address how to choose exact bin width

Subject to extensive research

Common “bandwidth selection” criteria

can be approximated privately:

• compute many different candidates

• use exponential mechanism to select best candidate(s)

22

Wednesday, October 14, 2009

• Histogram Density Estimation

Calibrating noise to sensitivity

• Maximum Likelihood Estimator

Sub-sample and aggregate

23

Wednesday, October 14, 2009

High global sensitivity: example 3

High

Global Sensitivity: Learning Mixtures

Example 3: cluster centers

Database entries: points in a metric space. !

x

x

!" !"

!

"

!" !"!" !" !"

!"

!" !" !" !"

!" "!

!" !"!"!" !" !"

!"

!" !" !" !"

!"

"! !"

!" !"!"!" !" !"

!"

!" !" !" !"

!"

!" !"

!

"

!" !"!" !" !"

!"

!" !" !" !"

!" "!

!" !"!"!" !" !"

!"

!" !" !" !"

!" "!

!" !"!"!" !" !"

!"

!" !" !" !"

Global sensitivity of cluster centers is roughly the

diameter of the space.

• But intuitively, if clustering is ”good”, cluster centers

should be insensitive.

16

Wednesday, October 14, 2009

24

High global sensitivity: example 3

High

Global Sensitivity: Learning Mixtures

Example 3: cluster centers

Database entries: points in a metric space. !

x

x

!" !"

!

"

!" !"!" !" !"

!"

!" !" !" !"

!"

#$

!" !"

!" !"

!" !"!"!" !" !"

!" !"!"!" !" !"

!"

!"

!" !" !" !"

!" !" !" !"

!"

!"

!" !"

!

"

!" !"!" !" !"

!"

!" !" !" !"

!" "!

!" !"!"!" !" !"

!"

!" !" !" !"

#$

!" !"

!" !"!"!" !" !"

!"

!" !" !" !"

!"

Global sensitivity of cluster centers is roughly the

diameter of the space.

• But intuitively, if clustering is ”good”, cluster centers

should be insensitive.

16

Wednesday, October 14, 2009

25

High global sensitivity: example 3

High

Global Sensitivity: Learning Mixtures

Example 3: cluster centers

Database entries: points in a metric space. !

x

x

!" !"

!

"

!" !"!" !" !"

!"

!" !" !" !"

#

$

!"

#$

!" !"

!" !"

!" !"!"!"#

!" !"!"!" !" !"

$!" !"

!"

!"

!" !" !" !"

!" !" !" !"

!"

!"

!" !"

!

"

!" !"!" !" !"

!"

!" !" !" !"

$

#

!" "!

!" !"!"!" !" !"

!"

!" !" !" !"

#$

!" !"

!" !"!"!"#

$!" !"

!"

!" !" !" !"

!"

Global sensitivity of cluster centers is roughly the

diameter of the space.

• But intuitively, if clustering is ”good”, cluster centers

should be insensitive.

16

Wednesday, October 14, 2009

26

What about maximum likelihood estimates?

• Sometimes MLE is well-behaved,

e.g. observed proportion for binomial

• Sometimes we have no idea

e.g. no closed form expression for most loglinear models

• (loglinear = popular class of statistical models for categorical data)

Can have arbitrarily bad sensitivity

27

Wednesday, October 14, 2009

Sample-and-Aggregate Methodology [NRS]

Sample-and-Aggregate

Intuition: Replace f with a less sensitive function f˜.

f˜(x) = g(f (sample1 ), f (sample2 ), . . . , f (samples ))

k

x

!

xi1 , . . . , xit

"

xj1 , . . . , xjt

$

!

f"

$

!

f"

'

%& "

aggregation function g!

noise calibrated

calibrated

noise

sensitivity of gf˜

totosensitivity

Wednesday, October 14, 2009

...

#

xk1 , . . . , xkt

$

(+

" ( output

$

!

f"

28

T ∗ (x) =

k

θ̂ x(i−1)t+1 , ..., xit

+ Lap

k"

Λ is the diameterPoint

of the parameter’s

range, and Lap(λ) is a random variabl

Example:whereEfficient

Estimates

i=1

the Laplacian distribution with parameter (standard deviation) λ.

• Given a parametric

model {fθ : θ ∈ Θ}

• MLE = argmaxθ (fθ (x))

• Converges to Normal

Var(MLE) = (IF(θ)n)-1

Can be corrected so that

bias( θ̂ ) = O(n-3/2)

!

!!

"

!

!!

xi1 , . . . , xit

'

!

θ̂"

(

x

#

#$

%%

%

xj1 , . . . , xjt . . .

%%

%

&

x k 1 , . . . , x kt

'

'

"

!

!

"

θ̂

, θ̂

,

((

*

( z1 * z2 · · · ,,zk

,

((

,

+

*

,

) "

(

!

g

noise calibrated

to sensitivity of g

aggregation function

'

. +" . output

Figure 1: Sample-and-aggregate

• Theorem: If model is well-behaved, then sample6

θ̂

n

=

ω(1/"

)

aggregate using

gives

efficient

estimator

if

For concreteness, we first analyze the one-dimensional estimator described in

Lemma 2.2 ([1, 2]). For any choice of the number of blocks k, the estimator T ∗ i

Average MLE from different samples

• Basic version:

Proof. Fix a particular value of x, and consider the effect of changing a single

#

databasespace

x (for is

anybounded,

particular index

i). At most

of the is

numbers

zj can ch

If parameter

sensitivity

of one

average

O(1/k)

29

the block which contains xi . The number zj that changes can go up or down by

Wednesday, October 14, 2009

T ∗ (x) =

k

θ̂ x(i−1)t+1 , ..., xit

+ Lap

k"

where Λ is the diameter of the parameter’s range, and Lap(λ) is a random variabl

Proof idea

i=1

the Laplacian distribution with parameter (standard deviation) λ.

• Each MLE ≈ N((k/n)3/2, k/I(n)

!

!

!!

x

%%

%

#

#$

%

%%

"!

!

&

• Output ≈ Gaussian since

xi , . . . , x i

xj , . . . , x j . . . xk , . . . , x k

each MLE ≈ Gaussian

'

'

'

!

"

!

"

!

"

θ̂ (

θ̂

, θ̂

,

((

*

• Variance of average

( z1 * z2 · · · ,,zk

,

((

,

+

*

,

) "

(

g! aggregation function

= (k/n) / k = 1/n

'

noise calibrated

. +" . output

• Bias of average

to sensitivity of g

= bias of each term = (k/n)3/2

Figure 1: Sample-and-aggregate

• Total squared error

2 + E(noise2)

= variance +

bias

For concreteness, we first analyze the one-dimensional estimator described in

1

t

1

t

1

t

6

1

k Lemma

1 ([1,

+ choice

o(1) of the

when

n=

ω(1/!

) estimator T ∗ i

3

2 2]). For1any

2.2

number

of blocks

k, the

= +( ) +( ) =

2/3 ))

n

n Proof. Fix

k!a particular valuenof x, and (set

k

=

o(n

consider the effect of changing a single

database x# (for any particular index i). At most one of the numbers zj can ch

30

the block which contains xi . The number zj that changes can go up or down by

Wednesday, October 14, 2009

Higher Dimension

• Theorem holds in any fixed dimension

actually up to poly(n)

• Higher dimension requires significant extra work

need to find an ellipse-shaped “envelope” for data set

that captures 1-o(1) fraction of points in set...

... differentially privately

Use Dunagan-Vempala ’01

• Open: understanding exact dependence on dimension

31

Wednesday, October 14, 2009

Conclusions

• Define privacy in terms of my effect on output

Meaningful despite arbitrary external information

I should participate if I get benefit

• What can we compute with rigorous guarantees?

This talk: statistical estimators that are “as good” as optimal

non-private estimators

• First step: basic asymptotic theory

• New aspect to “curse” of dimensionality

Future / ongoing work:

• More sophisticated analyses: linear/logistic regression, kernel density

estimates, etc

• Multiple analyses (see Blum-Ligett-Roth)

Wednesday, October 14, 2009

32