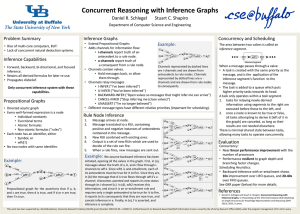

Concurrent Reasoning with Inference Graphs


Daniel R. Schlegel and Stuart C. Shapiro
Department of Computer Science and Engineering
University at Buffalo, The State University of New York
Buffalo, New York, USA
<drschleg,shapiro>@buffalo.edu

Problem Statement
• Rise of multi-core computers
• Lack of concurrent natural deduction systems

A Motivation
• Application to natural language understanding for terrorist plot detection.

What are Inference Graphs?
• Graphs for natural deduction
  – Four types of inference:
    • Forward
    • Backward
    • Bi-directional
    • Focused
  – Retain derived formulas for later re-use.
  – Propagate disbelief.
  – Built upon Propositional Graphs.
• Take advantage of multi-core computers
  – Concurrency and scheduling
  – Near-linear speedup.

Propositional Graphs
• Directed acyclic graph
• Every well-formed expression is a node:
  – Individual constants
  – Functional terms
  – Atomic formulas
  – Non-atomic formulas (“rules”)
• Each node has an identifier, either
  – a symbol, or
  – wfti[!], where i is an integer and the optional “!” marks an asserted proposition.
• No two nodes have the same identifier.

Propositional Graphs (example)
[Figure: rule node wft1! with and-ant arcs to a and b and a cq arc to c.]
If a and b are true, then c is true.

Inference Graphs
• Extend Propositional Graphs.
• Add channels for information flow (messages):
  – i-channels report the truth of an antecedent to a rule node.
  – u-channels report the truth of a consequent from a rule node.
• Channels contain valves, which
  – hold messages back, or allow them through.
[Figure: the wft1! graph, with i-channels from a and b to wft1! and a u-channel from wft1! to c.]

Messages
• Five kinds:
  – I-INFER: “I’ve been inferred”
  – U-INFER: “You’ve been inferred”
  – BACKWARD-INFER: “Open valves so I might be inferred”
  – CANCEL-INFER: “Stop trying to infer me (close valves)”
  – UNASSERT: “I’m no longer believed”

Priorities
• Messages have priorities.
  – UNASSERT has top priority.
  – CANCEL-INFER is next.
  – I-INFER and U-INFER messages have higher priority the closer they are to a result.
  – BACKWARD-INFER has the lowest priority.

Rule Node Inference
1. A message arrives at the node.
Assume a KB containing a ∧ b → c, and b. Then a is asserted with forward inference, and an I-INFER message (a : true) is sent from a to wft1.

2. The message is translated to Rule Use Information (RUI).
For a : true, the RUI is: 1 positive antecedent (a), 0 negative antecedents, 2 total antecedents.
RUIs are stored in rule nodes, to be combined later with others that arrive.

3. Combine the new RUI with any existing ones.
The RUI for a is combined with the one that already exists in wft1 for b:
  (1 positive antecedent, b; 0 negative antecedents; 2 total antecedents)
+ (1 positive antecedent, a; 0 negative antecedents; 2 total antecedents)
= (2 positive antecedents, a and b; 0 negative antecedents; 2 total antecedents)

4. Determine whether the rule can fire.
The combined RUI has two positive antecedents, and the rule needs two, so the rule can fire.

5. Send out new messages.
A U-INFER message (c : true) is sent to c, which receives it and asserts itself.

Cycles
• Graphs may contain cycles.
• No rule node will infer on the same message more than once.
  – RUIs with no new information are ignored.
• Already-open valves can’t be opened again.
[Figure: rules wft1! and wft2! linking a and b through ant and cq arcs, forming a cycle.]

Concurrency and Scheduling
• Inference segment: the area between two valves.
• When messages reach a valve:
  – A task is created with the same priority as the message.
    • Task: the application of the segment’s function to the message.
  – The task is added to a prioritized queue.
• Tasks have minimal shared state, easing concurrency.

Concurrency and Scheduling (continued)
• A task only operates within a single segment.
• Scheduling heuristics:
  1. Tasks for relaying newly derived information using segments “later” in the derivation are executed before “earlier” ones.
  2. Once a node is known to be true or false, all tasks attempting to derive it are canceled, as long as their results are not needed elsewhere.

Example
Backchain on cq. Assume every node requires only one of its incoming nodes to be true for it to be true (a simplification for easy viewing). Two processors are used.
[Animated figure: backward inference from cq spreading through the graph, frame by frame. Legend: Backward Inference (Open valve), Inferring, Inferred, Cancelled.]

Evaluation
• Concurrency:
  – Near-linear performance improvement with the number of processors.
  – Performance is robust to changes in graph depth and branching factor.
• Scheduling heuristics:
  – Backward inference with or-entailment shows a 10x improvement over LIFO queues, and 20–40x over FIFO queues.

Acknowledgements
This work has been supported by a Multidisciplinary University Research Initiative (MURI) grant (Number W911NF-09-1-0392) for Unified Research on Network-based Hard/Soft Information Fusion, issued by the US Army Research Office (ARO) under the program management of Dr. John Lavery.
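The Rule Node Inference steps and the message Priorities described earlier can be illustrated with a small Python sketch. It is a hypothetical, single-threaded illustration under stated assumptions, not the authors’ implementation: the names RUI, AndEntailmentRule, on_i_infer, TaskQueue, KIND_PRIORITY and distance_to_result are invented here, the rule handles only propositional and-entailment (fire once every antecedent is known true), and it fires at most once. The queue orders tasks by message kind as on the Priorities slide, then by a hypothetical distance to a result, then by arrival order.

```python
import heapq
import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class RUI:
    """Hypothetical Rule Use Information: antecedents known true / false."""
    pos: frozenset = frozenset()
    neg: frozenset = frozenset()

    def compatible(self, other: "RUI") -> bool:
        # Two RUIs combine only if they never disagree about an antecedent.
        return not (self.pos & other.neg) and not (self.neg & other.pos)

    def combine(self, other: "RUI") -> "RUI":
        return RUI(self.pos | other.pos, self.neg | other.neg)


class AndEntailmentRule:
    """Hypothetical rule node: all antecedents true -> consequents true."""

    def __init__(self, name, antecedents, consequents):
        self.name = name
        self.antecedents = frozenset(antecedents)
        self.consequents = list(consequents)
        self.ruis = set()   # stored RUIs, kept for combination with later ones
        self.fired = False  # simplification: a propositional rule fires once

    def on_i_infer(self, antecedent, truth=True):
        """Translate the message, combine RUIs, test firing, emit U-INFERs."""
        one = frozenset([antecedent])
        new = RUI(pos=one) if truth else RUI(neg=one)
        candidates = {new} | {new.combine(r) for r in self.ruis if new.compatible(r)}
        fresh = candidates - self.ruis  # RUIs with no new information are ignored
        self.ruis |= fresh
        total = len(self.antecedents)
        for rui in fresh:
            if len(rui.pos) == total and not self.fired:
                self.fired = True
                return [("U-INFER", c, True) for c in self.consequents]
        return []


# Lower number = served first; the numeric values are assumptions.
KIND_PRIORITY = {"UNASSERT": 0, "CANCEL-INFER": 1,
                 "I-INFER": 2, "U-INFER": 2, "BACKWARD-INFER": 3}


class TaskQueue:
    """Single-threaded stand-in for the prioritized task queue."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # arrival order breaks remaining ties

    def push(self, kind, payload, distance_to_result=0):
        # Among equal kinds, a smaller (hypothetical) distance_to_result runs first.
        key = (KIND_PRIORITY[kind], distance_to_result, next(self._seq))
        heapq.heappush(self._heap, (key, kind, payload))

    def pop(self):
        _, kind, payload = heapq.heappop(self._heap)
        return kind, payload

    def __len__(self):
        return len(self._heap)


if __name__ == "__main__":
    # KB holds a ∧ b → c and b; then a is asserted with forward inference.
    wft1 = AndEntailmentRule("wft1", ["a", "b"], ["c"])
    print(wft1.on_i_infer("b"))  # []: 1 of 2 antecedents known true
    print(wft1.on_i_infer("a"))  # [('U-INFER', 'c', True)]: the rule fires
    print(wft1.on_i_infer("a"))  # []: no new information, ignored

    q = TaskQueue()
    for kind, node, dist in [("BACKWARD-INFER", "cq", 0), ("U-INFER", "c", 2),
                             ("U-INFER", "d", 1), ("UNASSERT", "b", 0),
                             ("CANCEL-INFER", "e", 0)]:
        q.push(kind, node, dist)
    print([q.pop()[1] for _ in range(len(q))])  # ['b', 'e', 'd', 'c', 'cq']
```

The monotonically increasing counter as the last key element keeps equal-priority tasks in arrival order and guarantees heapq never has to compare payloads. In the multi-processor setting the slides describe, several workers would pop tasks from a shared queue of this kind; that concurrency is deliberately left out of the sketch.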