
2013 IEEE 13th International Conference on Data Mining

Learning, Analyzing and Predicting Object Roles on Dynamic Networks

Kang Li∗, Suxin Guo†, Nan Du‡, Jing Gao§ and Aidong Zhang¶

Department of Computer Science and Engineering

The State University of New York at Buffalo

Emails: {kli22∗, suxinguo†, nandu‡, jing§, azhang¶}@buffalo.edu

Abstract—Dynamic networks are structures with objects and links between the objects that vary in time. Temporal information in dynamic networks can be used to reveal many important phenomena, such as bursts of activities in social networks and human communication patterns in email networks. In this area, one very important problem is to understand dynamic patterns of object roles. For instance, will a user become a peripheral node in a social network? Could a website become a hub on the Internet? Will a gene be highly expressed in gene-gene interaction networks in the later stage of a cancer? In this paper, we propose a novel approach that identifies the role of each object, tracks the changes of object roles over time, and predicts the evolving patterns of the object roles in dynamic networks. In particular, a probability model is proposed to extract latent features of object roles from dynamic networks. The extracted latent features are discriminative in learning object roles and are capable of characterizing network structures. The probability model is then extended to learn the dynamic patterns and make predictions on object roles. We assess our method on two data sets, on the tasks of exploring how users' importance and political interests evolve over time in dynamic networks. Overall, the extensive experimental evaluations confirm the effectiveness of our approach for identifying, analyzing and predicting object roles on dynamic networks.

1550-4786/13 $31.00 © 2013 IEEE

DOI 10.1109/ICDM.2013.95

I. INTRODUCTION

Dynamic networks exist in many different settings, such as computer networks, social networks, biological networks and sensor networks. During the formation and evolution of these systems, objects usually play various roles that change over time. For example, on Facebook some users act as topic hubs that are highly involved in various activities, while other users are peripheral objects who seldom participate in discussions. Over time, highly involved users may reduce their engagement with Facebook as they become more engaged with other social networks, and peripheral users may become more active in the communities.

In the research of dynamic networks, a critical problem is to understand object roles and their evolving patterns. This problem is meaningful in many applications and has attracted much attention. For instance, detecting users with abnormal roles can be used to filter spammers in email networks, analyzing the dynamics of customer engagement in social networks can help improve the quality of service, and predicting the informative genes in gene-gene interaction networks is essential for preventing the onset of cancers.

Nevertheless, existing methods for this problem usually focus on static networks and make strong assumptions about the relationships between specific topological properties and the types of object roles of interest. For example, [1] defines vulnerable nodes as the nodes whose deletion would cause maximum network fragmentation, and [2] assumes that important objects should have high PageRank scores. Such methods generally have two drawbacks. First, the topological properties in each task are subjectively selected, so critical information characterizing the object roles in the networks may be missed. Even worse, when object roles are complex, this strategy may fail to characterize the object roles with any existing topological property. Second, methods designed for static networks ignore the influence of the evolving vertices and links in dynamic networks, and therefore cannot be applied to analyze the dynamic patterns of object roles. In short, these existing methods are not effective in mining object roles and their evolving patterns in dynamic networks.

In this paper, we propose a novel probability model to address the problems of learning, analyzing and predicting object roles in dynamic networks. Unlike the existing methods discussed above, the proposed model does not rely on any subjectively selected topological properties, and it is capable of characterizing network dynamics. At the heart of our framework is the latent feature representation of object roles. This representation captures the structural information at each time point, incorporates evolving information in dynamic networks, and is discriminative in learning object roles. Specifically, at each time point, objects with similar roles have close latent feature representations, and the interactions of the latent object roles can effectively reconstruct the observable network at that time point. Moreover, the extracted latent feature representation fits the available supervised information in the proposed model. Furthermore, to incorporate the evolving information in the dynamic networks, the latent feature representation at each time point is kept close to the prediction obtained from the previous time points. This representation of object roles can be used to build a variety of sophisticated analysis tools for dynamic networks, and we use it here to explore the evolution of object roles. It also provides simple and direct visualizations that clearly show how the roles of individual objects evolve as time progresses.

Although mining dynamic networks with latent feature representations of objects has recently attracted much attention, the work proposed in this paper differs significantly from existing work in both its method and its target task. Among existing methods, exponential-family random graph models (ERGMs) are the canonical way to represent observable networks with latent variables. As discussed in [3], ERGMs usually suffer from high computational and statistical costs and are therefore often impractical. To avoid these drawbacks, several alternative approaches [4]–[6] have been proposed in recent years. These approaches use latent feature vectors as "coordinates" to represent the characteristics of each node. Unlike the probability model proposed in this paper, these "coordinates" methods assume that highly connected objects have close latent feature representations, and vice versa. However, objects with the same role are not necessarily highly connected and may even be far away from each other. As a result, these methods cannot encode object roles as latent feature representations in many applications, and they are not effective in learning object roles in dynamic networks.

The proposed approach is built on two bases. First, object roles can be extracted from structural information. Second, object roles change gradually rather than abruptly. The second base means that previous data can provide hints about the current status of the network, and this assumption has been widely used in dynamic network mining, e.g., [4]–[6]. In the experiments, we investigate how people's importance and political interests evolve in dynamic networks, and the results support these two bases.

Overall, the contributions of this work include:

• In Section II-B, a Gaussian model is proposed to effectively extract latent features of object roles for both supervised and unsupervised cases. A solution based on variational Bayesian inference is then provided to efficiently optimize the proposed model.

• In Section II-C, we extend the Gaussian model to incorporate dynamic information for learning and analyzing object roles in dynamic networks.

• In Section II-D, we provide the details of implementing the proposed model for predicting object roles according to the learned evolving patterns.

• In Section III, we experimentally evaluate the proposed methods on the tasks of mining people's evolving importance and political interests in dynamic networks. The overall performance confirms the effectiveness of the proposed model.

II. METHODOLOGY

In this section, we present the details of the proposed LAP (Learning, Analyzing and Predicting) model for mining object roles on dynamic networks. We start by detecting object roles on static networks and then extend the model to dynamic cases. The prediction of object roles is achieved through the extended model.

A. Notation

We first introduce the notation rules of this paper. Unless otherwise noted, a scalar is denoted by a lower-case letter, e.g., a, b and λ, and a matrix is denoted by an upper-case letter, such as A, B and Λ. $A_{n\times m}$ indicates that the matrix A has n rows and m columns. $A_{i,:}$ and $A_{:,j}$ represent the i-th row and the j-th column of A, respectively, and $A_{ij}$ is the element at the i-th row and the j-th column. In the formulation of our model, $\mathrm{Tr}(U_{s\times s}) = \sum_{i=1}^{s} U_{ii}$ is the trace of the square matrix $U_{s\times s}$. $A \circ B$ is the Hadamard product of two matrices A and B of the same size, with $(A \circ B)_{ij} = A_{ij} \cdot B_{ij}$. Similarly, $A \oslash B$, with $(A \oslash B)_{ij} = A_{ij}/B_{ij}$, is the Hadamard division. Finally, a Gaussian distribution of A with mean B and variance $\sigma^2$ is denoted as $\mathcal{N}(A \mid B, \sigma^2)$.

We summarize the major variable matrices used in this paper in Table I. Each dynamic network is assumed to be periodically sampled into t snapshots and is denoted as $G = \{G^i \mid i \in [1, t]\}$. $G^i = \{V^i_{n_i\times 1}, E^i_{n_i\times n_i}\}$ is the i-th snapshot, where $V^i$ is the set of $n_i$ vertices and $E^i$ is the set of links between the vertices. In our context, each vertex is an object. We suppose there are c object role classes in the dynamic network G, and the label matrix for the objects in $G^i$ is $Y^i_{n_i\times c}$. In this paper, we suppose that the labels of objects are provided in the first snapshot, and we focus on the following three tasks:

• Learning the role of each object at each snapshot of the dynamic network;

• Analyzing how object roles evolve over time; and

• Predicting the object roles at the (t+1)-th snapshot of the dynamic network using the first to the t-th snapshots.

TABLE I: Notation

$t$: number of snapshots in the dynamic network
$n_i$: number of objects in the i-th snapshot
$r$: number of features in latent feature representations of object roles
$c$: number of object role classes
$G$: the dynamic network
$G^i$: the i-th snapshot of the dynamic network
$V^i_{n_i\times 1}$: the set of vertices in the i-th snapshot
$E^i_{n_i\times n_i}$: the set of links in the i-th snapshot
$Y^i_{n_i\times c}$: the label matrix for each node
$\Lambda^i_{n_i\times r}$: the latent feature matrix of object roles in the i-th snapshot
$H^i_{r\times r}$: the interaction matrix of object roles in the i-th snapshot
$W_{r\times c}$: the coefficient matrix for learning object role classes

B. Detection on Static Networks

In this section, we propose a probability model for object role detection on static networks. This model is the basis for the dynamic object role analysis.

Unlike the existing methods [4]–[6], which view object roles as object "coordinates", we interpret the role of each object as the set of its properties that determine how, and to what extent, the object impacts the other objects in the formation and the dynamics of the network.

To explain the intuition behind this definition and how it differs from the "coordinates" concept, we give an example based on the trade history of the ancient Tamil country [7], a region in southern India. In ancient times, people there were frequently involved in both local and international trade, and in both inland and overseas trade. We summarize the rules of this trade as follows.

1) The closer two objects were, the more likely they were to trade/interact. In ancient Tamil, most trade was by barter, which was prevalent locally. As a result, more trades were performed locally than internationally.

2) Objects with a larger activity range had a higher ability to interact with other objects. As evidence, the development of seamanship and the discovery of new routes significantly increased the trade between Tamil and Rome.

3) The properties of two objects determined how they interacted. For instance, in the trade between Tamil and Rome, Tamil exported pepper, ivory and gold and imported glass, coral and wine, according to the different goods each side could produce. As another example, changes of the Emperor of Rome had a significant impact on the trade between Tamil and Rome.

The posterior of Λ and H is then:

$$p(\Lambda, H \mid E, \sigma^2) = \frac{p(E \mid \Lambda, H, \sigma^2)\, p(\Lambda)\, p(H)}{p(E)}. \tag{1}$$

By the model in Eq.1, we can extract the latent feature

representation Λ of object roles as well as the role interaction

matrix H from an observable link matrix E of a static network.

In a network representation of the trades of ancient Tamil, each node denotes an object that participated in the trade, and each link represents a trade. Rules 1 and 2 determine whether there is a link between two objects. Rule 3 determines the weights of the links, which represent the interaction types of the objects.

In this process, two factors impact the formation of the network: object coordinates and object roles. The coordinates of objects, covering rule 1, determine how close each pair of objects is. The roles of objects, covering rules 2 and 3, determine how, and to what extent, each object impacts the others. The objective of this paper is to explore how to detect, analyze and predict such object roles.

The Supervised Model on Learning Object Roles

In our experimental setting, we assume that there are several labeled objects at the first time point. These labels guide the learning of each type of object role and maximize the margins between different object roles.

For the first snapshot, the label matrix for the labeled objects is $\hat{Y}_{m\times c}$, where m is the number of labeled objects. To extend the unsupervised model to supervised cases, we introduce a feature coefficient matrix $W_{r\times c}$, which measures the contribution of each feature in Λ to the object role classes:

$$p(\hat{Y} \mid \Lambda, W) = \prod_{i=1}^{m}\prod_{j=1}^{c} \mathcal{N}\left(\hat{Y}_{ij} \mid (\hat{\Lambda} \cdot W)_{ij}, \sigma_Y^2\right). \tag{2}$$

In Eq.2, $\hat{\Lambda}$ denotes the latent role features of the labeled objects, and $\sigma_Y^2$ is the noise variance of the Gaussian distribution.

The prior for the feature weighting matrix W is:

$$p(W) \propto \exp\left(-\sum_{i=1}^{c} \frac{\|W_{:,i}\|^2}{2C_{W_i}}\right) = \exp\left(-\frac{\mathrm{Tr}(W C_W^{-1} W^{\top})}{2}\right).$$

In this prior, W is column-wise independent, and $C_W = diag\{C_{W_1}, C_{W_2}, \ldots, C_{W_c}\}$ is the covariance matrix of the prior.

The Unsupervised Model on Learning Object Roles

Suppose object coordinates are a-dimensional and object roles are r-dimensional. Let $\Xi_{n\times a}$ and $\Lambda_{n\times r}$ be the latent coordinate matrix and the latent role matrix for n objects, respectively. An observed link $E_{ij}$ from a source object i to a target object j is generated as:

$$E_{ij} = \Lambda_{i,:} \cdot M_{\rightarrow} \cdot \left(\Lambda_{j,:} \cdot M_{\leftarrow}^{\top}\right)^{\top} \cdot K(\Xi_{i,:}, \Xi_{j,:}) + \epsilon.$$

In the above equation, $M_{\rightarrow}$ measures how the role $\Lambda_{i,:}$ of the source object i impacts the link $E_{ij}$. Similarly, $M_{\leftarrow}$ measures how the role $\Lambda_{j,:}$ of the target object j impacts the link $E_{ij}$. $K(\Xi_{i,:}, \Xi_{j,:})$ is a closeness function measuring how close objects i and j are: the larger $K(\Xi_{i,:}, \Xi_{j,:})$ is, the more likely objects i and j are to interact.

Over the past few years, extensive work has been done to estimate the latent closeness of two objects in networks. In this paper, we use the truncated Katz kernel [8] for this purpose. With this kernel, the closeness matrix K on a network E is $K = \sum_{i=1}^{r} \alpha^{i} E^{i}$, where $E^{i}$ here denotes the i-th matrix power of E, and $K(\Xi_{i,:}, \Xi_{j,:}) = K_{ij}$.
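The following is a minimal NumPy sketch of the truncated Katz closeness $K = \sum_{i=1}^{r} \alpha^{i} E^{i}$. The decay `alpha`, the truncation length and the helper name `truncated_katz` are illustrative choices, since the text does not fix their values. The sketch also assumes a dense adjacency matrix; for large networks a sparse representation would likely be preferable.

```python
import numpy as np


def truncated_katz(E: np.ndarray, alpha: float, r: int) -> np.ndarray:
    """Closeness matrix K = sum_{i=1}^{r} alpha**i * E^i, where E^i is the i-th matrix power."""
    n = E.shape[0]
    K = np.zeros((n, n))
    power = np.eye(n)
    for i in range(1, r + 1):
        power = power @ E            # E^i, built incrementally from E^(i-1)
        K += (alpha ** i) * power    # walks of length i, damped by alpha^i
    return K


# Usage: a 3-node path graph 0 - 1 - 2
E_path = np.array([[0.0, 1.0, 0.0],
                   [1.0, 0.0, 1.0],
                   [0.0, 1.0, 0.0]])
K_path = truncated_katz(E_path, alpha=0.5, r=2)
```

In this path graph, objects 0 and 2 are not linked, yet they receive a non-zero closeness of 0.25 through their common neighbour. This is the kind of latent closeness that the factor $K(\Xi_{i,:}, \Xi_{j,:})$ is meant to capture.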

For the supervised cases, the posterior is:

$$p(\Lambda, H, W \mid E, \hat{Y}) = \frac{p(E \mid \Lambda, H)\, p(\hat{Y} \mid \Lambda, W)\, p(\Lambda)\, p(H)\, p(W)}{p(E, \hat{Y})}. \tag{3}$$

Given E and $\hat{Y}$, maximizing Eq.3 yields three quantities: the latent feature representation Λ of object roles, the interaction matrix H, and the coefficient matrix W for learning object role classes on Λ.

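Assume each $E_{ij}$ is independently generated from a Gaussian distribution with mean $\Lambda_{i,:} \cdot M_{\rightarrow} \cdot (\Lambda_{j,:} \cdot M_{\leftarrow}^{\top})^{\top} \cdot K_{ij}$ and noise variance $\sigma^2$. The conditional distribution of the observed link matrix E is then

$$p(E \mid \Lambda, H, \sigma^2) = \prod_{i=1}^{n}\prod_{j=1}^{n} \mathcal{N}\left(E_{ij} \mid (\Lambda H \Lambda^{\top} \circ K)_{ij}, \sigma^2\right),$$

in which $H = M_{\rightarrow} \cdot M_{\leftarrow}$ is the object interaction matrix measuring how each object contributes to each link.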
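As a sketch of what the conditional distribution above evaluates, the function below computes $\log p(E \mid \Lambda, H, \sigma^2)$ for dense matrices. It treats all $n^2$ entries as observed and independent, as the model states. The name `link_log_likelihood` is hypothetical and not taken from the paper.

```python
import numpy as np


def link_log_likelihood(E, Lam, H, K, sigma2):
    """log p(E | Lam, H, sigma2) with E_ij ~ N((Lam H Lam^T o K)_ij, sigma2), independent entries."""
    mean = (Lam @ H @ Lam.T) * K          # '*' on arrays is the Hadamard product
    resid = E - mean
    n_entries = E.size
    return (-0.5 * n_entries * np.log(2.0 * np.pi * sigma2)
            - 0.5 * np.sum(resid ** 2) / sigma2)


# Usage: links generated exactly by the model versus links with a constant offset
rng = np.random.default_rng(0)
Lam = rng.normal(size=(4, 2))
H = rng.normal(size=(2, 2))
K = rng.random((4, 4))
E_exact = (Lam @ H @ Lam.T) * K
ll_exact = link_log_likelihood(E_exact, Lam, H, K, 0.5)
ll_noisy = link_log_likelihood(E_exact + 0.3, Lam, H, K, 0.5)
```

Because the mean multiplies $\Lambda H \Lambda^{\top}$ by K entry-wise, a pair of objects with large closeness but dissimilar roles can still receive a small predicted link weight. This is the separation between "coordinates" and roles that the model is built around.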

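Suppose the priors of the latent feature matrix Λ of the object roles and of the object interaction matrix H follow spherical Gaussian distributions and are column-wise independent. We then have

$$p(\Lambda) \propto \exp\left(-\sum_{f=1}^{r} \frac{\|\Lambda_{:,f}\|^2}{2C_{\Lambda_f}}\right) = \exp\left(-\frac{\mathrm{Tr}(\Lambda C_{\Lambda}^{-1} \Lambda^{\top})}{2}\right), \qquad p(H) \propto \exp\left(-\sum_{f=1}^{r} \frac{\|H_{:,f}\|^2}{2C_{H_f}}\right) = \exp\left(-\frac{\mathrm{Tr}(H C_{H}^{-1} H^{\top})}{2}\right).$$

In the above priors, $C_{\Lambda} = diag\{C_{\Lambda_1}, C_{\Lambda_2}, \ldots, C_{\Lambda_r}\}$ and $C_H = diag\{C_{H_1}, C_{H_2}, \ldots, C_{H_r}\}$ are the prior variances of those columns.

Solution of the Supervised Model

1) Variational Bayesian Inference: In this part, we apply the variational Bayesian technique [9], [10] to maximize Eq.3. Suppose there is a trial distribution $Q(\Lambda, H, W)$ on the matrices Λ, H and W with the constraint $\int Q(\Lambda, H, W)\, d\Lambda\, dH\, dW = 1$. The free energy of the system is

$$\mathcal{F} = E_{Q(\Lambda,H,W)}\left[\log p(E, \hat{Y}, \Lambda, H, W) - \log Q(\Lambda, H, W)\right], \tag{4}$$

which can be further formulated as

$$\begin{aligned}
\mathcal{F} &= E_{Q(\Lambda,H,W)}\left[\log p(\Lambda, H, W \mid E, \hat{Y}) + \log p(E, \hat{Y}) - \log Q(\Lambda, H, W)\right] \\
&= \log p(E, \hat{Y}) - KL\left(Q(\Lambda, H, W) \,\|\, p(\Lambda, H, W \mid E, \hat{Y})\right) \\
&\le \log p(E, \hat{Y}).
\end{aligned} \tag{5}$$

In Eq.5, $E_{q(x)}(f(x))$ denotes the expectation of the function f(x) with respect to a distribution q(x), and $KL(q \,\|\, p) = \int q(x) \log \frac{q(x)}{p(x)}\, dx$ is the Kullback-Leibler (KL) divergence between q and p. A KL divergence is always non-negative, so the last line of Eq.5 shows that $\mathcal{F}$ is a lower bound on the evidence $\log p(E, \hat{Y})$.

The free energy $\mathcal{F}$ reaches its maximum if and only if $Q(\Lambda, H, W) = p(\Lambda, H, W \mid E, \hat{Y})$. However, computing this Q exactly is usually intractable, because Λ, H and W are high-dimensional and interact with each other. Therefore, we apply the variational approximation $Q(\Lambda, H, W) = Q(\Lambda)Q(H)Q(W)$.

Under the variational Bayesian framework, the variational posteriors of the matrices Λ, H and W can be iteratively updated through the following rules:

$$\begin{aligned}
Q(\Lambda) &= \frac{1}{z_{\Lambda}} \exp\left(E_{H,W}\left[\log p(E, \hat{Y}, \Lambda, H, W)\right]\right), \\
Q(H) &= \frac{1}{z_{H}} \exp\left(E_{\Lambda,W}\left[\log p(E, \hat{Y}, \Lambda, H, W)\right]\right), \\
Q(W) &= \frac{1}{z_{W}} \exp\left(E_{\Lambda,H}\left[\log p(E, \hat{Y}, \Lambda, H, W)\right]\right),
\end{aligned} \tag{6}$$

where $z_{\Lambda}$, $z_H$ and $z_W$ are normalizing constants that enforce $\int Q(\Lambda)\, d\Lambda = 1$, $\int Q(H)\, dH = 1$ and $\int Q(W)\, dW = 1$.

In the iterative solution, the conditional probability of the observed links $p(E \mid \Lambda, H)$ introduces a term that multiplies $\Lambda H \Lambda^{\top}$ by itself. This term is quadratic in H and quartic in Λ, and it may cause high time and space complexities. Therefore, in each iteration we use the $\Lambda^{*}$ optimized in the previous iteration and write

$$p(E \mid \Lambda, H) = \prod_{i=1}^{n}\prod_{j=1}^{r} \mathcal{N}\left(\hat{E}_{ij} \mid \Lambda_{i,:} H_{:,j}, \sigma_E^2\right), \tag{7}$$

in which $\hat{E} = E \oslash K / \Lambda^{*}$.

To verify that this simplification is correct, one must prove that the optimal Λ and H obtained under it also optimize the original conditional probability. The proof is straightforward, and we omit the details due to space limitations.

Plugging in the model for $p(\Lambda, H, W \mid E, \hat{Y})$, we can compute the variational posterior Q(Λ) through

$$\begin{aligned}
E_{H,W}\left[\log p(E, \hat{Y}, \Lambda, H, W)\right] = &-\frac{1}{2}\sum_{i=1}^{n}\Bigg[\Lambda_{i,:}\Bigg(C_{\Lambda}^{-1} + \frac{1}{\sigma_E^2}\sum_{j=1}^{r} E_H\left(H_{:,j} H_{:,j}^{\top}\right) + \frac{\delta(\hat{Y}, i)}{\sigma_Y^2}\sum_{k=1}^{c} E_W\left(W_{:,k} W_{:,k}^{\top}\right)\Bigg)\Lambda_{i,:}^{\top} \\
&\quad - 2\Lambda_{i,:}\Bigg(\frac{1}{\sigma_E^2}\sum_{j=1}^{r} \hat{E}_{ij}\, E_H(H_{:,j}) + \frac{\delta(\hat{Y}, i)}{\sigma_Y^2}\sum_{k=1}^{c} \hat{Y}_{i,k}\, E_W(W_{:,k})\Bigg)\Bigg] + \rho,
\end{aligned} \tag{8}$$

where ρ is a constant that does not depend on Λ, and $\delta(\hat{Y}, i)$ is the indicator function that equals 1 if the labeled set includes object i and 0 otherwise.

Comparing with Eq.6, the derivation leads to the following rules:

$$Q(\Lambda) \propto \prod_{i=1}^{n} \exp\left(-\frac{1}{2}(\Lambda_{i,:} - \bar{\Lambda}_{i,:})\, \Phi_{\Lambda_i}^{-1}\, (\Lambda_{i,:} - \bar{\Lambda}_{i,:})^{\top}\right), \tag{9}$$

where

$$\begin{aligned}
\bar{\Lambda}_{i,:} &= \left(\frac{1}{\sigma_E^2}\sum_{j=1}^{r} \hat{E}_{ij}\bar{H}_{:,j}^{\top} + \frac{\delta(\hat{Y}, i)}{\sigma_Y^2}\sum_{k=1}^{c} \hat{Y}_{i,k}\bar{W}_{:,k}^{\top}\right)\Phi_{\Lambda_i}, \\
\Phi_{\Lambda_i}^{-1} &= C_{\Lambda}^{-1} + \frac{1}{\sigma_E^2}\sum_{j=1}^{r}\left(\bar{H}_{:,j}\bar{H}_{:,j}^{\top} + \Phi_{H_j}\right) + \frac{\delta(\hat{Y}, i)}{\sigma_Y^2}\sum_{k=1}^{c}\left(\bar{W}_{:,k}\bar{W}_{:,k}^{\top} + \Phi_{W_k}\right).
\end{aligned} \tag{10}$$

The variational posterior of the factor matrix H is computed in a similar way, which gives

$$Q(H) \propto \prod_{j=1}^{r} \exp\left(-\frac{1}{2}(H_{:,j} - \bar{H}_{:,j})^{\top}\, \Phi_{H_j}^{-1}\, (H_{:,j} - \bar{H}_{:,j})\right), \tag{11}$$

where

$$\bar{H}_{:,j} = \Phi_{H_j}\frac{1}{\sigma_E^2}\sum_{i=1}^{n} \hat{E}_{ij}\bar{\Lambda}_{i,:}^{\top}, \qquad \Phi_{H_j}^{-1} = C_H^{-1} + \frac{1}{\sigma_E^2}\sum_{i=1}^{n}\left(\bar{\Lambda}_{i,:}^{\top}\bar{\Lambda}_{i,:} + \Phi_{\Lambda_i}\right). \tag{12}$$

Similarly, the variational posterior of W is computed through

$$Q(W) \propto \prod_{k=1}^{c} \exp\left(-\frac{1}{2}(W_{:,k} - \bar{W}_{:,k})^{\top}\, \Phi_{W_k}^{-1}\, (W_{:,k} - \bar{W}_{:,k})\right), \tag{13}$$

where

$$\bar{W}_{:,k} = \Phi_{W_k}\frac{1}{\sigma_Y^2}\sum_{i=1}^{n} \delta(\hat{Y}, i)\hat{Y}_{ik}\bar{\Lambda}_{i,:}^{\top}, \qquad \Phi_{W_k}^{-1} = C_W^{-1} + \frac{1}{\sigma_Y^2}\sum_{i=1}^{n} \delta(\hat{Y}, i)\hat{Y}_{ik}\left(\bar{\Lambda}_{i,:}^{\top}\bar{\Lambda}_{i,:} + \Phi_{\Lambda_i}\right). \tag{14}$$

By iteratively updating Λ, H and W with the above rules until convergence, we obtain the (locally) optimal solution.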
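The updates in Eqs. 10, 12 and 14 can be sketched as a coordinate-ascent loop. This is a minimal NumPy sketch, not the authors' implementation, and it rests on several assumptions:

- Ê (Eq. 7) is already computed.
- The hyper-parameters stay fixed instead of being re-estimated by the parameter-setting rules.
- The prior variances are scalars, i.e., $C_{\Lambda} = C_{\Lambda} I$ and similarly for $C_H$ and $C_W$.
- The name `vb_static_updates` is hypothetical.
- The W precision keeps the $\hat{Y}_{ik}$ weight exactly as printed in Eq. 14.

```python
import numpy as np


def vb_static_updates(E_hat, Y_hat, labeled, C_lam, C_h, C_w,
                      sigma_e2, sigma_y2, r, n_iter=50, seed=0):
    """Coordinate ascent over Q(Lambda), Q(H), Q(W) following Eqs. 10, 12 and 14.

    E_hat   : (n, r) surrogate link matrix from Eq. 7 (assumed precomputed)
    Y_hat   : (n, c) label matrix; rows of unlabeled objects are ignored
    labeled : (n,) boolean mask playing the role of delta(Y_hat, i)
    C_lam, C_h, C_w : scalar prior variances (isotropic assumption)
    """
    rng = np.random.default_rng(seed)
    n, c = Y_hat.shape
    I = np.eye(r)
    Lam = rng.normal(scale=0.1, size=(n, r)); Phi_lam = np.stack([I] * n)
    H = rng.normal(scale=0.1, size=(r, r));   Phi_h = np.stack([I] * r)
    W = rng.normal(scale=0.1, size=(r, c));   Phi_w = np.stack([I] * c)
    for _ in range(n_iter):
        # Eq. 10: Gaussian posterior of each row of Lambda
        S_h = sum(np.outer(H[:, j], H[:, j]) + Phi_h[j] for j in range(r))
        S_w = sum(np.outer(W[:, k], W[:, k]) + Phi_w[k] for k in range(c))
        for i in range(n):
            d = float(labeled[i])
            Phi_lam[i] = np.linalg.inv(I / C_lam + S_h / sigma_e2 + d * S_w / sigma_y2)
            lin = E_hat[i] @ H.T / sigma_e2 + d * (Y_hat[i] @ W.T) / sigma_y2
            Lam[i] = lin @ Phi_lam[i]
        # Eq. 12: Gaussian posterior of each column of H
        S_lam = Lam.T @ Lam + Phi_lam.sum(axis=0)
        for j in range(r):
            Phi_h[j] = np.linalg.inv(I / C_h + S_lam / sigma_e2)
            H[:, j] = Phi_h[j] @ (Lam.T @ E_hat[:, j]) / sigma_e2
        # Eq. 14 as printed: labeled objects weighted by Y_hat[i, k]
        for k in range(c):
            wts = labeled * Y_hat[:, k]
            S = (Lam * wts[:, None]).T @ Lam + np.tensordot(wts, Phi_lam, axes=1)
            Phi_w[k] = np.linalg.inv(I / C_w + S / sigma_y2)
            W[:, k] = Phi_w[k] @ (Lam.T @ wts) / sigma_y2
    return Lam, H, W


# Usage on a small random problem with 4 of 8 objects labeled
rng = np.random.default_rng(1)
n, r, c = 8, 2, 2
E_hat = rng.normal(size=(n, r))
Y_hat = np.eye(c)[rng.integers(0, c, size=n)]
labeled = np.zeros(n, dtype=bool)
labeled[:4] = True
Lam_fit, H_fit, W_fit = vb_static_updates(E_hat, Y_hat, labeled, 1.0, 1.0, 1.0, 0.5, 0.5, r, n_iter=20)
```

Each update keeps the other two factors at their current posterior means and covariances, which is the mean-field factorization $Q(\Lambda)Q(H)Q(W)$ applied one block at a time.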

2) Parameter Setting: To set the hyper-parameters $\sigma_E^2$, $\sigma_Y^2$, $C_{\Lambda}$, $C_H$ and $C_W$, we take the derivatives of the expected log evidence $E_{\Lambda,H,W}[\log p(E, \hat{Y}, \Lambda, H, W)]$ with respect to each hyper-parameter and set them to 0. This gives

$$\sigma_E^2 = \frac{1}{n \cdot r}\sum_{i=1}^{n}\sum_{j=1}^{r}\left[\hat{E}_{ij}^2 - 2\hat{E}_{ij}\bar{\Lambda}_{i,:}\bar{H}_{:,j} + \mathrm{Tr}\left((\Phi_{\Lambda_i} + \bar{\Lambda}_{i,:}^{\top}\bar{\Lambda}_{i,:})(\Phi_{H_j} + \bar{H}_{:,j}\bar{H}_{:,j}^{\top})\right)\right], \tag{15}$$

$$\sigma_Y^2 = \frac{1}{|\hat{Y}| \cdot c}\sum_{i=1}^{n}\sum_{k=1}^{c}\delta(\hat{Y}, i)\left[\hat{Y}_{ik}^2 - 2\hat{Y}_{ik}\bar{\Lambda}_{i,:}\bar{W}_{:,k} + \mathrm{Tr}\left((\Phi_{\Lambda_i} + \bar{\Lambda}_{i,:}^{\top}\bar{\Lambda}_{i,:})(\Phi_{W_k} + \bar{W}_{:,k}\bar{W}_{:,k}^{\top})\right)\right],$$

$$C_{\Lambda_l} = \frac{1}{n}\sum_{i=1}^{n}\left((\Phi_{\Lambda_i})_{l,l} + \bar{\Lambda}_{i,l}^2\right), \quad C_{H_l} = \frac{1}{r}\sum_{j=1}^{r}\left((\Phi_{H_j})_{ll} + \bar{H}_{jl}^2\right), \quad C_{W_l} = \frac{1}{r}\sum_{h=1}^{r}\left((\Phi_{W_l})_{ll} + \bar{W}_{hl}^2\right). \tag{16-18}$$

C. Detection and Analysis on Dynamic Networks

In this section, we extend the above probabilistic model to detecting and analyzing object roles on dynamic networks.

Let $E^i$ denote the observed link matrix at the i-th sampled time point. Given a sequence of such sampled link matrices $\{E^1, E^2, \ldots, E^t\}$, our goal in analyzing network dynamics is to obtain a sequence of low-rank matrices $\{\{\Lambda^1, H^1\}, \{\Lambda^2, H^2\}, \ldots, \{\Lambda^t, H^t\}\}$. The i-th pair $\{\Lambda^i, H^i\}$ approximates $E^i$. It captures the role of each object at the i-th time point and the interaction patterns of these object roles.

The Object Role Model for Dynamic Networks

Let $D^i = \{\Lambda^i, H^i\}$ denote the pair of low-rank matrices we wish to extract at the i-th time point. Fig. 1 shows the graphical model of our analysis framework. The observed network $E^i$ at the i-th snapshot is generated by the latent parameters $D^i$. In turn, $D^i$ is determined by three factors: the previous latent parameters $D^{1:i-1}$ ($D^1$ to $D^{i-1}$), the current object roles $\Lambda^i$, and the current object interaction pattern $H^i$.

Fig. 1: The Graphical Model Representation

In the first snapshot, no previous latent parameters $D^{1:i-1}$ exist, so the object roles in $E^1$ can be extracted with the models proposed in Section II-B. In this paper, we assume that labeled information exists in the first snapshot, so we use the supervised model of Section II-B.

At each snapshot i for $i \in [2, t]$, $D^{i-1}$ has already been calculated and $E^i$ is observable. Assuming that $D^{1:i-1}$ and $E^i$ are independent, the posterior distribution of the parameter set $D^i$ is

$$p(D^i \mid D^{1:i-1}, E^i) = p(D^i \mid D^{1:i-1})\, p(D^i \mid E^i).$$

Since $p(E^i \mid D^i) \equiv p(E^i \mid \Lambda^i, H^i)$, $p(E^i \mid D^i)$ can be well approximated by Eq.7. The only remaining question is how to estimate $p(D^i \mid D^{1:i-1}) \equiv p(\Lambda^i, H^i \mid \Lambda^{1:i-1}, H^{1:i-1})$. We place Gaussian priors on $\Lambda^i$ and $H^i$ as follows:

$$p(\Lambda^i \mid \Omega^i_{\Lambda}, \Sigma^i_{\Lambda}) = \prod_{j=1}^{r}\mathcal{N}\left(\Lambda^i_{:,j} \mid \Omega^i_{\Lambda:,j}, \Sigma^i_{\Lambda_j} I\right), \tag{19}$$

$$p(H^i \mid \Omega^i_{H}, \Sigma^i_{H}) = \prod_{j=1}^{r}\mathcal{N}\left(H^i_{:,j} \mid \Omega^i_{H:,j}, \Sigma^i_{H_j} I\right). \tag{20}$$

In the above Gaussian priors, $\Omega^i_{\Lambda}$ and $\Omega^i_{H}$ are the best estimates of $\Lambda^i$ and $H^i$ based on $D^{1:i-1}$, respectively. $\Sigma^i_{\Lambda} = diag\{\Sigma^i_{\Lambda_1}, \Sigma^i_{\Lambda_2}, \ldots, \Sigma^i_{\Lambda_r}\}$ and $\Sigma^i_{H} = diag\{\Sigma^i_{H_1}, \Sigma^i_{H_2}, \ldots, \Sigma^i_{H_r}\}$ are the corresponding variance matrices.

Let $\Lambda^i$ for $i \in [1, t]$ be a time series matrix that is independent of the other variables. When i = 2, we only have $\Lambda^1$ and no change of Λ has been observed, so the best prediction of $\Omega^2_{\Lambda}$ is $\Lambda^1$. For $i \ge 3$, we approximate $\Omega^i_{\Lambda}$ with the MAR(1) (multivariate autoregressive) model [11]:

$$\Lambda^i = \Lambda^{i-1} \cdot A_{\Lambda} + \epsilon^i_{\Lambda}, \tag{21}$$

where $\epsilon^i_{\Lambda}$ is Gaussian noise with zero mean and variance $\Sigma^i_{\Lambda}$. Let $X^i_{\Lambda} = [\Lambda^1, \Lambda^2, \ldots, \Lambda^{i-2}]$ and $B^i_{\Lambda} = [\Lambda^2, \Lambda^3, \ldots, \Lambda^{i-1}]$. Maximum likelihood estimation then gives

$$A_{\Lambda} = (X^{i\top}_{\Lambda} X^i_{\Lambda})^{-1} X^{i\top}_{\Lambda} B^i_{\Lambda}, \tag{22}$$

$$\Omega^i_{\Lambda} = \Lambda^{i-1} \cdot A_{\Lambda}, \tag{23}$$

$$\Sigma^i_{\Lambda} = \frac{1}{i - 2 - r^2}\left(B^i_{\Lambda} - X^i_{\Lambda} A_{\Lambda}\right)^{\top}\left(B^i_{\Lambda} - X^i_{\Lambda} A_{\Lambda}\right). \tag{24}$$

Similarly, let $X^i_H = [H^1, H^2, \ldots, H^{i-2}]$ and $B^i_H = [H^2, H^3, \ldots, H^{i-1}]$. For H we have

$$A_H = (X^{i\top}_H X^i_H)^{-1} X^{i\top}_H B^i_H, \tag{25}$$

$$\Omega^i_H = H^{i-1} \cdot A_H, \tag{26}$$

$$\Sigma^i_H = \frac{1}{i - 2 - r^2}\left(B^i_H - X^i_H A_H\right)^{\top}\left(B^i_H - X^i_H A_H\right). \tag{27}$$

Since $\Lambda^i$ and $H^i$ are independent of each other, we estimate $p(D^i \mid D^{i-1})$ as

$$p(D^i \mid D^{i-1}) \approx p(\Lambda^i \mid \Omega^i_{\Lambda}, \Sigma^i_{\Lambda})\, p(H^i \mid \Omega^i_H, \Sigma^i_H) = \prod_{j=1}^{r}\mathcal{N}\left(\Lambda^i_{:,j} \mid \Omega^i_{\Lambda:,j}, \Sigma^i_{\Lambda_j} I\right)\prod_{j=1}^{r}\mathcal{N}\left(H^i_{:,j} \mid \Omega^i_{H:,j}, \Sigma^i_{H_j} I\right). \tag{28}$$

Thus, the evidence we wish to maximize is

$$p(\Lambda^i, H^i, E^i, D^{i-1}) \propto p(E^i \mid \Lambda^i, H^i)\, p(\Lambda^i)\, p(H^i)\, p(\Lambda^i \mid \Omega^i_{\Lambda}, \Sigma^i_{\Lambda})\, p(H^i \mid \Omega^i_H, \Sigma^i_H). \tag{29}$$

Eq.29 is the model for learning object roles on dynamic networks. Specifically, given the previously extracted features $D^{1:i-1}$ and the current observable network $E^i$, we can learn the current latent object roles $\Lambda^i$ and their interaction pattern $H^i$.
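Here is a minimal sketch of the MAR(1) step that supplies the prior mean $\Omega^i_{\Lambda}$ (Eqs. 21 to 23). It makes two assumptions. First, the same n objects appear in every snapshot. Second, the snapshots are stacked vertically, so that $A_{\Lambda}$ is an r×r matrix. The sketch uses `np.linalg.lstsq`, which returns the least-squares solution $(X^{\top}X)^{-1}X^{\top}B$ when X has full column rank. The helper names `fit_mar1` and `prior_mean` are hypothetical, and the variance estimate of Eq. 24 is left out.

```python
import numpy as np


def fit_mar1(history):
    """Least-squares fit of Lambda^i = Lambda^{i-1} A from a list [Lambda^1, ..., Lambda^{i-1}] of (n, r) arrays."""
    X = np.vstack(history[:-1])   # Lambda^1 ... Lambda^{i-2}, stacked row-wise
    B = np.vstack(history[1:])    # Lambda^2 ... Lambda^{i-1}
    A, *_ = np.linalg.lstsq(X, B, rcond=None)
    resid = B - X @ A
    return A, resid


def prior_mean(history):
    """Omega^i: Lambda^1 when only one snapshot is known (i = 2), otherwise Lambda^{i-1} A."""
    if len(history) == 1:
        return history[0].copy()
    A, _ = fit_mar1(history)
    return history[-1] @ A


# Usage: noise-free roles that drift by a fixed linear map
rng = np.random.default_rng(0)
A_true = np.array([[0.9, 0.1], [0.0, 0.8]])
L1 = rng.normal(size=(6, 2))
hist = [L1, L1 @ A_true, L1 @ A_true @ A_true]
A_hat, resid = fit_mar1(hist)
omega = prior_mean(hist)
```

The same two functions apply unchanged to the interaction matrices $H^i$ (Eqs. 25 and 26), since those are also stacked snapshot by snapshot.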

Solution of the Model for Dynamic Networks

Since $E^{1:t}$ are all observable and $\Lambda^t$ has already been extracted, maximizing the logarithm of the above posterior probability gives $\Lambda^{t+1} = \Lambda^t \cdot A_{\Lambda}$.

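1) Variational Bayesian Inference: To optimize the objective in Eq.29, we follow an optimization process that is almost identical to the one in Section II-B1, so we skip the inference here. In this solution, we iteratively update

$$\bar{\Lambda}^i_{j,:} = \left(\frac{1}{\sigma_E^2}\sum_{h=1}^{r}\hat{E}^i_{jh}(\bar{H}^i_{:,h})^{\top} + \sum_{h=1}^{r}\frac{\Omega^i_{\Lambda j,h}}{\Sigma^i_{\Lambda_h}}\right)\Phi^i_{\Lambda_j},$$

$$(\Phi^i_{\Lambda_j})^{-1} = (C^i_{\Lambda})^{-1} + (\Sigma^i_{\Lambda})^{-1} + \frac{1}{\sigma_E^2}\sum_{h=1}^{r}\left(\bar{H}^i_{:,h}(\bar{H}^i_{:,h})^{\top} + \Phi^i_{H_h}\right), \tag{30}$$

$$\bar{H}^i_{:,h} = \Phi^i_{H_h}\left(\frac{1}{\sigma_E^2}\sum_{j=1}^{n}\hat{E}^i_{jh}(\bar{\Lambda}^i_{j,:})^{\top} + \sum_{g=1}^{r}\frac{\Omega^i_{H g,h}}{\Sigma^i_{H_h}}\right),$$

$$(\Phi^i_{H_h})^{-1} = (C^i_{H})^{-1} + (\Sigma^i_{H})^{-1} + \frac{1}{\sigma_E^2}\sum_{j=1}^{n}\left((\bar{\Lambda}^i_{j,:})^{\top}\bar{\Lambda}^i_{j,:} + \Phi^i_{\Lambda_j}\right). \tag{31}$$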
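The row update in Eq. 30 can be read as a Gaussian posterior that adds a temporal prior pull to the static update. The following NumPy sketch covers one row and rests on three assumptions:

- The prior term $\sum_h \Omega^i_{\Lambda j,h}/\Sigma^i_{\Lambda_h}$ is interpreted as the row vector with entries $\Omega^i_{\Lambda j,h}/\Sigma^i_{\Lambda_h}$, i.e., the usual precision-weighted prior mean.
- $C^i_{\Lambda}$ and $\Sigma^i_{\Lambda}$ are given by their diagonals.
- The name `update_lambda_row` is hypothetical.

```python
import numpy as np


def update_lambda_row(e_hat_row, H, Phi_h, omega_row, sigma_lam_diag, C_lam_diag, sigma_e2):
    """Posterior mean and covariance of one row Lambda^i_{j,:} (Eq. 30, under the stated reading).

    e_hat_row      : (r,) row j of E_hat^i
    H, Phi_h       : (r, r) posterior mean of H^i and (r, r, r) column covariances Phi_{H_h}
    omega_row      : (r,) prior mean Omega^i_{Lambda j,:} from the MAR(1) step
    sigma_lam_diag : (r,) diagonal of Sigma^i_Lambda
    C_lam_diag     : (r,) diagonal of C^i_Lambda
    """
    r = H.shape[0]
    S_h = sum(np.outer(H[:, h], H[:, h]) + Phi_h[h] for h in range(r))
    precision = np.diag(1.0 / C_lam_diag) + np.diag(1.0 / sigma_lam_diag) + S_h / sigma_e2
    Phi = np.linalg.inv(precision)
    # link evidence plus the temporal prior pull, each weighted by its precision
    linear = e_hat_row @ H.T / sigma_e2 + omega_row / sigma_lam_diag
    return linear @ Phi, Phi


# Usage: with a very tight temporal prior, the row stays at the MAR(1) prediction
rng = np.random.default_rng(2)
r = 3
H_cur = rng.normal(size=(r, r))
Phi_h_cur = np.stack([0.1 * np.eye(r)] * r)
omega_j = np.array([1.0, -2.0, 0.5])
mean_j, Phi_j = update_lambda_row(rng.normal(size=r), H_cur, Phi_h_cur, omega_j,
                                  np.full(r, 1e-8), np.ones(r), 1.0)
```

Shrinking $\Sigma^i_{\Lambda}$ makes the learned roles follow the MAR(1) prediction $\Omega^i_{\Lambda}$. Letting it grow recovers the static update of Eq. 10 without labels. This is the trade-off the dynamic model encodes.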

By sequential inference, we can predict the object roles in the (t+s)-th snapshot as $\Lambda^{t+s} = \Lambda^{t} \cdot A_{\Lambda}^{s}$, i.e., by applying $A_{\Lambda}$ s times.

With the predicted $\Lambda^{t+s}$, the object role probability in the (t+s)-th snapshot is estimated as $p(F_j^{t+s} \mid V_i^{t+s}) = \Lambda^{t+s}_{i,:}\bar{W}_{:,j}$. We can then obtain the role of each object following the method at the end of Section II-C.
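The prediction rule above, combined with the role scoring of Eq.34, can be sketched as follows. This is an illustration under assumptions. `predict_roles` is a hypothetical helper, $A_{\Lambda}$ is taken as already fitted, and the scores $\Lambda^{t+s}W$ are treated as unnormalized class scores, so only their argmax is used.

```python
import numpy as np


def predict_roles(Lam_t, A, W, s=1):
    """Predict Lambda^{t+s} = Lambda^t A^s, score classes with Lambda^{t+s} W, pick the top class."""
    Lam_future = Lam_t @ np.linalg.matrix_power(A, s)
    scores = Lam_future @ W            # entry (i, j) plays the role of p(F_j | V_i)
    return Lam_future, scores, scores.argmax(axis=1)


# Usage: a transition that swaps the two role features at every step
Lam_t = np.eye(2)
A_swap = np.array([[0.0, 1.0], [1.0, 0.0]])
W_id = np.eye(2)
_, scores1, roles1 = predict_roles(Lam_t, A_swap, W_id, s=1)
_, scores2, roles2 = predict_roles(Lam_t, A_swap, W_id, s=2)
```

With this swapping transition, the predicted classes flip after one step (`roles1` is `[1, 0]`) and return after two steps (`roles2` is `[0, 1]`).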

2) Parameter Setting: Taking derivatives as in Section II-B2, we obtain

$$\sigma_E^{2} = \frac{1}{n \cdot r}\sum_{j=1}^{n}\sum_{h=1}^{r}\left[(\hat{E}^i_{jh})^2 - 2\hat{E}^i_{jh}\bar{\Lambda}^i_{j,:}\bar{H}^i_{:,h} + \mathrm{Tr}\left((\Phi^i_{\Lambda_j} + (\bar{\Lambda}^i_{j,:})^{\top}\bar{\Lambda}^i_{j,:})(\Phi^i_{H_h} + \bar{H}^i_{:,h}(\bar{H}^i_{:,h})^{\top})\right)\right], \tag{32}$$

$$C^i_{\Lambda_l} = \frac{1}{n}\sum_{j=1}^{n}\left((\Phi^i_{\Lambda_j})_{l,l} + (\bar{\Lambda}^i_{j,l})^2\right), \qquad C^i_{H_l} = \frac{1}{r}\sum_{g=1}^{r}\left((\Phi^i_{H_g})_{ll} + (\bar{H}^i_{gl})^2\right). \tag{33}$$

Detection and Analysis on Object Role Classes

To make the final decision on the role label of each node, we estimate $p(F_j \mid V_i)$, the conditional probability that object $V_i$ belongs to role class $F_j$:

$$p(F_j \mid V_i) = E_{p(\Lambda), p(W)}\left[\Lambda_{i,:} W_{:,j}\right] = \bar{\Lambda}_{i,:}\bar{W}_{:,j}. \tag{34}$$

Eq.34 gives the probability of each object belonging to each object role class at each time point. For the detection of object roles, each object is assigned to the class with the highest probability. For the analysis of object roles, the trend of $p(F_j \mid V_i)$ over different time points reveals the dynamic patterns of object roles. We explain how to use $p(F_j \mid V_i)$ in the analysis of dynamic object roles in Section III-A3.

III. EXPERIMENTS AND ANALYSIS

In this part, we experimentally evaluate the proposed LAP algorithm on two real data sets: SocialEvolution [12] and Robot.Net. The SocialEvolution data set is available upon request¹, and the Robot.Net data set is publicly available². The experiments include three parts. On each data set, we first evaluate the performance of LAP on the task of detecting object role classes at each time point. We then perform case studies to evaluate the correctness of the extracted dynamic patterns of object roles. Finally, we test the performance of LAP on the task of object role prediction. Throughout the experiments, we compare LAP with baselines to quantitatively demonstrate its advantage.

A. Experiments on SocialEvolution Data Set

1) Dataset Description: We first evaluate LAP on the SocialEvolution data set to demonstrate its ability to analyze the evolving patterns of people's political interests in dynamic networks. This data set was collected from October 2008 to May 2009. It contains information on locations, phone calls, music sharing logs, surveys on relationships, political interests, etc. In the experiments, we build the dynamic networks of the SocialEvolution data set as follows. Initially, the weights of links between the objects (people in the data set) are set to 0, which stands for no link. If there is a phone call, message or music share between two users at a time point, we increase the weight of the undirected link between the two users by 1 for that time point. The resulting continuous dynamic network is then divided into 5 snapshots, denoted $E^1$ to $E^5$.
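The network construction in the Dataset Description (1) can be sketched as below. The text does not state how the continuous period is divided into the 5 snapshots. Equal-width time windows are therefore an assumption here, as are integer user ids and the `(user_a, user_b, timestamp)` event format. `build_snapshots` is a hypothetical name.

```python
import numpy as np


def build_snapshots(events, n_users, t_start, t_end, n_snapshots=5):
    """Weighted undirected link matrices E^1..E^k from (user_a, user_b, timestamp) events."""
    edges = np.linspace(t_start, t_end, n_snapshots + 1)   # equal-width windows (assumption)
    snapshots = [np.zeros((n_users, n_users)) for _ in range(n_snapshots)]
    for a, b, ts in events:
        if a == b or not (t_start <= ts <= t_end):
            continue  # skip self-interactions and events outside the observation period
        # window index; the last window is closed on the right so that ts == t_end is kept
        k = min(int(np.searchsorted(edges, ts, side="right")) - 1, n_snapshots - 1)
        snapshots[k][a, b] += 1   # each call, message or music share adds 1
        snapshots[k][b, a] += 1   # undirected link
    return snapshots


# Usage: two windows over [0, 1]
events = [(0, 1, 0.0), (1, 2, 0.5), (0, 1, 0.9), (2, 2, 0.3)]
snaps = build_snapshots(events, n_users=3, t_start=0.0, t_end=1.0, n_snapshots=2)
```

Here the event at time 0.5 falls into the second window because windows are half-open on the right, and the self-interaction `(2, 2, 0.3)` is ignored.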

In this data set, surveys of some users’ political interests

at different time points have also been provided. According to

the surveys on whether people are interested in politics, we

divide the users into three classes: Indifferent, Moderate and

Enthusiastic. In the experiments, we suppose the surveys at the

first time point are known and use them in the training. The

surveys at the other time points are then used to numerically

evaluate the performance.

D. Prediction on Dynamic Networks

Based on the aforementioned object role detections and

analysis model, in this section, we develop the approach for

predicting object roles on dynamic networks.

2) Object Role Detection: In this part, we investigate the

performance of the proposed LAP algorithm on the task of

identifying object roles at each time point. For comparisons,

we also consider four baselines in this experiment as follows.

Suppose there are t + 1 periodically sampled snapshots

of a dynamic network, and among them, the first t snapshot

networks are observable. We predict the object roles at t +

1 based on the observable data by maximizing the posterior

probability below:

p(Λt+1 |E 1:t ) =

p(Λt+1 |Λt )p(Λt |E 1:t ).

(35)

1 http://realitycommons.media.mit.edu/socialevolution.html

2 http://www.trustlet.org/datasets/robots_net/

[Fig. 2, panels (a) to (e): ROC curves (True Positive Rate vs. False Positive Rate) at E1 to E5 for PageRank, Centrality, Coordinates, Prior and LAP]

Fig. 2: ROC curves for detecting Indifferent objects on SocialEvolution

TABLE II: Object Role Detection on SocialEvolution

As discussed in Section I, subjectively selected structural properties are usually insufficient to cover the characteristics of the target object roles. To demonstrate this, we test two popular topological properties, PageRank [2] and Katz Centrality [13], on the detection of object roles at each time point.
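For reference, the sketch below computes textbook PageRank (by power iteration) and Katz centrality (by solving a linear system) on a small dense adjacency matrix. The damping factor 0.85 and the Katz parameters `alpha=0.1`, `beta=1.0` are common defaults chosen here as assumptions; the excerpt does not state the settings used in the experiments.

```python
import numpy as np


def pagerank(adj, damping=0.85, tol=1e-10, max_iter=1000):
    """PageRank by power iteration; adj[i, j] = 1 means a link i -> j."""
    n = adj.shape[0]
    out_deg = adj.sum(axis=1, keepdims=True)
    # Row-stochastic transition matrix; dangling nodes jump uniformly.
    trans = np.where(out_deg > 0, adj / np.maximum(out_deg, 1), 1.0 / n)
    r = np.full(n, 1.0 / n)
    for _ in range(max_iter):
        r_new = (1 - damping) / n + damping * (r @ trans)
        if np.abs(r_new - r).sum() < tol:
            return r_new
        r = r_new
    return r


def katz_centrality(adj, alpha=0.1, beta=1.0):
    """Katz centrality: x = alpha * A^T x + beta, i.e. (I - alpha A^T) x = beta.

    Well defined only if alpha < 1 / (largest eigenvalue of adj)."""
    n = adj.shape[0]
    return np.linalg.solve(np.eye(n) - alpha * adj.T, np.full(n, beta))


# Undirected star graph: node 0 is the hub.
star = np.array([[0, 1, 1, 1],
                 [1, 0, 0, 0],
                 [1, 0, 0, 0],
                 [1, 0, 0, 0]], dtype=float)
pr = pagerank(star)
kz = katz_centrality(star)
print(np.round(pr, 3), np.round(kz, 3))
```

Both scores rank the hub first, which illustrates the point in the text: these measures encode one fixed notion of importance, whatever the target roles happen to be.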

As explained in Section II-B, we assume that object roles describe how, and to what extent, objects impact the others. In contrast, existing methods such as [4]–[6] view object roles as the "coordinates" of the objects and assume that objects with the same role are highly connected. To measure the impact of these two different assumptions, we build a baseline named Coordinates, which is identical to the proposed LAP model except that it defines p(E | Λ, H, σ²) as

$$p(E \mid \Lambda, H, \sigma^2) = \prod_{i=1}^{n}\prod_{j=1}^{n} \mathcal{N}\big(K_{ij} \mid (\Lambda H \Lambda^{\top})_{ij}, \sigma^2\big).$$

With this modification, highly connected objects tend to obtain close latent feature representations.
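A minimal sketch of the Coordinates likelihood, written as a log-likelihood so that candidate representations can be compared. `K` stands for the n × n matrix defined earlier in the paper (its construction is outside this excerpt), and the toy values are hypothetical. The example shows why the modification pulls highly connected objects together: when K has two tightly connected groups, giving members of the same group the same latent vector (with H = I) scores higher than mixing them.

```python
import numpy as np


def coordinates_log_lik(K, Lam, H, sigma2):
    """sum_{i,j} log N(K_ij | (Lam @ H @ Lam.T)_ij, sigma2)."""
    mean = Lam @ H @ Lam.T          # predicted n x n matrix
    resid = K - mean
    return (-0.5 * K.size * np.log(2 * np.pi * sigma2)
            - 0.5 * np.sum(resid ** 2) / sigma2)


# Two tightly connected groups: {0, 1} and {2, 3}.
K = np.array([[1, 1, 0, 0],
              [1, 1, 0, 0],
              [0, 0, 1, 1],
              [0, 0, 1, 1]], dtype=float)
H = np.eye(2)
lam_grouped = np.array([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=float)
lam_mixed = np.array([[1, 0], [0, 1], [1, 0], [0, 1]], dtype=float)
ll_grouped = coordinates_log_lik(K, lam_grouped, H, sigma2=0.1)
ll_mixed = coordinates_log_lik(K, lam_mixed, H, sigma2=0.1)
print(ll_grouped, ll_mixed)  # the grouped representation scores higher
```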

In the proposed LAP model, we predict the object roles at time t based on the object roles from time 1 to time t − 1. The key idea of how the model uses the evolving information is that the object roles extracted at time t should be close to this prediction. In contrast, most existing methods, such as [6], assume that the object roles extracted at time t are close to the object roles at time t − 1. To measure the impact of these two different assumptions, we build a baseline named Prior, which is identical to the proposed LAP model except that it defines p(D^i | D^{i−1}) as

$$p(D^{i} \mid D^{i-1}) = p(\Lambda^{i} \mid \Lambda^{i-1}, C_{\Lambda^{i-1}})\, p(H^{i} \mid H^{i-1}, C_{H^{i-1}}).$$

With this modification, object roles at time t tend to stay close to the object roles learned at time t − 1.

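| Role | Method | E1 | E2 | E3 | E4 | E5 |
|---|---|---|---|---|---|---|
| Indifferent | PageRank | 0.537 | 0.581 | 0.534 | 0.520 | 0.572 |
| Indifferent | Centrality | 0.534 | 0.631 | 0.653 | 0.630 | 0.631 |
| Indifferent | Coordinates | 0.816 | 0.736 | 0.561 | 0.654 | 0.649 |
| Indifferent | Prior | 1.000 | 0.890 | 0.774 | 0.721 | 0.795 |
| Indifferent | LAP | 1.000 | 0.890 | 0.863 | 0.827 | 0.882 |
| Moderate | PageRank | 0.586 | 0.503 | 0.606 | 0.552 | 0.549 |
| Moderate | Centrality | 0.513 | 0.675 | 0.706 | 0.646 | 0.643 |
| Moderate | Coordinates | 1.000 | 0.746 | 0.523 | 0.540 | 0.602 |
| Moderate | Prior | 1.000 | 0.811 | 0.766 | 0.675 | 0.741 |
| Moderate | LAP | 1.000 | 0.811 | 0.784 | 0.700 | 0.728 |
| Enthusiastic | PageRank | 0.622 | 0.724 | 0.661 | 0.600 | 0.502 |
| Enthusiastic | Centrality | 0.540 | 0.709 | 0.688 | 0.597 | 0.575 |
| Enthusiastic | Coordinates | 1.000 | 0.649 | 0.536 | 0.594 | 0.505 |
| Enthusiastic | Prior | 1.000 | 0.888 | 0.543 | 0.569 | 0.540 |
| Enthusiastic | LAP | 1.000 | 0.888 | 0.875 | 0.843 | 0.840 |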

performance is still good with the guidance of the object roles trained at E1. As time progresses, the guidance from the labeled information at E1 weakens, and the performance of Coordinates degrades markedly from E3 to E5. This degradation indicates that Coordinates cannot characterize the object roles well without the guidance of labeled information. The result suggests that labeled information is critical for extracting discriminative representations of object roles, and that viewing object roles as "coordinates" does not work in these cases.

For each investigated method, we test its performance on the known labeled data with varying parameter values to find the best parameter setting. For our model, the optimal number of latent features is 63.

An interesting finding in this experiment is that the fourth baseline, Prior, performs significantly worse than the proposed LAP on detecting Indifferent and Enthusiastic objects, while achieving close performance on Moderate objects. To find the reason for this result, we analyzed the data and observed that the probabilities of Indifferent, Moderate and Enthusiastic objects changing their level of interest in politics are on average 24.63%, 11.17% and 23.61%, respectively. That is, Moderate objects are more consistent in their political interests, while Indifferent and Enthusiastic objects are more likely to change. Since Prior assumes that object roles stay close to the previous object roles, it performs better on Moderate objects, which change less, and worse on Indifferent and Enthusiastic objects, which change more.
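Per-class change probabilities like those quoted above can be estimated from the survey labels in several ways, and the excerpt does not spell out the estimator. The sketch below assumes one plausible choice: pool all consecutive snapshot pairs and, for each class, report the fraction of objects in that class whose label differs at the next snapshot. The toy labels are hypothetical, not SocialEvolution data.

```python
import numpy as np


def change_rate_by_class(labels, n_classes):
    """labels[s, i] = class of object i at snapshot s (integers 0..n_classes-1).

    Returns rates[c] = fraction of transitions s -> s+1 that start in class c
    and end in a different class (NaN if class c never occurs before the
    last snapshot)."""
    labels = np.asarray(labels)
    prev, nxt = labels[:-1].ravel(), labels[1:].ravel()
    rates = np.full(n_classes, np.nan)
    for c in range(n_classes):
        from_c = prev == c
        if from_c.any():
            rates[c] = np.mean(nxt[from_c] != c)
    return rates


# Hypothetical labels: 3 snapshots x 4 objects
# (0 = Indifferent, 1 = Moderate, 2 = Enthusiastic).
toy = np.array([[0, 1, 2, 1],
                [0, 1, 1, 1],
                [1, 1, 2, 1]])
print(change_rate_by_class(toy, 3))
```

A class with a low rate (here class 1) is one where a "stay close to the previous roles" prior, as in Prior, is rarely wrong.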

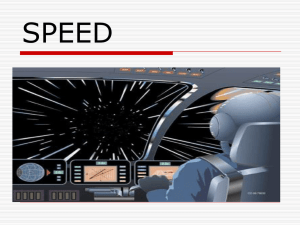

Since the numbers of objects in different classes are highly imbalanced, accuracy is not a meaningful measure of performance. Therefore, we compute the Receiver Operating Characteristic (ROC) curve and use the Area Under the Curve (AUC) to summarize the quality of the ROC curve.
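The point about imbalance can be made concrete with a small sketch. It computes a one-vs-rest AUC through its rank interpretation (the probability that a randomly chosen positive object is scored above a randomly chosen negative one, with ties counting one half), which equals the area under the empirical ROC curve. The class sizes and scores are hypothetical.

```python
import numpy as np


def auc_one_vs_rest(scores, is_positive):
    """AUC as P(score_pos > score_neg) + 0.5 * P(tie) over all pos/neg pairs."""
    scores = np.asarray(scores, dtype=float)
    is_positive = np.asarray(is_positive, dtype=bool)
    pos, neg = scores[is_positive], scores[~is_positive]
    diff = pos[:, None] - neg[None, :]      # all positive/negative pairs
    return (np.sum(diff > 0) + 0.5 * np.sum(diff == 0)) / (pos.size * neg.size)


# 5 objects in the role of interest, 95 in other roles.
y = np.array([True] * 5 + [False] * 95)
constant = np.zeros(100)                 # always says "not this role"
accuracy = np.mean((constant > 0.5) == y)
print(accuracy)                          # high, despite learning nothing
print(auc_one_vs_rest(constant, y))      # 0.5: no better than random
```

The pairwise form is quadratic in the number of objects, which is fine at this scale; a sort-based rank computation gives the same value faster on large graphs.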

Table II summarizes the performance of all the investigated

methods on the SocialEvolution data set.

We first notice that PageRank and Centrality generally perform poorly in detecting all three object roles across time points, and in some cases their AUC scores are close to that of random guessing (0.5). This poor performance indicates that PageRank and Centrality do not capture enough information from the network structure to characterize the object roles.

Compared with all these baseline methods, LAP achieves the best performance in almost all the experiments. Relative to the best baseline, Prior, LAP improves the AUC scores by up to 61.14% and by 13.75% on average. To better illustrate the advantage of the proposed LAP model, Fig. 2 shows the ROC curves for detecting Indifferent objects (due to space limits, we show only this case).
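The two summary figures can be checked against Table II. Assuming the improvement is computed per cell as LAP / Prior − 1 and averaged over all 15 role-and-snapshot cells (the excerpt does not state the formula, but this choice reproduces both reported values), the arithmetic is:

```python
import numpy as np

# Prior and LAP AUC scores from Table II (E1..E5 for each role).
prior = np.array([[1.000, 0.890, 0.774, 0.721, 0.795],   # Indifferent
                  [1.000, 0.811, 0.766, 0.675, 0.741],   # Moderate
                  [1.000, 0.888, 0.543, 0.569, 0.540]])  # Enthusiastic
lap = np.array([[1.000, 0.890, 0.863, 0.827, 0.882],
                [1.000, 0.811, 0.784, 0.700, 0.728],
                [1.000, 0.888, 0.875, 0.843, 0.840]])

gain = lap / prior - 1                   # relative improvement per cell
print(f"max  {100 * gain.max():.2f}%")   # largest per-cell gain
print(f"mean {100 * gain.mean():.2f}%")  # average over all 15 cells
```

Note that the E1 and E2 cells, where the two methods tie, are included in the average; excluding them would give a larger mean.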

For the third baseline, Coordinates, the performance varies significantly across cases. On E1, since labeled information for each object role is provided, Coordinates reaches very high AUC scores. On E2, which is close to E1, the

[Figs. 3 and 4, panels (a) to (e): compass plots at E1 to E5, with directions labeled Indifferent, Moderate and Enthusiastic]

Fig. 3: Role Dynamics of Object 42 in SocialEvolution

Fig. 4: Role Dynamics of Object 70 in SocialEvolution

TABLE III: Object Role Prediction on SocialEvolution

| Role | Method | E2 | E3 | E4 | E5 |
|---|---|---|---|---|---|
| Indifferent | DyPageRank | 0.518 | 0.534 | 0.520 | 0.572 |
| Indifferent | DyCentrality | 0.632 | 0.653 | 0.630 | 0.631 |
| Indifferent | Coordinates | 0.718 | 0.696 | 0.642 | 0.649 |
| Indifferent | Prior | 0.888 | 0.817 | 0.739 | 0.806 |
| Indifferent | LAP | 0.888 | 0.826 | 0.818 | 0.908 |
| Moderate | DyPageRank | 0.533 | 0.606 | 0.552 | 0.549 |
| Moderate | DyCentrality | 0.676 | 0.706 | 0.646 | 0.643 |
| Moderate | Coordinates | 0.777 | 0.774 | 0.542 | 0.602 |
| Moderate | Prior | 0.804 | 0.769 | 0.675 | 0.747 |
| Moderate | LAP | 0.804 | 0.776 | 0.642 | 0.688 |
| Enthusiastic | DyPageRank | 0.558 | 0.661 | 0.600 | 0.502 |
| Enthusiastic | DyCentrality | 0.709 | 0.688 | 0.597 | 0.575 |
| Enthusiastic | Coordinates | 0.749 | 0.738 | 0.566 | 0.505 |
| Enthusiastic | Prior | 0.867 | 0.749 | 0.586 | 0.561 |
| Enthusiastic | LAP | 0.867 | 0.765 | 0.835 | 0.803 |

3) Object Role Analysis: In this section, we present case studies to demonstrate how the proposed model can be used to analyze the changing patterns of object roles. Without loss of generality, we use 0, 1 and 2 to represent the classes Indifferent, Moderate and Enthusiastic, respectively. From the class probabilities learned by the proposed LAP model, we calculate the expected class number of each object at each time point. We treat the class numbers as angles, scale them to the range [0, π], and visualize the dynamic patterns as compasses in Fig. 3 and Fig. 4.
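The mapping from learned class probabilities to compass angles can be sketched as follows. The linear scaling by π/2 per class step is an assumption: it sends the largest class index to π for the three SocialEvolution roles and to 3π/2 for the four Robot.Net roles, matching the ranges stated in the text, but the paper's plotting procedure is not shown.

```python
import numpy as np


def role_angle(class_probs):
    """Expected class index E[c] = sum_c c * p_c, scaled to an angle.

    class_probs: (n_objects, n_classes) array whose rows sum to 1.
    Each class step is pi/2, so 3 classes span [0, pi] and 4 span [0, 3*pi/2].
    """
    probs = np.asarray(class_probs, dtype=float)
    expected = probs @ np.arange(probs.shape[1])
    return expected * np.pi / 2


# Hypothetical probabilities over (Indifferent, Moderate, Enthusiastic).
p = np.array([[1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0],
              [0.5, 0.0, 0.5]])
print(role_angle(p) / np.pi)   # angles as multiples of pi
```

Using the expectation rather than the most probable class lets an uncertain object (the third row) sit between two roles on the compass instead of jumping to one of them.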

performance is evaluated in AUC by comparing the predictions with the ground truth. We summarize the results in Table III.

We first notice that the prediction performance of DyPageRank and DyCentrality is very close to the detection performance of PageRank and Centrality in Table II. This indicates that DyPageRank and DyCentrality are effective at learning the evolving patterns of the PageRank and Centrality scores. Nevertheless, these two baselines perform the worst among all the investigated methods on object role prediction. This poor performance confirms that PageRank and Centrality scores cannot capture the characteristics of the object roles considered in this experiment.

In Fig. 3, we show the changing pattern of object 42. According to the surveys on political interests, this person appeared to be highly interested in politics during the first three time points, which fall around the 2008 U.S. presidential election. During the last two time points, this person was less interested in politics and had doubts about the president and the congress. In Fig. 3, the learned object roles of this person closely reflect the dynamics of his/her interest in politics.

As for Coordinates, it performs better in prediction than in detection. Note that for object role detection, Coordinates integrates two kinds of information: the prediction based on previously learned object roles and the extraction based on the current network. In contrast, for prediction it uses only the previously learned object roles. Therefore, the extraction part, in which object roles are viewed as object "coordinates", reduces the overall detection performance. This experiment again shows that viewing object roles as "coordinates" is not effective for learning, analyzing and predicting object roles.

In Fig. 4, we show the changing pattern of object 70. Similar to object 42, this person appeared to be more interested in politics during the first three time points than in the remaining snapshots. This person described himself/herself as slightly interested in politics around the presidential election and frequently switched his/her preferred party between Independent and Democrat. During the last two time points, this person showed no interest in politics at all and expressed no opinion about the president, the congress or economic policies. In Fig. 4, the learned object roles closely reflect the dynamics of his/her interest in politics.

Consistent with Table II, in Table III Prior performs similarly to the proposed LAP on predicting Moderate objects but much worse than LAP on the other object roles. This supports the conclusion that Prior performs better on Moderate objects, which change less, and worse on Indifferent and Enthusiastic objects, which change more.

Due to space limits, we show only these two cases in this section. These results suggest that the proposed LAP model is effective in analyzing and visualizing the dynamics of object roles.

4) Object Role Prediction: In this section, we investigate the performance of LAP on the task of predicting object roles. For comparison, we extend the baselines in Section III-A2 to this task. Specifically, we feed the PageRank and Centrality scores into an autoregressive model for prediction, obtaining two baselines, DyPageRank and DyCentrality, respectively. Since the other two baselines, Coordinates and Prior, are dynamic models, they can be used directly for object role prediction.
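The excerpt does not give the order or form of the autoregressive model behind DyPageRank and DyCentrality. As a hedged sketch, the code below assumes an AR(1) model with an intercept whose two coefficients are shared by all objects and fit by least squares on consecutive snapshots; the forecast scores can then be ranked and evaluated with AUC like any other detector output.

```python
import numpy as np


def ar1_forecast(history):
    """One-step forecast of per-object scores (e.g. PageRank) with pooled AR(1).

    history: (t, n) array with t >= 2; history[s, i] = score of object i at s.
    Fits x[s+1, i] = a * x[s, i] + b over all objects and consecutive snapshots.
    """
    history = np.asarray(history, dtype=float)
    x_prev, x_next = history[:-1].ravel(), history[1:].ravel()
    design = np.column_stack([x_prev, np.ones_like(x_prev)])
    (a, b), *_ = np.linalg.lstsq(design, x_next, rcond=None)
    return a * history[-1] + b


# Toy scores that follow x[s+1] = 0.5 * x[s] + 0.1 exactly.
hist = [np.array([0.1, 0.2, 0.3, 0.4])]
for _ in range(2):
    hist.append(0.5 * hist[-1] + 0.1)
hist = np.vstack(hist)
print(ar1_forecast(hist))
```

Pooling across objects is chosen here because each object has only a handful of snapshots (five in SocialEvolution), too few to fit a separate model per object.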

Compared to all the baselines, the proposed LAP model achieves significantly better performance than every baseline in most cases. The experiments confirm the effectiveness of LAP in predicting object roles.

B. Experiments on Robot.Net Data Set

1) Dataset Description: The Robot.Net data set was

crawled daily from the website Robot.Net3 since 2008. This

In this experiment, we predict the object roles at the

second to the fifth time points using the previous data. The

3 http://robots.net/


[Fig. 5, panels (a) to (f): ROC curves (True Positive Rate vs. False Positive Rate) at R1 to R6 for PageRank, Centrality, Coordinates, Prior and LAP]

Fig. 5: ROC curves for detecting Observer on Robot.Net

TABLE IV: Object Role Detection on Robot.Net

| Role | Method | R1 | R2 | R3 | R4 | R5 | R6 |
|---|---|---|---|---|---|---|---|
| Observer | PageRank | 0.511 | 0.564 | 0.624 | 0.540 | 0.575 | 0.521 |
| Observer | Centrality | 0.549 | 0.537 | 0.530 | 0.535 | 0.537 | 0.541 |
| Observer | Coordinates | 0.899 | 0.793 | 0.502 | 0.517 | 0.531 | 0.511 |
| Observer | Prior | 1.000 | 0.998 | 0.853 | 0.832 | 0.806 | 0.810 |
| Observer | LAP | 1.000 | 0.998 | 0.998 | 0.964 | 0.960 | 0.958 |
| Apprentice | PageRank | 0.645 | 0.556 | 0.619 | 0.659 | 0.585 | 0.580 |
| Apprentice | Centrality | 0.677 | 0.662 | 0.657 | 0.658 | 0.654 | 0.654 |
| Apprentice | Coordinates | 0.778 | 0.775 | 0.705 | 0.684 | 0.671 | 0.670 |
| Apprentice | Prior | 1.000 | 0.988 | 0.891 | 0.878 | 0.792 | 0.783 |
| Apprentice | LAP | 1.000 | 0.988 | 0.988 | 0.844 | 0.841 | 0.844 |
| Journeyer | PageRank | 0.718 | 0.564 | 0.654 | 0.630 | 0.623 | 0.585 |
| Journeyer | Centrality | 0.511 | 0.519 | 0.511 | 0.518 | 0.527 | 0.528 |
| Journeyer | Coordinates | 0.911 | 0.809 | 0.504 | 0.522 | 0.524 | 0.545 |
| Journeyer | Prior | 1.000 | 0.982 | 0.867 | 0.851 | 0.720 | 0.706 |
| Journeyer | LAP | 1.000 | 0.982 | 0.983 | 0.927 | 0.921 | 0.983 |
| Master | PageRank | 0.704 | 0.626 | 0.623 | 0.590 | 0.588 | 0.627 |
| Master | Centrality | 0.680 | 0.682 | 0.678 | 0.668 | 0.665 | 0.665 |
| Master | Coordinates | 0.646 | 0.685 | 0.726 | 0.709 | 0.698 | 0.745 |
| Master | Prior | 1.000 | 0.990 | 0.794 | 0.769 | 0.785 | 0.784 |
| Master | LAP | 1.000 | 0.990 | 0.973 | 0.969 | 0.969 | 0.969 |

[Fig. 6, panels (a) to (f): compass plots at R1 to R6, with directions labeled Observer, Apprentice, Journeyer and Master]

Fig. 6: Role Dynamics of Object 99 in Robot.Net

TABLE V: Object Role Prediction on Robot.Net

data set contains the interactions among the users of the website. For the experiments, we choose the first sampled snapshot of each year from 2007 to 2012 and denote the six resulting snapshots as R1 to R6. On this website, each user is labeled by the others as Observer, Apprentice, Journeyer or Master according to his/her importance on the website. Based on these labels, we divide the users into four object role classes: Observer, Apprentice, Journeyer and Master. In the experiments, we use the labeled information at R1 for training, and test the performance of the proposed LAP on detecting, analyzing and predicting these object roles. By testing the performance on R1 with varying parameter values, we find that the optimal number of latent features is 70.

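| Role | Method | R2 | R3 | R4 | R5 | R6 |
|---|---|---|---|---|---|---|
| Observer | DyPageRank | 0.524 | 0.517 | 0.518 | 0.517 | 0.518 |
| Observer | DyCentrality | 0.556 | 0.556 | 0.555 | 0.555 | 0.555 |
| Observer | Coordinates | 0.896 | 0.793 | 0.502 | 0.517 | 0.531 |
| Observer | Prior | 0.997 | 0.998 | 0.852 | 0.832 | 0.806 |
| Observer | LAP | 0.997 | 0.998 | 0.997 | 0.964 | 0.960 |
| Apprentice | DyPageRank | 0.643 | 0.644 | 0.645 | 0.645 | 0.645 |
| Apprentice | DyCentrality | 0.669 | 0.669 | 0.668 | 0.666 | 0.668 |
| Apprentice | Coordinates | 0.767 | 0.773 | 0.704 | 0.682 | 0.672 |
| Apprentice | Prior | 0.988 | 0.988 | 0.890 | 0.878 | 0.791 |
| Apprentice | LAP | 0.988 | 0.986 | 0.986 | 0.844 | 0.841 |
| Journeyer | DyPageRank | 0.681 | 0.678 | 0.678 | 0.677 | 0.677 |
| Journeyer | DyCentrality | 0.534 | 0.534 | 0.534 | 0.535 | 0.535 |
| Journeyer | Coordinates | 0.907 | 0.808 | 0.504 | 0.520 | 0.524 |
| Journeyer | Prior | 0.983 | 0.983 | 0.867 | 0.853 | 0.712 |
| Journeyer | LAP | 0.983 | 0.981 | 0.983 | 0.927 | 0.921 |
| Master | DyPageRank | 0.704 | 0.691 | 0.692 | 0.694 | 0.694 |
| Master | DyCentrality | 0.670 | 0.671 | 0.671 | 0.672 | 0.672 |
| Master | Coordinates | 0.857 | 0.791 | 0.570 | 0.573 | 0.575 |
| Master | Prior | 0.990 | 0.989 | 0.870 | 0.854 | 0.772 |
| Master | LAP | 0.990 | 0.989 | 0.989 | 0.912 | 0.907 |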

SocialEvolution in Table II. PageRank and Centrality cannot capture the characteristics of the four object roles and thus perform the worst. Coordinates performs well close to R1 and much worse after several snapshots. Among all the baselines, Prior performs the best.

Compared to these baselines, the proposed LAP model performs the best in most cases. It outperforms the best baseline, Prior, by up to 39.24% and by 11.51% on average. To better illustrate the advantage of the proposed LAP model, Fig. 5 shows the ROC curves for detecting Observer objects (due to space limits, we show only this case).
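Under the same per-cell assumption used for SocialEvolution (relative improvement LAP / Prior − 1, averaged over all 4 roles × 6 snapshots of Table IV; the excerpt does not state the formula), both figures follow from the table. The largest gain occurs at R6, where LAP scores 0.983 against 0.706 for Prior:

$$\max_{\text{cells}}\left(\frac{\mathrm{AUC}_{\mathrm{LAP}}}{\mathrm{AUC}_{\mathrm{Prior}}}-1\right)=\frac{0.983}{0.706}-1\approx 0.3924,\qquad \frac{1}{24}\sum_{\text{cells}}\left(\frac{\mathrm{AUC}_{\mathrm{LAP}}}{\mathrm{AUC}_{\mathrm{Prior}}}-1\right)\approx 0.1151.$$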

3) Object Role Analysis: To evaluate the performance on analyzing the dynamics of object roles, we conduct a case study on object 99. Specifically, we use 0 to 3 to represent Observer, Apprentice, Journeyer and Master, respectively. The class number estimated by expectation is scaled to the range of

2) Object Role Detection: Table IV summarizes the performance of all the baselines as well as the proposed LAP model on Robot.Net.

Overall, the results show similar trends to the results of

[0, 3π/2]. We summarize the results for object 99 in Fig. 6.

According to the votes, in the first snapshot four users voted this object as Apprentice and two voted it as Journeyer. Starting from the second snapshot, more and more users voted object 99 as Master. As Fig. 6 shows, this trend in the votes is well reflected by the results of the proposed LAP model. The case study supports that LAP is effective in capturing the dynamics of object roles.

for the proposed model. In the experiments, we evaluated the proposed model on the tasks of learning the dynamics of people’s importance and political interests in two real-world data sets. Overall, the proposed LAP model significantly outperforms the baselines in learning and predicting seven types of object roles. Moreover, the dynamic patterns extracted by LAP closely reflect the actual changes in the object roles.

4) Object Role Prediction: In this part, we predict the object roles at the second to the sixth time points using the previous data, and summarize the results in Table V. The results show patterns similar to those in Table III and Table IV. Among the four baselines, DyPageRank and DyCentrality are still not effective in characterizing the four object classes; Coordinates suffers significantly from its assumption of viewing object roles as "coordinates"; and Prior performs the best.

VI.

The materials published in this paper are partially supported by the National Science Foundation under Grants No.

1218393, No. 1016929, and No. 0101244.

REFERENCES

[1] C. J. Kuhlman, V. S. A. Kumar, M. V. Marathe, S. S. Ravi, and D. J. Rosenkrantz, “Finding critical nodes for inhibiting diffusion of complex contagions in social networks,” Proc. of ECML’10, pp. 111–127, 2010.

[2] L. Page, S. Brin, R. Motwani, and T. Winograd, “The pagerank citation

ranking: Bringing order to the web,” World Wide Web Internet And Web

Information Systems, 1998.

[3] M. Handcock, G. Robins, T. Snijders, and J. Besag, “Assessing degeneracy in statistical models of social networks,” Journal of the American

Statistical Association, 2003.

[4] J. R. Foulds, C. DuBois, A. U. Asuncion, C. T. Butts, and P. Smyth,

“A dynamic relational infinite feature model for longitudinal social

networks,” Journal of Machine Learning Research - Proceedings Track,

pp. 287–295, 2011.

[5] C. Heaukulani and Z. Ghahramani, “Dynamic probabilistic models for

latent feature propagation in social networks,” Proc. of ICML’2013, pp.

275–283, 2013.

[6] Y.-R. Lin, Y. Chi, S. Zhu, H. Sundaram, and B. L. Tseng, “Analyzing

communities and their evolutions in dynamic social networks,” ACM

Trans. Knowl. Discov. Data, pp. 8:1–8:31, 2009.

[7] “Economy of ancient Tamil country,” http://en.wikipedia.org/wiki/Economy_of_ancient_Tamil_country, accessed: 2013-06-18.

[8] Z. Lu, B. Savas, W. Tang, and I. S. Dhillon, “Supervised link prediction

using multiple sources,” Proc. of ICDM’10, pp. 923–928, 2010.

[9] S. Nakajima, M. Sugiyama, and R. Tomioka, “Global solution of variational Bayesian matrix factorization under matrix-wise independence,” Proc. of NIPS’10, 2010.

[10] C. Fox and S. Roberts, “A tutorial on variational Bayesian inference,”

Artificial Intelligence Review, pp. 85–95, 2012.

[11] W. Penny and S. Roberts, “Bayesian multivariate autoregressive models

with structured priors,” Proc. of Vision Image and Signal Processing,

pp. 33–41, 2002.

[12] A. Madan, M. Cebrian, S. Moturu, K. Farrahi, and A. Pentland,

“Sensing the ’health state’ of a community,” Pervasive Computing, pp.

36–45, 2012.

[13] L. Katz, “A new status index derived from sociometric analysis,”

Psychometrika, 1953.

[14] D. Chakrabarti, S. Papadimitriou, D. S. Modha, and C. Faloutsos, “Fully

automatic cross-associations,” in Proc. of KDD’04, 2004, pp. 79–88.

[15] E. M. Airoldi, D. M. Blei, S. E. Fienberg, and E. P. Xing, “Mixed

membership stochastic blockmodels,” J. Mach. Learn. Res., pp. 1981–

2014, 2008.

[16] C. Kemp, J. B. Tenenbaum, T. L. Griffiths, T. Yamada, and N. Ueda,

“Learning systems of concepts with an infinite relational model,” Proc.

of AAAI’06, pp. 381–388, 2006.

[17] P. Koutsourelakis and T. Eliassi-Rad, “Finding mixed-memberships in

social networks,” Proc. of AAAI Spring Symposium’08, 2008.

[18] K. Henderson, B. Gallagher, T. Eliassi-Rad, and H. Tong, “RolX: Structural role extraction & mining in large graphs,” Proc. of KDD’12, 2012.

[19] R. Rossi and B. Gallagher, “Role-dynamics: Fast mining of large dynamic networks,” Proc. of WWW’12, 2012.

The proposed LAP model significantly outperforms all

the baselines in most cases over different object roles. LAP

improves over the best baseline Prior by up to 22.69% and on

average 5.86%.

IV.

RELATED WORK

As reviewed in Section I, the proposed model differs significantly from existing studies in dynamic network mining. Several other approaches are also related to the task in this paper, and we discuss them explicitly in this section.

As for object role mining in graphs, existing methods [14]–[17] usually assume that two objects have the same role if they have the same relationships to all other objects. This assumption heavily restricts the applicability of these methods. For instance, suppose there are two spammers in an email network who target users in different areas. In this case, the two spammers have the same role but completely different connections to other objects in the email network, which contradicts the assumption used by these methods.

Besides PageRank [2] and Centrality [13], several other existing methods such as [18], [19] also use topological statistics to characterize object behaviors. The major drawback of these methods is that object roles are estimated through subjectively selected topological features, so these methods may not be discriminative for many types of object roles. Moreover, these methods do not incorporate the dynamic information at each time point into the estimation of object roles, which distinguishes them from the proposed method.

V.

ACKNOWLEDGMENTS

CONCLUSIONS

In this paper, we have introduced a probabilistic model for

learning, analyzing and predicting object roles in dynamic

networks. The proposed model effectively integrates structural

information, dynamic information and supervised information

for extracting the latent feature representation of object roles.

The extracted object role representation is then used to identify

the role of each node, track the changes of object roles over

time, and predict the object roles in dynamic networks. We

have also provided the detailed variational Bayesian inference